Abstract

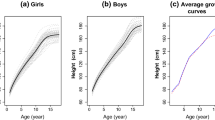

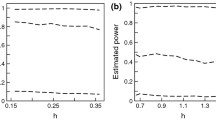

This paper investigates hypothesis testing for the parametric component in partial functional linear regression. We propose a test procedure based on the residual sums of squares under the null and alternative hypotheses, and establish the asymptotic properties of the resulting test. A simulation study shows that the proposed test has good size and power in finite samples. Finally, we illustrate the method by fitting a partial functional linear regression model to the Berkeley growth data and testing the effect of gender on children's height.

References

Aneiros-Pérez G, Vieu P (2006) Semi-functional partial linear regression. Stat Probab Lett 76(11):1102–1110

Bosq D (2000) Linear processes in function spaces: theory and applications. Springer, New York

Cai T, Hall P (2006) Prediction in functional linear regression. Ann Stat 34(5):2159–2179

Cai T, Yuan M (2012) Minimax and adaptive prediction for functional linear regression. J Am Stat Assoc 107(499):1201–1216

Cardot H, Ferraty F, Sarda P (1999) Functional linear model. Stat Probab Lett 45(1):11–22

Cardot H, Ferraty F, Mas A, Sarda P (2003) Testing hypotheses in the functional linear model. Scand J Stat 30(1):241–255

Crambes C, Kneip A, Sarda P (2009) Smoothing splines estimators for functional linear regression. Ann Stat 37(1):35–72

Delsol L, Ferraty F, Vieu P (2011) Structural test in regression on functional variables. J Multivar Anal 102(3):422–447

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer, New York

Hall P, Horowitz JL (2007) Methodology and convergence rates for functional linear regression. Ann Stat 35(1):70–91

Horváth L, Kokoszka P (2012) Inference for functional data with applications. Springer, New York

Horváth L, Kokoszka P, Reimherr M (2009) Two sample inference in functional linear models. Can J Stat 37(4):571–591

Ignaccolo R, Ghigo S, Giovenali E (2008) Analysis of air quality monitoring networks by functional clustering. Environmetrics 19(7):672–686

Kokoszka P, Maslova I, Sojka J, Zhu L (2008) Testing for lack of dependence in the functional linear model. Can J Stat 36(2):207–222

Kokoszka P, Miao H, Zhang X (2014) Functional dynamic factor model for intraday price curves. J Financ Econom nbu004:1–22

Kong D, Staicu A, Maity A (2013) Classical testing in functional linear models. North Carol State Univ Dep Stat Tech Rep 2647:1–23

Lu Y, Du J, Sun Z (2014) Functional partially linear quantile regression model. Metrika 77:317–332

Mas A (2007) Testing for the mean of random curves: a penalization approach. Stat Inference Stoch Process 10(2):147–163

Ramsay JO, Dalzell CJ (1991) Some tools for functional data analysis (with discussion). J R Stat Soc: Ser B 53:539–572

Ramsay JO, Silverman BW (2005) Functional data analysis, 2nd edn. Springer, New York

Reimherr M, Nicolae D (2014) A functional data analysis approach for genetic association studies. Ann Appl Stat 8(1):406–429

Shin H (2009) Partial functional linear regression. J Stat Plan Inference 139(10):3405–3418

Tuddenham R, Snyder M (1954) Physical growth of California boys and girls from birth to eighteen years. Calif Publ Child Dev 1:183–364

Xu H, Shen Q, Yang X, Shoptaw S (2011) A quasi F-test for functional linear models with functional covariates and its application to longitudinal data. Stat Med 30(23):2842–2853

Yao F, Müller HG, Wang JL (2005) Functional linear regression analysis for longitudinal data. Ann Stat 33(6):2873–2903

Acknowledgments

The authors thank the anonymous referees for their valuable comments and suggestions, which substantially improved an earlier version of this paper. Yu and Zhang’s work is partly supported by the National Natural Science Foundation of China (No. 11271039) and the Education Ministry Funds for Doctor Supervisors. Du’s research is supported by the National Natural Science Foundation of China (No. 11501018) and the Program for Rixin Talents in Beijing University of Technology (No. 006000514116003).

Appendix

In order to provide the proofs of the theorems, we first define the notation and give preliminary results.

Write \({\hat{V}}_k(g)=\sum _{j=1}^m\frac{\langle {\hat{C}}_{z_kX},{\hat{v}}_j\rangle \langle {\hat{v}}_j,g\rangle }{{\hat{\lambda }}_j}\), \(V_k(g)=\sum _{j=1}^{\infty }\frac{\langle C_{z_kX},v_j\rangle \langle v_j,g\rangle }{\lambda _j}\) for \(g\in L^2[0,1]\), \(\hat{{\varvec{B}}}={\hat{C}}_{{\varvec{z}}}-\{{\hat{V}}_k({\hat{C}}_{z_{l}X})\}_{k,l=1,\ldots ,p}\). Then it is easy to show that \({\varvec{B}}\) defined in Assumption 6 can be expressed as \({\varvec{B}}=C_{{\varvec{z}}}-\{{V_k(C_{z_{l}X})}\}_{k,l=1,\ldots ,p}\), and that \(\hat{{\varvec{B}}}=\frac{1}{n}{\varvec{Z}}^T({\varvec{I}}-{\varvec{S}}_m){\varvec{Z}}\) if \({\hat{\lambda }}_1>\cdots>{\hat{\lambda }}_n>0\) holds. Furthermore, we have Lemma 1.

Lemma 1

Suppose Assumptions 1–6 hold. Then, one has

Proof

This is a straightforward corollary of Theorem 3.1 in Shin (2009).

Lemma 2

If \(H_0\) and Assumptions 1–3 hold, then

Proof

Let the vector \({\varvec{U}}_{mi}\) denote the ith row of the matrix \({\varvec{U}}_m\). Then

Using the law of large numbers, we get

By the Cauchy-Schwarz inequality and Theorem 1 in Hall and Horowitz (2007), we therefore have

Similarly, it holds that

This completes the proof of Lemma 2. \(\square \)

Proof of Theorem 1

Firstly, according to \(\hat{\varvec{\beta }}_1=({\varvec{Z}}^T({\varvec{I}}-{\varvec{S}}_m){\varvec{Z}})^{-1}{\varvec{Z}}^T({\varvec{I}}-{\varvec{S}}_m){\varvec{Y}}\), one has

Furthermore, it is easy to show that

Then

It follows from Theorem 3.1 in Shin (2009) that

Combining this with Lemma 1 and Lemma 2, we can obtain Theorem 1. \(\square \)
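
The estimator formula used at the start of the proof of Theorem 1 can be illustrated numerically. The following is a minimal Python sketch, not the authors' implementation. It assumes that \({\varvec{S}}_m\) is the orthogonal projector onto the column space of the \(n\times m\) matrix \({\varvec{U}}_m\) of estimated principal component scores (the definition of \({\varvec{S}}_m\) is not reproduced in this appendix), and it replaces the scores by synthetic Gaussian draws. The function names `projection_matrix` and `partial_estimates` and all simulated quantities are hypothetical.

```python
import numpy as np


def projection_matrix(U):
    """Orthogonal projector onto the column space of the n x m matrix U.

    Assumed form: S_m = U (U^T U)^{-1} U^T. The pseudo-inverse is used so
    that the function still works if U is rank deficient.
    """
    return U @ np.linalg.pinv(U.T @ U) @ U.T


def partial_estimates(Z, Y, U):
    """Return (beta_hat, B_hat) computed from Z, Y and the score matrix U.

    beta_hat = (Z^T (I - S_m) Z)^{-1} Z^T (I - S_m) Y
    B_hat    = Z^T (I - S_m) Z / n
    """
    n = Z.shape[0]
    M = np.eye(n) - projection_matrix(U)   # annihilates the columns of U
    ZtMZ = Z.T @ M @ Z
    beta_hat = np.linalg.solve(ZtMZ, Z.T @ M @ Y)
    B_hat = ZtMZ / n
    return beta_hat, B_hat


# Synthetic illustration (all values below are made up for the example).
rng = np.random.default_rng(0)
n, p, m = 200, 2, 3
U = rng.normal(size=(n, m))                # stand-in for FPCA scores
Z = rng.normal(size=(n, p)) + U[:, :p]     # scalar covariates correlated with the scores
beta_true = np.array([1.0, -0.5])
gamma_scores = np.array([0.8, 0.3, -0.2])  # stand-in for the functional part
Y = Z @ beta_true + U @ gamma_scores       # noise-free response

beta_hat, B_hat = partial_estimates(Z, Y, U)
```

Because the simulated response is noise-free and \(({\varvec{I}}-{\varvec{S}}_m){\varvec{U}}_m={\varvec{0}}\) under the assumed form of \({\varvec{S}}_m\), `beta_hat` coincides with `beta_true` up to floating-point error, and `B_hat` is a symmetric positive definite \(p\times p\) matrix.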

Proof of Theorem 2

Theorem 3.1 in Shin (2009) and Lemma 2 imply that

Setting \({\varvec{V}}=[\langle X_1,\gamma \rangle ,\ldots ,\langle X_n,\gamma \rangle ]^T\), under the alternative hypothesis,

First, applying Lemma 1, we conclude that

By routine calculation, one has

and

Thus it holds that

Notice that

Applying the orthogonality of \({\hat{v}}_i\), one has

where the last equality follows from

Then, we have

Applying Lemma 1 and the fact that \(\varvec{\varepsilon }\) is independent of \({\varvec{Z}}\) and X, we have

Thus

Using the Cauchy-Schwarz inequality, (13) and (15), one can establish that

Similarly, using the Cauchy-Schwarz inequality, (14) and (15), one has

As a result

Theorem 2 is proven by the positive definiteness of the matrix \({\varvec{B}}\). \(\square \)

Proof of Theorem 3

According to Theorem 3.1 in Shin (2009) and the model under \({{\widetilde{H}}}_A\)

one has

where W is a p-dimensional normally distributed vector with mean zero and covariance matrix \(\sigma ^2{\varvec{B}}^{-1}\).

Furthermore, arguing as for \(\frac{1}{n}{\text{ RSS }}( H_0)\), one has

Combining (19) and (20) with the definition of the non-central chi-squared distribution completes the proof of Theorem 3. \(\square \)
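
The role of the non-central chi-squared limit can be illustrated with a small Monte Carlo sketch in Python. It relies only on the standard definition: if \(W\sim N(\mu ,I_p)\), then \(W^TW\) follows a non-central chi-squared distribution with p degrees of freedom and non-centrality parameter \(\Vert \mu \Vert ^2\). The exact test statistic and non-centrality parameter of Theorem 3 are not reproduced in this appendix, so the values of `delta` below are hypothetical, and the function names `noncentral_chi2_draw` and `mc_power` are illustrative.

```python
import random


def noncentral_chi2_draw(rng, mean):
    """One draw of W^T W with W ~ N(mean, I); non-centrality is sum(mu^2)."""
    return sum(rng.gauss(mu, 1.0) ** 2 for mu in mean)


def mc_power(p, delta, alpha=0.05, n_draws=20000, seed=1):
    """Monte Carlo estimate of P(chi2_p(delta) > central (1 - alpha) quantile)."""
    rng = random.Random(seed)
    # Critical value: empirical (1 - alpha) quantile of the central chi2_p law.
    central = sorted(noncentral_chi2_draw(rng, [0.0] * p) for _ in range(n_draws))
    crit = central[min(int((1 - alpha) * n_draws), n_draws - 1)]
    # Put the whole non-centrality on the first coordinate: ||mean||^2 = delta.
    mean = [delta ** 0.5] + [0.0] * (p - 1)
    hits = sum(noncentral_chi2_draw(rng, mean) > crit for _ in range(n_draws))
    return hits / n_draws


# Hypothetical non-centrality values; delta = 0 corresponds to the null.
power_curve = {delta: mc_power(p=2, delta=delta) for delta in (0.0, 2.0, 10.0)}
```

At `delta = 0` the estimated rejection rate is close to the nominal level `alpha`, and it increases with `delta`, which is the qualitative behaviour that a non-central chi-squared limit implies for the power against local alternatives.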

Cite this article

Yu, P., Zhang, Z. & Du, J. A test of linearity in partial functional linear regression. Metrika 79, 953–969 (2016). https://doi.org/10.1007/s00184-016-0584-x

DOI: https://doi.org/10.1007/s00184-016-0584-x

Keywords

- Functional data analysis

- Partial functional linear regression

- Functional principal component analysis

- Asymptotics