Abstract

In recent years, digital twins have become one of the most promising concepts for complex manufacturing processes, owing to their accuracy and adaptivity in real-time what-if scenarios. In the current study, material removal by femtosecond laser (femtolaser) ablation has been examined both theoretically, with molecular dynamics (MD) simulations, and experimentally. The simulation responses are integrated into a digital twin that combines machine learning techniques, physics and decision-making algorithms. The experimental data from femtolaser ablation have been compared with the simulation results, and the applicability of the digital twin model has been evaluated.

1 Introduction

Laser micro-processing has increasingly been adopted by the manufacturing industry in recent years, owing to the opportunities and applications offered by micro- and submicro-scale products. Femtolasers are broadly used in laser micro-processing applications. In femtolaser ablation, pulses of femtosecond duration interact with the target material, and the absorbed radiation causes melting, vaporization and sublimation. Femtolaser (FL) ablation, characterized by short temporal and small spatial scales as well as high pressures and temperatures, is a promising micro-processing technique because of its several advantages over conventional lasers with longer pulse durations [1,2,3]. Its exceedingly short pulse duration constrains heat diffusion and hence limits the heat-affected zone (HAZ). Because heating is concentrated within each pulse, a smaller volume of material is removed per pulse, and more precise machining results are obtained than with longer laser pulses [4, 5].

Experimental investigations of FL ablation reveal that debris forms along with the crater. The height of the debris depends on the target material, the laser energy and the number of pulses fired at the target. It has further been observed that the crater diameters are larger than the laser beam diameter; the crater shape correlates with the radiation intensity distribution only at low fluence values. The larger crater diameters are attributed to scattering of the laser beam in the near-surface plasma, so that the plasma interacts with crater formation. Debris results from both liquid-phase explosion and condensation of plasma particles [6]. Experimental results [5] are shown in Fig. 1.

1.1 Femtolasers and molecular dynamics

The intrinsic difficulties of micro-scale processing, including FL ablation, call for highly accurate simulation methods [7]. Molecular dynamics (MD) simulates the natural motion of a molecular system by numerically solving Newton's equations of motion. Because of the complexity arising from the vast number of particles, numerical methods are required to simulate the physical motion of the molecules accurately. The system is represented as an ensemble of N mass points (particles) that interact through force fields derived from interaction potentials. An MD simulation provides the characteristics of the molecular system, including kinetic and potential energies, velocities and particle positions, at each timestep selected within the duration of the process. Additional quantities, such as pressure, temperature and autocorrelation functions, can be derived without introducing new parameters [8, 9].
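As an illustration of how such derived quantities follow from the particle data alone, the sketch below computes the instantaneous temperature from the particle velocities through equipartition, T = 2K/(3Nk_B). It is a minimal sketch, assuming SI units and 3N degrees of freedom (the three removed when the total momentum is fixed are ignored); the function names are hypothetical.

```python
import numpy as np

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def kinetic_energy(masses, velocities):
    """Total kinetic energy K = sum(1/2 m v^2); masses shape (N,), velocities shape (N, 3)."""
    masses = np.asarray(masses, dtype=float)
    velocities = np.asarray(velocities, dtype=float)
    return 0.5 * np.sum(masses[:, None] * velocities**2)

def instantaneous_temperature(masses, velocities):
    """Equipartition estimate T = 2K / (3 N k_B); assumes 3N degrees of freedom."""
    n = len(masses)
    return 2.0 * kinetic_energy(masses, velocities) / (3.0 * n * K_B)

# Usage with two hypothetical particles (SI units)
m = np.array([9.27e-26, 9.27e-26])  # roughly the mass of one Fe atom, kg
v = np.array([[300.0, 0.0, 0.0], [0.0, -300.0, 0.0]])
print(instantaneous_temperature(m, v))
```

The same kinetic-energy sum is what a velocity-based thermostat or a velocity-rescaling step would act on.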

The energy transfer in FL ablation can be separated into two phases: (1) the electrons absorb the photon energy, and (2) the absorbed energy is redistributed to the lattice, which leads to material removal [10, 11]. This separation relies on the consideration that ionization (a few to tens of femtoseconds) and free-electron heating (during the pulse) are completed within such a short time that the lattice temperature does not change during the absorption of the laser pulse [12]. The pair potential approximation (PPA) is the principal approach used in MD to determine interaction potentials, because it describes material properties accurately and efficiently while reducing the required computational time [13]. To obtain a realistic description of the atomic interactions, phenomenologically derived potential functions are used. In this study, the Morse potential function (MPF) is selected as the most appropriate, owing to its efficient applicability to metals [8, 14].

Significant effort has been devoted in recent years to studying the femtolaser-induced decomposition of metals. As several studies show, the outcomes of MD modelling of ultrashort laser ablation depend on the laser fluence relative to the ablation threshold [15]. At fluences close to the ablation threshold, thermoelastic stresses develop and drive material removal. At higher fluences, material removal is strongly affected by phase separation near the critical point, and a further increase in fluence leads to phase explosion owing to the strong overheating of the irradiated volume. The ablation process has been analysed for distinct laser pulse fluences in order to reveal the effects of temperature and stress [16, 17].

Herein, molecular dynamics is chosen over other modelling techniques, such as hierarchical modelling and higher-order theories [18], because of the geometric specifications of interest, namely the crater height and width.

1.2 Digital twins

Modelling within the framework of digitalization is expected to lead to digital twins. There is a plethora of works dealing with this concept; for manufacturing process modelling, however, the definition of Söderberg [19] is adopted here, according to which a digital twin “links large amounts of data to fast simulation making them possible to perform real-time optimization of products and production processes”. This refers to the establishment of sophisticated virtual models applied throughout manufacturing [20, 21]. In line with Industry 4.0, digital twins are increasingly used for real-time process monitoring and optimization [22,23,24]. Several works have examined the application of digital twins to complex manufacturing processes in order to achieve closed-loop optimization of the manufacturing system [25, 26]. Dynamic, real-time data-driven models used as digital twins can increase adaptability and monitoring efficiency, leading to optimized thermal (laser-based) manufacturing processes [27, 28].

The technologies used to implement a digital twin range from physics-based modelling [29] to knowledge management [30], control theory [31] and machine learning [32]. Each of these technologies has its own shortcomings, which correspond, respectively, to uncertainty handling, quantification, inner system state estimation and intuitiveness. Consequently, uncertainty management, quantification, internal system state estimation and intuitiveness are the key aspects that need to be addressed.

In any case, both the model itself and the extraction of the digital twin appear rather prone to subjectivity, as they are tailored to individual cases. The challenge addressed here is the use of MD models to create a digital twin. This is done by determining the requirements that originate from the functionalities a digital twin needs in order to handle the complicated case of the femtolaser process. The key concept in this direction is the ability to respond to what-if scenarios, which may even support decision-making under (near) zero-defect manufacturing [33].

1.3 Research structure and added value

The current work focuses on integrating physics and decision-making algorithms to create a digital twin. Femtolaser ablation is used as the paradigm because its complexity is appropriate for demonstration purposes, and molecular dynamics is used to model the ablation process. The first section of the work presents the architecture of the digital twin designed to predict and analyse femtolaser material removal. The next section examines the laser ablation of iron through an MD simulation approach. Finally, the proposed approach is examined through a proof-of-concept case study that evaluates the performance and efficiency of the digital twin using machine learning techniques.

The added value of this work is a method that can answer what-if scenarios and thus partially address the gap in decision-making under (near) zero-defect manufacturing. Integrating a hidden Markov model (HMM) and the internet of things (IoT) into the digital twin makes it possible to estimate the probabilities, and model the uncertainty, of the different states occurring during the manufacturing process in real time.

2 Digital twin framework

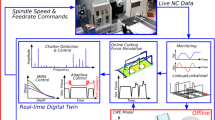

The goal of this framework is to design a tool that can predict and visualize the progress of a manufacturing process, in particular femtolaser material removal. To this end, communication with the other levels, namely the machine and system levels, must be realizable. Three individual modules will interact and exchange data throughout the process. The digital twin will take the real data collected from these modules as input, so that real-time adjustments and process optimization can be performed [19]. The digital twin will be built on the MD modelling technique and on decision-making algorithms. Since both the modelling and the communication among the modules must cover all potential states of the process, the functionalities have to be defined at the very beginning of the digital twin framework design. This definition is carried out in a four-stage modelling preparation (Fig. 2) and helps in responding to what-if scenarios during the run phase (stage 5 in Fig. 3).

The first requirement of the digital twin is model adaptivity, a functionality needed for real-time adjustments. The process parameters of the digital twin have been selected as those with the highest impact on the adaptivity of the virtual model. The model should therefore be reconfigurable with respect to both the process parameters and the boundary conditions. Together, these properties allow the digital twin to respond to what-if scenarios.

The second functionality is the provision of responses regarding quality assessment. In this study, the parameters used for quality assessment are the keyhole shape and the temperature distribution. This requirement also entails comparisons between scenarios; in this case, however, the property changes must involve local temporal or spatial variations.

Process control is the third functionality to be addressed. It requires the integration of different time profiles of the process parameters, which refer to the interaction time between the laser beam and the target material. In addition to these requirements, three kinds of modules are foreseen that must interact with each other and feed data into the virtual model for failure prediction and adaptation to changes:

1. Molecular dynamics module (MD)
2. Metrology and control enforcement module (IoT)
3. Decision-making module based on artificial intelligence

In fact, the most straightforward way (computationally) to meet all requirements simultaneously is through heuristics [34]. In this approach, a plethora of model configurations (varying the process parameters and boundary conditions parametrically) can be assessed, and the one that best matches the measurements can be selected. This approach has two major drawbacks: it can be time-consuming, and, without a systematic study of the cases, two different configurations (such as defects) may lead to the same effect. Regarding technological feasibility, these issues will therefore be addressed with a machine learning module (MLM), preferably implemented as a variational autoencoder or a generative adversarial network.

The MLM will meet all requirements simultaneously by analysing the collected data and progressively improving the model's performance. An intermediate solution examined herein is a hidden Markov model (HMM). HMMs are statistical models, built on Markov chains, that represent random sequential processes undergoing transitions between states [35]. They have been shown to perform well in modelling different types of discrete and continuous data and in predicting scenarios before they occur. Compared with other data-driven approaches, HMMs are highly efficient at dealing with non-observable or missing data, because they identify latent variables that cannot be disclosed from the observed data alone [36].

The HMM will use information related to systemic modelling, pre-programmed within the digital twin platform, to predict future states from the current state. Furthermore, it is re-trainable, and hidden state estimation is considerably simplified by the Viterbi algorithm. The HMM can also be used to model material uncertainty arising from the supply chain. It is worth noting that coupling the HMM with a reduced order model [37] tailored to MD is expected to yield a system with maximum efficiency in terms of computational effort. The HMM relies on two matrices that must be known a priori, the “transition” matrix and the “emission” matrix. The transition matrix models the probabilities of transition among the HMM states, whereas the emission matrix is estimated by modelling the reliability of the sensor. However, the a priori specification of these matrices may change during the process.

The digital twin framework is summarized in Fig. 3. On the physical-space side, the IoT module feeds data to the MD module and directly to the HMM; these data relate to the temperature profile of the laser ablation process performed in the physical space. Likewise, the MD module uses the specified process parameters and the data collected from the physical space to feed the HMM. The HMM makes predictions concerning model calibration, quality assessment and adaptive control. The calibration results are fed back into the MD model and increase its reconfigurability by altering the process parameters or the material properties. The adaptive control results are fed into the IoT module by integrating different process parameters. Finally, the quality assessment results identify the defect type.
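The role of the two matrices in predicting future states can be sketched with the standard forward (filtering) recursion for a discrete HMM. This is a minimal sketch, assuming two hidden states and a binary sensor; the filtering approach, the function names and the sensor reliability p = 0.9 are illustrative assumptions, not the authors' implementation. The emission matrix takes the form [p, 1−p; 1−p, p] used later in the case study.

```python
import numpy as np

def forward_filter(obs, start, trans, emit):
    """Normalized forward recursion: row t holds P(state_t | obs_0..t).
    trans[i, j] = P(next state j | state i); emit[i, k] = P(sensor reading k | state i)."""
    start, trans, emit = (np.asarray(a, dtype=float) for a in (start, trans, emit))
    belief = start * emit[:, obs[0]]
    belief /= belief.sum()
    history = [belief]
    for o in obs[1:]:
        # Predict with the transition matrix, then weight by the emission likelihood
        belief = (belief @ trans) * emit[:, o]
        belief /= belief.sum()
        history.append(belief)
    return np.array(history)

def predict_ahead(belief, trans, k=1):
    """k-step prediction of the state distribution from the current belief: b A^k."""
    a = np.asarray(trans, dtype=float)
    return np.asarray(belief, dtype=float) @ np.linalg.matrix_power(a, k)

trans = np.array([[0.85, 0.15], [0.15, 0.85]])  # transition matrix
p = 0.9                                          # assumed sensor reliability
emit = np.array([[p, 1 - p], [1 - p, p]])        # emission (sensor reliability) matrix
beliefs = forward_filter([0, 0, 1, 1], [0.5, 0.5], trans, emit)
print(beliefs[-1], predict_ahead(beliefs[-1], trans, k=3))
```

Filtering gives the current-state belief from all readings so far; multiplying by powers of the transition matrix then extrapolates it to future steps, which is the "prediction from the current state" described above.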

3 Molecular dynamics simulations

3.1 General description

MD is a deterministic method based on classical mechanics, initially developed by Alder and Wainwright (1956) [38]. MD simulations can be regarded as numerical experiments that closely represent physical ones. MD relies on the idea that, if each element is built from individual particles and the dynamic constraints of each particle are defined, the macroscopic properties of the element can be obtained by statistical methods [39]. The MD method can overcome several limitations encountered in modelling thermal processes, including modelling of the temperature distribution, the melt pool size and surface tension [40].

When the material absorbs energy from the laser beam, the electron and lattice temperatures increase significantly. Once the material temperature reaches a certain level, phase changes occur that lead to the removal of material from the bulk. Material removal results from complex thermal and mechanical processes, in which temperature is the dominant parameter, because laser irradiation must produce high temperatures to melt and evaporate the material. Since the lasers used in micro- and nano-processing operate in the femtosecond range, the irradiated material is considered to convert from solid to gas without melting [37]. The irradiated target is assumed to have a body-centred cubic (BCC) structure at an initially constant ambient temperature. Temperature increases modify the lattice structure of pure Fe: up to 1118 K the crystal has a BCC structure; between 1118 K and 1663 K it is rearranged into a face-centred cubic (FCC) structure; and above 1663 K it returns to BCC. Because of the ultrafast heating produced by femtosecond pulses, it is reasonable to assume that pure Fe retains its BCC structure throughout. During ablation, a uniform temporal distribution is created on the target as the laser beam propagates perpendicularly to the target surface. The intensity of the laser beam is assumed to have a Gaussian temporal distribution, and the Beer-Lambert law is used to model the exponential distribution of photons in the target. An MD approach implemented in a code, using the Morse potential function (MPF), is applied in this study to simulate the laser ablation process [41]. As presented in Fig. 4, this approach can be divided into four basic segments [42].
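The laser source described above can be written as a worked expression. This is a sketch under common assumptions that the text does not state explicitly: a Gaussian temporal profile with full width at half maximum τ_p centred at t_0, Beer-Lambert attenuation with optical penetration depth δ, and no surface reflection. The symbols I, I_0, τ_p, t_0, δ and F (incident fluence) are introduced here for illustration only.

$$I(z,t) = I_0\, \exp\!\left(-\frac{z}{\delta}\right) \exp\!\left(-4\ln 2\,\frac{(t-t_0)^2}{\tau_p^2}\right), \qquad I_0 = \frac{2F}{\tau_p}\sqrt{\frac{\ln 2}{\pi}}$$

The expression for I_0 follows from requiring the time integral of I(0, t) to equal F, since the Gaussian factor integrates to (τ_p/2)√(π/ln 2).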

The MD data provide the material removal and phase change results. The sections of the MD code and the methodology followed in each are described in [41]. The interatomic potential and the potential energy of the particles in the system are obtained from the Morse potential function (MPF) [14]. The initial particle positions are arranged in a BCC structure, and the initial velocities at room temperature are drawn from a random Maxwell-Boltzmann distribution.
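The initialization step can be sketched as follows: BCC positions are generated from a two-atom cubic basis, and velocities are drawn from the Maxwell-Boltzmann distribution, with each Cartesian component normal with variance k_B T/m. This is a minimal sketch; the centre-of-mass drift removal, the exact rescaling to the target temperature, the function names and the Fe lattice constant of 2.8665 Å are assumptions for illustration, not taken from the paper's code.

```python
import numpy as np

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg

def bcc_lattice(nx, ny, nz, a):
    """Positions of a BCC crystal of nx*ny*nz cubic cells with edge a (two atoms per cell)."""
    basis = np.array([[0.0, 0.0, 0.0], [0.5, 0.5, 0.5]])
    cells = np.array([[i, j, k] for i in range(nx) for j in range(ny) for k in range(nz)], dtype=float)
    return ((cells[:, None, :] + basis[None, :, :]) * a).reshape(-1, 3)

def maxwell_boltzmann_velocities(n, mass, temperature, rng):
    """Draw each component from N(0, k_B T / m), remove the centre-of-mass drift and rescale
    so that the instantaneous temperature m*sum(v^2) / (3 n k_B) equals the target."""
    v = rng.normal(0.0, np.sqrt(K_B * temperature / mass), size=(n, 3))
    v -= v.mean(axis=0)
    t_inst = mass * np.sum(v**2) / (3 * n * K_B)
    return v * np.sqrt(temperature / t_inst)

A_FE = 2.8665e-10    # assumed BCC Fe lattice constant, m
M_FE = 55.845 * AMU  # mass of one Fe atom, kg
positions = bcc_lattice(10, 10, 10, A_FE)
velocities = maxwell_boltzmann_velocities(len(positions), M_FE, 300.0, np.random.default_rng(42))
print(positions.shape, velocities.std())
```

Removing the drift prevents the whole crystal from translating during equilibration; the final rescaling makes the starting temperature exactly 300 K rather than only approximately so.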

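The MPF is of the general form

$$\varphi(r_{ij}) = D\left[e^{-2\alpha (r_{ij} - r_0)} - 2e^{-\alpha (r_{ij} - r_0)}\right]$$

where r_ij is the distance between particles i and j, D is the dissociation energy, α determines the width of the potential well and r_0 is the equilibrium interatomic distance.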

For a pair potential to be applicable to cubic metals, the potential energy must have a minimum at r = r0, its magnitude must decrease faster than r⁻³ as r increases, and all elastic constants must be positive.

For the elastic constants C11 and C12, C11 must be greater than C12. Dj is the operator determined by Eq. 4.

Since φ(rij) = −D at rij = r0, where D is the dissociation energy, the MPF has its minimum at the equilibrium distance, fulfils the aforementioned conditions and can be applied to cubic metals [14].
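The MPF and the corresponding radial pair force can be evaluated as in the sketch below, which also checks that φ(r0) = −D and that the force vanishes at r0. The Fe parameters used (D = 0.4174 eV, α = 1.3885 Å⁻¹, r0 = 2.845 Å) are the values commonly quoted from Girifalco and Weizer and are an assumption here; the values of Table 2 should be used for the actual simulation.

```python
import numpy as np

def morse(r, D, alpha, r0):
    """Morse pair potential phi(r) = D [exp(-2 alpha (r - r0)) - 2 exp(-alpha (r - r0))]."""
    x = np.exp(-alpha * (np.asarray(r, dtype=float) - r0))
    return D * (x * x - 2.0 * x)

def morse_force(r, D, alpha, r0):
    """Radial force -dphi/dr = 2 alpha D [exp(-2 alpha (r - r0)) - exp(-alpha (r - r0))];
    positive values are repulsive (r < r0), negative values attractive (r > r0)."""
    x = np.exp(-alpha * (np.asarray(r, dtype=float) - r0))
    return 2.0 * alpha * D * (x * x - x)

D, ALPHA, R0 = 0.4174, 1.3885, 2.845  # assumed Fe values (eV, 1/Angstrom, Angstrom)
r = np.array([2.5, 2.845, 3.5, 5.0])
print(morse(r, D, ALPHA, R0))
print(morse_force(r, D, ALPHA, R0))
```

In an MD loop, the force on particle i from particle j is this radial value multiplied by the unit vector from j to i.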

As a primary step in setting up the MD simulations, the initial conditions are determined: the material structure, the potential, kinetic and total energies, and the boundary conditions (BCs). This involves defining the material structure as BCC, the potential energy from pair potentials using the MPF, the kinetic energy of the particles arranged on the BCC lattice, the total energy from a simple Hamiltonian, and the boundary conditions through periodic boundary conditions (PBCs), reflecting boundary conditions (RBCs) and free boundary conditions (FBCs) [41].

To obtain the required initial thermal equilibrium, the initial velocities of the particles are defined. The finite difference method is used to solve the ordinary differential equations of motion: given the positions, velocities and accelerations of the particles at time t, the corresponding values are obtained at t + δt. The Verlet algorithm has been applied to integrate the equations of motion [43].
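The text cites the Verlet algorithm [43] without specifying the variant. The sketch below uses the velocity-Verlet form, an assumption made because it advances positions and velocities together from t to t + δt, and checks it on a one-dimensional harmonic oscillator rather than on the MD system itself; the function name is hypothetical.

```python
import numpy as np

def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Velocity-Verlet integration of m x'' = F(x):
    x(t+dt) = x + v dt + F(t) dt^2 / (2m);  v(t+dt) = v + [F(t) + F(t+dt)] dt / (2m)."""
    f = force(x)
    for _ in range(n_steps):
        x = x + v * dt + 0.5 * (f / mass) * dt**2
        f_new = force(x)
        v = v + 0.5 * (f + f_new) / mass * dt
        f = f_new
    return x, v

# Check on a unit harmonic oscillator (k = m = 1): one period is 2*pi
x_end, v_end = velocity_verlet(np.array([1.0]), np.array([0.0]), lambda x: -x,
                               mass=1.0, dt=0.01, n_steps=628)
energy = 0.5 * v_end[0]**2 + 0.5 * x_end[0]**2
print(x_end, v_end, energy)  # x returns close to 1 and the energy stays close to 0.5
```

Only one force evaluation per step is needed, because the force at t + δt is reused at the start of the next step; this matters in MD, where force evaluation dominates the cost.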

To ensure that the particle velocities follow the Maxwell-Boltzmann distribution, the velocity autocorrelation function (VAF) has been implemented. Periodic boundary conditions (PBCs), a set of boundary conditions widely used in computer simulations and mathematical models, have been applied so that the target is treated as infinite in the lateral directions. In MD simulations, PBCs are widely used to compute bulk gases, liquids, crystals and mixtures [44].
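Lateral PBCs are usually implemented by wrapping coordinates back into the box and by applying the minimum-image convention to interparticle separations. The sketch below applies both only along the periodic axes, assuming x and y are periodic and z is not (the top surface is free and the bottom is reflecting); the function names and box size are hypothetical.

```python
import numpy as np

def wrap_positions(pos, box, periodic):
    """Map coordinates back into [0, L) along the periodic axes only."""
    pos = np.array(pos, dtype=float)
    box = np.asarray(box, dtype=float)
    periodic = np.asarray(periodic, dtype=bool)
    pos[:, periodic] = np.mod(pos[:, periodic], box[periodic])
    return pos

def minimum_image(dr, box, periodic):
    """Replace each separation by its nearest periodic image along the periodic axes."""
    dr = np.array(dr, dtype=float)
    box = np.asarray(box, dtype=float)
    periodic = np.asarray(periodic, dtype=bool)
    dr[..., periodic] -= box[periodic] * np.round(dr[..., periodic] / box[periodic])
    return dr

box = (10.0, 10.0, 10.0)          # hypothetical cell edge lengths
periodic = (True, True, False)    # lateral x, y periodic; z non-periodic
print(minimum_image(np.array([[9.0, -9.0, 9.0]]), box, periodic))
print(wrap_positions(np.array([[11.0, -1.0, 12.0]]), box, periodic))
```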

Free boundary conditions (FBCs) have been applied to the upper surface of the target, so that particles can move and be removed; under FBCs, no additional conditions are imposed on the boundary atoms of the model volume [45]. In addition, reflecting boundary conditions (RBCs) have been implemented at the bottom surface to ensure velocity damping. RBCs are used to analyse the effect of finite size on ensemble averages and to simulate “caging” or other boundary effects; they can be realized either by reversing velocities at defined simulation boundaries or by a confining potential acting on all particles [46]. As photons are absorbed, the kinetic energy, and hence the velocity, of the particles increases. To determine which particles are removed as a result of this increase, a particle removal criterion has been applied. The criterion relies on the cohesive energy, which is of major significance in MD modelling because it defines the amount of energy the particles can absorb. The total energy of each particle is compared with the cohesive energy per particle: if it exceeds the cohesive energy per particle, the particle is ablated; otherwise, it is not. In this work, the cohesive energy is estimated with the MPF approach. Figure 5 illustrates the MD simulation code for the laser ablation process. The cohesive energy criterion makes it possible to determine which particles can leave the equilibrium state [42].
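The removal criterion and the velocity-reversing form of the reflecting bottom boundary can be sketched as below. This is a minimal sketch under stated assumptions: how each particle's energy is evaluated is left to the caller, the reflecting boundary is implemented by velocity reversal (one of the two options mentioned), and the value of 4.28 eV used as the cohesive energy of Fe, like the particle data, is illustrative. The function names are hypothetical.

```python
import numpy as np

def remove_ablated(pos, vel, energy, cohesive_energy):
    """Cohesive-energy criterion: particles whose energy exceeds the cohesive energy per
    particle are removed (ablated); the others stay in the crystal. How `energy` is
    evaluated per particle is left to the caller (an assumption of this sketch)."""
    energy = np.asarray(energy, dtype=float)
    keep = energy <= cohesive_energy
    return np.asarray(pos)[keep], np.asarray(vel)[keep], int(np.count_nonzero(~keep))

def reflect_bottom(pos, vel, z_bottom):
    """Reflecting boundary at z = z_bottom: mirror particles that crossed it and reverse
    the z-component of their velocity."""
    pos = np.array(pos, dtype=float)
    vel = np.array(vel, dtype=float)
    crossed = pos[:, 2] < z_bottom
    pos[crossed, 2] = 2.0 * z_bottom - pos[crossed, 2]
    vel[crossed, 2] *= -1.0
    return pos, vel

# Usage with hypothetical values; energies in eV, 4.28 eV taken as the cohesive energy of Fe
pos = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 2.0], [0.0, 1.0, -0.2]])
vel = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 5.0], [0.0, 0.0, -3.0]])
pos, vel = reflect_bottom(pos, vel, z_bottom=0.0)
pos, vel, n_removed = remove_ablated(pos, vel, energy=[1.2, 5.0, 4.28], cohesive_energy=4.28)
print(n_removed, pos, vel)
```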

Particles in the gaseous state are disconnected from the crystal model. Based on the MD code, the effects of the laser irradiation duration have been examined. New, higher velocities are assigned to the particles that do not satisfy the cohesive energy criterion for removal from the crystal. The set simulation time determines whether the simulation terminates or continues: if the simulation time has not elapsed after particles are removed from the crystal, the simulation proceeds to another loop with the new, rescaled velocities.
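The text does not specify how the new, higher velocities are computed. One simple possibility, assumed here, is to raise the total kinetic energy of the remaining particles by the energy absorbed in the current step through a uniform scaling factor s = √((K + ΔE)/K), which keeps the velocity directions unchanged. The sketch below is illustrative only, and the function names are hypothetical.

```python
import numpy as np

def kinetic(vel, mass):
    """Total kinetic energy of particles of equal mass."""
    return 0.5 * mass * np.sum(np.asarray(vel, dtype=float)**2)

def rescale_velocities(vel, mass, absorbed_energy):
    """Hypothetical rescaling: raise the total kinetic energy of the remaining particles by
    `absorbed_energy` via a uniform factor s = sqrt((K + dE) / K), keeping directions."""
    vel = np.asarray(vel, dtype=float)
    k_old = kinetic(vel, mass)
    if k_old == 0.0:
        raise ValueError("cannot rescale a system at rest")
    return vel * np.sqrt((k_old + absorbed_energy) / k_old)

vel = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
new_vel = rescale_velocities(vel, mass=2.0, absorbed_energy=6.0)
print(new_vel, kinetic(new_vel, 2.0))
```

A uniform scaling heats the remaining crystal without biasing any direction; a spatially resolved deposition (more energy near the surface, following the Beer-Lambert law) would instead scale each particle or layer separately.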

3.2 Simulation characteristics and experimentation

Iron (Fe) has been selected as the target metal for the MD simulation of laser ablation because of its wide use in industry. Table 1 lists the Fe parameters used in the simulation, which are considered constant across timesteps.

The simulations cover laser fluences from the ablation threshold up to 30 J/cm² [42]. The Verlet algorithm has been used to model the atomic velocities, and the MPF to simulate the interactions between particles. The MPF parameters for Fe, as obtained from the compressibility, the lattice constant and the sublimation energy of a perfect lattice, are presented in Table 2.

The system is initially in a BCC configuration. Particle velocities are drawn from the Maxwell-Boltzmann distribution to reach the intended initial temperature (300 K). The laser beam model expresses the number of photons travelling through the material structure. The photons follow a Gaussian distribution in the XY plane, and PBCs are applied to model an infinite medium. Because shock-wave reflection could produce artificial ablation effects, velocity damping and RBCs have been implemented at the bottom surface of the computational cell. The Beer-Lambert law is used to define the number of photons. The following parameters have also been defined for the MD simulations: a laser wavelength of 800 nm and laser fluences from 0.1 to 30 J/cm². The interaction time between the laser beam and the target and the pulse duration are kept constant.
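The photon bookkeeping described above can be sketched in three steps: the photon areal density follows from the fluence divided by the photon energy hc/λ, the lateral profile is a Gaussian in the XY plane, and the Beer-Lambert law gives the fraction of photons absorbed in each depth layer. This is a minimal sketch; the 1/e² beam radius w and the penetration depth δ are hypothetical values, and the function names are illustrative, not taken from the paper's code.

```python
import numpy as np

H = 6.62607015e-34  # Planck constant, J s
C = 2.99792458e8    # speed of light, m/s

def photons_per_area(fluence_j_cm2, wavelength_m):
    """Photon areal density (photons per m^2) carried by a pulse of the given fluence."""
    photon_energy = H * C / wavelength_m         # J per photon
    return fluence_j_cm2 * 1e4 / photon_energy    # J/cm^2 -> J/m^2

def gaussian_fluence(r, peak_fluence, w):
    """Radial Gaussian profile F(r) = F0 exp(-2 r^2 / w^2) with 1/e^2 radius w."""
    return peak_fluence * np.exp(-2.0 * r**2 / w**2)

def layer_absorption_fractions(z_edges, delta):
    """Beer-Lambert: fraction of incident photons absorbed in each layer [z_k, z_k+1),
    i.e. exp(-z_k/delta) - exp(-z_k+1/delta), for penetration depth delta."""
    z_edges = np.asarray(z_edges, dtype=float)
    return np.exp(-z_edges[:-1] / delta) - np.exp(-z_edges[1:] / delta)

w = 10e-6      # hypothetical 1/e^2 beam radius, m
delta = 15e-9  # hypothetical optical penetration depth, m
n0 = photons_per_area(1.0, 800e-9)  # at 1 J/cm^2 peak fluence, 800 nm
n_edge = photons_per_area(gaussian_fluence(w, 1.0, w), 800e-9)
fractions = layer_absorption_fractions(np.linspace(0.0, 5 * delta, 6), delta)
print(f"{n0:.3e} photons/m^2 at the centre, {n_edge:.3e} at r = w")
print(fractions)
```

The decreasing layer fractions reproduce the effect discussed below: fewer photons reach the deeper layers, so the ablation rate drops as the keyhole deepens.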

To evaluate the MD model, a series of FL ablation experiments has been performed. The laser system consisted of a mode-locked Ti:sapphire laser system (CPA-2101, Clark-MXR, Inc.) pumped by a CW solid-state Nd:YVO4 laser (Millenia Vs, Spectra Physics). A 5-fold beam-expanding telescope has been used to expand the laser beam at the back aperture of the focusing lens; this tight focusing geometry allows high laser fluences to be reached in the FL ablation experiments. A lens of 10 mm focal length has been used to focus the laser beam onto the target surface. The irradiation power was adjusted using a motorized λ/2 plate combined with an ultrafast polarizer (Melles Griot, 16PPB200). To control the total interaction time between the laser beam and the target, a fast mechanical shutter (system rise time 3 ms, Newport 845HP) has been integrated. A scanning electron microscope (SEM) has been used to inspect the ablated area, and the ablation depth has been measured with a laser scanning microscope (LSM). The parameters of the system are presented in Table 3. The overall setup for the FL ablation experiments is illustrated in Fig. 6.

Fe plates of 0.5-mm thickness and 99.99% purity have been used as targets. The properties of the Fe specimens used in the experiments are presented in Table 4.

As can be observed in Fig. 7, particle movement and removal are most intense at the centerline of the laser beam, and the number of moved or removed particles decays with distance along the beam radius. The simulation code also allows the temporal behaviour of the ablation process to be investigated. Initially, significant movement and removal of particles are observed in the simulation volume near the upper surface. The ablation rate then decreases as the depth of the keyhole in the bulk material increases. This is because, according to the Beer-Lambert law, fewer photons reach the lower layers of the material. Additionally, particles that have changed state (i.e. from solid to vapour) but remain within the keyhole absorb some of the photons.

In Fig. 7, lighter red corresponds to particles at greater depth, while yellow corresponds to particles that have moved beyond the material surface, i.e. have been ejected.

Changes in the ablation rate are also observed in the experimental results, and certain features can be seen in the ablated area (Fig. 8). Figure 9 shows SEM views of the holes ablated at 0.2, 1, 6 and 20 J/cm² with 50 pulses. A molten phase is evident from the disordered surface geometry. At fluences close to the ablation threshold, no trace of molten material has been observed.

As illustrated in Fig. 10, the ablation depth increases linearly with the laser fluence. In the experiments, the smallest depth, 0.5 nm, was measured for a 0.07 J/cm² laser pulse. The SEM images, however, reveal material removal from the target surface at fluences below 0.07 J/cm² per pulse. This discrepancy results from the limited accuracy of the LSM for depths below 25 nm. According to the computational results, FL ablation begins at 0.06 J/cm². The mean deviation between the computational and experimental results has been calculated to be 20%.
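The text does not give the formula behind the quoted mean deviations. One plausible reading, assumed here, is the mean absolute relative deviation between computed and measured depths at matching fluences, expressed as a percentage. The depths in the usage line are hypothetical, not the paper's data, and the function name is illustrative.

```python
import numpy as np

def mean_relative_deviation(simulated, measured):
    """Mean absolute relative deviation, in percent: 100 * mean(|sim - exp| / |exp|)."""
    sim = np.asarray(simulated, dtype=float)
    meas = np.asarray(measured, dtype=float)
    return 100.0 * float(np.mean(np.abs(sim - meas) / np.abs(meas)))

# Hypothetical depths (nm) at matching fluences, not the paper's data
print(mean_relative_deviation([1.1, 1.8, 4.4], [1.0, 2.0, 4.0]))
```

Normalizing by the measured depth weights each fluence equally, which matters here because depths span from below 1 nm to several hundred nanometres across the ablation areas.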

Fig. 11 illustrates the experimental and computational results for laser fluences in the range of 0.1 to 1 J/cm². The ablation becomes more distinct and grows exponentially, with ablation depths estimated in the range of 2 to 12 nm. The mean deviation between the computational and experimental results has been calculated to be 12.7%.

In the third ablation area, covering fluences from 1 to 10 J/cm² (Fig. 12), the ablation becomes more intense. Holes are generated with depths from 12 to 500 nm per laser pulse. As in the second ablation area, the ablation depth grows exponentially with the laser fluence. The mean deviation between the computational and experimental results has been calculated to be 6.7%.

Moreover, defects can be introduced by taking advantage of the micro-scale handling offered by molecular dynamics. More specifically, regions of a desired shape and size, on the scale of microns, can be selected and assigned different properties.

4 Case study

To demonstrate the usability of the HMM, two cases have been examined, as shown in Fig. 13: one for calibration, by detecting variations in the boundary conditions within the framework of model adaptivity, and one for quality assessment, by detecting defects. The procedure includes the following steps: (i) Measurement extraction (i.e. the temperature field) from the MD modules: this step supplies the information required for each what-if scenario. (ii) Consideration of at least two states for each scenario: this initial modelling stage provides the appropriate level of detail for the corresponding scenario. (iii) Definition of the transition matrix for each case, which reflects the status quo of the production line. The matrices [0.85, 0.15; 0.15, 0.85] and [0.98, 0.02; 0.98, 0.02] are considered for the two scenarios, respectively. The latter models the fact that defects are expected to be rare [48] in the case of quality assessment, whereas boundary condition changes can be much more frequent because of production scheduling and demand. (iv) Definition of the metrology reliability matrix, taken as [p, 1−p; 1−p, p] in both cases. This means that the hidden states are matched one-to-one with the sensor decisions, while leaving flexibility to choose the error that the electronics may incur. The sensor is assumed to be, in reality, a complex system of sensors (e.g. a multi-/hyper-spectral camera) accompanied by a local decision-making system. This removes the need for further modelling, although the sensor can still cooperate with other systems, as also implied in Fig. 2. (v) Finally, an adequate number of runs must be performed to obtain mean values of the estimation (identification) error.
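Steps (iii) to (v) can be reproduced in outline with a Monte Carlo experiment: sample hidden states and sensor readings from each HMM, decode the states with the Viterbi algorithm, and average the fraction of misidentified states over several runs. This is a minimal sketch; the uniform initial distribution, the number and length of the runs, the random seed and the function names are assumptions, and the printed error rates are not the paper's results.

```python
import numpy as np

def simulate_hmm(start, trans, emit, n_steps, rng):
    """Sample a hidden-state path and the matching sensor readings from a discrete HMM."""
    states = np.empty(n_steps, dtype=int)
    obs = np.empty(n_steps, dtype=int)
    for t in range(n_steps):
        probs = start if t == 0 else trans[states[t - 1]]
        states[t] = rng.choice(len(probs), p=probs)
        obs[t] = rng.choice(emit.shape[1], p=emit[states[t]])
    return states, obs

def viterbi(obs, start, trans, emit):
    """Log-space Viterbi decoding of the most likely hidden-state sequence."""
    log_start, log_trans, log_emit = np.log(start), np.log(trans), np.log(emit)
    delta = log_start + log_emit[:, obs[0]]
    back = []
    for o in obs[1:]:
        scores = delta[:, None] + log_trans  # scores[i, j]: from state i to state j
        back.append(np.argmax(scores, axis=0))
        delta = np.max(scores, axis=0) + log_emit[:, o]
    path = [int(np.argmax(delta))]
    for b in reversed(back):  # backtrack through the stored argmaxes
        path.append(int(b[path[-1]]))
    return np.array(path[::-1])

def mean_error_rate(trans, p, n_runs=20, n_steps=200, seed=0):
    """Step (v): average fraction of misidentified hidden states over repeated runs."""
    rng = np.random.default_rng(seed)
    start = np.array([0.5, 0.5])               # assumed uniform initial state
    emit = np.array([[p, 1 - p], [1 - p, p]])  # step (iv): metrology reliability matrix
    errors = []
    for _ in range(n_runs):
        states, obs = simulate_hmm(start, trans, emit, n_steps, rng)
        errors.append(np.mean(viterbi(obs, start, trans, emit) != states))
    return float(np.mean(errors))

calibration = np.array([[0.85, 0.15], [0.15, 0.85]])  # step (iii): boundary-condition changes
quality = np.array([[0.98, 0.02], [0.98, 0.02]])      # step (iii): rare defects
for p in (0.6, 0.8, 0.99):
    print(f"p={p}: calibration {mean_error_rate(calibration, p):.3f}, "
          f"quality {mean_error_rate(quality, p):.3f}")
```

Working in log space avoids numerical underflow when multiplying many probabilities along long sequences. The sketch requires p < 1; at p = 1 the zero entries of the emission matrix become −inf in log space.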

5 Results

Figure 14 shows the outcome of the procedure described above: two diagrams of the error rate as a function of the probability p. The left diagram refers to the calibration case and the right diagram to the quality-assessment case. The error rate represents the deviation of the HMM estimate from the actual state in each case, as a function of p. After a sufficient number of runs, it is evident that the error rate is affected at low values of p; the deviation, however, remains at an acceptable level. Furthermore, in both cases, the reliability of the sensors plays the major role above the threshold of 98%, which delivers promising results regarding the functionality of the system.
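For reference, one plausible formalisation of the error rate discussed above is given below. It assumes that the error rate is the fraction of misidentified hidden states, averaged over R runs of length T (neither R nor T is stated in the text). It is shown together with the error of a trivial estimator that simply adopts each sensor decision; under the reliability matrix of step (iv), that baseline error equals 1 - p regardless of the hidden state, which gives a reference line against which an HMM error curve can be read.

```latex
\varepsilon(p) = \frac{1}{RT} \sum_{r=1}^{R} \sum_{t=1}^{T}
  \mathbf{1}\left[\hat{s}^{(r)}_t \neq s^{(r)}_t\right],
\qquad
\Pr\left(o_t \neq s_t\right) = 1 - p
\quad \text{for} \quad
B = \begin{bmatrix} p & 1-p \\ 1-p & p \end{bmatrix}.
```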

6 Conclusions

Molecular dynamics appears to be a good candidate simulation technology for use in a digital twin framework, since it is precise down to the molecular level and provides maximum efficiency with regard to computational effort. Moreover, as shown above, when coupled with an HMM, MD can handle uncertainty related to the part itself and may lead to robust modelling and control, which can be very efficient for part and process optimization. Within the DT framework, the exchange with an MLM of the very specific, stochastic-based structure described above will eliminate time consumption and enhance the systematic study of the cases. Finally, based on the case study examined, the virtual model can deliver satisfactory results, although the error rate is affected at low probabilities in both the model-adaptivity and the quality-assessment cases. Nevertheless, above the threshold of 98%, the error rate is mainly affected by the reliability of the sensors. As future work, the HMM requires further elaboration. In addition, the time efficiency of the framework has to be taken into consideration, and the states should be modelled in a more formal way.

References

Ogawa Y, Ota M, Nakamoto K, Fukaya T, Russell M, Zohdi T, Yamazaki K, Aoyama H (2016) A study on machining of binder-less polycrystalline diamond by femtosecond pulsed laser for fabrication of micro milling tools. CIRP Ann 65(1):245–248

Meijer J, Du K, Gillner A, Hoffmann D, Kovalenko VS, Masuzawa T, Ostendorf A, Poprawe R, Schulz W (2002) Laser machining by short and ultrashort pulses, state of the art and new opportunities in the age of the photons. CIRP Ann 51(2):531–550

Schmidt M, Zäh M, Li L, Duflou J, Overmeyer L, Vollertsen F (2018) Advances in macro-scale laser processing. CIRP Ann 67(2):719–742

Semerok A, Chaléard C, Detalle V, Lacour J-L, Mauchien P, Meynadier P, Nouvellon C, Sallé B, Palianov P, Perdrix M, Petite G (1999) Experimental investigations of laser ablation efficiency of pure metals with femto, pico and nanosecond pulses. Appl Surf Sci 138–139:311–314

Nolte S, Momma C, Kamlage G, Ostendorf A, Fallnich C, von Alvensleben F, Welling H (1999) Polarization effects in ultrashort-pulse laser drilling. Appl Phys A Mater Sci Process 68(5):563–567

Kelly R, Miotello A (1994) Laser-pulse sputtering of atoms and molecules. Part II. Recondensation effects. Nucl Instrum Methods Phys Res B 91:682–691

Stavropoulos P, Chryssolouris G (2006) Nanomanufacturing processes and simulation: a critical review. In: Proceedings of the 4th International Symposium on Nanomanufacturing (ISNM), Cambridge, MA, USA, November 2006, pp 46–52

Rieth M (2000) Molecular dynamics calculations for nanostructured systems. PhD Thesis, Engineering Science Department, School of Engineering, University of Patras

Allen MP (2004) Introduction to molecular dynamics simulation. In: Computational Soft Matter: From Synthetic Polymers to Proteins, Lecture Notes, NIC Series 23, pp 1–28

Ladieu F, Martin P, Guizard S (2002) Measuring thermal effects in femtosecond laser-induced breakdown of dielectrics. Appl Phys Lett 81:957–959

Diels JC, Rudolph W (1996) Ultrashort laser pulse phenomena. Academic Press

Gibbon P, Forster E (1996) Short-pulse laser-plasma interactions. Plasma Phys Control Fusion 38:769–793

Allen MP, Tildesley DJ (1989) Computer simulation of liquids. Oxford Clarendon Press

Girifalco LA, Weizer VG (1959) Application of the Morse potential function to cubic metals. Phys Rev 114(3):687–690

Nedialkov NN, Imamova SE, Atanasov PA, Berger P, Dausinger F (2005) Mechanism of ultrashort laser ablation of metals: molecular dynamics simulation. Appl Surf Sci 247(1–4):243–248

Cheng C, Xu X (2004) Molecular dynamic study of volumetric phase change induced by a femtosecond laser pulse. Appl Phys A 79:761–765

Afanasiev YV, Chichkov BN, Demchenko NN, Isakov VA, Zavestovskaya IN (1999) Ablation of metals by ultrashort laser pulses: theoretical modeling and computer simulations. J Russ Laser Res 20(2):89–115

Papacharalampopoulos A, Makris S, Bitzios A, Chryssolouris G (2016) Prediction of cabling shape during robotic manipulation. Int J Adv Manuf Technol 82(1–4):123–132

Söderberg R, Wärmefjord K, Carlson JS, Lindkvist L (2017) Toward a digital twin for real-time geometry assurance in individualized production. CIRP Ann 66(1):137–140

Schleich B, Anwer N, Mathieu L, Wartzack S (2017) Shaping the digital twin for design and production engineering. CIRP Ann 66(1):141–144

Tao F, Zhang M, Liu Y, Nee AYC (2018) Digital twin driven prognostics and health management for complex equipment. CIRP Ann 67(1):169–172

Schützer K, de Andrade Bertazzi J, Sallati C, Anderl R, Zancul E (2019) Contribution to the development of a Digital Twin based on product lifecycle to support the manufacturing process. Procedia CIRP 84:82–87

Liu J, Zhou H, Tian G, Liu X, Jing X (2019) Digital twin-based process reuse and evaluation approach for smart process planning. Int J Adv Manuf Technol 100:1619–1634

Tao F, Cheng J, Qi Q, Zhang M, Zhang H, Sui F (2018) Digital twin-driven product design, manufacturing and service with big data. Int J Adv Manuf Technol 94:3563–3576

Lee J, Bagheri B, Kao HA (2015) A cyber-physical systems architecture for Industry 4.0-based manufacturing systems. Manuf Lett 3:18–23

Kritzinger W, Karner M, Traar G, Henjes J, Sihn W (2018) Digital Twin in manufacturing: a categorical literature review and classification. IFAC-PapersOnLine 51(11):1016–1022

Papacharalampopoulos A, Stavropoulos P (2019) Towards a digital twin for thermal processes: control-centric approach. Procedia CIRP 86:110–115

Chang PC, Chen LY (2007) A hybrid regulation system by evolving CBR with GA for a twin laser measuring system. Int J Adv Manuf Technol 31:1156–1168

Aguado JV, Huerta A, Chinesta F, Cueto E (2015) Real-time monitoring of thermal processes by reduced-order modeling. Int J Numer Methods Eng 102(5):991–1017

Banerjee A, Dalal R, Mittal S, Joshi KP (2017) Generating digital twin models using knowledge graphs for industrial production lines. UMBC Information Systems Department

Zubov A, Naeem O, Hauger SO, Bouaswaig A, Gjertsen F, Singstad P, Hungenberg KD, Kosek J (2017) Bringing the on-line control and optimization of semibatch emulsion copolymerization to the pilot plant. Macromol React Eng 11(4):1700014

Nikolakis N, Alexopoulos K, Xanthakis E, Chryssolouris G (2019) The digital twin implementation for linking the virtual representation of human-based production tasks to their physical counterpart in the factory-floor. Int J Comput Integr Manuf 32(1):1–12

Papacharalampopoulos A, Petrides D, Stavropoulos P (2019) A defect tracking tool framework for multi-process products. Procedia CIRP 79:523–527

Raymond JW, Gardiner EJ, Willett P (2002) Heuristics for similarity searching of chemical graphs using a maximum common edge subgraph algorithm. J Chem Inf Comput Sci 42(2):305–316

Rabiner LR (1989) A tutorial on hidden Markov models and selected applications in speech recognition. Proc IEEE 77:257–286

Nasfi R, Amayri M, Bouguila N (2020) A novel approach for modelling positive vectors with inverted Dirichlet-based hidden Markov models. Knowl-Based Syst 192

Tönshoff HK, Momma C, Ostendorf A, Nolte S, Kamlage G (2000) Microdrilling of metals with ultrashort laser pulses. J Laser Appl 12(1):23–27

Alder BJ, Wainwright TE (1957) Phase transition of a hard sphere system. J Chem Phys 27:1208–1209

Stavropoulos P, Salonitis K, Chryssolouris G (2008) Molecular dynamics simulations for nanomanufacturing processes: a critical review. In: Proceedings of the 6th International Conference on Manufacturing Research, Uxbridge, UK, pp 655–664

Foteinopoulos P, Papacharalampopoulos A, Stavropoulos P (2018) On thermal modeling of additive manufacturing processes. CIRP J Manuf Sci Technol 20:66–83

Stavropoulos P, Chryssolouris G (2007) Molecular dynamics simulations of laser ablation: the Morse potential function approach. Int J Nanomanuf 1(6):736–750

Stavropoulos P, Efthymiou K, Chryssolouris G (2012) Investigation of the material removal efficiency during femtosecond laser machining. Procedia CIRP 3:471–476

Amini M, Eastwood JW, Hockney RW (1987) Time integration in particle models. Comput Phys Commun 44:83–93

Rapaport DC (2004) The art of molecular dynamics simulation, 2nd edn. Cambridge University Press, Cambridge (see esp. pp 15–20)

Schäfer C, Urbassek HM, Zhigilei LV, Garrison BJ (2002) Pressure-transmitting boundary conditions for molecular-dynamics simulations. Comput Mater Sci 24(4):421–429

Lavrinenko YS, Morozov IV, Valuev IA (2016) Reflecting boundary conditions for classical molecular dynamics simulations of nonideal plasmas. J Phys Conf Ser 774

Alfa Aesar GmbH & Co KG Chemicals (2006) Alfa Aesar Material Catalogue 2006-2007.

Leone C, Lopresto V, Pagano N, Genna S, Iorio I (2010) Laser cutting of silicon wafer by pulsed Nd:YAG source. In: Proceedings of the 6th Virtual International Conference on Innovative Production Machines and Systems (IPROMS)


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stavropoulos, P., Papacharalampopoulos, A. & Athanasopoulou, L. A molecular dynamics based digital twin for ultrafast laser material removal processes. Int J Adv Manuf Technol 108, 413–426 (2020). https://doi.org/10.1007/s00170-020-05387-7
