Abstract

Arthroscopic view can be optimized with technological innovation, which requires objective measures for arthroscopic image quality. The aim of this study was to formulate such measures by defining uniquely interpretable descriptions of disturbances of the arthroscopic image. Disturbances in the arthroscopic view were defined focusing on the size of the disturbed area and the duration of the disturbance. They were categorized as Bleeding, Turbidity, Air Bubbles, Loose Fibrous Tissue, Attached Fibrous Tissue, Tissue too Close, and Instrument too Close. Ten arthroscopic knee procedures were recorded on digital film with a frame rate of 25 images per second (almost 5 h in total). Using time-action analysis, two investigators individually scored the presence and type of each disturbance in all 5 h of the ten arthroscopies. The kappa statistic was used to assess agreement between the investigators. Additionally, five other observers, who had no prior training, scored 1 min of each of the ten arthroscopies (total of 10 min) to evaluate the definitions for unique interpretability. The adjusted kappa statistic (kappa corrected for high prevalence) showed good tester agreement for all disturbances (range 0.71–0.96), also for the observers who had no prior training. Only Turbidity showed moderate agreement (0.59 and 0.44). This is due to the difficulty of assessing the exact start and end time of Turbidity. We succeeded in describing uniquely interpretable definitions for seven disturbances. This allows objective and quantitative determination of arthroscopic image quality, which is required to improve future arthroscopic equipment and training facilities.

Introduction

The image quality during arthroscopic procedures depends on a number of factors: condition of the camera, the light source and the arthroscope, type of irrigation system, use of a tourniquet, condition of the joint, portal placement, use of instruments, and skills of the surgeon. Even if the basic conditions are met, i.e., properly functioning equipment and sufficient surgical skills, maintaining a clear view is sometimes difficult [19]. In the literature, no consensus exists on the optimal configuration or conditions for irrigation to maintain a certain arthroscopic image quality [8, 18]. In order to study the arthroscopic image quality, objective and quantitative measures are required, which to our knowledge are not available at present. The literature only presents measures that investigate the effectiveness of automated pump systems, which is partly related to the arthroscopic image quality [2, 7, 12, 13]. However, these measures are either subjective, such as visual clarity on a three-point scale, or objective, such as the mean operation time or the number of fluid bags used. Where the measures are objective, they are not directly related to the image quality. Therefore, these measures are less relevant for surgeons, whose focus is on the quality of the arthroscopic view during a procedure.

In order to objectify arthroscopic image quality, we propose to define disturbances of the arthroscopic view that contain information on the size of the disturbed areas and their duration. From these definitions, the arthroscopic image quality can be defined as good or sufficient if none of the disturbances is present. The aim of this study is to present uniquely interpretable descriptive definitions of disturbances of the arthroscopic view. The level of agreement in interpreting the definitions is determined by performing time-action analysis and calculating the kappa statistic.

Methods

Before the study design is presented, time-action analysis is introduced, and the descriptive definitions of the disturbances are proposed.

Time-action analysis

Taylor, one of the pioneers of process analysis, introduced time measuring of process variables in 1881 [16]. He improved the productivity of Midvale Steel Company by determining time standards for standardized jobs, and by separating the planning and the execution of work. Time-action analysis is a quantitative method to determine the number and duration of actions, and has been previously used in the medical field [3, 4, 11, 15]. It represents the relative timing of different events and the duration of the individual events. In the medical field, time-action analysis has proven its value in objectifying and quantifying surgical actions. It has been successfully introduced as a method to determine learning curves [5], and to compare the efficiency of new instruments or techniques [6, 15]. In this study, time-action analysis was used in a different manner: instead of documenting surgical actions, disturbances of the arthroscopic view were measured.

Definitions of disturbances

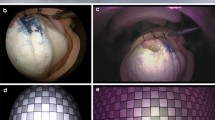

As stated above, the image quality during arthroscopic procedures depends on a number of factors. It was assumed that the camera, the scope, and the light source were functioning properly, as these should be inspected regularly. Furthermore, we assumed that our only source of information is the movie of the arthroscopic view. This implies that although a disturbance could be caused by different factors, only its effect on the view is determined. From these conditions and our own observations we defined seven disturbances: Bleeding (influenced by the use of a tourniquet and instruments), Turbidity (influenced by the condition of the joint), Air Bubbles (influenced by the type of irrigation system and the use of instruments), Loose Fibrous Tissue (influenced by the condition of the joint and the use of instruments), Attached Fibrous Tissue (influenced by the condition of the joint), Tissue too Close (influenced by the condition of the joint, portal placement, and skills of the surgeon), and Instrument too Close (influenced by skills of the surgeon; Fig. 1). Each description of a disturbance contained a time component and an image area component.

The definition of the time component was the same for all disturbances:

- The start time is the first image frame for which a disturbance conforms to the image area definition.

- The end time is the last image frame for which a disturbance conforms to the image area definition. In practice, one always has to view at least one frame more than the end time.

- There is a threshold of one image frame. This implies that a disturbance of one frame only is not marked, and vice versa an undisturbed view of one frame only is not marked.

- One disturbed image frame in between an undisturbed frame and a disturbed frame of another category is not marked.

- If a Disturbance 1 is overruled by another Disturbance 2, marking of Disturbance 1 is stopped, and the last image frame that does contain Disturbance 1 is marked as end time. If Disturbance 1 becomes visible again, a new start time is indicated, e.g., Turbidity will be marked until another disturbance, such as Air Bubbles or Tissue too Close, takes over.
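The time component rules can be illustrated with a short sketch. This is not the procedure used in the study, where marking was done by hand; it assumes that one disturbance has already been scored as a list of 0/1 marks per frame. The function names and the order in which the one-frame threshold is applied (one-frame gaps are filled first, then one-frame disturbances are dropped) are assumptions made for illustration, and the rule on one disturbance overruling another is not modeled.

```python
from itertools import groupby


def runs(marks):
    """Run-length encode a 0/1 sequence as (value, start, end), inclusive frame indices."""
    out, pos = [], 0
    for value, group in groupby(marks):
        length = len(list(group))
        out.append((value, pos, pos + length - 1))
        pos += length
    return out


def apply_threshold(marks):
    """Apply the one-frame threshold (assumed order: fill gaps, then drop single frames)."""
    cleaned = list(marks)
    # An undisturbed view of one frame only, between disturbed frames, is not marked.
    for value, start, end in runs(cleaned):
        if value == 0 and start == end and 0 < start < len(cleaned) - 1:
            cleaned[start] = 1
    # A disturbance of one frame only is not marked.
    for value, start, end in runs(cleaned):
        if value == 1 and start == end:
            cleaned[start] = 0
    return cleaned


def intervals(marks):
    """Return (start_frame, end_frame) pairs for each marked disturbance."""
    return [(s, e) for v, s, e in runs(apply_threshold(marks)) if v == 1]


# Hypothetical marks for eleven frames of one disturbance category.
example = [0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0]
print(intervals(example))
```

In the example, the one-frame gap at frame 3 is bridged and the isolated disturbed frame 8 is ignored, so a single interval from frame 1 to frame 5 remains.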

The image area component differed between the disturbances. Since no guidelines on image quality could be found in the literature, the minimum image area that should be disturbed was set to a fairly logical 25% of the total circular arthroscopic image (Fig. 2). We also acknowledged that a disturbance localized in the center of the image would have more impact. For Bleeding, Turbidity, Air Bubbles, Loose Fibrous Tissue, and Attached Fibrous Tissue the image area components were described as:

- The disturbance should cover at least 25% of the image area, or

- The disturbance should cover at least 5% but less than 25% of the image area, and propagate through the image center for at least one frame.

In the case of Bleeding, the image area should be red and not transparent; otherwise it is marked as Turbidity (Fig. 1a). Turbidity comprises thin streams of blood, yellowish thick joint fluid (Fig. 1b), fatty bubbles, or small pieces of tissue, which should be no larger than the fatty bubbles and fewer than ten in number. For Attached Fibrous Tissue, which is fibrous tissue that is attached to one of the anatomic structures of the joint, the image area that is blocked should be less than 90% (Fig. 1e). Tissue too Close implies that tissue is blocking 90 to 100% of the image area, and gives a blurry or out-of-focus view that could be colored white, yellow, or red (Fig. 1f). For Instrument too Close, at least 50% of the image area should be covered with the instrument (Fig. 1g), since the overview of the operation area is then strongly diminished. The shape of the instrument becomes out of focus, blurry, and colored black. When the shaver is used, it is possible that both Attached Fibrous Tissue and Instrument too Close have to be marked simultaneously.
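The image area thresholds described above can be written down as simple predicates. The sketch below is only an illustration under stated assumptions: the covered fraction of the image and whether the disturbance passes through the image center are taken as given inputs (in the study they were judged by eye), the function names are hypothetical, and color or transparency criteria are left out.

```python
def general_area_rule(area_fraction, through_center):
    """Area rule for Bleeding, Turbidity, Air Bubbles, Loose and Attached Fibrous Tissue.

    area_fraction: disturbed part of the circular image, between 0.0 and 1.0.
    through_center: True if the disturbance propagates through the image center.
    """
    if area_fraction >= 0.25:
        return True
    return area_fraction >= 0.05 and through_center


def attached_fibrous_tissue(blocked_fraction, through_center):
    """Attached Fibrous Tissue: general rule applies and less than 90% is blocked."""
    return blocked_fraction < 0.90 and general_area_rule(blocked_fraction, through_center)


def tissue_too_close(blocked_fraction):
    """Tissue too Close: tissue blocks 90 to 100% of the image area."""
    return blocked_fraction >= 0.90


def instrument_too_close(instrument_fraction):
    """Instrument too Close: the instrument covers at least 50% of the image area."""
    return instrument_fraction >= 0.50


# Hypothetical frames: a small central disturbance counts, a small peripheral one does not.
print(general_area_rule(0.10, through_center=True))
print(general_area_rule(0.10, through_center=False))
```

Keeping each threshold in its own function makes it easy to vary a single value, such as the 25% limit, and rescore the same material.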

Evaluation

Arthroscopic knee procedures are performed most frequently, and it was expected that all the proposed disturbances would occur. Therefore, ten arthroscopic knee procedures were arbitrarily chosen to be recorded in a period of 1 month (Table 1). Only cruciate ligament reconstructions were excluded. The procedures were performed by two junior staff members. Drills, vaporizing instruments, microfracturing, or meniscal repair techniques were not used. The patients were not notified of the recordings, because the operation was not influenced, and the patients’ identities could not be traced from the video. One operation took an unusually long time (91 min). We decided to keep this recording, because a large number of disturbances occurred, which was useful for the evaluation of the definitions.

The procedures were recorded with a consumer digital video camera (JVC GR D93), a notebook PC (HP Compaq NC6120 P-M 750 60 Gb 512 Mb), an S-VHS cable, and a FireWire cable. The arthroscopic view was captured in AVI format (image resolution: 720 × 576, frame rate: 25 images per second). A time-action analysis was performed for each separate disturbance, in which every arthroscopic image was marked ‘1’ if that particular disturbance occurred, or ‘0’ if that disturbance was absent. This analysis was performed with Adobe Premiere Pro, version 1.5 (Adobe Systems Incorporated), which enables frame-by-frame analysis of digital movies. The data were processed with Microsoft Office Excel 2003 (Microsoft Corporation) and Matlab, version 7.0.4.365 (R14) (The Mathworks) to calculate a kappa statistic.

In the first phase, two investigators independently performed the time-action analysis on all 5 h of film. Neither investigator was a surgeon, which implied that they analyzed the arthroscopic images using the definitions only, and were not tempted to interpret the images based on surgical experience. After this, the results were discussed to identify possible differences in interpretation of the disturbances. This resulted in the adjustment of the definitions to the final version as presented. The main change was the addition of a transparent screen containing squares that each matched 5% of the image area, which was superimposed on the film material. This facilitated the judgment of the image area component. In the second phase, five observers were asked to perform a time-action analysis on 1 min of each of the ten operations (total of 10 min). The 10 min were arbitrarily chosen, but care was taken that all disturbances would occur in this time period. The five observers had no prior training, and were asked to score the disturbances by using the definitions only. In this way, true tester agreement could be assessed.

A kappa value of 0.7 or more was considered to be a good agreement, implying that the definitions were sufficiently formulated [1]. Since the prevalence of the majority of the disturbances was small in comparison to the total number of frames, we expected a high/low prevalence effect in the kappa statistic. Therefore, the adjusted kappa statistic that compensates for this effect was calculated as well [14]. The mean of the kappas was used in order to compare the results for more than two testers. Additionally, the absolute percentage of agreement was calculated.
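The agreement measures can be sketched for two lists of per-frame 0/1 marks. The code below is a minimal illustration, not the authors' Excel or Matlab processing. It assumes that the adjusted kappa is the prevalence-adjusted bias-adjusted kappa (2 × observed agreement − 1), and that the mean kappa for more than two testers is the mean over all tester pairs; neither detail is stated explicitly in the text, and the function names are hypothetical.

```python
from itertools import combinations


def agreement_stats(a, b):
    """Percentage agreement, Cohen's kappa, and adjusted kappa for two 0/1 sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("both testers must score the same, non-empty set of frames")
    n = len(a)
    p_o = sum(1 for x, y in zip(a, b) if x == y) / n  # observed agreement
    pa1, pb1 = sum(a) / n, sum(b) / n                 # fraction marked '1' per tester
    p_e = pa1 * pb1 + (1 - pa1) * (1 - pb1)           # agreement expected by chance
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else float("nan")
    adjusted = 2 * p_o - 1                            # assumed form of the adjusted kappa
    return {"percent_agreement": 100 * p_o, "kappa": kappa, "adjusted_kappa": adjusted}


def mean_pairwise(scores, key):
    """Mean of one statistic over all pairs of testers (assumed pooling method)."""
    values = [agreement_stats(a, b)[key] for a, b in combinations(scores, 2)]
    return sum(values) / len(values)


# Hypothetical example: 100 frames, each tester marks 5 frames, 3 of them shared.
tester_a = [1 if 0 <= i <= 4 else 0 for i in range(100)]
tester_b = [1 if 2 <= i <= 6 else 0 for i in range(100)]
print(agreement_stats(tester_a, tester_b))
```

In this example the testers agree on 96 of 100 frames, yet the normal kappa is only about 0.58 because the disturbance is rare, while the adjusted kappa is 0.92. This is the prevalence effect described above.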

Results

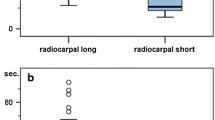

Ten arthroscopic knee procedures were performed with a mean operating time of 29 min, 59 s, and 9 frames (minimum was 13:37:17, and maximum 91:27:10). The total operation time of the ten procedures was 4 h, 59 min, 23 s, and 12 frames (Table 1). In the last two columns of Table 1, percentages of the operation time are presented for which disturbances occurred. These are merely shown to give an idea of the disturbance rate. The agreement of the two investigators who scored all 5 h of arthroscopies is summarized in Table 2. The absolute percentages of agreement are high for all disturbances. Comparing the normal and adjusted kappas shows that the difference in prevalence between the disturbed and undisturbed frames has a significant negative effect on the normal kappa values. The adjusted kappa shows only values above 0.78, which implies a good to very good agreement, except for the adjusted kappa value of Turbidity, which is moderate (0.59). The results of the five observers who scored the 10 min of film are slightly lower than the results of the two investigators, but only the adjusted kappa of Turbidity is less than 0.7 (Table 3).
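The durations above are given as minutes, seconds, and frames at 25 images per second. As a small illustration of how such timecodes relate to frame counts, and how a percentage of disturbed operation time could be derived from per-frame marks, consider the sketch below; the helper names are hypothetical and the marks in the example are made up.

```python
FPS = 25  # frame rate of the recordings, as stated in the Methods


def to_frames(minutes, seconds, frames, fps=FPS):
    """Convert a minutes:seconds:frames timecode to a total frame count."""
    return (minutes * 60 + seconds) * fps + frames


def to_timecode(total_frames, fps=FPS):
    """Convert a frame count back to (minutes, seconds, frames)."""
    seconds, frames = divmod(total_frames, fps)
    minutes, seconds = divmod(seconds, 60)
    return minutes, seconds, frames


def disturbed_percentage(marks):
    """Percentage of frames marked '1' for one disturbance."""
    return 100.0 * sum(marks) / len(marks)


shortest = to_frames(13, 37, 17)   # shortest procedure reported above
longest = to_frames(91, 27, 10)    # longest procedure reported above
print(shortest, longest, to_timecode(shortest))

# Made-up marks: 2 s of disturbance in a 10 s fragment.
print(disturbed_percentage([1] * (2 * FPS) + [0] * (8 * FPS)))
```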

Discussion

Since an optimal arthroscopic view is very important, a first step in achieving this is the setup of objective and quantitative measures to judge the arthroscopic image quality. The main result is that the proposed descriptive definitions of disturbances show sufficient tester agreement (>0.7) to qualify for this purpose. Even with no prior training, the five observers gave only slightly lower kappa values than the two investigators, who had set up the definitions and had prior training with the analysis. The moderate agreement on Turbidity can partly be explained. To identify Turbidity, the movies had to be played at normal speed, and because Turbidity sometimes evolved gradually, it was difficult to judge its occurrence consistently. This could easily result in a disagreement of a couple of frames for the start and the end time. This effect became significant because Turbidity occurred so frequently.

To our knowledge, similar studies in this field are unavailable. In order to place our results in some perspective, we compared them with studies in other medical fields where interpretation of anatomic structures is performed. The kappa values found for radiographic (0.42–0.99) and histologic (0.5) images compare marginally unfavorably with our results [9, 10, 17], but this has to be interpreted with care.

In clinical practice, surgeons have more information at their disposal than the arthroscopic view on the monitor, e.g., the orientation of their instruments and their surgical experience. Furthermore, we realize that some disturbances remain unnoticed by the operating surgeon, because she/he is used to a typical view, primarily concentrates on the treatment itself, or cannot minimize a disturbance with the current equipment. This could bias the interpretation of the arthroscopic images, which matters especially because our intention is to apply the definitions in computerized disturbance detection, where such complex cognitive image interpretation is very difficult to incorporate. Therefore, observers were used who had no surgical experience. This might have increased the kappa values.

Some aspects of the definitions are defined fairly arbitrarily, such as the size of the image area covered by a disturbance. Changes in the size of the image area probably result in different outcomes of the actual frequency and duration of disturbances, but should not influence the tester agreement. Additional research is necessary to relate the definitions to the image quality experienced by the surgeons. Furthermore, the variations in clinical practice are too large to draw significant conclusions on the percentage of the operation time that each disturbance occurs. Nevertheless, this was not the scope of this study.

Although the time-action analysis is time-consuming, it gives detailed insight into the frequency, duration, and moment of occurrence of the disturbances. In subsequent studies, the focus can be on reducing dominant disturbances, on quantitatively comparing the aspects that influence the image quality, and on other scientific evaluation of arthroscopic practice with the arthroscope as basis. Although most of the disturbances depend on several factors, the majority could be minimized with new technological developments. This is particularly true for the disturbances that can be minimized by irrigation (Bleeding, Turbidity, Air Bubbles, and Loose Fibrous Tissue; Table 1). With the help of the strict definitions, computerized disturbance detection can be developed. Such a system could be implemented in any arthroscopic pump (gravity or automated pump) to optimize irrigation. With the redesign of sheaths or cannulae, the occurrence of Attached Fibrous Tissue could be reduced. Finally, Tissue too Close and Instrument too Close depend partly on surgical skills. With the same computerized disturbance detection, the duration of the latter disturbances could be stored for a procedure, and used to give feedback to surgeons in training.

Conclusions

Seven disturbances were identified that serve as measures for arthroscopic image quality. In this study, we succeeded in describing definitions for these disturbances that are sufficiently uniquely interpretable. This allows the objective and quantitative determination of arthroscopic image quality, which is required to improve future arthroscopic equipment and training facilities.

References

1. Altman DG (1991) Practical statistics for medical research. Chapman & Hall, London, p 404

2. Ampat G, Bruguera J, Copeland SA (1997) Aquaflo pump vs FMS 4 pump for shoulder arthroscopic surgery. Ann R Coll Surg Engl 79:341–344

3. Claus GP, Sjoerdsma W, Jansen A, Grimbergen CA (1995) Quantitative standardised analysis of advanced laparoscopic surgical procedures. Endosc Surg Allied Technol 3:210–213

4. den Boer KT, de Wit LT, Dankelman J, Gouma DJ (1999) Peroperative time-motion analysis of diagnostic laparoscopy with laparoscopic ultrasonography. Br J Surg 86:951–955

5. den Boer KT, de Wit LT, Davids PH, Dankelman J, Gouma DJ (2001) Analysis of the quality and efficiency in learning laparoscopic skills. Surg Endosc 15:497–503

6. den Boer KT, Straatsburg IH, Schellinger AV, de Wit LT, Dankelman J, Gouma DJ (1999) Quantitative analysis of the functionality and efficiency of three surgical dissection techniques: a time-motion analysis. J Laparoendosc Adv Surg Tech A 9:389–395

7. Dolk T, Augustini BG (1989) Three irrigation systems for motorized arthroscopic surgery: a comparative experimental and clinical study. Arthroscopy 5:307–314

8. Ewing JW, Noe DA, Kitaoka HB, Askew MJ (1986) Intra-articular pressures during arthroscopic knee surgery. Arthroscopy 2:264–269

9. Farmer ER, Gonin R, Hanna MP (1996) Discordance in the histopathologic diagnosis of melanoma and melanocytic nevi between expert pathologists. Hum Pathol 27:528–531

10. Holt RD, Azevedo MR (1989) Fibre optic transillumination and radiographs in diagnosis of approximal caries in primary teeth. Community Dent Health 6:239–247

11. Minekus JP, Valstar ER, Rozing PM, de Voss MJ, Diercks RL, Willems WJ, Dankelman J (2005) Factors influencing the surgical process during shoulder joint replacement: time-action analysis of five different prostheses and three different approaches. Med Sci Monit 11:CR14–CR20

12. Muellner T, Menth-Chiari WA, Reihsner R, Eberhardsteiner J, Engebretsen L (2001) Accuracy of pressure and flow capacities of four arthroscopic fluid management systems. Arthroscopy 17:760–764

13. Ogilvie-Harris DJ, Weisleder L (1995) Fluid pump systems for arthroscopy: a comparison of pressure control versus pressure and flow control. Arthroscopy 11:591–595

14. Sim J, Wright CC (2005) The kappa statistic in reliability studies: use, interpretation, and sample size requirements. Phys Ther 85:257–268

15. Sjoerdsma W, Meijer DW, Jansen A, den Boer KT, Grimbergen CA (2000) Comparison of efficiencies of three techniques for colon surgery. J Laparoendosc Adv Surg Tech A 10:47–53

16. Taylor F (1915) The principles of scientific management. Harper & Brothers, New York

17. Taylor WJ, Porter GG, Helliwell PS (2003) Operational definitions and observer reliability of the plain radiographic features of psoriatic arthritis. J Rheumatol 30:2645–2658

18. Tuijthof GJ, Dusee L, Herder JL, van Dijk CN, Pistecky PV (2005) Behavior of arthroscopic irrigation systems. Knee Surg Sports Traumatol Arthrosc 13:238–246

19. Tuijthof GJ, van Dijk CN, Herder JL, Pistecky PV (2005) Clinically-driven approach to improve arthroscopic techniques. Knee Surg Sports Traumatol Arthrosc 13:48–54

Acknowledgments

This research is supported by the Technology Foundation STW, applied science division of NWO, and the technology program of the Ministry of Economic Affairs, the Netherlands. The authors wish to thank the OR personnel of the Academic Medical Centre, Amsterdam, the Netherlands for their contributions, especially Dr J. Bramer and Dr H. Sonneveld.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Tuijthof, G.J.M., Sierevelt, I.N. & van Dijk, C.N. Disturbances in the arthroscopic view defined with video analysis. Knee Surg Sports Traumatol Arthr 15, 1101–1106 (2007). https://doi.org/10.1007/s00167-007-0299-7

DOI: https://doi.org/10.1007/s00167-007-0299-7