Abstract

Multiply imaged time-variable sources can be used to measure absolute distances as a function of redshifts and thus determine cosmological parameters, chiefly the Hubble Constant H\(_0\). In the two decades up to 2020, through a number of observational and conceptual breakthroughs, this so-called time-delay cosmography has reached a precision sufficient to be an important independent voice in the current “Hubble tension” debate between early- and late-universe determinations of H\(_0\). The 2020s promise to deliver major advances in time-delay cosmography, owing to the large number of lenses to be discovered by new and upcoming surveys and the vastly improved capabilities for follow-up and analysis. In this review, after a brief summary of the foundations of the method and recent advances, we outline the opportunities for the decade and the challenges that will need to be overcome in order to meet the goal of the determination of H\(_0\) from time-delay cosmography with 1% precision and accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

When a distant variable source (e.g., a supernova or a quasar) is multiply imaged by a foreground mass distribution (e.g., a galaxy or cluster of galaxies), the multiple images appear offset in time to the observer. The delay(s) between the leading image and trailing one(s) arise from the combination of two effects. The first one is the difference in length of the optical paths. The second is a general relativistic effect, called the Shapiro (1964) delay, owing to the difference in gravitational potential experienced by the photons along the paths.

Well before the phenomenon was observed, Refsdal (1964) recognized its utility as a way to measure absolute distances and therefore infer cosmological parameters from the expansion history of the universe. In practice, a measurement of the delay(s) between the leading image and the trailing images(s) provides a measurement of the absolute difference in path length, provided the gravitational potential is sufficiently well known. Knowing the redshift, one can then infer the Hubble constant H\(_0\) and other cosmological parameters.

The prospect of a single-step direct measurement of the Hubble constant H\(_0\) is particularly appealing in light of the so-called “Hubble Tension”—the 8% difference between the determination of H\(_0\) from multiple local-universe probes (e.g., Riess et al. 2022, and references therein) and that inferred by early-universe probes under the assumption of a flat \(\Lambda\) cold dark matter (CDM) cosmology (e.g., Abdalla et al. 2022, and references therein). If the tension is real and not arising from unknown systematic errors, it implies that new physics is needed beyond the standard flat \(\Lambda\)CDM model (e.g., an early dark energy phase altering the expansion history of the universe prior to recombination, e.g., Knox and Millea 2020).

The technique—now known as strong lensing time-delay cosmography (or time-delay cosmography, TDC in short)—has come a long way since the initial proposal by Refsdal (1964). Hundreds of multiply imaged quasars (Treu et al. 2018; Lemon et al. 2022a) and the first examples of multiply imaged supernovae have been discovered (Kelly et al. 2015; Rodney et al. 2021). Techniques have been demonstrated to measure time delays with percent accuracy, given sufficient cadence and photometric precision (Courbin et al. 2018; Millon et al. 2020a). Major breakthroughs have been obtained in the modeling of high-resolution space- and ground-based images to constrain the gravitational potential of the main deflector (Suyu et al. 2010; Chen et al. 2019; Birrer et al. 2015; Shajib et al. 2020). Non-lensing data, especially stellar kinematics, has been shown to be crucial to break some of the degeneracies inherent to lens modeling (Treu and Koopmans 2002; Suyu et al. 2014; Birrer et al. 2016, 2020). The role of the environment and of the mass along the line of sight is much better understood than a decade ago (Suyu et al. 2010; Greene et al. 2013; Collett et al. 2013; McCully et al. 2017).

The improvements in data quality and quantity, and analysis techniques, over the past two decades have allowed time-delay cosmography to reach levels of precision of order of 2% percent in the determination of the Hubble constant H\(_0\) by 2020, based on a sample of just 7 lenses (Shajib et al. 2020; Wong et al. 2020; Millon et al. 2020).

In the current decade, attention has turned to investigating systematic uncertainties as well as further improving the precision, with the goal of achieving \(\sim\) 1% precision and accuracy. Achieving this goal will require larger samples (\(\gtrsim\) 40) of lenses with time delays than currently available, as well as high-angular-resolution imaging and spectroscopy to characterize the gravitational potential of the deflector, which is currently considered the main source of residual systematic uncertainty (Birrer et al. 2020). If all goes to plan, this decade will see first light of Euclid, of the Nancy Grace Roman Space Telescope, and of the Vera C. Rubin Observatory, which should provide orders of magnitude increases in sample size. In parallel, the recent first light of the James Webb Space Telescope and continued development of adaptive optics capabilities from the ground mean that high-angular-resolution imaging and spectroscopic follow-up will become feasible.

In sum, the 2020 decade is brimming with opportunities for time-delay cosmography, and major breakthroughs are within reach. However, exploiting the wealth of information expected from this influx of data presents non negligible logistical and modeling challenges. Describing the opportunities and challenges of the decade ahead is the main goal of this review, which builds on a review published in 2016 in this journal (Treu and Marshall 2016, hereafter TM16), to which we refer the reader for more details on the theoretical background, the history of time-delay cosmography, and developments up until 2015. We also refer the reader to a general review written in 2018 (Suyu et al. 2018), and one that is currently in preparation as a chapter of a book-size conference proceeding of reviews (Birrer et al. 2022, in prep.).

This review is organized as follows. In Sect. 2, we give a very brief theoretical background, aimed mostly at defining the notation and clarifying some of the more subtle aspects and recent developments of TDC. In Sect. 3, we review the history of time-delay cosmography, emphasizing chiefly the developments since 2015. In Sect. 4, we focus on the decade ahead, identifying the open issues, opportunities, and challenges, and concluding with some forecasts. Section 5 summarizes the main points of this review.

2 Theoretical background

In this section we provide a brief summary of the physics of gravitational lenses (Schneider et al. 2006) and how the observable lens time delays depend on both the cosmological world model and our assumptions about the mass distribution of the deflector.

2.1 General considerations

Gravitational lenses really are lenses, in that the optics of light traveling through the curved space-time around a massive galaxy are identical to those of a glass lens. For example, Fermat’s Principle of Least Time for a gravitational lens (Perlick 1990a, b) has that the light travel time through a gravitational lens is

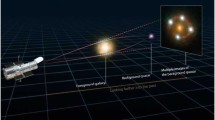

In this equation, \(\varvec{\theta }\) and \(\varvec{\beta }\) are the apparent (lensed) sky position and the true (unlensed) sky position of the background source, respectively. The observable position \(\varvec{\theta }\) and the unobservable position \(\varvec{\beta }\) differ by the scaled deflection angle \(\varvec{\alpha }({\varvec{\theta }})\), which is typically \(\sim 1\) arcsecond in a galaxy-scale strong gravitational lens system. We recognize the Fermat potential \(\Phi (\varvec{\theta };\varvec{\beta })\) as the refractive index of the lens. Unlike the refractive index of most glass lenses, \(\psi (\varvec{\theta })\) is, in general, spatially varying: \(\psi (\varvec{\theta })\) is the scaled, projected gravitational potential along the lens sightline. While the gravitational potential \(\psi (\varvec{\theta })\) is dominated by the massive deflector, contributions to \(\psi (\varvec{\theta })\) from other structures close to the optical axis have small but significant effects. Figure 1 provides an illustration.

Multiple images of the background source form at stationary points of the Fermat potential, where \(\varvec{\nabla }\tau (\varvec{\theta }) = \varvec{\nabla }\Phi (\varvec{\theta };\varvec{\beta }) = 0\). The time delay \(\Delta \tau _{\mathrm{AB}}\) between brightness fluctuations in image A and image B is observable:

Here, \(\Delta \Phi _{\mathrm{AB}}\) is the Fermat potential difference between the two image positions, which can be predicted from a model for the mass distribution of the lens, along with the deflection angle \(\varvec{\alpha }(\varvec{\theta })\). Equation (3) shows the route to inferring cosmological parameters from strong lens time delays: the lens model predicts \(\Delta \Phi _{\mathrm{AB}}\), and the world model predicts the time-delay distance \(D_{\Delta \mathrm{t}}\), defined in terms of standard angular diameter distances as

where the subscripts d and s denote the deflector and source, respectively. The predicted time delay can be compared with the observed time delays to infer jointly the lens model and cosmological parameters, as discussed in the next section.

Upper panel: schematic lens diagram, illustrating the deflection of light in a galaxy-scale gravitationally lensed quasar system. The deflection is dominated by the massive lens galaxy (graphic credit: Martin Millon). Lower panel: neighboring galaxies of the main deflector, the unseen cosmic web of dark matter that pervades the space along the line of sight to the lens, and the galaxies that inhabit it all contribute weak lensing effects that perturb the light rays and cause systematic error in the time-delay distance inference if they are not accurately accounted for. (The three component distances that go into the time-delay distance are illustrated at the bottom of the panel). Dark matter simulation and visualization: Tom Abel and Ralf Kaehler

2.2 From time delays to cosmological parameters

2.2.1 Distance measurements

With the lens image, time delays, and lens galaxy’s stellar kinematic data, we can constrain the time-delay distance \(D_{\Delta \mathrm{t}}\) and/or the distance to the lens \(D_\mathrm {d}\). i.e., we can obtain the marginalized posterior probability distribution \(P(D_{\Delta \mathrm{t}})\), \(P(D_\mathrm {d})\) and/or \(P(D_{\Delta \mathrm{t}},D_\mathrm {d})\) (e.g., Refsdal 1964; Paraficz and Hjorth 2009; Jee et al. 2019; Birrer et al. 2016, 2019; Wong et al. 2020). This in turn allows us to measure cosmological parameters, since the distances depend on both the cosmological model parameters and the lens/source redshifts, as we describe below.

The Friedmann-Lemaître-Robertson-Walker metric that describes a homogeneous and isotropic universe like ours is

where c is the speed of light, t is the cosmic time, a(t) is the cosmic scale factor (normalized to 1 today at \(t_0\), i.e., \(a(t_0)=1\)), \(\chi\) is the comoving radial coordinate, \((\vartheta , \varphi )\) are the angular coordinates on a unit sphere, and \(f_{K} (\chi )\) is the comoving angular diameter distance that depends on the spatial curvature K as

The angular diameter distance between two redshifts \(z_1\) and \(z_2\) is

As an example, the expression of \(\chi (z_1,z_2)\) in the cosmological model, \(\Lambda\)CDM, with density parameters \(\Omega _{\mathrm{m}}\) for matter, \(\Omega _{\mathrm{k}}\) for spatial curvature, and \(\Omega _{\Lambda }\) for dark energy described by the cosmological constant \(\Lambda\), is

where H\(_0\) is the Hubble constant, and \(K=-\Omega _{\mathrm{k}}\mathrm{H}_0^2/c^2\) is the spatial curvature. Consequently, the angular diameter distance \(D_{\mathrm{A}}\) depends on {H\(_0\), \(\Omega _{\mathrm{m}}\), \(\Omega _{\mathrm{k}}\) and \(\Omega _{\Lambda }\)} in the \(\Lambda\)CDM cosmological model. Since \(D_{\Delta \mathrm{t}}\) is a combination of angular diameter distances between the observer, lens and source, we can use \(P(D_{\Delta \mathrm{t}},D_\mathrm {d})\) to infer cosmological parameters, particularly the Hubble constant.

2.2.2 The Hubble constant

From Eqs. (4), (6), (7), (8), the time-delay distance \(D_{\Delta \mathrm{t}}\) is primarily sensitive to H\(_0\), in fact inversely proportional. This is illustrated in Fig. 2, which shows the cosmological constraint on \(\Omega _{\mathrm{m}}\) and H\(_0\) from the \(D_{\Delta \mathrm{t}}\) measurement to the strongly lensed quasar system HE0435\(-\)1223 (Wong et al. 2017); the constraint contours are oriented nearly vertically, with most constraints on H\(_0\). The tilt of the contours from vertical depends on the lens and source redshifts, with lower lens redshifts leading to smaller tilts and thus more constraining power on H\(_0\).

The distance to the lens, \(D_\mathrm {d}\), also places constraints on the cosmological parameters, but the dependence on H\(_0\) is more correlated with other cosmological parameters. Therefore, for the same relative uncertainty in distance measurements, \(D_{\Delta \mathrm{t}}\) provides tighter constraints on H\(_0\) than \(D_\mathrm {d}\). Nonetheless, the combination of the two distances provides even better constraint on H\(_0\) (e.g., Birrer et al. 2019; Wong et al. 2020; Yıldırım et al. 2020).

Image reproduced with permission from Wong et al. (2017), copyright by the authors

Constraint on the matter density parameter \(\Omega _{\mathrm{m}}\) and the Hubble constant H\(_0\) in the flat \(\Lambda\)CDM cosmological model from the \(D_{\Delta \mathrm{t}}\) measurement to HE0435\(-\)1223Ṫhe contours indicate the 68% and 95% credible regions, and the nearly vertical constraint shows that \(D_{\Delta \mathrm{t}}\) is primarily sensitive to H\(_0\).

Since we directly measure distances \(D_{\Delta \mathrm{t}}\) and \(D_\mathrm {d}\) from time-delay lensing and kinematics, our inference on H\(_0\) depends on the background cosmological model, as evidenced by Eq. (7), even though often \(D_{\Delta \mathrm{t}}\) depends mostly on H\(_0\) and only weakly on other cosmological parameters. Therefore, the H\(_0\) measurement from time-delay lensing needs to specify the background cosmological model. Given the current Hubble tension in flat \(\Lambda\)CDM model, the H\(_0\) measurements from lensing are often quoted for the flat \(\Lambda\)CDM model for direct comparison. To infer H\(_0\) in more general cosmological models with less dependence on the model assumptions, one way is to use the lensing distances to anchor the relative distance scale from supernovae of Type Ia (SNe Ia), as shown by, e.g., Jee et al. (2019); Taubenberger et al. (2019); Arendse et al. (2020).

2.2.3 Beyond the Hubble constant

First and foremost, if the Hubble tension is verified through strong lensing measurements and other cosmological probes, then this has great implications for physics. We will need new physics beyond the current standard flat \(\Lambda\)CDM to describe our Universe, which could be, for example, new relativistic species or an early dark energy phase.

In this light, measuring cosmological parameters from lensing distances in these new physical models will be important to shed light on the viable models for our Universe. Even though a single lens system provides mostly constraints on H\(_0\), as shown in Fig. 2, the different tilts of the constraint contours in this plot for different lens/source redshifts reduce cosmological parameter degeneracies. This results in cosmological constraints on parameters in addition to H\(_0\) once we have a sample of \(\gtrsim\)10 lenses at different redshifts. Furthermore, the cosmological constraints from time-delay lensing are oriented differently in the cosmological parameter space compared to that of other cosmological probes, especially in cosmological models that extend beyond the flat \(\Lambda\)CDM model. Therefore, the combination of time-delay lensing with these probes, including SNe Ia (Scolnic et al. 2018; Brout et al. 2021) and Cosmic Microwave Background (Planck Collaboration et al. 2020), is powerful in constraining cosmological parameters and models (e.g., Linder 2004, 2011; Suyu et al. 2010; Jee et al. 2016; Arendse et al. 2020; Krishnan et al. 2021.

2.3 The mass-sheet degeneracy

The above program to infer cosmological models from observed strong lens time delays depends critically on our ability to model accurately the total projected gravitational potential of the lens system. This model is constrained by a number of observables, separate from the time delays. Most important of these observables is the image of the “Einstein ring,” which constrains the lens model via the mapping of deflection angles (and thus derivatives of the lensing potential) across the image. This information is complemented by spectroscopy of the deflector galaxy: the kinematics of the stars in the lens galaxy provide independent physical constraints on the lens model. Also important are the observed properties (e.g., position, brightness, color) of the deflector’s neighbor galaxies, which inform the modeling of the mass distribution outside the main deflector (whether in the lens plane, or along the line of sight). Even with all possible physical components of the mass distribution included in the model and constrained by observations, some key flexibility remains. Parameter degeneracy, where very similar predictions arise from multiple different parameter combinations, is a generic feature of predictive models. However, lens models contain a particular exact degeneracy that dominates the uncertainty in the time-delay predictions.

The so-called “Mass-Sheet Degeneracy” can be expressed as a transformation of the lens equation involving a rescaling of the source position and deflection angle, plus a corresponding additional deflection equivalent to the lensing effect of a uniform convergenceFootnote 1 field, or “mass sheet” (Falco et al. 1985):

The observed image positions are invariant under this transformation, while the Fermat potentials are re-scaled by the same mass-sheet parameter \(\lambda\), such that \(\Delta \Phi _{\lambda } = \lambda \Delta \Phi\) (and therefore, for a given cosmology, \(\Delta t_{\lambda } = \lambda \Delta t\)).

The convergence of the lens transforms as follows:

In practice, lenses are modeled by assuming some functional form for \(\kappa (\varvec{\theta })\), and then inferring the parameters of that function. The mass-sheet transformation (MST) in Eq. (10) shows that there will be sets of model parameter combinations that are related to each other by (approximate) mass-sheet transformations with various values of \(\lambda\), which all yield the same (or very similar) observed images but which predict different Fermat potentials/time delays (see examples in Fig. 3).

Image reproduced with permission from Birrer et al. (2020), copyright by ESO

Illustration of the mass-sheet degeneracy: a range of deflector mass density profiles yield identical predicted image positions. In this figure, a composite (stellar, dotted lines, plus dark matter, dashed lines) model lens galaxy has its density profile transformed by an approximate MST (in the notation of the figure \(\lambda _{\mathrm{c}}\) is the parameter defined as \(\lambda\) in this article via Eq. (9)), leaving the Einstein radius unchanged: imaging data alone cannot distinguish between the four models shown. Non-lensing data, such as stellar kinematics, are required to break the degeneracy.

Propagating the transformed quantities through the equations that predict the time delay, it can be shown that the inferred time-delay distance and Hubble constant transform as

Applying an MST with \(\lambda > 1\) to a gravitational lens model leaves the image positions unchanged but moves the source angular position \(\lambda\) times farther from the optical axis. If the model time-delay distance is also scaled down by a factor of \(\lambda\) (bringing the observer, lens and source closer together) this exactly offsets the effect of the MST on the predicted time delay (see Fig. 4 for an illustration). This model will now fit both the image position data and the time-delay data just as well as the un-transformed model. This is the source of the potential systematic error on H\(_0\): the wrong distance can be inferred when jointly fitting observed image positions (or Einstein Ring images) and measured time delays. The MSD can be broken with information about the absolute size or luminosity of the source, or with non-lensing information about the mass distribution in the system.

Simultaneously applying a mass-sheet transformation to a lens model and re-scaling the Hubble constant by the same transformation parameter \(\lambda\) yields a model with both unchanged image positions and time delay. An extreme case of \(\lambda = 1.5\) is shown: under this MST, the lens model becomes 50% more massive but has a negative uniform convergence added to it, and the source position has to move 50% farther from the optical axis (in angle) while the distances need to be scaled downwards by 50% to keep the image positions fixed and predict the same time delay as in the un-transformed model

One way to break the mass-sheet degeneracy is to assume a simply parameterized density profile for the deflector. In this approach, in general, the set of parameter combinations related to each other by the MST is quite small (e.g., because the mass density profile of these models typically have specific shapes such as a power-law radial profile). To put it another way, applying the MST to one of these density profiles will yield a new profile that is not in the assumed model family: it is not possible to approximate the transformed density profile with the assumed functional form. We might consider such an approach to be “assertive,” in the sense that it involves asserting that we have very good prior information in favor of that particular density profile functional form.

At the opposite extreme, we might consider a “conservative” approach of including the MSD parameter \(\lambda\) in the model explicitly, along with some additional model flexibility, and then marginalizing over it. We consider this approach conservative because the model flexibility is maximally degenerate with distances and therefore H\(_0\), equivalent to assuming we know nothing about the mass profiles of galaxies. Prior probability densities for \(\lambda\) and the additional model components will be required, perhaps learned from simulations or other datasets, such as for example non time delay lenses (Birrer et al. 2020).

In conclusion, in either the assertive or conservative approach, additional information is needed to constrain the degenerate lens models. The information can be in the form of theoretically or empirically motivated priors or assumptions, or on non-lensing data such as stellar kinematics.

3 History of time-delay cosmography

3.1 Pioneering days: 1964–1999

The suggestion by Refsdal (1964) that gravitational lens time delays could be used to measure a physical distance, and therefore the Hubble Constant, preceded the discovery of the first multiply imaged quasar (Walsh et al. 1979) by 15 years. Detection of lensed quasars and monitoring of their light curves continued in the eighties and nineties, culminating in the first robust time-delay measurements (Kundic et al. 1997; Schechter et al. 1997). The measurement of time delays was particularly controversial during the nineties as the quality of the early data allowed for multiple estimated values (Press et al. 1992a, b), owing to the combined effects of gaps in the data, and microlensing noise in the optical light curves. This problem was solved definitively at the turn of the millennium with the beginning of modern monitoring campaigns, characterized by high cadence, high precision, and long duration, both at optical and radio wavelengths (Fassnacht et al. 1999, 2002; Burud et al. 2002; Hjorth et al. 2002; Eigenbrod et al. 2005).

As robust time delays started to become available, the focus of the controversy shifted to the modeling of the mass distribution of the lens. Before the year 2000, the only available model constraints were the quasar image positions, time delays, and to a lesser extent the flux ratios (limited by microlensing, variability, and differential extinction). Thus, the best one could do was to assume a very simple form for the lens mass distribution, such as a singular isothermal sphere (Koopmans and Fassnacht 1999), and to neglect the effects of structure along the line of sight. As a result of these necessary but overly simplistic assumptions, the apparent random errors grossly underestimated the total uncertainty, leading to measurements that were apparently significantly inconsistent between groups or with those from other techniques (Kochanek and Schechter 2004). At the opposite end of the spectrum, pixellated or “free-form” models that attempted to reconstruct the mass distribution or gravitational potential on a grid were underconstrained by the data and therefore their results and estimated uncertainties depended strongly on the regularization scheme and prior (Williams and Saha 2000).

Since recognizing these problems, the community has been pursuing high-quality data for each lens system, as discussed in the next section.

3.2 Modern times: 2000–2020

We focus here on cosmography with lensed quasars in the past two decades. New types of strongly lensed transients, such as lensed supernovae (e.g., Kelly et al. 2015; Goobar et al. 2017; Rodney et al. 2021) and lensed gamma-ray bursts (e.g., Paynter et al. 2021), are starting to be detected in recent years and will be discussed in Sect. 4.

To infer the distances \(D_{\Delta \mathrm{t}}\) and \(D_\mathrm {d}\), we need to model the lens mass distribution, particularly to obtain the Fermat potential at the quasar image positions where we measure the time delays. In the past decade, new imagers and spectrographs on space-based and ground-based telescopes have enabled the acquisition of high-SNR and high-resolution data of lens systems, particularly the time delays \(\varvec{\Delta t}\) (Sect. 3.2.2), imaging data \(\varvec{d}_{\mathrm{lens}}\), kinematic data \(\varvec{d}_{\mathrm{kin}}\) of the foreground deflector (Sect. 3.2.3), and environment data \(\varvec{d}_{\mathrm{env}}\) of the lens (Sect. 3.2.4). We first describe how lenses are found in Sect. 3.2.1 before diving into the assembly of the necessary ingredients and analysis in Sects. 3.2.2–3.2.4, and we present the latest cosmographic results from the past two decades of efforts in Sect. 3.2.5.

3.2.1 Finding new lenses

Strongly lensed quasars are inherently rare (and the other lensed transients with shorter durations of the transients are even rarer), with about 1 in \(\sim\)10\(^4\) massive galaxies acting as a lens. After the first discoveries of lensed quasars (e.g., Walsh et al. 1979), the Cosmic Lens All-Sky Survey (CLASS; Myers et al. 2003; Browne et al. 2003) systematically searched for lensed quasars by looking for flat spectrum radio sources with multiple components, which provided the first large sample of \(\sim\)20 lensed quasars. The next large sample came from the systematic search through the SDSS Quasar Lens Search (SQLS; Oguri et al. 2006; Inada et al. 2012; More et al. 2016), which performed a systematic search through the SDSS quasar sample. In recent years, wide-field imaging surveys that go deeper with better image quality (e.g., PanSTARRS, DES, ATLAS and HSC surveys, and the space telescope Gaia) have been a treasure trove for lensed quasar searches with now several hundreds of lensed quasars discovered (e.g., Agnello et al. 2015; Lemon et al. 2017; Ostrovski et al. 2017; Krone-Martins et al. 2018; Agnello et al. 2018; Rusu et al. 2019; Chan et al. 2020; Lemon et al. 2022a).

From these new lensed quasars, we can form a cosmological sample by acquiring the necessary ingredients: redshifts, time delays, high-resolution imaging, lens stellar kinematics for mass modeling, and wide-field imaging/spectroscopy data for characterizing the environment. The lens and source redshifts which could either come from the spectroscopic observations used to confirm the nature of the lens candidates or from further spectroscopic observations. We describe the other ingredients and analysis in the following subsections.

3.2.2 Measuring time delays

Obtaining time-delay measurements of lensed quasars requires dedicated monitoring. The basic idea is to detect variations in the brightness of the quasar images in a lens system and use these variations to determine the time delay between the multiple images, given that the intrinsic brightness variations of the quasar manifest in each of the multiple images. While simple in theory, practical implementation requires high cadence to detect the often small variations in the quasar brightness. Furthermore, the brightness of the quasars could also change due to microlensing by the stars in the foreground lens galaxy as these stars move past the sightline of the quasar images. Measuring the delays therefore requires disentangling the intrinsic quasar variability and the extrinsic microlensing variability.

Monitoring has been carried out in radio wavelengths (e.g., Fassnacht et al. 2002; Rumbaugh et al. 2015) and optical wavelengths (e.g., Kochanek et al. 2006b; Vuissoz et al. 2008; Paraficz et al. 2009; Courbin et al. 2011). Radio monitoring has the advantage that microlensing is minimal at these wavelengths given the large source size relative to the Einstein radii of the lensing stars, so the changes in the light curves of each quasar image mostly originate from intrinsic quasar variability. However, variability of quasars are typically small in the radio wavelengths, making it more difficult to detect features in the light curves to measure delays (Rumbaugh et al. 2015). Despite this, it was with radio monitoring that (Fassnacht et al. 2002) measured all three independent time delays of the quad lens system CLASS B1608+656 with uncertainties of a few percent, making this the first system that was used for accurate cosmological distance measurements (Suyu et al. 2010; Jee et al. 2019).

Most of the monitoring campaigns in the past decade have used optical facilities, notably by the COSmological Monitoring Of GRAvItational Lenses (COSMOGRAIL; Courbin et al. 2011) using a network of 1–2-m class telescopes across the globe and targeting the brighter lensed quasar systems. In the optical, the microlensing effects on the light curves are often prominent, and disentangling intrinsic and extrinsic variability has traditionally required decade-long light curves to obtain accurate time-delay measurements (Tewes et al. 2013). While COSMOGRAIL has monitored lenses in the last two decades, it is difficult to scale up on the number of lenses for cosmography in this fashion. The breakthrough in the past decade achieved by COSMOGRAIL came from the following ingredients, which allowed measurement of delays within 1–2 years of monitoring: (i) high-cadence (daily) observations, (ii) high signal-to-noise (SNR) ratio (\(\gtrsim\) 1000) of the lensed quasars images with milli-mag uncertainties on the photometries, and (iii) new curve-shifting and delay measurement techniques that incorporate the uncertainties due to microlensing.

Figure 5 is an example of high-cadence and high-SNR monitoring fulfilling (i) and (ii) of the lensed quasar system DES0408−5359, showing the light curves of the three brightest quasar images (A, B and D). The small data points are the high-cadence and high-SNR ones from the WFI instrument at the ESO/MPG2.2m telescope, whereas the larger data points are more sparse and noisier from the smaller 1.2-m Euler telescope (Courbin et al. 2018). The high-cadence and SNR data points show high frequency variations (e.g., the structure between the black solid lines) that can only come from quasar intrinsic variability and not microlensing, effectively allowing the time delays to be measured within a single year of monitoring.

Image reproduced with permission from Courbin et al. (2018), copyright by ESO

Top panel: light curves of the three brightest quasar images A, B and D of the strong lens system DES J0408−5359 over merely one monitoring season. The solid circular data points correspond to the high-cadence and high-SNR observations from the WFI instrument on the ESO/MPG 2.2-m telescope, whereas the large faint data points correspond to the measurement from the 1.2-m Euler telescope. The black lines show high-frequency variability in image A and B that allow the delays to be measured with only one season of monitoring. Bottom left (right) panel: time-shifted light curves of each of the three quasar images without (with) corrections for microlensing distortions. By modeling the extrinsic variability of each light curves due to microlensing, the bottom right panel shows a good match in the intrinsic quasar light curves of the 3 images.

Various techniques have been developed in the past decade to infer time delays, including spline fitting in the Python Curve Shifting package (PyCS; Tewes et al. 2013), Gaussian processes (Hojjati et al. 2013), smoothing and cross-correlation (e.g., Aghamousa and Shafieloo 2015), and Bayesian curve fitting with a latent continuous-time Ornstein–Uhlenbeck process (Tak et al. 2017). To crash test these approaches for time-delay measurement, a time-delay challenge has been set up where an “evil” team simulated mock light curves with the input delays hidden, and subsequently multiple “good” teams with various time-delay methods tried to measure the delays from these light curves as part of a blind test of their methods’ performances (Dobler et al. 2015; Liao et al. 2015). The results of the challenge demonstrate that some of the methods reach the target precision and accuracy in the delay measurements for cosmography, particularly the PyCS approach, which has been the basis of the COSMOGRAIL time-delay measurements.

A summary of the time-delay measurements of 37 galaxy-scale lensed quasar systems is presented in Table 3 of Millon et al. (2020b), where the majority of the time delays have been measured by the COSMOGRAIL collaboration through 15 years of monitoring. In addition to these, the time delays of 6 lensed quasars with the high-cadence and SNR monitoring are presented by Millon et al. (2020a).

3.2.3 Modeling the mass distribution of the main deflector and its neighbours

We describe the modeling process to predict the lensing and kinematic observables to compute the lensing likelihood \(P(\varvec{d}_{\mathrm{lens}}\vert \varvec{\eta }_{\mathrm{lens}}, D_{\Delta \mathrm{t}}, D_\mathrm {d})\) and kinematic likelihood \(P(\varvec{d}_{\mathrm{kin}}\vert \varvec{\eta }_{\mathrm{lens}}, D_{\Delta \mathrm{t}}, D_\mathrm {d})\), where \(\varvec{\eta }_{\mathrm{lens}}\) are the lens mass model parameters.

Let us consider a high-resolution image of the lens system as the lensing data \(\varvec{d}_{\mathrm{lens}}\), with an example shown in Fig. 6 panel (a). Studies in the past decades have shown that the thousands of intensity pixels especially of the lensed quasar host galaxy provide stringent constraints on the lens mass distribution (Kochanek et al. 2001; Dye and Warren 2005; Suyu et al. 2009, 2014; Wong et al. 2017; Chen et al. 2019). In order to use this data set, we need to model the image (panel (b)) with various components of light in the system, which are the lensed AGN (panel (c)), the lensed AGN host galaxy (panel (d)), and the primary deflector (panel (e)). To produce panels (c)–(e), we need to have a good model of the point spread function (PSF) of the telescope, especially for panel (c) with the bright AGN (point sources). We further require a lens mass model to ensure that a modeled AGN position on the source plane produces the multiple lensed AGN positions on the image plane that match the observations. The same lens mass model, together with a model of the surface brightness distribution of the AGN host galaxy (panel (f)), is also used to predict the lensed AGN host light shown in (d). The lens mass model accounts for all deflectors that have significant strong lensing effects on (c) and (d)Footnote 2. In this specific example, we show that the primary deflector and the nearest galaxy G1 have their mass distributions included in the strong lens model (panels (g) and (h)). The modeling procedure therefore requires a high number of parameters to describe the PSF, the deflectors’ mass distributions, the primary deflector’s light distribution, and the AGN host galaxy light distribution (up to hundreds of parameters, although some can be linear parameters and be optimized/marginalized analytically).

Figure based on mass models from Wong et al. (2017)

a High-resolution HST image of HE0435. b model of HE0435, composed of the three components of the light distribution in c the lensed AGN light, d the lensed AGN host galaxy light, and e the primary lens galaxy light. f The model AGN host galaxy light distribution. Producing the light distributions in c, d requires a model of the lens mass distribution, the AGN source position, and the AGN host galaxy light distribution. g, h Convergence map for the primary lens and the nearby galaxy respectively.

The PSF can be built from stars in the wider field of view (FOV) of the lensing image. However, due to color differences between the stars and the AGN, and the often limited number of stars in the FOV, the PSF model built from stars rarely match the AGN light down to the noise level. Therefore, modeling methods have been developed in recent years to simultaneously reconstruct the PSF together with the lens mass model by making use of the multiple AGN images (e.g., Chen et al. 2016; Wong et al. 2017; Birrer et al. 2017).

The deflectors’ mass distributions are often described using physically motivated models. One frequently used mass model is an elliptical mass distribution with radial power-law profile, where the three-dimensional density \(\rho (r)\propto r^{-\gamma '}\) with \(\gamma '\) as the constant power-law slope and \(\gamma '=2\) as the isothermal profile. Multiple studies with dynamics, lensing and X-rays have shown galaxies to be well described by a total power-law out to several effective radii (Cappellari 2015; Gavazzi et al. 2007). Furthermore, the pixelated lens potential reconstruction of the complex interacting lens galaxies in the system B1608+656 showed only small (within \(\sim 2\)%) deviations from the power-law model, validating the usage of the power-law model for the lens mass distribution, up to a mass-sheet transformation. A second mass model is a composite of baryonic matter (based on the stellar light distribution assuming a uniform mass-to-light ratio) and theoretically motivated dark matter distribution, typically parametrized as a Navarro et al. (1997) profile. Multiple studies show that these models also describe well lens galaxies (Shajib et al. 2021, and Fig. 7).

Current analysis of time-delay lenses have shown that these two models provide very similar constraints on the time-delay distances within a few percent per lens, and within 1% from a sample of 6 lenses (Millon et al. 2020). Both mass models have been employed for the primary deflector to quantify uncertainties due to these mass model assumptions (Suyu et al. 2014; Wong et al. 2017; Birrer and Treu 2019; Rusu et al. 2020; Shajib et al. 2020), and have been shown to fit the data well. From a physical point of view one can map residual freedom arising from the mass-sheet degeneracy (Schneider and Sluse 2013) as deviations from these baseline models, as shown in Fig. 7.

Physical interpretation of residual uncertainty allowed by mass-sheet degeneracy on mass density profile. Shajib et al. (2020) modeled a set of non-time-delay lens galaxies with exquisite HST images and unresolved stellar velocity dispersion of the deflector fully accounting for the mass-sheet degeneracy and expressed the results as deviations from standard “composite” (top row) and power-law mass profiles (bottom row). The standard composite model comprising a NFW (Navarro et al. 1997) dark matter halo and a stellar component with constant mass-to-light ratio is consistent with the data, although a small amount of contraction/expansion of the halo (top left panel) or a small gradient in mass-to-light ratio (top right panel) cannot be ruled out. Similarly, a power-law mass density profile is consistent with the data, although small deviations cannot be ruled out by the data (purple bands in the bottom panels). See (Shajib et al. 2020) for more description. When available, additional information—such as spatially resolved stellar kinematics—reduces the residual freedom and thus tighten the bounds on H\(_0\) when applied to time-delay lenses

The profiles of secondary deflectors in the field of view are less crucial as they are not the primary strong lens. Nonetheless, if they are sufficiently close to the strong lens system, as shown in Fig. 6 panel (a), then these secondary deflectors need to be included in the strong lens model. In the case where the secondary deflectors are at the same redshift as the primary deflector (e.g., due to natural clustering of galaxies into galaxy groups), all the deflectors can still be modeled via a single lens plane. The modeling to predict the lensing data \(\varvec{d}_{\mathrm{lens}}\) will actually not depend directly on the distances \(D_\mathrm {d}\) and \(D_{\Delta \mathrm{t}}\). In the case where a secondary deflector is at a different redshift than the primary lens, then multi-lens plane mass modeling is required. Multi-plane lensing requires a cosmological model and redshift measurements of the deflectors to trace the light rays that depend on the distance between the different deflector planes (e.g., McCully et al. 2017). Nonetheless, for the case of HE0435−1223 as illustrated in Fig. 6, one can still define an effective \(D_{\Delta \mathrm{t}}\) for the system for cosmographic inference despite the secondary deflector having a different redshift from the primary deflector.

The deflector light distribution is perhaps the simplest to model since the Sérsic (1968) profile provides a good overall description of galaxy light. Often two or three Sérsic components are needed to fit to the primary deflector light distribution (Wong et al. 2017; Shajib et al. 2020).

The AGN host galaxy light distribution can be modeled in several different ways, with the caveat that the model has sufficient flexibility to encapsulate the true underlying light distribution to avoid possible biases in the mass model parameters. One way is to model the host light distribution on a regular grid of pixels (Warren and Dye 2003; Suyu et al. 2006) or adaptive pixel grids (Dye and Warren 2005; Vegetti and Koopmans 2009). Another way is to use shapelets combined with Sérsic profiles (Birrer et al. 2015). A recently developed approach is to describe the source through wavelets (Joseph et al. 2019; Galan et al. 2021), although it has not yet been applied to time-delay lens analysis.

With models of the PSF, deflector mass and light distributions, and the AGN host light distribution, we can predict the pixel intensities of the lens system shown in panel (b), which we can then compare to the observations (panel (a)) to compute the lensing likelihood \(P(\varvec{d}_{\mathrm{lens}}\vert \varvec{\eta }_{\mathrm{lens}}, D_{\Delta \mathrm{t}}, D_\mathrm {d})\). The mass model can also predict the time delays between the quasar images in order to compare to the measured time delay (Sect. 3.2.2) and compute the time-delay likelihood \(P(\varvec{\Delta t}\vert \varvec{\eta }_{\mathrm{lens}}, D_{\Delta \mathrm{t}}, D_\mathrm {d})\). The primary deflector mass model can be further used to predict the stellar kinematics to compare to spectroscopic measurements \(\varvec{d}_{\mathrm{kin}}\), to compute the kinematic likelihood \(P(\varvec{d}_{\mathrm{kin}}\vert \varvec{\eta }_{\mathrm{lens}}, D_{\Delta \mathrm{t}}, D_\mathrm {d})\). With single-aperture averaged velocity dispersion measurements of the primary deflector, which are the typical data obtained from current facilities, often spherical Jeans modeling suffices to fit to the observations (Suyu et al. 2010; Birrer et al. 2016). Spatially resolved kinematic maps of the deflector would allow us to constrain more flexible mass models and further tighten constraints on the lensing distances for cosmography (Shajib et al. 2018; Yıldırım et al. 2020; Birrer et al. 2020; Birrer and Treu 2021). This information was until recently obtainable for only the brighter systems. However, as we discuss in Sect. 4, new and upcoming instruments and facilities will provide it for large samples of galaxies.

3.2.4 Characterizing the line of of sight

As described in Sect. 2.3, the presence of external convergence along the line-of-sight affects the inference of \(D_{\Delta \mathrm{t}}\). We distinguish between two types of external convergence. The first type associated with mass structures located sufficiently far (in projection on the sky) from the strong lens system such that their “flexion shifts” (McCully et al. 2017) are small and they can be approximated as mass sheets, with an overall effective external convergence as \(\kappa _{\mathrm{ext}}\). In this case, the inferred \(D_{\Delta \mathrm{t}}\) scales with the factor of (\(1-\kappa _{\mathrm{ext}}\)) via Eq. (11) with \(\lambda =(1-\kappa _{\mathrm{ext}})\). The second type of external convergence is associated with nearby massive galaxies located close (typically within \(\sim 10\)”) to the strong lens system. These galaxies have flexion shifts that are substantial and these galaxies need to be incorporated directly into the strong lens modeling as additional non-linear deflectors, given their non-linear effect on the \(D_{\Delta \mathrm{t}}\) measurements (McCully et al. 2017).

To incorporate the line-of-sight effects, the first step is to distinguish between these two types of external convergence. This requires measuring/estimating the redshift of galaxies near the strong lens, estimating the masses of these galaxies through e.g. stellar population synthesis and relations between stellar mass and halo mass. It also requires detecting and characterizing any galaxy groups or galaxy clusters near the lens system, as they are thought to have group/cluster scale halos that are more massive than those associated with individual galaxies; these galaxy groups/clusters can have significant flexion shift even if located further away (\(\sim\)arcmin) in projection (Keeton and Zabludoff 2004). Wide-field imaging and spectroscopic observations around the strong lens system are therefore necessary (e.g., Momcheva et al. 2015; Wilson et al. 2016; Sluse et al. 2017; Rusu et al. 2017, 2020).

Once we identify the galaxies with negligible flexion shifts (the first type), there are multiple complementary approaches to estimate the external convergence, which include (i) using the statistical properties of the environment around the lens system together with large numerical simulations of structure formation, and (ii) weak gravitational lensing around the strong lens system. We explain each in turn.

One effective way to characterize the environment around a strong-lens system is through counts of galaxies (e.g., Fassnacht et al. 2011) and comparison to those from numerical simulations of structure formation (Hilbert et al. 2007, 2009). Lines of sight in the simulations with, e.g., similar relative galaxy number counts as the strong lens system, are then selected and used to reconstruct the distribution of the convergence along the line-of-sight, \(\kappa _{\mathrm{ext}}\) (Suyu et al. 2010). This method can be extended to more complex summary statistics, such as including weights in the galaxy counts that take into account the mass of the galaxy, the (projected) separation of the galaxy from the strong lens system, and the galaxy redshift, i.e., quantities which affect the strength of lensing and hence \(\kappa _{\mathrm{ext}}\) (Greene et al. 2013). This approach requires a sufficiently large number of control fields, to accurately measure the relative galaxy number counts around the strong-lens system; relative galaxy counts are used since they are less sensitive to the inputs of the numerical simulations such as the cosmological parameters than the absolute galaxy count. (Rusu et al. 2017, 2020) have optimized this approach in terms of the weights and aperture for obtaining the \(\kappa _{\mathrm{ext}}\) distribution. Figure 8 shows an example of the \(\kappa _{\mathrm{ext}}\) probability distribution reconstructed based on number counts (in gray).

With high-quality and wide-field imaging, we can also measure the shapes of the galaxies around the strong-lens system and use these shapes to infer \(\kappa _{\mathrm{ext}}\) through the weak gravitational lensing effect. In short, the shapes of galaxies in different regions of the sky are averaged over, and since (physically unrelated) galaxies’ shapes are uncorrelated, any ellipticity in the average shape is due to weak lensing shear by line-of-sight structures. Measurements of the shear field allows us to reconstruction \(\kappa _{\mathrm{ext}}\). Tihhonova et al. (2018, 2020) have demonstrated the weak lensing reconstruction for two strong lens systems, HE0435−1223 with negligible \(\kappa _{\mathrm{ext}}\) and B1608+656 with significant \(\kappa _{\mathrm{ext}}\). As shown in Fig. 8, the results of the \(\kappa _{\mathrm{ext}}\) reconstructed from weak lensing agree well with the \(\kappa _{\mathrm{ext}}\) obtained from galaxy number counts.

Image reproduced with permission from Tihhonova et al. (2020), copyright by the authors

External convergence \(\kappa _{\mathrm{ext}}\) probability density distribution at the gravitational lens system B1608+656 based on HST imaging data in F814W. Color distributions (orange, purple and blue) are different weak lensing reconstructions using different filters/techniques (Tihhonova et al. 2020), whereas the gray distribution is from galaxy counts from Suyu et al. (2010). The \(\kappa _{\mathrm{ext}}\) distributions from the weak lensing and galaxy counts approaches agree well.

3.2.5 Putting it all together: blind cosmological measurements

By combining the time-delay measurements, the lens mass models, and characterisations of the external convergence along the line of sight, the H\(_0\) lenses in COSMOGRAIL’s Wellspring (H0LiCOW; Suyu et al. 2017) collaboration, together with COSMOGRAIL (Courbin et al. 2011) and Strong-lensing at High Angular Resolution Programme (SHARP; Chen et al. 2019), measured the distances \(D_{\Delta \mathrm{t}}\) and \(D_\mathrm {d}\) to 6 lensed quasar systems (Wong et al. 2020). The technique for measuring the distances was developed and demonstrated on the first lens system B1608+656 (Suyu et al. 2010; Jee et al. 2019). Similar analysis approaches were then applied to the remaining 5 lens systems, done crucially through a blind analysis to avoid confirmation bias. From the posterior measurement of \(P(D_{\Delta \mathrm{t}},D_\mathrm {d})\) for each of the lenses (or \(P(D_{\Delta \mathrm{t}})\) for two of the lens systems with multiplane strong lensing), constraints on cosmological parameters are inferred through the relations in Sect. 2.2.1.

In Fig. 9 top panel, we show the posterior probability distribution of H\(_0\) from these 6 lensed quasars based on two families of well-motivated models for the primary lens galaxy mass distribution (Wong et al. 2020; Suyu et al. 2010; Jee et al. 2019; Suyu et al. 2014; Chen et al. 2019; Wong et al. 2017; Birrer et al. 2019; Rusu et al. 2020). The H\(_0\) from each lens are statistically consistent with each other, allowing these lenses to be combined to yield a joint constraint on H\(_0\) of \(73.3^{+1.7}_{-1.8}\,{\,\mathrm {km}}\,\mathrm{s}^{-1}\,\mathrm{Mpc}^{-1}\) in flat \(\Lambda\)CDM, i.e., with 2.4% relative uncertainty, achieving the goals set out by the H0LiCOW program (Suyu et al. 2017). The STRong lensing Insights into the Dark Energy Survey (STRIDES; Treu et al. 2018) collaboration has further expanded the lensed quasar sample and measured the distances to a new lensed quasar system in a similar approach, resulting in \(\mathrm{H}_0=74.2^{+2.7}_{-3.0}\,{\,\mathrm {km}}\,\mathrm{s}^{-1}\,\mathrm{Mpc}^{-1}\) (Shajib et al. 2020). These H\(_0\) measurements from strong lensing agree well with that of the local Cepheids distance ladder (Riess et al. 2021) and are higher than the inference from the cosmic microwave background, making the Hubble tension even more significant.

The Time-Delay COSMOmography (TDCOSMO; Millon et al. 2020) collaboration, which consists of members of H0LiCOW, COSMOGRAIL, STRIDES and SHARP interested in cosmography with lensed quasars, further investigated systematic effects on H\(_0\) measurements, especially given that the mass-sheet degeneracy can manifest itself as degeneracies in the mass profiles (e.g., Schneider and Sluse 2013, 2014; Kochanek 2020; Blum et al. 2020). Millon et al. (2020) considered three main sources of uncertainties on the sample of 7 TDCOSMO lenses and found: (i) lens stellar kinematic measurements that are systematically offset by 10% would only change H\(_0\) at the 0.7% level, within the assertive assumptions; (ii) no bias on H\(_0\) due to incorrect characterization of line-of-sight effects; and (iii) the two families of mass models considered have the flexibility to yield significantly different H\(_0\) and yet the two families of models provide H\(_0\) measurements that agree within 1%, as illustrated in the bottom panels of Fig. 9. To conclude, using well-motivated “assertive” lens mass models, the current sample of 7 lensed quasars is constraining H\(_0\) at the 2% level.

Alternative approaches to the one of TDCOSMO have also been considered in the literature. For example, pixel based (“free form”) methods have continued to improve in methodology and sample size (Coles 2008; Paraficz and Hjorth 2010), although a fundamental issue remains to be addressed. These methods are underconstrained by the data, because typically only quasar positions and time delays are used to constrain the hundreds or thousands of free parameters in the model. Therefore their precision is driven entirely by the implicit or explicit prior on the mass distribution imposed by the regularization schemes. It would be interesting to see this class of models constrained by more information such as extended images or stellar kinematics or, in the case of clusters, large numbers of multiple images (Ghosh et al. 2020). It would also be informative to map the implicit priors to understand what the assumptions corresponds to in terms of physical interpretation. Finally, incorporating a formal blinding mechanism would help build confidence in these methods.

Another interesting approach is that introduced by Oguri (2007) to exploit samples of lenses with relatively little information per system. By necessity, given the relatively small sample size, these early attempts had to rely on strong informative priors to obtain interesting errors. However, as we will discuss at the end of Sect. 4.7, with large enough samples and a proper understanding of the selection function, the hierarchical analysis of many lenses with little information content per system may yield significant precision and accuracy. Measurements of H\(_0\) obtained by the studies discussed in this Section are summarized in Fig. 10.

Images reproduced with permission from [top] Wong et al. (2020), copyright by the authors; and [bottom] from Millon et al. (2020), copyright by ESO

Top panel: H\(_0\) measurements of 6 lensed quasars from the H0LiCOW program in collaboration with the COSMOGRAIL and SHARP programs, based on well-motivated lens mass models. The joint constraint on H\(_0\) in flat \(\Lambda\)CDM from all the lenses has a relative uncertainty of 2.4%. Bottom panels: H\(_0\) constraints from the two families of lens mass models, the power-law model (left) and composite model (right) including one additional lens from STRIDES. While the two families of mass models have the flexibility to yield significantly different H\(_0\), the two families of models provide H\(_0\) measurements that agree within 1%.

Comparison of inferences of H\(_0\) based on time delay cosmography, in \(\Lambda\)CDM cosmology. The measurements are categorized according to (i) assumptions on the mass distribution of the main deflector, “assertive” and “conservative” for simply parametrized models as described in Sect. 2.3 or “free form” for pixellated models, as described in Sect. 3.2.5; (ii) to the amount of information used per lens, “low info” utilizes quasar positions and time delays, “high info” adds the extended surface brightness distribution of the multiple images of the quasar host galaxy (or “Einstein Ring”), stellar kinematics of the main deflector, and number counts or weak lensing to estimate the line of sight convergence. For each measurement we give the reference and the number of time delay lenses. The measurements shown in red have been blinded to prevent experimenter bias

4 Time-delay cosmography in the 2020s

In this Section we review what we consider the main open issues at the moment of this writing (Sect. 4.1), describe the considerable opportunities that the coming decade affords (Sect. 4.2), discuss the considerable challenges that will need to be overcome to make the most of these opportunities, from an observational (Sect. 4.3), modeling (Sect. 4.4), and lens-environmental (Sect. 4.5) point of view. We conclude by presenting some forecasts.

4.1 Open issues

Even though much has been achieved in the past decade, a number of open issues remain to be addressed for time-delay cosmography to take the next step in precision and accuracy. We discuss the three more important ones in the next subsections.

4.1.1 “Everything should be made as simple as possible, but not simpler”

The number one issue is profound, and can be summarized as “what is a good model?” Performing a measurement requires a set of assumptions. In a Bayesian framework those include the choice of parametrization as well as the priors on those parameters. In the specific case of time-delay cosmography, as often is the case in astrophysics, each system—intended as the multiply imaged source and the propagation of photons to the observer—is inherently complex and cannot be described from first principles. For practical reasons, the problem gets separated into a number of steps: (i) the determination of the time delay, (ii) the lensing effect of the main deflector, and (iii) all the smaller deflections along the line of sight. In turn, each one of these steps needs to be further simplified to become tractable. As always, the open issue is how choose the right amount of simplification, or—as Einstein apparently said in the quote used to title this subsection—too much simplification leads to underestimated errors and possibly biases. Too little simplification makes the problem intractable, and/or overestimated errors. Ideally, assumptions and simplifications should be a way of expressing empirical knowledge from other sources. For example, a lot is known about quasars and supernovae and their light curves, and that knowledge informs how they are modeled to infer time delays. Furthermore, when considering samples of lenses, one should also be mindful of further complexity that arises at the population level. A classic example is understanding the selection function (e.g., Sonnenfeld et al. 2015; Baldwin and Schechter 2021) so that it can be modeled to assess and correct for any potential biases.

In the case of time-delay cosmography, the number one issue manifests itself most significantly in the modeling of the gravitational potential of the main deflector galaxy. Assumptions of symmetry or of certain functional forms to describe the mass distribution of the main lens affect significantly the inferred precision. If we assume elliptical mass distributions and mass density profiles described by functional forms that are standard and commonly accepted in the literature on massive elliptical galaxies, we infer a 2% precision on H\(_0\) based on current data. If we relax the radial mass profile assumption to make it maximally degenerate with H\(_0\), the uncertainty grows to 8% (Birrer et al. 2020). Departures from ellipticity do not seem to be equally important at this stage (Van de Vyvere et al. 2022) so one can reasonably conclude that the “true” current uncertainty is between 2–8%. So-called “free-form” models based on pixellated mass distribution or potential reach similar conclusions, given reasonable choices of priors and regularization (Denzel et al. 2021). In some sense, this range of uncertainty expresses the difficulty of taking all we know about elliptical galaxies and applying that knowledge to the systems under study. If take the commonly accepted description of the mass profile of early type galaxies, we end up with 2%. If we know nothing about them, we end up with 8%. As we will discuss in Sect. 4.4, there are two ways to improve what we know about the deflectors of time-delay lenses (Birrer et al. 2020; Birrer and Treu 2021). The first one is to obtain more data on each one of them. The second is to use knowledge extracted from analogs, assuming that we understand and can model the selection functions. Within the second approach, Birrer et al. (2020) demonstrates that the uncertainty can be reduced from 8% to approximately 5% if one combines the TDCOSMO sample with the non time-delay lenses from the SLACS sample.

4.1.2 Perturbations

The second big open issue is the treatment of the line of sight and nearby perturbers. The current approach is described in detail in Sect. 3.2.4 and yields uncertainties that are currently subdominant with respect to other contributors to the cosmography error budget.

However, there are several subtle effects that need to be studied in more detail if one wants to reach percent precision and accuracy. The first one is how to match exactly the identification of the perturbers in the rendering of the statistical lines of sight. The comparison light of sights will not reproduce exactly the configuration of nearby perturbers, so one needs to select lines of sight that contribute the same external convergence, except for the closest and most massive perturbers. This is generally achieved by selecting lines of sight that match statistically the observed distribution of galaxies in an annulus around the lens, excising the central part where the individual perturbers are located. It is also required that the simulated lines of sight match the external shear inferred by the strong lens model of the main deflector. The subtle issues are: (i) avoiding double counting in case some of the simulated lines of sight have significant perturbers (see, e.g., Rusu et al. 2017, for a discussion); (ii) inference of the external shear of the main deflector, introducing covariance between the model of the main deflector, the choice and model of the main perturbers, and the selection of the simulated lines of sight. Another subtle issue is that perturbers are identified as galaxies, and it is very hard to determine whether group-scale halos are present, even with complete spectroscopic catalogs (Sluse et al. 2017). A final subtle issue is related to the numerical simulations used for the estimate of the statistical component of the external convergence. In the current scheme one looks at overdensities (Greene et al. 2013; Rusu et al. 2017) and shear (Tihhonova et al. 2018) to mitigate any dependency of the choice of baryonic physics process in any specific simulation. Furthermore, multiplane ray tracing is performed within the Born approximation. As we discuss in the Sect. 4.5, in this current decade it will be important to develop a unified scheme to eliminate some of the approximations and carry out diverse cosmological simulations to make sure that the error budget associated with the environment and line of sight remains subdominant as the overall precision and accuracy increase.

4.1.3 Double Jeopardy: collecting multiple datasets

The third open issue is logistical. Whereas many experiments in physics and astrophysics are self-contained or based on at most a few instrumental apparatus (e.g., a Cosmic Microwave Background Experiment or a Redshift Survey), time-delay cosmography inherently requires multiple ones. Discovering lenses requires large surveys. Confirming them requires access to a range of telescope for spectroscopy and imaging. Time-delay determination requires high-cadence and high-precision monitoring, which can be done at relatively low angular resolution (\(\sim 1"\)) with the assistance of some higher-resolution imaging. Astrometry and lens modeling require information at \(\sim 10\) mas scales. Stellar kinematics and redshifts usually require large optical and infrared telescopes, ideally at \(\sim 100\) mas resolution or better. Characterization of the line of sight requires wide-field imaging and redshifts. Substantial computational power is required to carry out the inferences as well as produce cosmological numerical simulations that can be used for characterization of the selection function and line of sight. How to optimize and muster these resources is an open issue. Each one of these resources is usually allocated independently, and thus double jeopardy is often an issue. Furthermore, allocating committees are usually reluctant to award a piece of the puzzle without guarantees that the others will be secured. For the determination of time delays, the breakthrough came with guaranteed time on 1-2m class telescopes for multiple years. For the other parts of time-delay cosmography, coordinating and optimizing multiple telescopes remains a big challenge.

4.2 Opportunities

4.2.1 Large samples of multiply imaged QSOs and supernovae

Large samples of multiply imaged QSOs and supernovae will be transformational for time-delay cosmography. First, the measurements are still in a regime where random uncertainties dominate the error budget. Therefore, increasing the sample of known lenses allows one to shrink the uncertainties. Second, having a large pool of systems to choose from allows one to concentrate the follow-up resources on the ones that yield the biggest “(cosmological) bang for the observational buck”. For example, systems with delays estimated to be order 50–100 days are the best suited for time-delay determination with a few percent precision over a single season of monitoring, while it is hard to imagine that such precision can be reached if the time delay is of order a few days. Another example is that systems with a nearby bright star suitable for tip-tilt correction will be easier to target for spatially resolved spectroscopy from the ground using laser guide star adaptive optics. Third, in a landscape where hundreds or more multiply imaged quasars or supernovae are known one can imagine a hybrid approach in which a subset is studied in great detail to inform priors in the modeling of the larger sample.

As reviewed in Sect. 3.2.1, multiply imaged quasars and supernovae are rare in the sky. Only a few hundreds of the former (Lemon et al. 2020, 2022b) and a handful of the latter (Kelly et al. 2015; Goobar et al. 2017) have been found. Examples of discoveries since TM16 are shown in Figs. 11 and 12.

Image reproduced with permission from Schmidt et al. (2022), copyright by the authors

Montage of 30 recently discovered quadruply imaged quasars, imaged with the Hubble Space Telescope and modeled using an automated pipeline. The reader is referred to the original paper for more details and discovery references.

Image reproduced with permission from Kelly et al. (2016), copyright by AAS

Images of multiply imaged Supernova Refsdal. The time delays between images S1–4 are of order days to weeks, while SX is delayed by approximately 1 year.

A novelty of the 2020s will be the discovery and exploitation of multiply imaged supernovae for time-delay cosmography. At the moment of this writing, the first determination of a high-precision time delay for a multiply imaged supernova and resulting determination of the Hubble constant has been submitted for publication, and we expect many more to follow (e.g., Goldstein et al. 2019; Wojtak et al. 2019; Huber et al. 2019; Grillo et al. 2020; Suyu et al. 2020). Lensed supernovae present some advantages and disadvantages with respect to the lensed quasars from the point of view of time-delay cosmology. The main disadvantage is that by being transient they require cadenced surveys or monitoring campaigns to be discovered and cannot be found in single-epoch data. The main advantages are: (i) supernova light curves are not stochastic like those of quasars and thus one can use templates or models to infer the time delay requiring less demanding models (e.g., Pierel and Rodney 2019; Huber et al. 2022; ii) supernova spectra evolve over time and can be used as a new way to measure the time delays (Johansson et al. 2021; Bayer et al. 2021; iii) some supernovae (e.g., Ia) are standardizable candles, which gives an independent handle on the absolute magnification and therefore a way to mitigate the mass-sheet degeneracy, provided that microlensing/millilensing magnifications of the supernova can be quantified accurately (Oguri and Kawano 2003; Foxley-Marrable et al. 2018; Yahalomi et al. 2017; Birrer et al. 2022; iv) after the transient has faded, its host galaxy and the foreground detector can be studied more cleanly than in lensed quasars where the light from the quasar itself is often a dominant source of noise (Ding et al. 2021a).

In the 2020s, more powerful facilities and surveys will enable order of magnitude increases in the sample size of both multiply imaged quasars and supernovae (Oguri and Marshall 2010; Yue et al. 2022). The Legacy Survey of Space and Time (LSST) of the Vera C. Rubin Observatory, scheduled to start operations in 2024, will image approximately 18,000 square degrees of sky with depth and cadence vastly superior to that achieved by current state-of-the-art surveys. LSST will not only deliver large numbers of lenses by virtue of the large survey area, but it will also enable the discovery of lenses via variability (Pindor 2005; Kochanek et al. 2006a; Chao et al. 2020; Bag et al. 2022; Kodi Ramanah et al. 2022) and the determination of some time delays from the survey data itself (Liao et al. 2015).

Euclid and then the Nancy Grace Roman Space Telescope—scheduled to be launched in the next few years—will survey thousands of square degrees at angular resolution 0.2"–0.1", more than sufficient to resolve multiple images for typical Einstein radii of order arcsecond. The image quality will be sufficient for direct confirmation of the multiply imaged sources, bypassing the higher-resolution imaging follow-up that is often needed to confirm the candidates identified in seeing limited data.

Two other techniques could provide additional lenses. On the one hand, spectroscopic surveys with the Dark Energy Spectroscopic Instrument (Besuner et al. 2020), 4MOST, MOONS, and the Prime Focus Spectrograph (Tamura et al. 2016) will measure millions of spectra that can be searched for double spectra, identifying strong lensing systems on much larger scales than possible in the past (Huchra et al. 1985; Bolton et al. 2006, 2008). One of the advantages of spectroscopically identified strong lensing systems is that the redshift information is available from the survey itself.

On the other hand, even unresolved time domain data can be used to identify lensing events. Unresolved variable multiply imaged sources will appear as light curves with multiple autocorrelation peaks (Shu et al. 2021; Bag et al. 2022) corresponding to the time delays. This technique is in principle applicable with relatively small telescopes of large etendue such as ZTF (Bellm et al. 2019) or LAST (Ofek and Ben-Ami 2020).

4.2.2 External datasets

At any given time, the vast majority of galaxies does not host an active nucleus or a supernova. Therefore, the large surveys planned for the 2020s will deliver much larger numbers of so-called galaxy-galaxy lenses than quasar-galaxy or supernova-galaxy lenses. Galaxy-galaxy lenses do not contain the absolute distance information that comes from the time delays, but they can be used to inform how we model the quasar-galaxy or supernova-galaxy systems (Birrer et al. 2020; Birrer and Treu 2021; Sonnenfeld 2021). The sheer number of such galaxy-galaxy lenses to be found in the surveys under way in the 2020s all but guarantees that crucial data (e.g., high-resolution imaging, redshifts of the source, and velocity dispersion and redshift of the deflector) will be available from survey data themselves.

4.2.3 Integral field spectrographs

The launch of the James Webb Space Telescope and the constant upgrade of adaptive optics systems on 8–10 m (and Extremely Large Telescopes, 25–39 m, in the near future) class ground-based telescopes imply that our ability to do resolved spectroscopy at the angular resolution relevant for strong lensing systems (0.1–0.2") will be unprecedented in the 2020s. Spatially resolved kinematics has been recognized for some time as a fundamental ingredient to break the mass-sheet degeneracy and the mass-anisotropy degeneracy (Shajib et al. 2018; Yıldırım et al. 2020), but it was extremely challenging with 2010 technology. There is reason to believe the 2020s will be revolutionary in this respect.

4.3 Observational challenges

To make the most of the large sample of lenses to be discovered in the 2020s, two observational challenges will need to be solved.

The first one is the bottleneck of follow-up. Time-delay cosmography requires multiple pieces of information, and the surveys will not provide all of them; follow-up campaigns will be required. We anticipate that high-cadence targeted monitoring will be needed in most cases to determine time delays especially for lensed supernovae (Huber et al. 2019) (either by supplementing survey light curves or from scratch for systems discovered in single-epoch data). Most of the new lenses to be discovered in the 2020s will be fainter than the ones monitored prior to 2020, owing to a combination of the rising number counts towards fainter magnitudes and the fact that the brighter systems have likely already been discovered across most of the sky (Treu et al. 2018). Thus, even monitoring just 50–100 lenses to obtain exquisite time delays will require dedicated 3–4-m class telescopes, as opposed to the 1–2-m class telescopes that were used with great effect prior to 2020. Furthermore, most survey data will be of insufficient depth and resolution to perform cosmography-grade lens models (Meng et al. 2015). Thus, follow-up with the Hubble Space Telescope (as long as it is operational), JWST, adaptive optics from the ground, or radio interferometers when applicable, will in most cases be needed. Fortunately, single-epoch imaging will be sufficient for this task (or at most twice for transients, during and after), meaning that it is feasible with existing and planned facilities. Finally, as mentioned above, spatially resolved kinematics for large samples of lenses will be transformative. What is needed is continued support for the construction and deployment of the facilities (Wright et al. 2020) and the allocation of sufficient telescope time.

The second challenge will be understanding and characterizing the selection function of each sample with sufficient degree of accuracy. It is well established that the most common deflectors, massive elliptical galaxies, are a very uniform population that can generally be characterized by just two parameters, as evidenced by the tightness of the fundamental plane (Djorgovski and Davis 1987; Dressler et al. 1987) and mass plane (Auger et al. 2010). This uniformity is a good starting point for constructing matched samples of time-delay and non-time-delay lenses (e.g., by matching velocity dispersion and redshift, Treu et al. 2009) and should mitigate biases related to lensing selection (Collett and Cunnington 2016; Luhtaru et al. 2021). However, detailed and specific calculations of the selection function for each sample will be needed to meet the 1% precision and accuracy goal. Those calculations will need to consider every step of the process contributing to time delay cosmography, from lens system identification and confirmation, to time delay measurement, and lens and environment properties, and quantify any potential bias arising from these steps.

4.4 Deflector-modeling challenges

In the upcoming decade, we expect to have approximately \(\sim 10^5\) lensed galaxies, \(\sim 10^3\) lensed quasars, and \(\sim 10^2\) lensed supernovae (Collett 2015; Oguri and Marshall 2010; Wojtak et al. 2019) from LSST and/or Euclid. We would have: (i) a large sample with only LSST data, (ii) a medium sample with LSST and Euclid images, and (iii) a small sample with LSST, Euclid and ancillary follow-ups necessary for cosmography including HST/JWST images and spatially resolved kinematics. The challenge is to make use of all the lenses for cosmography, by for example learning about the mass structure of galaxies from the large sample and using it as prior for the small sample. This requires both enhancement in the speed of the modeling, and also new development in the modeling techniques especially with respect to joint lensing and dynamical models of galaxies.