Abstract

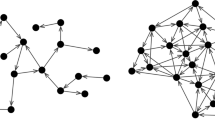

A central challenge in contemporary artificial intelligence is making complex connectionist systems, which comprise millions of parameters, more comprehensible, defensible, and rationally grounded. Two main approaches address this challenge. The first combines symbolic methods with connectionist ones, yielding a hybrid system; it organizes the system's many parameters within a limited framework of formal, symbolic rules. The second remains purely connectionist and avoids hybridity. Rather than making the system internally transparent, it builds an external, transparent proxy system whose task is to explain the principal system's decisions, essentially by approximating its outputs. Using natural language processing as a case study, this paper examines both approaches: the hybrid approach is illustrated by compositional vector semantics, and the purely connectionist approach is developed as an extension of neural semantic parsers. The paper argues for the purely connectionist approach on two grounds: its inherent flexibility, and its separation of the explanatory apparatus from the operational core, which makes artificial intelligence systems more closely resemble human cognition.

Data Availability

Not applicable.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

About this article

Cite this article

Toy, T. Transparency in AI. AI & Soc (2023). https://doi.org/10.1007/s00146-023-01786-y

DOI: https://doi.org/10.1007/s00146-023-01786-y