Abstract

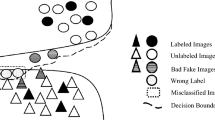

In this work, we investigate semi-supervised learning (SSL) for image classification using adversarial training. Prior work has shown that generative adversarial networks (GANs) can serve multiple purposes in SSL. Triple-GAN, which jointly optimizes its model components through a three-player game, generates suitable image-label pairs to compensate for the lack of labeled data in SSL and improves benchmark performance. Conversely, Bad (or complementary) GAN optimizes generation to produce complementary data-label pairs that force a classifier’s decision boundary to lie between data manifolds. Although it generally outperforms Triple-GAN, Bad GAN is highly sensitive to the amount of labeled data used for training. Unifying these two approaches, we present unified-GAN (UGAN), a novel framework that enables a classifier to learn simultaneously from both good and bad samples through adversarial training. We perform extensive experiments on various datasets and demonstrate that UGAN (1) achieves performance competitive with other GAN-based models and (2) is robust to variations in the amount of labeled data used for training.

Notes

In semi-supervised learning, p(x) is the empirical distribution of inputs, and p(y) is assumed to be the same as the distribution of labels on the labeled data, which is uniform in our experiments.

In practice, we use \(L_{gG} = -\mathbb{E}_{(x, y)\sim p_{gG}(x, y)}[\log p_{D}(x, y)]\) to ease the training process [9].
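The effect of this substitution can be illustrated with a minimal sketch. It assumes that the discriminator output \(p_{D}(x, y)\) is a sigmoid of a logit and that the loss being replaced is the standard minimax form \(\mathbb{E}[\log (1 - p_{D}(x, y))]\) from [9]; the function names and sample values are hypothetical and are not taken from the authors' implementation.

```python
import math


def non_saturating_generator_loss(d_probs, eps=1e-12):
    """Monte Carlo estimate of -E[log p_D(x, y)] over generated pairs."""
    return -sum(math.log(max(p, eps)) for p in d_probs) / len(d_probs)


def saturating_generator_loss(d_probs, eps=1e-12):
    """Monte Carlo estimate of E[log(1 - p_D(x, y))] (assumed minimax form)."""
    return sum(math.log(max(1.0 - p, eps)) for p in d_probs) / len(d_probs)


def grad_wrt_logit(p, non_saturating):
    """Gradient of the per-sample loss w.r.t. the discriminator logit a,
    assuming p = sigmoid(a), so that dp/da = p * (1 - p)."""
    # d/da[-log p] = -(1 - p);  d/da[log(1 - p)] = -p
    return -(1.0 - p) if non_saturating else -p


# Hypothetical discriminator outputs early in training, when generated
# pairs are easily rejected (probabilities close to 0).
early_probs = [0.01, 0.02, 0.05]

print("non-saturating loss:", non_saturating_generator_loss(early_probs))
print("saturating loss:    ", saturating_generator_loss(early_probs))
for p in early_probs:
    print(p, grad_wrt_logit(p, True), grad_wrt_logit(p, False))
```

Under these assumptions, when the discriminator confidently rejects generated pairs, the gradient of the non-saturating loss with respect to the logit has magnitude close to 1, whereas the minimax form yields a gradient close to 0. This is the usual motivation for the substitution.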

References

Abu-El-Haija, S., Kothari, N., Lee, J., Natsev, P., Toderici, G., Varadarajan, B., Vijayanarasimhan, S.: YouTube-8M: A Large-scale Video Classification Benchmark. arXiv:1609.08675 (2016)

Belkin, M., Niyogi, P., Sindhwani, V.: Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J. Mach. Learn. Res. 7, 2399–2434 (2006)

Brock, A., Donahue, J., Simonyan, K.: Large Scale GAN Training for High Fidelity Natural Image Synthesis. arXiv:1809.11096 (2018)

Dai, Z., Yang, Z., Yang, F., Cohen, W.W., Salakhutdinov, R.R.: Good semi-supervised learning that requires a bad gan. In: Advances in Neural Information Processing Systems, pp. 6510–6520 (2017)

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L.: Imagenet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255. IEEE (2009)

Dumoulin, V., Belghazi, I., Poole, B., Mastropietro, O., Lamb, A., Arjovsky, M., Courville, A.: Adversarially Learned Inference. arXiv:1606.00704 (2016)

Gan, Z., Chen, L., Wang, W., Pu, Y., Zhang, Y., Liu, H., Li, C., Carin, L.: Triangle generative adversarial networks. In: Advances in Neural Information Processing Systems, pp. 5247–5256 (2017)

Girija, S.S.: Tensorflow: large-scale machine learning on heterogeneous distributed systems. Software available from https://www.tensorflow.org/ (2016)

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, pp. 2672–2680 (2014)

Iscen, A., Tolias, G., Avrithis, Y., Chum, O.: Label propagation for deep semi-supervised learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5070–5079 (2019)

Kingma, D.P., Ba, J.: Adam: A Method for Stochastic Optimization. arXiv:1412.6980 (2014)

Kingma, D.P., Welling, M.: Auto-Encoding Variational Bayes. arXiv:1312.6114 (2013)

Krasin, I., Duerig, T., Alldrin, N., Ferrari, V., Abu-El-Haija, S., Kuznetsova, A., Rom, H., Uijlings, J., Popov, S., Veit, A., et al.: Openimages: A Public Dataset for Large-scale Multi-label and Multi-class Image Classification, vol. 2, p. 3. Dataset available from https://github.com/openimages (2017)

Krizhevsky, A., Hinton, G.: Learning Multiple Layers of Features from Tiny Images. Technical report, Citeseer (2009)

Kumar, A., Sattigeri, P., Fletcher, T.: Semi-supervised Learning with Gans: Manifold Invariance with Improved Inference. In: Advances in Neural Information Processing Systems, pp. 5534–5544 (2017)

Laine, S., Aila, T.: Temporal Ensembling for Semi-supervised Learning. arXiv:1610.02242 (2016)

Lecouat, B., Foo, C.-S., Zenati, H., Chandrasekhar, V.R.: Semi-supervised Learning with Gans: Revisiting Manifold Regularization. arXiv:1805.08957 (2018)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P., et al.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Li, C., Xu, T., Zhu, J., Zhang, B.: Triple generative adversarial nets. In: Advances in Neural Information Processing Systems, pp. 4088–4098 (2017)

Li, W., Wang, Y., Cai, Y., Arnold, C., Zhao, E., Yuan, Y.: Semi-supervised Rare Disease Detection Using Generative Adversarial Network. arXiv:1812.00547 (2018)

Li, W., Wang, Z., Li, J., Polson, J., Speier, W., Arnold, C.: Semi-supervised Learning Based on Generative Adversarial Network: A Comparison Between Good Gan and Bad Gan Approach. arXiv:1905.06484 (2019)

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft coco: common objects in context. In: European Conference on Computer Vision, pp. 740–755. Springer (2014)

Lucic, M., Tschannen, M., Ritter, M., Zhai, X., Bachem, O., Gelly, S.: High-Fidelity Image Generation with Fewer Labels. arXiv:1903.02271 (2019)

Mirza, M., Osindero, S.: Conditional Generative Adversarial Nets. arXiv:1411.1784 (2014)

Miyato, T., Kataoka, T., Koyama, M., Yoshida, Y.: Spectral Normalization for Generative Adversarial Networks. arXiv:1802.05957 (2018)

Miyato, T., Maeda, S.I., Koyama, M., Ishii, S.: Virtual adversarial training: a regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 41(8), 1979–1993 (2018)

Netzer, Y., Wang, T., Coates, A., Bissacco, A., Wu, B., Ng, A.Y.: Reading digits in natural images with unsupervised feature learning. In: Advances in Neural Information Processing Systems (2011)

Nigam, K., McCallum, A., Mitchell, T.: Semi-supervised text classification using EM. In: Semi-supervised Learning, pp. 33–56 (2006)

Pu, Y., Gan, Z., Henao, R., Yuan, X., Li, C., Stevens, A., Carin, L.: Variational autoencoder for deep learning of images, labels and captions. In: Advances in Neural Information Processing Systems, pp. 2352–2360 (2016)

Qiao, S., Shen, W., Zhang, Z., Wang, B., Yuille, A.: Deep co-training for semi-supervised image recognition. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 135–152 (2018)

Radford, A., Metz, L., Chintala, S.: Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv:1511.06434 (2015)

Rasmus, A., Berglund, M., Honkala, M., Valpola, H., Raiko, T.: Semi-supervised Learning with Ladder Networks. In: Advances in Neural Information Processing Systems, pp. 3546–3554 (2015)

Sajjadi, M., Javanmardi, M., Tasdizen, T.: Mutual exclusivity loss for semi-supervised deep learning. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 1908–1912. IEEE (2016)

Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., Chen, X.: Improved techniques for training gans. In: Advances in Neural Information Processing Systems, pp. 2234–2242 (2016)

Springenberg, J.T.: Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks. arXiv:1511.06390 (2015)

Tarvainen, A., Valpola, H.: Mean teachers are better role models: weight-averaged consistency targets improve semi-supervised deep learning results. In: Advances in Neural Information Processing Systems, pp. 1195–1204 (2017)

Weston, J., Ratle, F., Mobahi, H., Collobert, R.: Deep learning via semi-supervised embedding. In: Neural Networks: Tricks of the Trade, pp. 639–655. Springer (2012)

About this article

Cite this article

Li, W., Wang, Z., Yue, Y. et al. Semi-supervised learning using adversarial training with good and bad samples. Machine Vision and Applications 31, 49 (2020). https://doi.org/10.1007/s00138-020-01096-z

DOI: https://doi.org/10.1007/s00138-020-01096-z