Abstract

Retinal image analysis is a challenging problem due to the precise quantification required and the huge numbers of images produced in screening programs. This paper describes a series of innovative brain-inspired algorithms for automated retinal image analysis, recently developed for the RetinaCheck project, a large-scale screening program for diabetic retinopathy and other retinal diseases in Northeast China. The paper discusses the theory of orientation scores, inspired by cortical multi-orientation pinwheel structures, and presents applications for automated quality assessment, optic nerve head detection, crossing-preserving enhancement and segmentation of retinal vasculature, arterio-venous ratio, fractal dimension, and vessel tortuosity and bifurcations. Many of these algorithms outperform state-of-the-art techniques. The methods are currently validated in collaborating hospitals, with a rich accompanying base of metadata, to phenotype and validate the quantitative algorithms for optimal classification power.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Diabetes is reaching epidemic proportions worldwide, especially in Asia due to fast lifestyle changes and genetic factors. Today 11.6 % of the Chinese population is estimated to have diabetes-2. Diabetic retinopathy is also the main cause of newly formed blindness in the working population, leading to high societal costs. Early detection is the key to prevention and successful treatment of these forms of blindness. However, many cases still go unnoticed and are not treated in time, especially in rural areas.

To set up a screening program for the detection of early signs of diabetic retinopathy (DR), glaucoma and age-related macula degeneration (AMD), a Sino-Dutch consortium was formed and the project RetinaCheck was defined with the following four phases: (1) development of innovative algorithms for automated and quantitative detection of relevant bio-markers, (2) set up a significant validation study correlating the imaging data with relevant clinical metadata, and (3) roll out a screening infrastructure in the province of Liaoning, Northeast China, and (4) make a sustainable commercial infrastructure. The partners include the departments of Biomedical Engineering at Eindhoven University of Technology (TU/e, the Netherlands) and Northeastern University (NEU, Shenyang, China), which develop the computer-aided diagnosis (CAD) software, the clinical partners He University Eye Hospital (HUEH, Shenyang, China) and China Medical University (Shengjing Hospital, Shenyang, China) (Fig. 1), and the fundus camera manufacturer i-Optics (the Hague, the Netherlands).

This paper reports on phase 1 only, focusing in detail on algorithm design. The very important validation phase is ongoing, and will be reported in a forthcoming paper.

Due to its low cost, wide availability, high resolution and ease of use, optical imaging of the retinal fundus is often the method of choice for screening applications. Today non-mydriatic cameras (no pupil dilation) give good quality and high-resolution images. Quantitative measurement and analysis of geometric attributes of the retinal vasculature is highly informative for diagnosis and monitoring of diabetes at early stages [41]. The many extracted imaging biomarkers in this project will also be exploited for the early detection and monitoring of other diseases, like glaucoma, age-related macula degeneration (AMD), and cardiovascular and neurodegenerative diseases.

Several other early DR signs can be measured, such as nerve damage in the cornea with confocal laser microscopy, or changes in retina neural tissue layer thickness with optical coherence tomography (OCT), but these methods are more costly and more labor intensive, especially given the projected huge-scale screening and the much lower availability of OCT in China.

This paper gives an overview of the current brain-inspired applications in the RetinaCheck project. The paper mainly focuses on vessel analysis, while a forthcoming paper will focus on the automated detection of micro-aneurysms and background diagnostic features, as drusen and exudates.

The paper is organized as follows: first a short introduction is given to the physiological evidence of multi-scale and multi-orientation processing in the visual system in Sect. 2, and a theoretical model for brain-inspired multi-orientation computing in Sect. 3. Then a series of recently developed computer-aided diagnosis (CAD) algorithms is discussed in Sect. 4, mostly based on the mechanism of invertible multi-orientation scores. The paper ends with a description of the current validation study in Sect. 5 and a conclusion in Sect. 6.

2 Brain-inspired computer vision

Modern brain imaging techniques have revolutionized brain research: optical imaging methods such as voltage sensitive dye imaging, calcium intrinsic imaging and optogenetics, as well as structural and functional MRI techniques have revealed intricate details of brain function and structure, especially of the visual system, the best studied brain area today. Many models of the functional mechanisms in early vision have been proposed. This paper focuses on the geometric approach, starting from the multi-scale and multi-orientation structure found in the early stages of vision.

2.1 Multi-scale analysis

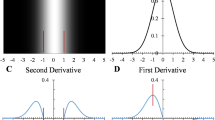

Multi-scale analysis is now well established [15, 44, 58]. The center-surround receptive fields in the retina are the first multi-scale sampling step. Our model for V1’s ‘simple cells’ (so called by Hubel and Wiesel) is that these have the function of multi-scale, regularized spatial differential operators, i.e., Gaussian derivatives, possibly up to fourth order [44, 58–60]. The simultaneous sampling of the multi-scale differential structure leads to ‘deep structure’ analysis [43], with applications as edge focusing, hierarchical top points and SIFT and SURF keypoints. Nonlinear adaptive multi-scale analysis is developed in the rich field of geometry-driven diffusion [32, 62], which introduced nonlinear PDEs and energy minimizing variational methods into this field of evolutionary geometric computing.

2.2 Multi-orientation analysis

Hubel and Wiesel [37] discovered that the receptive fields in cat’s striate cortex have a strong orientation-selective property (see Fig. 2). A so-called cortical hyper-column, with the characteristic pinwheel structure of equi-orientation lines radiating from a central singularity, can be interpreted as a visual pixel computer, neatly decomposed into a complete set of orientations.

Synaptic physiological studies of horizontal pathways in cat striate cortex show that neurons with aligned receptive field sites excite each other on the cortex between different pinwheels, in an elongated area and over long distances, creating long-range contextual connections [2]. Therefore, the visual system not only constructs the aforementioned score of local orientations, but also accounts for long-range context and alignment by excitation and inhibition a priori, which can be modeled by so-called association fields [15, 20, 27], and left-invariant PDEs and ODEs for contour enhancement and contour completion directly on the score [23, 61].

Left voltage sensitive dye measurement of the visual cortex of the tree shrew, exhibiting the columnar organization with the typical multi-orientation pinwheel structure. From [40]. Right Orientation score model with orientation on the vertical axis, and cortical columns with horizontal contextual (association field) connections. From [66]

This multi-orientation framework [14, 15, 21, 31] also allows us to generically deal with crossings, as we will show with the application to vessel tracking [6, 8, 9, 52] and segmentation [1, 33, 65]. Moreover, due to the neat organization of image data on the Lie-group SE(2), we are able to design effective detection algorithms [6, 7], geometric feature analysis techniques such as bifurcation detection/analysis [55] and local vessel curvature analysis [11], and enhancement methods [31, 66].

3 Theory

Motivated by this organization, so-called orientation scores are constructed by lifting all elongated structures (in 2D images) along an extra orientation dimension, see Fig. 2. Similar to the perceptual organization of orientation in the visual cortex, a 2D orientation score is an object that maps each 2D position and orientation \((x, y, \theta )\) to a complex scalar. So the original 2D image domain can be extended to the score domain. A great advantage is that it can deal with multiple orientations per position, and the extra dimension enables new techniques for, e.g., contextual geometric reasoning and crossing-preserving enhancement [31].

3.1 The Euclidean motion group SE(2)

The domain \(\mathbb {R}^2 \rtimes S^1\) (2 spatial dimensions, and one orientation dimension) of an orientation score can be identified with the Euclidean motion group SE(2), equipped with the group product

for all \(g,g' \in \hbox {SE}(2)\), and with \(\textstyle \mathbf R _\theta = \left( \begin{array}{ccc} \cos \theta &{}\quad -\sin \theta \\ \sin \theta &{}\quad \cos \theta \\ \end{array} \right) \) a counterclockwise rotation over angle \(\theta \).

3.2 Invertible orientation scores on SE(2)

An orientation score \(U_f:{\hbox {SE}}(2) \rightarrow \mathbb {C}\) is obtained by convolving an input image f with a specially designed, anisotropic wavelet \(\psi \):

where \(R_\theta \) denotes a 2D counterclockwise rotation matrix and \(\psi _\theta (\mathbf {x}) = \psi (R_\theta ^{-1}\mathbf {x})\). Exact image reconstruction is achieved by

where \(\mathcal {F}[\cdot ]\) represents the unitary Fourier transform on \(\mathbb {L}_2(\mathbb {R}^2)\) and \(M_\psi \) is given by \(\int _0^{2\pi }|\mathcal {F}\left[ \psi _\theta \right] |^2 \mathrm {d}\theta \).

Well-posedness of the reconstruction is controlled by \(M_\Psi \) [10, 24]. \(M_\Psi \): \(\mathbb {R}^{2} \rightarrow \mathbb {R}^{+}\) is calculated by

The function \(M_\Psi \) provides a stability measure of the inverse transformation. Theoretically, reconstruction is well-posed, as long as \(0< \delta< M < M_\Psi<\), where \(\delta \) is arbitrarily small, since then the condition number of \(W_\Psi \) is bounded by \(M\delta ^{1}\) (for a detailed discussion see [22]).

Wavelets that meet this criterion are the so-called cake wavelets described by [6, 21, 31]. They uniformly cover the Fourier domain up to a radius of about the Nyquist frequency \(\rho _n\) (see Fig. 3). They have the advantage over other oriented wavelets (such as Gabor wavelets) that they allow for a stable inverse transformation \({\mathcal {W}}_\psi ^*\) from the orientation score back to the image. As such, cake wavelets ensure that no data-evidence is lost during the forward and backward transformation. The procedure for creating cake wavelets is illustrated in Fig. 3. In detail, the proposed wavelet takes the form

with \(G_{\hbox {s}}\) an isotropic Gaussian window in the spatial domain and \((\rho ,\varphi )^T\) denote polar coordinates in the Fourier domain, i.e., \(\mathbf {\omega }= (\rho \cos \varphi ,\rho \sin \varphi )^T\). The Fourier wavelet \(\tilde{\psi }(\mathbf {\omega }) = A(\varphi )B(\rho )\) has an angular component \(A(\varphi )\) resembling a ‘piece of cake’ (Fig. 3), and a Gaussian weighted radial component \(B(\rho )\):

where \(B^k(x)\) denotes the kth order B-spline, \(N_\theta \) is the number of samples in the orientation direction and \(s_\theta =2\pi /N_\theta \) is the angular step size. The function \(B(\rho )\) is a Gaussian multiplied with the Taylor series of its inverse up to order N to enforce faster decay. The parameter t is given by \(t^2=2 \hat{\rho }/(1+2N)\) with the inflection point \(\hat{\rho }\) that determines the bending point of \(B(\rho )\).

As depicted in Fig. 3, the symmetric real part of the kernel picks up lines, whereas the asymmetric imaginary part responds to edges. In the context of vessel filtering, the real part is of primary interest, whereas vessel tracking in the orientation score additionally makes use of the imaginary part [6]. Elongated structures involved in a crossing can now be disentangled for crossing-preserving analysis.

a Real and b imaginary part of the cake kernel in the spatial domain, c Fourier contours at 70 % of the maximum for all orientations and d \(B(\rho )\) with \(\hat{\rho }=0.8\rho _n\), the Nyquist frequency \(\rho _n\) and \(N=8\). From [6]

3.3 Left-invariant Gaussian derivatives in SE(2)

Theoretically, because of the curved geometry of orientation scores, it is wrong to take the derivatives in orientation scores using the \(\{\partial _x,\partial _y,\partial _\theta \}\) derivative frame (we use shorthand notation \(\partial _i=\frac{\partial }{\partial _i}\)) [21]. Therefore, left-invariant differential operators

are used in SE(2). The \(\partial _\xi \) and \(\partial _\eta \) are the spatial derivatives tangent and orthogonal to the orientation \(\theta \). It is important to mention that not all the left-invariant derivatives commute, e.g., \(\partial _\theta \partial _\xi U \ne \partial _\xi \partial _\theta U\) [22, 31]. Mathematically this is due to the fact that rotations and translations do not commute. We have the following commutators in the Lie-algebra of SE(2):

Suitable combinations of derivatives have been widely used to pick up differential invariant features like edges, ridges, corners and so on [59]. However, obtaining derivatives directly is an ill-posed problem. Therefore, we regularize the orientation scores via convolutions with Gaussian kernels \(G_{\sigma _s,\sigma _o}(\mathbf x ,\theta )=G_{\sigma _s}(\mathbf x )G_{\sigma _o}(\theta )\), with a d—dimensional Gaussian given by

and where \(\sigma _s\) and \(\sigma _o\) are used to define the spatial scale \(\frac{1}{2}\sigma _s^2\) and orientation scale \(\frac{1}{2}\sigma _o^2\) of the Gaussian kernel. Note that the spatial Gaussian distribution \(G_{\sigma _s}: \mathbb {R}^{2} \rightarrow \mathbb {R}^{+}\) must be isotropic to preserve commutator relations of the SE(2) group for scales \(\sigma _{s}>0\), i.e., to preserve left-invariance.

Detailed numerical approaches for linear left-invariant diffusions on SE(2) have been developed in [66].

4 Computer-aided diagnosis algorithms

The pipeline of functions in the developed CAD system is the following:

-

1.

Quality assessment

-

2.

Masking and normalization

-

3.

Optic nerve head detection

-

4.

Vessel enhancement and segmentation

-

(a)

Left-invariant Gaussian derivatives in SE(2)

-

(b)

Multi-scale and multi-orientation for SLO

-

(c)

Crossing-preserving multi-scale vesselness

-

(a)

-

5.

Multi-orientation vessel tracking

-

6.

Arterio-venous ratio

-

7.

Fractal dimension

-

8.

Geometric vessel features

-

(a)

Bifurcation/crossing detection

-

(b)

Curvature/tortuosity

-

(a)

Each of the steps will be discussed below in the respectively numbered sections.

4.1 Automatic quality assessment

Sometimes retinal images have a low quality, e.g., due to cataract or other pathology. To exclude non-diagnostic images automatically in the high-volume screening process, a method for image quality verification is developed [26], based on [50]. The supervised method is based on the assumption that sufficient image structure according to a pre-defined distribution must be available. Geometric differential invariants (expressed in 2D gauge coordinates [58]) up to second order and at 4 different scales are used to develop response vectors. Different combinations of features are trained by different classifiers on 100 normal and 100 low-quality images. The ground truth for normal or low quality images was specified by two expert ophthalmologists. Combining the image structure clusters with RGB color histogram features, the Random Forest classifier proved to be the best classifier, with a performance of 0.984 area under the curve (AUC) of the receiver operator characteristic (ROC), with 0.91 accuracy rate.

4.2 Masking and normalization

All pixels outside the camera field-of-view are masked, according to the camera type. Retinal images often suffer from non-uniform illumination and varying contrast, which may affect the later detection process. We exploit the luminosity and contrast normalization procedures proposed by Foracchia et al. [28].

4.3 Optic nerve head detection

The optic nerve head (ONH) or optical disk is a key landmark in retinal images, and many methods for detection have been proposed [4, 18, 45, 47, 54, 64]. For an extensive and recent overview see [51]. Correct segmentation of the ONH and its rim is an important biomarker for glaucoma identification [38]), and for establishing a metric for regions-of-interest on the retina [36].

Conventional fundus images show the ONH as a bright disk-like feature. However, scanning laser ophthalmoscopy (SLO) cameras generally show dark regions with considerably less contrast. In the presence of large pathologies, classical approaches typically show decreased performance, or fail. Better performance is obtained by including contextual information, e.g., by incorporating the characteristic pattern of large blood vessel arches in the upper and lower retina emerging from the ONH [45, 46, 64], but this comes at higher computational costs.

Exploiting the extra dimensions of orientation scores, we have developed a template matching application via cross-correlation including local orientations [7, 10] (see also [16]). We can now match patterns of orientation distributions, rather than pixel intensities.

The templates are constructed from (a) a disk filter, (b) a model of the vessels radiating from the ONH, and (c) through minimization of a suitable energy functional. In Ref. [7] we found that, in contrast to model-based templates [10] (e.g., disk shapes or vessel indicator functions), the best performing templates are trained by the minimization of an energy functional, consisting of a term where the desired inner product responses are trained, and a regularization term that ensures stability and smoothness of the template. For 2D spatial image templates t, we minimize

where \(\hat{f}_i\) is one of P (\(P=100\)) normalized positive patches with ONH (with \(y_i = 1\)), or negative patches without ONH (with \(y_i = 0\)) with typically \(\lambda = 10^{-1.5}\). The regularization term enforces smoothness by punishing the squared gradient magnitude \(||\nabla t ||^2\), and prevents over-fitting. Examples of both positive and negative training patches are given in Fig. 4.

Exemplary retinal image patches used for template training. Top row positive patches. Bottom row negative patches. From [7]

Automatic vessel segmentation examples. a original (green channel), b enhanced, c segmented, d ground truth. Top row DRIVE database example (sensitivity \(=\) 0.8308, specificity \(=\) 0.9824 and accuracy \(=\) 0.9635). Bottom row STARE database (sensitivity \(=\) 0.8587, specificity \(=\) 0.9806 and accuracy \(=\) 0.9676). From [65]

For the orientation score template T, a similar functional is minimized:

with typically \(\lambda = 10\) and \(D_{\theta \theta } = 10^{-3.5}\) and with the left-invariant gradient \( \nabla T = \left( \frac{\partial T}{\partial \xi }, \frac{\partial T}{\partial \eta }, \frac{\partial T}{\partial \theta } \right) ^T \). The templates are represented in a B-spline basis, allowing for efficient and accurate optimization of the energy functionals. The B-spline coefficients are solved by a conjugate gradient approach.

The orientation score SE(2) template method outperforms state-of-the-art methods on publicly available benchmark databases, as it correctly identifies the ONH in 99.7 % of 1737 images of the well-known MESSIDOR, STARE (with a wide variety of pathological images) and DRIVE databases. For more details, see [7, 10].

4.4 Vessel enhancement and segmentation

4.4.1 Vessel enhancement filter

Vessels can be enhanced by oriented filters [5, 29], e.g., constructed from second-order Gaussian derivative operators at appropriate scales and orientations. To solve segmentation problems at complex structures like crossings, we have designed new filters in the orientation score matching the vessel profile as second-order left-invariant Gaussian differential operators perpendicular to the corresponding orientation [65], as described in Sect. 3.

With Gaussian filters, the maximum response occurs at \(\sigma =r/\sqrt{2}\), where r represents the radius of the vessel caliber [44]. Typically vessel calibers of the DRIVE and STARE databases range from 2 to 14 pixels, so we sample the spatial scales \(\sigma _s\) as \({\mathcal {S}}=\{0.7, 1.0, 1.5, 2.0, 2.5, 3.5, 4.5\}\) and angular scale \(\sigma _o=\pi /5\). We exploit \(N_o=36\) orientations between 0 and \(\pi \). Figure 5 shows our segmentation results on the DRIVE and STARE databases. From Fig. 6 we can see that the proposed orientation score based multi-scale filters show much better structure preservation ability on these special cases, as illustrated in Fig. 6.

Vessel segmentation results of our left-invariant Gaussian derivative method in comparison with state-of-the-art methods on an image of the DRIVE database. a–c and d–f resp. show the vessel segmentation results by the methods of Frangi et al. [29] and Soares et al. [56] on 3 difficult cases: a high curvature change on low intensity vessel part and tiny crossing, b artery and vein crossing with central reflex and c closely parallel vessels; g–i the results of our method, and j–l the corresponding ground truth annotations by a human observer [57]. From [65]

We apply the scale normalization as proposed by Lindeberg [44]. The final image reconstruction from the multi-scale filtered orientation scores is obtained via

with \(i \in \{1,..N_o\}\) and where \(N_o\) and \(\mathcal {S}\) represent resp. the number of orientations and the set of spatial scalings, and \(\Phi _{\eta ,\text {norm}}^{\sigma _s,\sigma _o}\) denotes the left-invariant Gaussian matching filters on the orientation score [65]. The maximum filter response is calculated over all orientations per position. The method is validated on the public databases DRIVE and STARE and is computationally efficient. With a sensitivity and specificity of 0.7744 resp. 0.9708 on DRIVE, and 0.7940 resp. 0.9707 on STARE, the proposed algorithm outperforms most current segmentation schemes. Interestingly, it can also deal with complicated vessel configurations. For more details see [65, 66].

4.4.2 Multi-scale and multi-orientation segmentation (BIMSO) for SLO images

For retinal images taken with a scanning laser ophthalmoscope (SLO) camera, new segmentation techniques are required. Such cameras typically exploit two wavelengths, green and infrared and typically exhibit better contrast for hemoglobin (vessels and bleedings). However, they also exhibit speckle noise, due to which conventional analysis methods for RGB images often fail. Very few studies are dedicated to SLO image analysis (e.g., [63]).

The proposed method [1], termed BIMSO (Brain-Inspired Multi-Scale and multi-Orientation), has four main steps: preprocessing, feature extraction, classification and post-processing. The method is developed for the green channel (RGB and SLO).

Noise reduction is effectuated by a nonlinear gamma transform (\(\check{U}_f=\alpha ~{|U_f|}^\gamma \)). The orientation score is raised by a power factor \(\gamma >1\). The absolute value of the orientation score \(|U_f|\) is taken because of the quadratic property of the cake wavelets, and \(\alpha \) is determined by the sign of the real part of the orientation score (\({\hbox {Re}}(U_f))\). See Fig. 7.

After enhancement, a pattern recognition approach is taken to classify the vessels from the background. A normalized feature vector is constructed per pixel, including the intensity, the half-cake wavelet responses and 3 left-invariant multi-scale first order Gaussian derivatives and 8 second-order Gaussian derivatives in the pre-processed orientation scores (two of the second-order derivatives are similar; therefore, there are only 8 unique second-order derivatives, so in total 11 Gaussian derivatives are used). The orientation score intrinsically allows for derivatives in the local directions (\(e_\xi ,e_\eta ,e_\theta \)), and crossings are ‘lifted’ as different orientations in different orientation layers. The method is trained with a feed-forward neural network classifier and validated and tested on two different datasets (DRIVE for RGB and IOSTAR for SLO).

Top original SLO image (EasyScan, I-Optics Inc.). Bottom nonlinear orientation score gamma transformation with \(\gamma =1.8\). From [1]

The BIMSO method detects blood vessels well, in particular the smaller ones in low contrast regions, and crossing vessels. The performance is better than the best supervised segmentation methods for RGB (e.g., [56]), for both the DRIVE and IOSTAR databases. For more details, see [1].

a Retinal image f and multi-scale vesselness filtering results for b the Frangi filter \(\mathcal {V}^\mathrm {Fr}(f)\) and our two methods c \(\mathcal {V}^{\xi \eta }(f)\) resp. d \(\mathcal {V}^{\mathbf {a}, \mathbf {b},\mathbf {c}}(f)\) (left to right). From [24]

4.4.3 Crossing-preserving multi-scale vesselness

Frangi’s vesselness filter [29], which is based on geometric relations between the principal curvatures (eigenvalues of the Hessian second-order matrix) to extract cylindrical shapes, has been extended to the orientation score domain, which makes it crossing-preserving [33].

In a single scale layer of the OS transform, the SE(2) vesselness is expressed as Frangi’s vesselness formulation [29]:

with typically \(\sigma _1=0.5\) and \(\sigma _2=0.2\;||\mathcal {S}||_\infty \).

The coordinates for the calculation of the second-order derivatives can be defined with two possible orthogonal frames: the local moving frame of reference \(\{\partial _\xi ,\partial _\eta ,\partial _\theta \}\) determined by the tangent and normal of the orientation score geodesics, or the gauge frame \(\{\partial _{\mathbf {a}}\), \(\partial _{\mathbf {b}}\), \(\partial _{\mathbf {c}}\}\) [31] determined by the eigendirections of the Hessian matrix \((\mathcal {H}^{s,\beta }\,\mathcal {U}_f^a)(\mathbf {g})\) at scale s and \(\mathbf {g}\in {\hbox {SE}}(2)\), normalized w.r.t. the \(\beta \)-metric. For the first we get:

where the superscripts \(^{s,\beta }\) indicate Gaussian derivatives at spatial scale \(s=\frac{1}{2}\sigma _s^2\) and angular scale \(\frac{1}{2}(\beta \sigma _s)^2\).

The second frame leads to (with \(\lambda _i\) the eigenvalues of the left-invariant Hessian):

with \(c=\frac{1}{2}(\lambda _2+\lambda _3)\), which can be interpreted as orientation confidence as defined by [31]. The SE(2) multi-scale vesselness is computed by summation over all scales. Figure 8 shows that the multi-scale vesselness gives much better results at crossings and bifurcations. The gauge frame \(\mathcal {V}^{\mathbf {a},\mathbf {b},\mathbf {c}}\) gives the best results. For more details, see [33].

4.5 Multi-orientation vessel tracking

Vessel tracking has the advantage over pixel classification that it guarantees connectedness of vessel segments. We have developed tracking via orientation scores [6], so classical difficulties as crossings, bifurcations, closely parallel vessels and vessels of varying width and high curvature can be dealt with naturally.

To handle bifurcations properly, one-sided kernels are exploited, constructed by decomposition of orientations scores in two opposite directions, weighted in the radial direction with an error function (cumulative Gaussian).

Two tracking methods are developed: Edge Tracking based on Orientation Scores (ETOS) and Centerline Tracking based on multi-scale Orientation Scores (CTOS). ETOS tracks both edges of the vessel simultaneously. The edge positions are detected in the orientation score, as a local minimum (left edge) and maximum (right edge) from the anti-symmetric imaginary part of the tangent plane. CTOS exploits the fact that the disturbing vessel central light reflex is filtered out by Gabor kernels with proper scales. The invertible orientation score has some distinct advantages over Gabor filtering: much lower computational costs as the orientation score filters for all scales simultaneously, whereas the Gabor filter only calculates a single spatial frequency at a time, and the orientation score filtering is more accurate.

Overall, ETOS outperforms CTOS. ETOS gives best results when applied on invertible orientation scores, can deal with many complex geometries, and gives reliable width measurements.

The algorithm performs well: ETOS detected 76 % (290/381) of the bifurcations and 96 % (109/114) of the crossings correctly. Most mistakes in bifurcations were actually crossings, only 5 % was misclassified. For more details, see [6].

4.5.1 Sub-Riemannian geodesics in SE(2)

In the orientation score, vessels can also be tracked as geodesic curves [8, 9, 52]. Geodesics (optimal curves minimizing some curve-length functional) are found by making proper use of the curved geometry of the domain. On the space of positions and orientations, a Riemannian geometry is defined, in which distances between tangent vectors are defined relative to the location in the score (e.g., via the left-invariant \(\{\partial _\xi ,\partial _\eta ,\partial _\theta \}\)-frame). Furthermore, since not all curves in the lifted domain SE(2) are natural some directions are prohibited, resulting in the definition of a sub-Riemannian geometry (compare this with the restricted motion of a car). Similarly, the paths of vessels are continuous and they do not exhibit sudden jumps to the left or the right.

Data-adaptive sub-Riemannian geodesics give good and smooth tracking results, also over crossings. From [8]

The algorithms for sub-Riemannian geodesic extraction are implemented via an anisotropic fast marching scheme [49, 52]. As a result, the curve extraction procedure is both fast and robust. In summary, the sub-Riemannian geodesic extraction in SE(2) allows for the robust extraction of curves because: (1) crossing structures are disentangled in the orientation scores, and (2) high curvature (e.g., discrete jumps to the left or right, or sudden change of direction) is punished due to the restricted Sub-Riemannian geometry. See examples in Fig. 9.

4.6 Arterio-venous ratio (AVR)

The separation of vessels in arteries and veins is crucial, as their different physiology, flow and mechanical properties respond differently to disease development, e.g., arteriolar narrowing, a decrease of the artery calibers relatively to the vein calibers is an important early biomarker for diabetic retinopathy. Also tortuosity measures are expected to be different for arteries and veins [39]. An automated artery–vein classification is required for high-volume screening.

The ratio of the arteriolar and venular diameters is called the arteriovenous ratio (AVR) and is classically computed from the six widest arteries and veins in a restricted zone around the optic disk [42].

We developed a novel method for artery/vein classification [25] based on local and contextual feature analysis of retinal vessels. Features are (a) the color, as arteries appear brighter and veins darker due to the oxygen content of the blood, (b) the transverse intensity profile, as arteries have a more pronounced central reflex to the camera flash, and (c) graph path properties of crossings and bifurcations of vessels, as these provide contextual information, because arteries never cross arteries and veins always cross arteries [17].

A non-submodular energy function is defined, integrating the features, and optimized exactly by means of graph cuts. The method was validated with a ground truth data set of 150 fundus images. An accuracy of 88.0 % was achieved for all vessels and 94.0 % for the 6 widest vessels. The contextual information especially benefits the classification of smaller vessels. For more details see [25].

The pipeline for calculating the fractal dimension from a color fundus image. From [35]

4.7 Fractal dimension

The concept of fractal dimension was initially defined and developed in mathematics. It measures the complexity of self-similar objects that have the same patterns across different scales, e.g., trees and snowflakes. The vascular tree on the human retina also has self-similar branching patterns over different scales. Therefore, there is a growing interest in retinal fractal analysis, exploiting fractal dimension as a biomarker for discriminating healthy from diabetic retinopathy.

Fractal dimensions have been widely investigated. However, conflicting findings are found [3, 12]. This motivated us to especially investigate the stability and reproducibility of fractal dimension measurements.

The retinal vascular network is extracted by the method described in Sect. 4.4.1. The region-of-interest (ROI) must be carefully specified for fractal dimension calculation, because it is a global measurement and different fundus cameras have different field-of-views. The ROI is determined via firstly locating the optic nerve head by the template-matching-based method as described in Sect. 4.3, and the optic disk radii are obtained. The fovea position is found at the global minimal intensity in a small region with 5*OD radii lateral distance to the optic nerve head. For the fovea resp. ONH-centered images, the circular ROI mask is centered at the fovea centralis, resp. ONH. For the fractal dimension calculation, we used the box counting method which paves the full retinal ROI image with square boxes (tiles) with different side-length (different scales) [48]. In each box, various measurements are done: counting the number of boxes that overlap with the vessels, calculating the entropy of each box and calculating the probability of finding vessels near each pixel. Finally, these measurements are plotted against the box side-length on a log-log plot, and the fractal dimension is the slope of the fitting regression line. See Fig. 10.

Top row pipeline of the BICROS method. a input image, b preprocessing result, c construction of the orientation score, d candidate selection, and e feature extraction and classification into bifurcations (orange) and crossings (blue). Bottom row The orientation column at a bifurcation point is f overlayed on an image with a polar plot, and g plotted against the orientation, where the dominant orientations are depicted in red. h A volume rendering of the orientation score. From [55] (color figure online)

We examined the stability of the fractal dimension measurements with respect to a range of variable factors: (1) different vessel annotations obtained from human observers, (2) different automatic segmentation methods, (3) different regions-of-interest, (4) different accuracy of vessel segmentation methods, and (5) different imaging modalities. Our results demonstrate that the relative errors for the measurement of fractal dimensions are significant and vary considerably according to the image quality, modality and the technique used for measuring it. So automated and semi-automated methods for the measurement of fractal dimension are not stable enough, which makes fractal dimension not a proper biomarker in quantitative clinical applications [35].

4.8 Geometric vessel features

4.8.1 Bifurcations/crossings detection

We have developed a fully automatic bifurcation and crossing detection algorithm called Biologically Inspired CRossing detecting in Orientation Scores (BICROS) [55], see Fig. 11, which does not depend on vessel segmentations. The precision of the junction detections is high, and the method can discriminate with high accuracy between crossings and bifurcations. Interestingly, junctions of small vessels, which are typically missing in vessel segmentations, are well detected by BICROS (Fig. 12). Through this, the proposed hybrid method outperforms state-of-the-art techniques [5]. It performs well on both RGB color and noisy SLO retinal images. It provides the orientation-augmented landmarks for image stitching, and because the orientations and the tracks of the branches are provided, useful other biomarkers can be extracted such as segment lengths and bifurcation angles.

Automated vessel bifurcation (yellow) and vessel crossing (red) detection. From [55] (color figure online)

4.9 Curvature/tortuosity

Vessel curvature is an important biomarker [13, 34, 39, 53]. Most methods require pre-segmentation and center line extraction, but our brain-inspired orientation score method [11] works directly on the image data by fitting so-called exponential curves in the orientation score. An exponential curve is a curve whose spatial projection has a constant curvature (Fig. 13).

The curvature values are computed directly from tangent vectors of exponential curves that locally best fit the data:

The coefficients of the tangent vector \({c_\xi ,c_\eta ,c_\theta }\) are expressed in the moving frame (see Eq. (1), [24, 30]).

SE(2) tortuosity measures per voxel. From [11]

Box-and-whisker plots of tortuosity measures \(\mu _{|\kappa |}\) and \(\sigma _{|\kappa |}\) in subgroups of the HRF and MESSIDOR database. From [11]

Principal directions are calculated from the eigenvectors of the Hessian matrix, but now in a curved domain, for which we need left-invariant derivatives:

As left-invariant derivatives do not commutate, e.g., \(\partial _\theta \partial _\xi U \ne \partial _\xi \partial _\theta U\), we need to make the asymmetric Hessian matrix symmetric via

so we can calculate the eigensystem of \(\mathcal {H}_\mu U\). The diagonal matrix \(M_{\mu ^{-2}}\) compensates for the difference in units between the orientation and spatial dimensions, for details see [11].

A confidence measure is acquired by calculating the Gaussian Laplacian in the plane orthogonal to the tangent direction of the vascular geodesic. From all pixelwise curvature measures, several global measures are derived, as the mean and standard deviation. The method was validated on synthetic and retinal images.

Tortuosity measures from the MESSIDOR database (1200 images) showed significant increase of tortuosity with the increasing severity of diabetic retinopathy (R0, R1, R2 and R3), see Fig. 14. For more details, see [11].

5 Next steps

The computer-aided diagnosis algorithms are mostly written in Mathematica 10 (Wolfram Research Inc.). Processing times are 1–15 min per image, but this is shortened by using multi-core (32) servers, both in China as in the Netherlands, and developing GPU-based implementations, in particular for the OS-based algorithms.

A dedicated workstation has been designed, to program any pipeline of processes, in batch mode, and with automated report generation (see Fig. 15, see also [19]). Data are acquired with different fundus cameras (EasyScan, DRS, Topcon). The workstation is also used for annotations: at least three experienced ophthalmology experts diagnose and annotate the bio-markers on the fundus image datasets as ground truth or reference standard. We have established a detailed workflow protocol to minimize variations.

The crucial validation phase, not reported in this, paper, is ongoing. The algorithms are currently evaluated in the collaborating hospitals: image and extensive metadata have been acquired of 3000 diabetes patients in Shengjing Hospital, and over 20.000 normal images with first expert reading are acquired at He Vision shops in Shenyang, China. Studies are ongoing to correlate the rich set of geometric biomarkers described in this paper with the extensive Chinese metadata obtained in the clinical setting, and find the most predictive and effective (combination of) geometric biomarkers extracted from the retinal fundus images for DR, glaucoma, and AMD.

6 Conclusion

The brain-inspired multi-orientation approach turned out to be highly successful in dealing with many complex vessel geometries, such as crossings, bifurcations, curvature analysis without segmentation, enhancement and segmentation. The theory for orientation scores in SE(2) is now well developed. The generalized approach of a Lie-group analysis by the visual front-end is currently a broad topic of research. The paper gives a review of many current geometric developments for retinal vessel analysis with the goal of establishing biomarkers for screening for early signs of a range of systemic, neurodegenerative and cardiovascular diseases.

In the parallel deep machine learning field major developments take place. The RetinaCheck team ended at 17th position in the recent Kaggle challenge on Diabetic Retinopathy classification (https://www.kaggle.com/c/diabetic-retinopathy-detection), of 661 participating teams. It can be foreseen that the merge of geometric analysis and reasoning with deep learning, based on the large volumes of data acquired in this project, can be highly successful. This is ongoing development, and will be reported in forthcoming papers.

References

Abbasi-Sureshjani, S., Smit-Ockeloen, I., Zhang, J., ter Haar Romeny, B.M.: Biologically-inspired supervised vasculature segmentation in SLO retinal fundus images. In: Kamel, M., Campilho, A. (eds.) Image Analysis and Recognition, Volume 9164 of Lecture Notes in Computer Science, pp. 325–334. Springer (2015)

Alexander, D., van Leeuwen, C.: Mapping of contextual modulation in the population response of primary visual cortex. Cogn. Neurodyn. 4, 124 (2012)

Aliahmad, R.B., Kumar, D.K., Sarossy, M.G., Jain, R.: Relationship between diabetes and grayscale fractal dimensions of retinal vasculature in the Indian population. BMC Ophthalmol. 14(1), 152–172 (2014)

Aquino, A., Gegundez-Arias, M.E., Marin, D.: Detecting the optic disc boundary in digital fundus images using morphological, edge detection, and feature extraction techniques. IEEE TMI 29(11), 1860–9 (2010)

Azzopardi, G., Petkov, N.: Automatic detection of vascular bifurcations in segmented retinal images using trainable COSFIRE filters. Comput. Anal. Images Patterns 34(8), 922–933 (2013)

Bekkers, E.J., Duits, R., Berendschot, T.T.J.M., ter Haar Romeny, B.M.: A multi-orientation analysis approach to retinal vessel tracking. J. Math. Imaging Vis. 49(3), 583–610 (2014)

Bekkers, E.J., Duits, R., Loog, M.: Training of templates for object recognition in invertible orientation scores: application to optic nerve head detection in retinal images. In: Tai, X.-C., Bae, E., Chan, T., Lysaker, M. (eds.) Energy Minimization Methods in Computer Vision and Pattern Recognition, Volume 8932 of Lecture Notes in Computer Science, pp. 464–477. Springer (2015)

Bekkers, E.J., Duits, R., Mashtakov, A., Sanguinetti, G.R.: Data-driven sub-riemannian geodesics in SE(2). In: Aujol, J.-F., Nikolova, M., Papadakis, N. (eds.) Scale Space and Variational Methods in Computer Vision, Volume 9087 of Lecture Notes in Computer Science, pp. 613–625. Springer (2015)

Bekkers, E.J., Duits, R., Mashtakov, A., Sanguinetti, G.R.: A PDE approach to data-driven sub-riemannian geodesics in SE(2). SIAM J. Imaging Sci. 8(4), 2740–2770 (2015)

Bekkers, E.J., Duits, R., ter Haar Romeny, B.M.: Optic nerve head detection via group correlations in multi-orientation transforms. In: Campilho, A., Kamel, M. (eds.) Image Analysis and Recognition, Volume 8815 of Lecture Notes in Computer Science, pp. 293–302. Springer (2014)

Bekkers, E.J., Zhang, J., Duits, R., ter Haar Romeny, B.M.: Curvature-based biomarkers for diabetic retinopathy via exponential curve fits in SE(2). In: Liu, J., Trucco, E., Xu, Y., Chen, X., Garvin, M. K. (eds.) Proceedings of the Ophthalmic Medical Image Analysis Second International Workshop, OMIA 2015, held in conjunction with MICCAI 2015, Lowa Research Online, Munich, Germany, 9 October 2015, pp. 113–120

Broe, R., Rasmussen, M.L., Frydkjaer-Olsen, U., Olsen, B.S., Mortensen, H.B., Peto, T., Grauslund, J.: Retinal vascular fractals predict long-term microvascular complications in type 1 diabetes mellitus: the Danish Cohort of Pediatric Diabetes 1987 (DCPD1987). Diabetologia 57(10), 2215–2221 (2014)

Cheung, C.Y., Lamoureux, E., Ikram, M.: Retinal vascular geometry in asian persons with diabetes and retinopathy. J. Diabetes Sci. Technol. 6(3), 595–605 (2012)

Citti, A., Sarti, G.: A cortical based model of perceptual completion in the roto-translation space. J. Math. Imaging Vis. 24(3), 307–326 (2006)

Citti, G., Sarti, A.: Neuromath. Vis. Springer, Berlin (2014)

Dalal, N., Triggs. B.: Histograms of oriented gradients for human detection. In: Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on volume 1, vol. 1, pp. 886–893 (2005)

Dashtbozorg, B., Mendonça, A.M., Campilho, A.: An automatic graph-based approach for artery/vein classification in retinal images. IEEE Trans. Image Process. 23(3), 1073–10832 (2015)

Dashtbozorg, B., Mendonça, A.M., Campilho, A.: Optic disc segmentation using the sliding band filter. Comput. Biol. Med. 56, 1–12 (2015)

Dashtbozorg, B., Mendonça, A.M., Penas, S., Campilho, A.: RetinaCAD, a system for the assessment of retinal vascular changes. In: 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp. 6328–6331 (2014)

Duits, R., Boscain, U., Rossi, F., Sachkov, Y.: Association fields via cuspless sub-riemannian geodesics in SE(2). J. Math. Imaging Vis. 49(2), 384–417 (2014)

Duits, R., Felsberg, M., Granlund, G., ter Haar Romeny, B.: Image analysis and reconstruction using a wavelet transform constructed from a reducible representation of the Euclidean motion group. Int. J. Comput. Vis. 72(1), 79–102 (2007)

Duits, R., Franken, E.: Left-invariant parabolic evolutions on SE(2) and contour enhancement via invertible orientation scores part I: Linear left-invariant diffusion equations on SE(2). Q. Appl. Math. 68(2), 255–292 (2010)

Duits, R., Franken, E.M.: Left invariant parabolic evolution equations on SE(2) and contour enhancement via invertible orientation scores, part II: Nonlinear left-invariant diffusion equations on invertible orientation scores. Q. Appl. Math. AMS 68, 293–331 (2010)

Duits, R., Janssen, M.H.J., Hannink, J., Sanguinetti, G.R.: Locally adaptive frames in the roto-translation group and their applications in medical imaging. arXiv:1502.08002v5 [math.GR] (2015)

Eppenhof, K., Bekkers, E.J., Berendschot, T.T.J.M., Pluim, J., ter Haar Romeny, B.M.: Retinal artey/vein classification via graph cut optimization. In: Liu, J., Trucco, E., Xu, Y., Chen, X., Garvin, M.K. (eds.) Proceedings of the Ophthalmic Medical Image Analysis Second International Workshop, OMIA 2015, held in conjunction with MICCAI 2015, Lowa Research Online, Munich, Germany, pp. 121–128, 9 October 2015

Feng, J., Berendschot, T.T.J.M., ter Haar Romeny, B.M.: Quality classification of retinal fundus images by image structure clusters and random forests. BME Report, BMIA2014-11 (2014)

Field, D., Hayes, A., Hess, R.F.: Contour integration by the human visual system: evidence for a local ‘association field ’. Vis. Res. 33–2, 173–193 (1993)

Foracchia, M., Grisan, E., Ruggeri, A.: Luminosity and contrast normalization in retinal images. Med. Image Anal. 9(3), 179–190 (2005)

Frangi, A.F., Niessen, W.J., Vincken, K.L., Viergever, M.A.: Muliscale vessel enhancement filtering. In: Proceedings of the First International Conference on Medical Image Computing and Computer-Assisted Intervention, Lecure Notes in Computer Science, IEEE Computer Society Press, pp. 130–137 (1998)

Franken, E., Duits, R., ter Haar Romeny, B.M.: Nonlinear diffusion on the 2D Euclidean motion group. In: Sgallari, F., Murli, A., Paragios, N. (eds.) Scale Space and Variational Methods in Computer Vision: Proceedings of the First International Conference, SSVM 2007, Ischia, Italy, volume 4485 of Lecture Notes in Computer Science, pp. 461–472. Springer, Berlin, May–June (2007)

Franken, E.M., Duits, R.: Crossing-preserving coherence-enhancing diffusion on invertible orientation scores. Int. J. Comput. Vis. 85(3), 253–278 (2009)

ter Haar Romeny, B.M.: Geometry-Driven Diffusion in Computer Vision volume 1 of Computational Imaging and Vision Series. Kluwer Academic Publishers, Dordrecht (1994)

Hannink, J., Duits, R., Bekkers, E.J.: Crossing-preserving multi-scale vesselness. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) Medical Image Computing and Computer-Assisted Intervention MICCAI 2014, Volume 8674 of Lecture Notes in Computer Science, pp. 603–610. Springer (2014)

Hart, W.E., Goldbaum, M., Kube, P., Nelson, M.R.: Measurement and classification of retinal vascular tortuosity. IJMI 53, 239–252 (1999)

Huang, F., Zhang, J., Bekkers, E.J., Dashtbozorg, B., ter Haar Romeny, B.M.: Stability analysis of fractal dimensions in retinal vasculature. In: Liu, J., Trucco, E., Xu, Y., Chen, X., Garvin, M.K. (eds.) Proceedings of the Ophthalmic Medical Image Analysis Second International Workshop, OMIA 2015, held in conjunction with MICCAI 2015, Lowa Research Online, Munich, Germany, pp. 1–8, 9 October 2015

Hubbard, L.D., et al.: Methods for evaluation of retinal microvascular abnormalities associated with hypertension/sclerosis in the Atherosclerosis Risk in Communities Study. Ophthalmology 106(12), 2269–80 (1999)

Hubel, D.H., Wiesel, T.N.: Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 160, 106–154 (1962)

Jonas, J.B., Budde, W.M., Panda-Jonas, S.: Ophthalmoscopic Evaluation of the Optic Nerve Head. Surv. Opthalmol. 43(4), 293–320 (1999)

Kalitzeos, A.A., Lip, G.Y., Heitmar, R.: Retinal vessel tortuosity measures and their applications. Exp. Eye Res. 106, 40–46 (2013)

Kandel, E.R., Schwartz, J.H., Jessel, T.M.: Principles of Neural Science, 5th edn. McGraw-Hill, New York (2013)

Kanski, J.J., Bowling, B.: Synopsis of Clinical Ophthalmology. Elsevier Health Sciences, Amsterdam (2012)

Knudtson, M.D., Lee, K.E., Hubbard, L.D., Wong, T.Y., Klein, R., Klein, B.E.K.: Revised formulas for summarizing retinal vessel diameters. Curr. Eye Res. 27(3), 143–149 (2003)

Koenderink, J.J.: The structure of images. Biol. Cybern. 50, 363–370 (1984)

Lindeberg, T.: Scale-Space Theory in Computer Vision. Springer, Berlin (1994)

Lu, S.: Accurate and efficient optic disc detection and segmentation by a circular transformation. IEEE TMI 30(12), 2126–2133 (2011)

Lu, S., Lim, J.H.: Automatic optic disc detection from retinal images by a line operator. Biomed. Eng. IEEE TBME 58(1), 88–94 (2011)

Mahfouz, A.E., Fahmy, A.S.: Fast localization of the optic disc using projection of image features. IEEE TIP 19(12), 3285–3289 (2010)

Masters, B.R.: Fractal analysis of the vascular tree in the human retina. Annu. Rev. Biomed. Eng. 6, 427–452 (2004)

Mirebeau, J.-M.: Anisotropic fast-marching on Cartesian grids using lattice basis reduction. SIAM J. Numer. Anal. 52(4), 1573–1599 (2014)

Niemeijer, M., Abrmoff, M.D., van Ginneken, B.: Image structure clustering for image quality verification of color retina images in diabetic retinopathy screening. Med. Image Anal. 10(6), 888–898 (2006)

Ramakanth, S.A., Babu, R.V.: Approximate nearest neighbour field based optic disk detection. Comput. Med. Imaging Graph. 38(1), 49–56 (2014)

Sanguinetti, G., Bekkers, E., Duits, R., Janssen, M.H.J., Mashtakov, A., Mirebeau, J.: Sub-Riemannian fast marching in SE(2). In: Pardo, A., Kittler, J. (eds.) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications, Volume 9423 of Lecture Notes in Computer Science, pp. 366–374. Springer (2015)

Sasongko, M.B., Wong, T.Y., Nguyen, T.T., et al.: Retinal vascular tortuosity in persons with diabetes and diabetic retinopathy. Diabetologia 54(9), 2409–2416 (2011)

Sinthanayothin, C., Boyce, J.F., Cook, H.L., Williamson, T.H.: Automated localisation of the optic disc, fovea, and retinal blood vessels from digital colour fundus images. Br. J. Ophthalmol. 83(8), 902–910 (1999)

Smit-Ockeloen, I.: Automatic detection of vascular bifurcations and crossings in retinal images using orientation scores. Master’s thesis, Eindhoven University of Technology, Department or Biomedical Engineering (2015)

Soares, J.V.B., Leandro, J.J.G., Cesar, R.M., Jelinek, H.F., Cree, M.J.: Retinal vessel segmentation using the 2-d gabor wavelet and supervised classification. Med. Imaging IEEE Trans. 25(9), 1214–1222 (2006)

Staal, J., Abràmoff, M.D., Niemeijer, M., Viergever, M.A., van Ginneken, B.: Ridge-based vessel segmentation in color images of the retina. Med. Imaging IEEE Trans. 23(4), 501–509 (2004)

ter Haar Romeny, B.M.: Front-End Vision and Multi-Scale Image Analysis: Multi-Scale Computer Vision Theory and Applications, Written in Mathematica, Volume 27 of Computational Imaging and Vision Series. Springer, Berlin (2003)

ter Haar Romeny, B.M.: The differential structure of images. In: Lakshminarayanan, V. (ed.) Mathematical Optics: Classical Quantum and Computational Methods, pp. 565–582. CRC Press, Boca Raton (2013)

ter Haar Romeny, B.M.: A geometric model for the functional circuits of the visual front-end. In: Grandinetti, L., Lippert, T., Petkov, N. (eds.) Brain-Inspired Computing, Volume 8603 of Lecture Notes in Computer Science, pp. 35–50. Springer International Publishing (2014)

van Almsick, M.: Context Models of Lines and Contours. Ph.D. thesis, Eindhoven University of Technology (2007)

Weickert, J.A.: Anisotropic diffusion in image processing. Ph.D. thesis, University of slautern, Department of Mathematics, Kaiserslautern, Germany (1996)

Xu, J., Ishikawa, H., Wollstein, G., Schuman, J.S.: Retinal vessel segmentation on SLO image. In: Engineering in Medicine and Biology Society, 2008. EMBS 2008. 30th Annual International Conference of the IEEE, IEEE, pp. 2258–2261 (2008)

Yu, H., et al.: Fast localization and segmentation of optic disk in retinal images using directional matched filtering and level sets. IEEE TITB 16(4), 644–57 (2012)

Zhang, J., Bekkers, E.J., Abbasi, S., Dashtbozorg, B., ter Haar Romeny, B.M.: Robust and fast vessel segmentation via Gaussian derivatives in orientation scores. In: ICIAP 2015, volume 9279 of Lecture Notes in Computer Science. Springer, Berlin, pp. 537–547 (2015)

Zhang, J., Duits, R., Sanguinetti, G., ter Haar Romeny, B.M., Numerical Approaches for Linear Left-invariant Diffusions on SE(2), their Comparison to Exact Solutions, and their Applications in Retinal Imaging. Numer. Math. Theory Methods Appl. 9(1):1–50 (2016)

Acknowledgments

The work is part of the Hé Programme of Innovation Cooperation, which is (partly) financed by the Netherlands Organisation for Scientific Research (NWO), dossier nr: 629.001.003. The work is also financed by a Lilly Collaborative Research Grant from the European Foundation for the Study of Diabetes EFSD together with the Chinese Diabetes Society, and by the EU Marie Curie program ‘Metric Analysis For Emergent Technologies’ (MAnET), Grant Agreement No.: 607643.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

ter Haar Romeny, B.M., Bekkers, E.J., Zhang, J. et al. Brain-inspired algorithms for retinal image analysis. Machine Vision and Applications 27, 1117–1135 (2016). https://doi.org/10.1007/s00138-016-0771-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00138-016-0771-9