Abstract

Objective

To test whether a reduction in third-generation cephalosporin (3GC) use has a sustainable positive impact on the high endemic prevalence of 3GC-resistant K. pneumoniae and E. coli.

Design

Segmented regression analysis of interrupted time series was used to analyse antibiotic consumption, and resistance data were compared between the pre- and post-intervention periods, covering 30 months before and 30 months after the intervention.

Setting

A 16-bed surgical intensive care unit (ICU) in a teaching hospital.

Intervention

In July 2004, piperacillin in combination with a β-lactamase inhibitor replaced 3GCs as standard therapy for peritonitis and other intra-abdominal infections.

Results

Segmented regression analysis showed that the intervention achieved a significant and sustainable decrease in 3GC use of −110.2 defined daily doses (DDD) per 1,000 patient-days (pd): 3GC use decreased from a level of 178.9 DDD/1,000 pd before to 68.7 DDD/1,000 pd after the intervention. The intervention resulted in a mean estimated reduction in total antibiotic use of 27%. Piperacillin/tazobactam use showed a significant increase in level of 64.4 DDD/1,000 pd and continued to increase by 2.3 DDD/1,000 pd per month after the intervention. The intervention was not associated with a significant change in the resistance densities of 3GC-resistant K. pneumoniae and E. coli.

Conclusion

Reducing 3GC use does not necessarily have a positive impact on the resistance situation in the ICU setting. Likewise, replacing 3GCs with piperacillin plus a β-lactamase inhibitor might exert selection pressure on 3GC-resistant E. coli and K. pneumoniae. To improve the resistance situation, it might not be sufficient to restrict interventions to a risk area.


Introduction

There is universal agreement that the emergence of bacterial resistance is related to the selective pressure exerted by antimicrobial use. This suggests that reducing antibiotic use should bring down resistance. Unfortunately, however, antibiotic use in hospitals is difficult to reduce, and reducing it does not always prove effective [1, 2]. Furthermore, in 2003, a Cochrane systematic review of interventions to improve antibiotic prescribing concluded that there is limited evidence of sustained improvement in antibiotic use over time, largely because of fundamentally flawed study methodology [3]. The problem is particularly pressing for multidrug-resistant pathogens, including Enterobacteriaceae producing extended-spectrum beta-lactamases (ESBL).

During the past two decades, broad-spectrum cephalosporins have been used worldwide, and ESBL-positive strains have emerged since ESBL production was first described in K. pneumoniae isolates in Germany in 1983 [4]. Seriously ill patients with prolonged hospital stays and invasive devices are generally at risk of acquiring ESBL-producing pathogens [5]. Heavy antibiotic use is another well-known risk factor, and several studies have found a positive relationship between third-generation cephalosporin (3GC) use and the acquisition of ESBL-producing organisms [6, 7]. Rahal et al. [8] showed that restricting cephalosporins controlled cephalosporin resistance in nosocomial Klebsiella. However, this was followed by an increase in the rate of imipenem-resistant P. aeruginosa. This effect, known as ‘squeezing the balloon’, underscores the problems of systematic drug class restriction, because it may lead to the emergence of resistance to other drug classes.

In view of these problems, the purpose of this study was to test whether reducing third-generation cephalosporin use has a sustainable positive impact on the high endemic prevalence of third-generation cephalosporin-resistant K. pneumoniae and E. coli in a surgical intensive care unit (ICU). To this end, we used segmented regression analysis, as recommended by the Cochrane review [3], and examined the ecology of the ICU, i.e., changes in overall antibiotic use and in other Gram-positive and Gram-negative bacteria.

Methods

Setting

The surgical ICU studied is a 16-bed unit in an 861-bed teaching hospital in Germany. In 2004, 54.3% of the patients on the ward had undergone visceral surgery, 24.3% trauma surgery, 8.2% urological surgery and 5.2% gynaecological surgery; 7.9% were medical patients. Visceral surgery is mainly performed for secondary peritonitis and pancreatitis, while trauma surgery focuses on acute trauma care and spinal surgery. The mean APACHE II score was 15 in 2004; it was not recorded in later years. The nurse/patient ratio is 1:3. Patients are cared for by three physicians in the morning, two in the afternoon and one at night. The mean length of stay was 6.3 days.

Of the physicians, 76% are anaesthetists. A senior physician is always present during the morning shift.

Patients are screened for MRSA (nose and groin swabs) if they have had contact with other MRSA patients, have chronic devices in place or chronic wounds, have received antibiotic therapy for longer than three days, or have been transferred from another hospital, ICU, nursing home or from abroad. In ventilated patients, tracheal secretions are screened twice weekly. Otherwise, microbiological samples are taken whenever infection is suspected. In addition to contact precautions, all patients with MRSA, VRE or ESBL-producing organisms are isolated in single rooms. Training in infection control takes place once or twice a year.

The ICU joined the Krankenhaus Infektions Surveillance System (KISS) in 1997 and SARI (Surveillance of Antibiotic Use and Resistance in ICUs) in 2002. The decision to revise the antimicrobial guidelines was prompted by an increase in 3GC resistance in K. pneumoniae and by the ICU's outlier status compared with other ICUs participating in SARI. In 2003, the pooled mean resistance proportion (RP) of 3GC-resistant K. pneumoniae in SARI ICUs was 2.4%, whereas in the study ICU it was as high as 29.0%. The corresponding pooled mean for 3GC-resistant E. coli in SARI ICUs was 1.8%, compared with 6.7% in the study ICU. Furthermore, 3GC use in the study ICU was 191 DDD/1,000 patient-days (pd), whereas it was below 138 DDD/1,000 pd in 75% of the other SARI ICUs. Total antibiotic use was similar to that of other SARI ICUs (pooled mean 1,346 DDD/1,000 pd in 2003).

Intervention

In June 2004, clinical practice and the written guidelines on empiric antibiotic treatment in the ICU were revised on the basis of the local resistance situation; the revised guidelines took effect in July 2004. The changes in empiric therapy were initiated by the microbiology department, which is also responsible for infection control. Education sessions on the antibiotic guidelines were held in the departments of surgery and anaesthesiology. Implementation of the guidelines in the ICU was the responsibility of a team of the intensive care specialists in charge of the ward and the responsible microbiologists, and had the support of the heads of both departments.

Before the intervention, the ICU used 3GCs in combination with metronidazole as first-line empiric therapy for peritonitis originating from the lower gastrointestinal tract. 3GCs were also used to treat nosocomial pneumonia or urinary tract infections if the pathogens tested susceptible. Ampicillin with a β-lactamase inhibitor was administered for 5–7 days as infection prophylaxis for open fractures. The revision of the guidelines focused on reducing 3GC use, a known risk factor for the acquisition of ESBL-positive Enterobacteriaceae. From July 2004, empiric standard therapy for peritonitis and other intra-abdominal infections was switched from 3GCs to piperacillin in combination with a β-lactamase inhibitor. In addition, antibiotic prophylaxis for open fractures was shortened to a single pre-operative dose.

Data collection

Monthly data on antimicrobial use from January 2002 through December 2006 were obtained from the computerised pharmacy database within the framework of project SARI (Surveillance of antimicrobial use and antimicrobial resistance in Intensive Care Units).

Monthly resistance data, including all clinical and surveillance isolates, were collected from the microbiology laboratory. Isolates were classified as resistant by the clinical laboratory using the interpretive criteria recommended by the Clinical and Laboratory Standards Institute (CLSI). Copy strains, defined as isolates of the same species showing the same susceptibility pattern in the same patient within a period of 1 month, irrespective of the site of isolation, were excluded.
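
The copy-strain rule can be sketched as a de-duplication step. This is a minimal illustration only: the `Isolate` record and `remove_copy_strains` function are hypothetical, and the sketch assumes that "1 month" means a calendar month, which the text does not specify (a rolling 30-day window would be an equally plausible reading).

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Isolate:
    """Hypothetical isolate record; field names are illustrative only."""
    patient_id: str
    species: str
    sampled_on: date
    pattern: tuple   # e.g. (("cefotaxime", "R"), ("piperacillin", "S"))
    site: str = ""   # recorded, but ignored when detecting copy strains


def remove_copy_strains(isolates):
    """Keep the first isolate per patient, species, susceptibility pattern and month.

    Assumes '1 month' means a calendar month. The site of isolation is not part
    of the key, so the same strain from blood and urine is counted once.
    """
    seen = set()
    kept = []
    for iso in sorted(isolates, key=lambda i: i.sampled_on):
        key = (iso.patient_id, iso.species, tuple(sorted(iso.pattern)),
               iso.sampled_on.year, iso.sampled_on.month)
        if key not in seen:
            seen.add(key)
            kept.append(iso)
    return kept


pat = (("cefotaxime", "R"), ("piperacillin", "R"))
isolates = [
    Isolate("p1", "E. coli", date(2005, 3, 2), pat, "urine"),
    Isolate("p1", "E. coli", date(2005, 3, 20), pat, "blood"),  # copy strain: dropped
    Isolate("p1", "E. coli", date(2005, 4, 1), pat, "urine"),   # new month: kept
    Isolate("p2", "E. coli", date(2005, 3, 5), pat, "wound"),
]
print(len(remove_copy_strains(isolates)))  # 3
```

Under the calendar-month assumption, the same strain found again in the following month is counted again, which is how a long ICU stay could lead to an isolate being counted twice.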

Data analysis

Consumption, i.e., antimicrobial usage density (AD), was expressed as defined daily doses (DDD) per 1,000 patient-days. One DDD is the standard adult daily dose of an antimicrobial agent for one day's treatment, as defined by the WHO (ATC/DDD index 2006, http://www.whocc.no). Sulbactam was excluded when calculating total antibiotic use.
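
As a worked illustration of the AD definition, the hypothetical helpers below convert grams dispensed into DDDs and normalise them per 1,000 patient-days. The substance names and DDD values in the usage lines are placeholders, not values from the WHO ATC/DDD index, and excluding sulbactam by substance name is an assumption about how the exclusion might be implemented.

```python
def usage_density(grams_dispensed, ddd_grams, patient_days):
    """Antimicrobial usage density (AD): DDD per 1,000 patient-days."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    ddds = grams_dispensed / ddd_grams  # number of defined daily doses
    return 1000 * ddds / patient_days


def total_usage_density(records, patient_days, exclude=("sulbactam",)):
    """Total AD over (substance, grams, ddd_grams) records, skipping excluded substances."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    total_ddds = sum(grams / ddd for name, grams, ddd in records if name not in exclude)
    return 1000 * total_ddds / patient_days


# Placeholder substances and DDD values (not taken from the WHO index)
records = [("drug_a", 400.0, 2.0), ("drug_b", 150.0, 1.5), ("sulbactam", 100.0, 1.0)]
print(usage_density(400.0, 2.0, 1000))     # 200.0
print(total_usage_density(records, 1000))  # 300.0
```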

The resistance proportion (RP) is the number of resistant isolates of a species divided by the total number of isolates of that species tested against the antibiotic, multiplied by 100. The resistance density (RD) is the number of resistant isolates of a species per 1,000 patient-days.
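
The two resistance measures can be written as small hypothetical helpers. The usage lines (illustrative counts, not study data) show why the choice matters: RP depends on how many isolates were tested, whereas RD depends only on the number of resistant isolates and the number of patient-days.

```python
def resistance_proportion(n_resistant, n_tested):
    """RP (%): resistant isolates / isolates of the species tested against the drug x 100."""
    if n_tested == 0:
        return float("nan")  # undefined when no isolate was tested
    return 100 * n_resistant / n_tested


def resistance_density(n_resistant, patient_days):
    """RD: resistant isolates of a species per 1,000 patient-days."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return 1000 * n_resistant / patient_days


# Illustrative counts: the same resistant isolates, twice as many isolates tested
print(resistance_proportion(6, 20), resistance_density(6, 2000))  # 30.0 3.0
print(resistance_proportion(6, 40), resistance_density(6, 2000))  # 15.0 3.0
```

Sampling more intensively can therefore halve the RP while the burden of resistant pathogens, as measured by RD, stays the same.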

We used segmented regression analysis of interrupted time series to assess the changes in antibiotic use before and after implementation of the revised guidelines.

Segmented regression analysis of interrupted time series is a robust modelling technique that estimates both immediate and gradual changes in an outcome after an intervention while taking the pre-intervention trend into account.

Each segment of a time series is defined by two parameters, level and slope. The level is the value of the series at the beginning of a given time interval; the slope is the change in the measure per time step (e.g., a month). An abrupt intervention effect appears as a drop or jump in the level of the outcome after the intervention. A change in slope is an increase or decrease in the slope of the segment after the intervention compared with the segment before it; it represents a gradual change in the outcome over time. The method is described in greater detail by Ansari et al. [9] and Wagner et al. [10].
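
In the form commonly used for this design (e.g., as presented by Wagner et al. [10]), the model can be written as follows. The coding shown, with months numbered 1 to 60 and the intervention after month 30, is an assumption for illustration; the study does not report its exact variable coding.

```latex
\begin{aligned}
Y_t &= \beta_0 + \beta_1\,\mathrm{time}_t + \beta_2\,\mathrm{intervention}_t
       + \beta_3\,\mathrm{time\_after}_t + e_t,\\
\mathrm{time}_t &= t, \qquad t = 1,\dots,60,\\
\mathrm{intervention}_t &= \begin{cases} 0, & t \le 30,\\ 1, & t > 30, \end{cases}\\
\mathrm{time\_after}_t &= \max(0,\; t - 30).
\end{aligned}
```

Here β0 is the baseline level, β1 the pre-intervention slope, β2 the change in level and β3 the change in slope, so the post-intervention slope is β1 + β3. The change in level of −110.2 DDD/1,000 pd reported for 3GCs corresponds to β2 in this notation.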

For the statistical analysis of monthly antibiotic consumption, we examined the 30 months before and the 30 months after the intervention. Dependent variables were tested for normality with the Kolmogorov–Smirnov or Shapiro–Wilk test and for autocorrelation with the Durbin–Watson test. The R² statistic was calculated to evaluate the goodness of fit of the final segmented regression model.
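
Two of the diagnostics named above have simple closed forms. The hypothetical helpers below compute the Durbin–Watson statistic from model residuals and R² from observed and fitted values; they illustrate the standard formulas and are not the SAS procedures used in the study.

```python
def durbin_watson(residuals):
    """d = sum_{t>=2} (e_t - e_{t-1})^2 / sum_t e_t^2.

    Values near 2 suggest no first-order autocorrelation; values towards 0
    suggest positive and values towards 4 negative autocorrelation.
    """
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den


def r_squared(observed, fitted):
    """R^2 = 1 - SS_residual / SS_total."""
    mean = sum(observed) / len(observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, fitted))
    ss_tot = sum((y - mean) ** 2 for y in observed)
    return 1 - ss_res / ss_tot


print(durbin_watson([1.0, -1.0, 1.0, -1.0]))        # 3.0 (alternating residuals)
print(r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))  # 0.0 (fit no better than the mean)
```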

The full segmented regression model included the baseline level, the slope before the intervention, the change in level at the time of the intervention and the change in slope after the intervention. Non-significant variables were then removed by backward stepwise elimination, with a P value of 0.10 for removing a variable and 0.05 for entering a variable into the model. All antibiotic groups with an AD > 30 DDD/1,000 pd were included in the regression analysis.
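
A minimal sketch of fitting the full four-term model by ordinary least squares is shown below, written in pure Python so that it has no dependencies. The month coding (1 to 60, intervention after month 30) and the helper names are assumptions, and the sketch fits only the full model.

```python
def design_matrix(n_months=60, n_pre=30):
    """Rows [1, time, intervention, time_after] for months 1..n_months (assumed coding)."""
    rows = []
    for t in range(1, n_months + 1):
        post = 1.0 if t > n_pre else 0.0
        rows.append([1.0, float(t), post, float(max(0, t - n_pre))])
    return rows


def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y.

    Solved by Gauss-Jordan elimination with partial pivoting; adequate for a
    small design such as this one, not a substitute for a statistics package.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic, noise-free series (not study data): level 180, slope 0.5,
# level change -110 and slope change -0.3 at the intervention
X = design_matrix()
true_b = [180.0, 0.5, -110.0, -0.3]
y = [sum(b * x for b, x in zip(true_b, row)) for row in X]
print([round(b, 6) for b in ols(X, y)])  # [180.0, 0.5, -110.0, -0.3]
```

The backward elimination step (removal at P > 0.10) additionally needs standard errors and t-tests for each coefficient, which this sketch omits. Plain OLS also assumes independent errors, which is why the residuals are checked for autocorrelation.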

We compared resistance data before and after the intervention using Fisher’s exact test. We did not use a linear model such as segmented regression analysis because linearity could not be assumed. The following resistance parameters were analysed: 3GC-resistant K. pneumoniae and E. coli; piperacillin-resistant K. pneumoniae, E. coli and P. aeruginosa; and ampicillin/sulbactam-resistant K. pneumoniae and E. coli.
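
Fisher’s exact test can be sketched for a single 2×2 table. The text does not state how the tables were laid out; a plausible layout, assumed here, is resistant versus non-resistant isolates in the pre- and post-intervention periods. The helper name is hypothetical, and the two-sided p-value uses the common rule of summing the probabilities of all tables no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the top-left cell follows a hypergeometric
    distribution; the p-value sums the probabilities of all tables that are
    no more likely than the observed one.
    """
    r1, c1, n = a + b, a + c, a + b + c + d
    denom = comb(n, r1)

    def prob(x):
        return comb(c1, x) * comb(n - c1, r1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, r1 + c1 - n), min(r1, c1)
    # Small relative tolerance so that tables tied with the observed one are included
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, p)


# Rows: pre / post period; columns: resistant / non-resistant isolates (illustrative)
print(round(fisher_exact_two_sided(3, 1, 1, 3), 4))  # 0.4857
```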

The significance level was P < 0.05 for all tests. All analyses were performed using EpiInfo 6.04 and SAS 9.1 (SAS Institute Inc., Cary, NC, USA).

Results

The characteristics of the ICU are shown in Table 1. The number of patients decreased over the 5-year period, as did the mean duration of ventilation. However, the percentage of ventilated patients increased, indicating that more patients were ventilated for a shorter period of time.

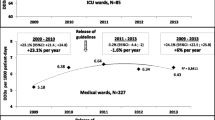

Antibiotic use

Segmented regression analysis showed that the intervention was associated with a significant and sustainable decrease in 3GC use of −110.2 DDD/1,000 pd (95% confidence interval (CI95) −140.0; −80.4), i.e., from 178.9 DDD/1,000 pd (CI95 157.8; 200.0) before to 68.7 DDD/1,000 pd after the intervention (Fig. 1; Table 2); R² = 0.468. There were also significant reductions in the use of ampicillins (−167.4 DDD/1,000 pd, CI95 −223.8; −110.9, R² = 0.378) and of imidazoles (−94.5 DDD/1,000 pd, CI95 −121.2; −67.7, R² = 0.463). The intervention resulted in a mean estimated change in the level of total antibiotic use of −375.0 DDD/1,000 pd (CI95 −523.3; −226.7, R² = 0.306), which equates to a 27% reduction. Total antibiotic use showed no significant month-to-month change (slope) either before or after the intervention. In contrast, aminoglycoside use decreased steadily throughout both periods (slope −1.4 DDD/1,000 pd per month, CI95 −1.8; −1.0, R² = 0.430). The use of piperacillin and piperacillin/tazobactam showed a significant increase in level of 64.4 DDD/1,000 pd (CI95 38.5; 90.3) and continued to increase by 2.3 DDD/1,000 pd (CI95 1.0; 3.6) per month after the intervention (R² = 0.745).

Fig. 1 Changes in antibiotic use density (AD) of third-generation cephalosporins 30 months before and 30 months after the intervention, 1/2002–12/2006. The grey dotted line shows the estimates: mean pre-intervention use (AD) 178.9 DDD/1,000 pd, mean post-intervention use 68.7 DDD/1,000 pd, estimated change in level −110.2 DDD/1,000 pd.

Resistance

The post-intervention period was not associated with a significant change in the resistance densities of 3GC-resistant K. pneumoniae and E. coli (Table 3; Fig. 2). Instead, the reduced use of 3GCs and the switch to piperacillin were followed by an increase in the RD of piperacillin-resistant E. coli and by a decrease in the RD of piperacillin-resistant P. aeruginosa (Table 3; Fig. 2).

Fig. 2 Observed resistance densities (solid black line) of E. coli and K. pneumoniae resistant to third-generation cephalosporins (3GC) and of E. coli and P. aeruginosa resistant to piperacillin, 10 quarters before and 10 quarters after the intervention, 2002–2006. The black vertical line indicates the date of the intervention in June 2004. Dashed lines, 95% Poisson confidence intervals (CI95); pd, patient-days.
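
The caption reports 95% Poisson confidence intervals for the quarterly resistance densities but does not say which Poisson interval was used. A minimal sketch, assuming the exact (Garwood) interval, finds the limits for the observed count by bisection on the Poisson cumulative distribution function and rescales them to resistant isolates per 1,000 patient-days. All function names are hypothetical.

```python
import math


def poisson_cdf(k, mu):
    """P(X <= k) for X ~ Poisson(mu), summed term by term (fine for small counts)."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def _bisect_decreasing(f, lo, hi, iterations=200):
    """Root of a decreasing function that is positive at lo and negative at hi."""
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_poisson_ci(k, alpha=0.05):
    """Exact (Garwood) two-sided CI for a Poisson mean, given an observed count k."""
    top = k + 20 * math.sqrt(k + 1) + 20  # generous upper search bound
    # Upper limit: P(X <= k | mu) = alpha / 2
    upper = _bisect_decreasing(lambda mu: poisson_cdf(k, mu) - alpha / 2, 0.0, top)
    if k == 0:
        return 0.0, upper
    # Lower limit: P(X >= k | mu) = alpha / 2, i.e. P(X <= k - 1 | mu) = 1 - alpha / 2
    lower = _bisect_decreasing(lambda mu: poisson_cdf(k - 1, mu) - (1 - alpha / 2), 0.0, top)
    return lower, upper


def resistance_density_ci(n_resistant, patient_days, alpha=0.05):
    """RD and its exact Poisson CI, all expressed per 1,000 patient-days."""
    lower, upper = exact_poisson_ci(n_resistant, alpha)
    scale = 1000 / patient_days
    return n_resistant * scale, lower * scale, upper * scale


print(resistance_density_ci(0, 2000))  # RD 0.0, CI from 0.0 to about 1.84 per 1,000 pd
```

For a quarter with no resistant isolates, the upper limit of the count is −ln(0.025) ≈ 3.69, so even an empty quarter has a non-zero upper bound for the RD.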

In the post-intervention period, the RD of ampicillin/sulbactam-resistant E. coli was significantly higher than in the pre-intervention period, although the use of ampicillin and ampicillin/sulbactam had been reduced significantly.

Discussion

In this study, we addressed two questions: first, whether there is a causal relationship between the use of 3GCs and resistance in E. coli and K. pneumoniae; and second, whether our intervention really was sustainable. We used a quasi-experimental study design with interrupted time series analysis. Many studies conducted before and around the year 2000 found such an association: they replaced or reduced broad-spectrum cephalosporins and found a reduction in resistance rates or in the incidence of infections with cephalosporin-resistant/ESBL-producing pathogens [11–15]. Rahal et al. [8] found that an 80% reduction in hospital-wide cephalosporin use over a period of 2 years (1994/1995) resulted in a 44% reduction in ceftazidime-resistant Klebsiella in the hospital and an 88% reduction in the surgical ICU.

The second question can be answered in the affirmative: after the intervention, 61% fewer 3GCs were administered, and use remained at this low level for the following 30 months. The revision process also shortened antibiotic prophylaxis for open fractures to a single pre-operative dose. However, the switch from combination therapy with 3GCs and metronidazole to piperacillin with a β-lactamase inhibitor did not reduce the burden of 3GC-resistant E. coli and K. pneumoniae; instead, it was followed by an increase in the burden of piperacillin-resistant E. coli. We concentrated on the burden of resistance, i.e., the number of resistant pathogens per 1,000 patient-days, because this parameter may better reflect the economic and ecological burden in the ICU [16, 17].

There are several possible reasons why reducing antibiotics was not found to have a positive effect.

Firstly, it might be hypothesized that piperacillin with a β-lactamase inhibitor can itself induce or select for ESBL production. Piperacillin-tazobactam is believed to be effective against ESBL-producing bacteria only when the inoculum is low and, like other β-lactam antibiotics, may therefore give these pathogens a selective advantage [18, 19]. Other studies support this. In a paediatric ICU, Toltzis et al. found that the RD of ceftazidime-resistant organisms doubled over the course of the study, although ceftazidime use was reduced by 96% and replaced by piperacillin [20]. Another comprehensive study, in which 5,209 ICU patients were screened on admission, showed that exposure to piperacillin-tazobactam was associated with ESBL colonisation, whereas exposure to 3GCs was not [21]. However, other studies found that replacing 3GCs with piperacillin/tazobactam had a positive impact on resistance in ESBL-positive pathogens [11, 15].

Secondly, if 3GC-resistant E. coli and K. pneumoniae are already being imported into the ICU, either from the community or from other hospital wards, changes in antibiotic management within the ICU may fail to influence the resistance situation there.

The increase in 3GC-resistant E. coli in the ICU parallels the hospital-wide increase and that in German SARI ICUs (mean RD increased from 0.1 per 1,000 pd in 2001 to 0.8 per 1,000 pd in 2006). Furthermore, the epidemiology of ESBLs has changed dramatically. Until recently, infections caused by ESBL-producing bacteria were mostly described as nosocomially acquired, often in specialist units, and the enzyme variants found were mostly TEM or SHV mutants. These mutants have now been replaced by CTX-M enzymes. Infections due to ESBL producers are increasingly found in non-hospitalised patients, and the mode of transmission is not fully understood [22–24]. Livermore concludes that at present “the opportunities for control [of ESBLs] are disturbingly small” [22].

Thirdly, where there is an endemic level of 3GC-resistant Enterobacteriaceae, patient-to-patient transmission of these pathogens can contribute to this level and can even cause outbreaks. Because the peaks of E. coli and K. pneumoniae run parallel in our ICU, this cannot be excluded.

Fourthly, our findings may differ from those of earlier intervention studies because these followed up for only 9–12 months after the intervention and mostly compared mean resistance proportions [8, 12, 14].

Fifthly, the mean length of ICU stay was 6.3 days, so the effect of restricting antibiotics may have become apparent not in the ICU but on the wards to which patients were transferred.

Sixthly, although total use fell to approximately 1,000 DDD/1,000 pd, antimicrobial pressure remained substantial, and ESBL-producing Gram-negative bacteria may have been selected by exposure to other antimicrobials such as fluoroquinolones or trimethoprim-sulfamethoxazole.

Lastly, it can be hypothesized that antimicrobial controls are more effective against bacteria whose resistance is due to chromosomal mutation or derepression than against bacteria carrying antimicrobial-resistance plasmids.

The limitations of the study must be considered. No admission screening programme was in place except for patients at risk of MRSA carriage. Therefore, it is impossible to distinguish imported resistant pathogens from those acquired in the ICU. Only one third of all 3GC-resistant E. coli and K. pneumoniae isolates were genotyped (data not presented), and genotyping was restricted to the species level and did not look for resistance genes. It is therefore not possible to say whether transmission occurred because of failures in infection control. Furthermore, because resistance genes were not determined, it remains unclear whether CTX-M enzymes really were predominant. SARI does not report data on co-resistance, so we cannot say whether, for instance, fluoroquinolone resistance (fluoroquinolone use did not change) influenced 3GC-resistant E. coli. The frequency of obtaining cultures might have affected the results, as the study had no culture protocol; however, the policy for taking cultures did not change. Copy strains were defined as isolates of the same species showing the same susceptibility pattern in the same patient within a period of one month. A small number of isolates may therefore have been counted twice if the ICU stay exceeded one month, which might have affected RDs.

Nevertheless, to our knowledge, no other study has analysed the impact of reducing 3GC use on resistance in E. coli and K. pneumoniae over a period as long as 30 months after the intervention. The intervention succeeded in reducing total antibiotic use by 27%, but did not improve the resistance situation.

We conclude that concentrating on reducing 3GC use does not necessarily have a positive impact on the resistance situation in the ICU setting. Likewise, replacing 3GCs with piperacillin plus a β-lactamase inhibitor might exert selection pressure on 3GC-resistant E. coli and K. pneumoniae; this might not have been the case if carbapenems had been chosen. Total antibiotic use may need to be reduced well below 1,000 DDD/1,000 pd, since reducing it to one antibiotic dose per patient per day may not be enough to reduce antimicrobial resistance.

Several other factors influence 3GC resistance in Gram-negative bacteria, the most important being the changed epidemiology of ESBLs and import into the hospital and the ICU. Furthermore, infection control, whose purpose is to prevent person-to-person spread of plasmid-mediated resistance, and the widespread use of antibiotics in the community and the environment may outweigh the effect of antimicrobial stewardship.

Thus, to improve the resistance situation, it might not be sufficient to restrict interventions to a risk area; rather, the whole hospital and even the community should be included. In dealing with the problem of resistance, society as a whole needs to recognise that effective antibiotics are a valuable natural resource. Sustained effectiveness can only be achieved through greater social awareness of the problem, together with better education and training of physicians and networks that address both resistance and antibiotic use.

References

Cook PP, Catrou PG, Christie JD (2004) Reduction in broad-spectrum antimicrobial use associated with no improvement in hospital antibiogram. J Antimicrob Chemother 53:853–859

Nijssen S, Bootsma M, Bonten M (2006) Potential confounding in evaluating infection-control interventions in hospital settings: changing antibiotic prescription. Clin Infect Dis 43:616–623

Ramsay C, Brown E, Hartman G (2003) Room for improvement: a systematic review of the quality of evaluations of interventions to improve hospital antibiotic prescribing. J Antimicrob Chemother 52:764–771

Knothe H, Shah P, Krcmery V (1983) Transferable resistance to cefotaxime, cefoxitin, cefamandole and cefuroxime in clinical isolates of Klebsiella pneumoniae and Serratia marcescens. Infection 11:315–317

Giamarellou H (2005) Multidrug resistance in gram-negative bacteria that produce extended-spectrum beta-lactamases (ESBLs). Clin Microbiol Infect 11:1–16

Du B, Long Y, Liu H (2002) Extended-spectrum beta-lactamase-producing Escherichia coli and Klebsiella pneumoniae bloodstream infection: risk factors and clinical outcome. Intensive Care Med 28:1718–1723

Wendt C, Lin D, von Baum H (2005) Risk factors for colonization with third-generation cephalosporin-resistant enterobacteriaceae. Infection 33:327–332

Rahal JJ, Urban C, Horn D (1998) Class restriction of cephalosporin use to control total cephalosporin resistance in nosocomial Klebsiella. JAMA 280:1233–1237

Ansari F, Gray K, Nathwani D (2003) Outcomes of an intervention to improve hospital antibiotic prescribing: interrupted time series with segmented regression analysis. J Antimicrob Chemother 52:842–848

Wagner AK, Soumerai SB, Zhang F (2002) Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther 27:299–309

Bantar C, Vesco E, Heft C (2004) Replacement of broad-spectrum cephalosporins by piperacillin-tazobactam: impact on sustained high rates of bacterial resistance. Antimicrob Agents Chemother 48:392–395

Bassetti M, Cruciani M, Righi E (2006) Antimicrobial use and resistance among gram-negative bacilli in an Italian intensive care unit (ICU). J Chemother 18:261–267

Du B, Chen D, Liu D (2003) Restriction of third-generation cephalosporin use decreases infection-related mortality. Crit Care Med 31:1088–1093

Hsueh PR, Chen WH, Luh KT (2005) Relationships between antimicrobial use and antimicrobial resistance in gram-negative bacteria causing nosocomial infections from 1991 to 2003 at a university hospital in Taiwan. Int J Antimicrob Agents 26:463–472

Lan CK, Hsueh PR, Wong WW (2003) Association of antibiotic utilization measures and reduced incidence of infections with extended-spectrum beta-lactamase-producing organisms. J Microbiol Immunol Infect 36:182–186

Meyer E, Schwab F, Pollitt A (2007) Resistance rates in ICUs: interpretation and pitfalls. J Hosp Infect 65:84–85

Schwaber MJ, De Medina T, Carmeli Y (2004) Epidemiological interpretation of antibiotic resistance studies—what are we missing? Nat Rev Microbiol 2:979–983

Burgess DS, Hall RG (2004) In vitro killing of parenteral beta-lactams against standard and high inocula of extended-spectrum beta-lactamase and non-ESBL producing Klebsiella pneumoniae. Diagn Microbiol Infect Dis 49:41–46

Thomson KS, Moland ES (2001) Cefepime, piperacillin-tazobactam, and the inoculum effect in tests with extended-spectrum beta-lactamase-producing Enterobacteriaceae. Antimicrob Agents Chemother 45:3548–3554

Toltzis P, Yamashita T, Vilt L (1998) Antibiotic restriction does not alter endemic colonization with resistant gram-negative rods in a pediatric intensive care unit. Crit Care Med 26:1893–1899

Harris AD, McGregor JC, Johnson JA (2007) Risk factors for colonization with extended-spectrum beta-lactamase-producing bacteria and intensive care unit admission. Emerg Infect Dis 13:1144–1149

Livermore DM, Canton R, Gniadkowski M (2007) CTX-M: changing the face of ESBLs in Europe. J Antimicrob Chemother 59:165–174

Pitout JD, Gregson DB, Church DL (2005) Community-wide outbreaks of clonally related CTX-M–14 beta-lactamase-producing Escherichia coli strains in the Calgary health region. J Clin Microbiol 43:2844–2849

Rodriguez-Bano J, Navarro MD, Romero L (2004) Epidemiology and clinical features of infections caused by extended-spectrum beta-lactamase-producing Escherichia coli in nonhospitalized patients. J Clin Microbiol 42:1089–1094

Acknowledgments

We thank Deborah Lawrie-Blum for her help in preparing the manuscript. Financial support: The ICU participated in SARI (Surveillance of Antimicrobial use and antimicrobial Resistance in German Intensive Care Units), a project supported by a grant from the Federal Ministry of Education and Research (01Kl 9907).

Conflict of interest statement

None of the authors had a conflict of interest.

Cite this article

Meyer, E., Lapatschek, M., Bechtold, A. et al. Impact of restriction of third generation cephalosporins on the burden of third generation cephalosporin resistant K. pneumoniae and E. coli in an ICU. Intensive Care Med 35, 862–870 (2009). https://doi.org/10.1007/s00134-008-1355-6


DOI: https://doi.org/10.1007/s00134-008-1355-6