Abstract

Circuits with delay elements are common and important in the simulation of very-large-scale integration (VLSI) systems. For example, neutral systems (NSs) with multiple constant delays (MCDs) can be used to model partial element equivalent circuits (PEECs), which are widely used in high-frequency electromagnetic (EM) analysis. In this paper, the model order reduction (MOR) problem for NSs with MCDs is addressed by the moment matching method. The nonlinear exponential terms arising from the delayed states and from the derivatives of the delayed states in the transfer function of the original NS are first approximated by either a Padé approximation or a Taylor series expansion. The resulting approximate transfer function is exponential-free, so the standard moment matching method can be applied directly. The Padé approximation of the exponential terms yields an expanded delay-free system, which is then reduced to a delay-free reduced-order model (ROM). The Taylor series expansion of the exponential terms leaves only powers of s in the matrix to be inverted in the original transfer function, with coefficient matrices of the same size as those of the original NS; this yields a ROM that is itself a lower-order NS. Numerical examples demonstrate the effectiveness of the proposed algorithms and compare them with existing MOR methods, such as the linear matrix inequality (LMI)-based method.


1 Introduction

To describe the behavior of complex physical systems accurately, high-order or even infinite-order mathematical models are often required. However, direct simulation of such models is difficult and sometimes prohibitive because of unmanageable storage requirements, high computational cost and long computation times. Therefore, model order reduction (MOR), which replaces the original complex, high-order system by a reduced-order model (ROM), plays an important role in many areas of engineering, e.g., transmission lines in circuit packaging [31, 38], PCB (printed circuit board) design [11, 48, 58] and networked control systems [18, 28]. Using ROMs not only saves considerable storage and computation time, but also enables fast simulation and verification, which shortens the design cycle [2, 3, 5, 12, 15, 26, 33, 34, 36, 52, 59].

Many MOR methods have been presented in the past few decades [4, 14, 16, 17, 20–22, 25, 30, 35, 40, 44, 49, 51]. Most of them fall into two categories. The first consists of singular value decomposition (SVD)-based methods, which construct or optimize the ROM according to a suitably chosen criterion, such as the \(H_{\infty}\) norm, the energy-to-peak gain or the Hankel norm [40, 44, 49, 51]. The second consists of moment matching-based methods. For linear time-invariant (LTI) systems, the moment matching method [4, 5, 16, 20, 35] expands the transfer function in a Taylor series and then constructs a ROM whose first few Taylor coefficients (also called moments) match those of the original model. The projection matrix used to derive the ROM is usually obtained from Krylov subspace iterative schemes. Moment matching methods have been widely used in recent years because efficient iterative schemes are available for constructing the projection matrix, whereas the SVD approach usually involves solving expensive matrix equations or convex optimization problems.

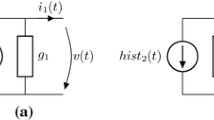

In many physical, industrial and circuit systems, time delays occur because of the finite speed of information processing, data transmission among the various parts of a system, and simplifications of the corresponding process models [9, 27, 29, 37, 39, 49, 55–57]. Delays are often detrimental to performance and can even cause instability, so their presence substantially complicates the analysis and design of such systems. In the past few decades, researchers have paid great attention to the analysis and synthesis of time delay systems (TDSs). Most studies consider systems with delays in the states only, which are often called retarded systems (RSs) [42, 46]. Another important and more general class of TDSs, called neutral systems (NSs), has dynamics governed by delays not only in the states but also in the derivatives of the states. For example, in circuit modeling and simulation, NSs can be formulated for the partial element equivalent circuits (PEECs) widely used in electromagnetic (EM) simulation [24]. NSs have attracted considerable research effort in recent years [23, 32, 39, 41, 45, 47, 53].

MOR of TDSs is mainly based on SVD-based methods, which construct the ROM such that the norm of the error between the original system and the ROM is less than a given tolerance. The \(H_{\infty}\) MOR problem for RSs is studied in [54] by solving linear matrix inequalities (LMIs) with a rank constraint, and the same problem for linear parameter-varying systems with both discrete and distributed delays is considered in [50] by solving parameterized LMIs. MOR of a NS with multiple constant delays (MCDs), however, has received little attention despite its theoretical and practical importance [43, 45]. The energy-to-peak MOR and \(H_{\infty}\) MOR problems for a NS are studied in [43] and [45], respectively, in terms of LMIs with inverse constraints. LMIs can be solved by the interior-point method (IPM) [8], which applies Newton's method to minimize a strictly convex function after all matrix variables have been stacked into one high-dimensional vector variable. In practice, the IPM fails on large-scale LMIs, because storing the Hessian matrix of the convex objective used in Newton's method is memory-demanding and computing it is expensive. Although the cone complementarity linearization (CCL) algorithm [13] transforms LMIs with inverse constraints into a minimization problem subject to the original LMIs and additional LMIs arising from the inverse constraints, the expanded set of LMIs is even more expensive to solve. Hence, although the methods in [43, 45] are theoretically correct, their prohibitive computational cost makes them of little practical use for reducing high-order NSs.

Because the SVD-based methods [43, 45] suffer from high computational cost, it is desirable to approximate NSs with the much faster moment matching method. The major difficulty in applying moment matching to a NS, however, is generating the moments, i.e., the coefficient matrices of the Taylor series expansion of the NS transfer function. The transfer function contains nonlinear exponential terms arising from the delayed state and the derivative of the delayed state, which make a direct Taylor series expansion impractical. In this paper, we propose two methods to approximate these exponential terms and to generate the corresponding moments.

The major contribution of this paper is the reduction of the NS with MCDs by first approximating its nonlinear exponential terms via either a Padé approximation or a Taylor series expansion. The former results in an expanded, but exponential-free, state space that can then be reduced by the standard moment matching method. The latter replaces the exponential terms by truncated Taylor series, so that the matrix to be inverted in the transfer function is again exponential-free; it contains only powers of s, with coefficient matrices of the same size as those of the original NS. In both cases, standard moment matching techniques for reduced-order modeling can then be applied. Because both methods have low computational cost, they are applicable to the reduction of high-order NSs.

The outline of this paper is as follows. In the remainder of Sect. 1, MOR of descriptor systems (DSs) by moment matching is reviewed and the challenge posed by MOR of NSs with MCDs is described. In Sect. 2, Padé approximation of the exponential terms leads to a delay-free ROM in DS form. In Sect. 3, a ROM with the same structure as the original NS is obtained by replacing the exponential terms by their Taylor series expansions. Numerical examples that demonstrate the effectiveness of the proposed MOR methods and compare them with other methods are given in Sect. 4. Finally, Sect. 5 concludes the paper.

1.1 Neutral Systems

Consider a NS with MCDs, denoted by Σ,

where \(x(t)\in\mathbb{R}^{n}\) is the state vector, \(u(t)\in\mathbb{R}^{m}\) is the input and \(y(t)\in\mathbb{R}^{l}\) is the output. E, A, \(A_{h_{i}}\), \(A_{d_{j}}\), B and C, i=1,…,p, j=1,…,q, are real constant matrices of appropriate dimensions. Here \(h_i\) and \(d_j\), i=1,…,p, j=1,…,q, are the constant delays, and \(\alpha=\max\{h_i, d_j: i=1,\ldots,p,\ j=1,\ldots,q\}\). All derivations in this paper extend straightforwardly to the case of time-varying delays by taking \(h_i\) and \(d_j\) as the upper bounds of the time-varying delays. The order of the NS Σ is defined as the number of states, i.e., n. Under the assumption \(x(0)=\phi(0)=0\), the transfer function from the input u(t) to the state x(t) is given by

by taking the Laplace transform of both sides of (1). The NS Σ is also characterized by its transfer function from the input u(t) to the output y(t)

1.2 MOR of Systems Without Delay

When the NS Σ has no time delays \(h_i\) and \(d_j\), i.e., \(A_{h_{i}}=0\) and \(A_{d_{j}}=0, i=1,\ldots ,p, j=1,\ldots ,q\), it reduces to a DS \(\varSigma_{\mathrm{ds}}\),

with transfer function

The above DS becomes an LTI system when E=I. The Taylor series expansion of \(G_{\mathrm{ds}}(s)\) around s=0 is

assuming that A is invertible. The coefficient matrices of the Taylor series expansion in (7) are called block moments, or simply moments [35], of the DS \(\varSigma_{\mathrm{ds}}\).
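The recursion behind (7) can be sketched numerically. Assuming the sign convention \(G_{\mathrm{ds}}(s)=C(sE-A)^{-1}B\), we have \((sE-A)^{-1}=-\sum_{k\ge 0}s^{k}(A^{-1}E)^{k}A^{-1}\), so the kth moment is \(-C(A^{-1}E)^{k}A^{-1}B\). The following Python/NumPy sketch computes these moments with one linear solve per moment instead of forming \(A^{-1}\). The function name `ds_moments` and the random test data are illustrative assumptions, not part of the paper.

```python
import numpy as np


def ds_moments(E, A, B, C, k_max):
    """Block moments M_0, ..., M_{k_max-1} of G_ds(s) = C (sE - A)^{-1} B about s = 0.

    Uses (sE - A)^{-1} = -sum_k s^k (A^{-1} E)^k A^{-1}, i.e. M_k = -C (A^{-1} E)^k A^{-1} B.
    A must be invertible; A^{-1} is never formed explicitly.
    """
    W = np.linalg.solve(A, B)              # W_0 = A^{-1} B
    moments = []
    for _ in range(k_max):
        moments.append(-C @ W)             # M_k = -C W_k
        W = np.linalg.solve(A, E @ W)      # W_{k+1} = A^{-1} E W_k
    return moments


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, m, l = 8, 2, 2
    E = np.eye(n) + 0.1 * rng.standard_normal((n, n))
    A = -3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
    B, C = rng.standard_normal((n, m)), rng.standard_normal((l, n))
    s = 1e-2
    exact = C @ np.linalg.solve(s * E - A, B)
    series = sum(Mk * s**k for k, Mk in enumerate(ds_moments(E, A, B, C, 10)))
    print(np.max(np.abs(exact - series)))  # tiny: only truncation and rounding error remain
```

In practice one would factor A once (e.g. with an LU factorization) and reuse the factors for every solve; the sketch calls `np.linalg.solve` repeatedly only for brevity.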

MOR of the DS \(\varSigma_{\mathrm{ds}}\) by moment matching creates a ROM whose first few moments match those of the original model [4, 14, 16, 17, 20, 21, 35, 44, 49, 52]. The projection matrix \(V\in \mathbb{R}^{n\times \hat{n}}\) that generates the ROM is obtained from

where \(K ( 0,\varSigma_{\mathrm{ds}},\hat{n} ) \) is defined as the \(\hat{n}\)th Krylov subspace

and the system matrices of the resulting ROM are

[7, 35]. Therefore, the key step in MOR by moment matching is generating the moments, i.e., the coefficient matrices of the Taylor series expansion of the transfer function \(G_{\mathrm{ds}}(s)\).
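As a hedged illustration of the Krylov subspace in (8) and of the projected ROM, the sketch below builds an orthonormal basis of the Krylov subspace with an Arnoldi-type loop (modified Gram–Schmidt) and applies a one-sided projection \(\hat{E}=V^{T}EV\), \(\hat{A}=V^{T}AV\), \(\hat{B}=V^{T}B\), \(\hat{C}=CV\). It assumes a single-input single-output DS, dense matrices and the convention \(G_{\mathrm{ds}}(s)=C(sE-A)^{-1}B\); the helper names are hypothetical. With q basis vectors, the first q moments of the ROM coincide with those of the original DS, provided \(V^{T}AV\) is nonsingular.

```python
import numpy as np


def krylov_basis(E, A, b, q):
    """Orthonormal V (n x q) spanning {A^{-1}b, (A^{-1}E)A^{-1}b, ..., (A^{-1}E)^{q-1}A^{-1}b}."""
    V = np.zeros((A.shape[0], q))
    v = np.linalg.solve(A, b)
    for j in range(q):
        for i in range(j):                      # modified Gram-Schmidt against earlier columns
            v = v - (V[:, i] @ v) * V[:, i]
        V[:, j] = v / np.linalg.norm(v)
        v = np.linalg.solve(A, E @ V[:, j])     # next Krylov direction (Arnoldi step)
    return V


def reduce_ds(E, A, b, c, q):
    """One-sided projection: E_r = V^T E V, A_r = V^T A V, b_r = V^T b, c_r = c V."""
    V = krylov_basis(E, A, b, q)
    return V.T @ E @ V, V.T @ A @ V, V.T @ b, c @ V


def siso_moments(E, A, b, c, k_max):
    """Scalar moments m_k = -c (A^{-1} E)^k A^{-1} b of c (sE - A)^{-1} b about s = 0."""
    w, out = np.linalg.solve(A, b), []
    for _ in range(k_max):
        out.append(-(c @ w))
        w = np.linalg.solve(A, E @ w)
    return np.array(out)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, q = 50, 6
    E = np.eye(n)
    A = -np.diag(np.linspace(1.0, 10.0, n)) + 0.05 * rng.standard_normal((n, n))
    b, c = rng.standard_normal(n), rng.standard_normal(n)
    Er, Ar, br, cr = reduce_ds(E, A, b, c, q)
    print(siso_moments(E, A, b, c, q))      # first q moments of the full model
    print(siso_moments(Er, Ar, br, cr, q))  # the same values, up to rounding
```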

1.3 MOR of NSs

For MOR of the NS Σ, we likewise want a projection matrix V that constructs a ROM matching the first few moments of the transfer function G(s) in (5). When time delays are present, however, G(s) is much more complicated than \(G_{\mathrm{ds}}(s)\) because of the exponential terms \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\), i=1,…,p, j=1,…,q, arising from the delayed states and the derivatives of the delayed states, respectively. Because these nonlinear terms make a direct Taylor series expansion of G(s) impractical, we approximate \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\) to obtain an exponential-free approximation of the Taylor series expansion of G(s)

where \(G_i\), i=0,1,…, are constant matrices called the approximated moments of the NS Σ. Two kinds of approximation of the exponential terms are used in this paper. One is the Padé approximation, the most frequently used method for approximating them by rational functions. The other is to expand the exponential terms in Taylor series. The former gives rise to a ROM in DS form (Sect. 2) and the latter to a ROM in NS form (Sect. 3).

2 ROM by Padé Approximation

The following lemma shows that the exponential term \(e^{-\mathrm{sh}_{i}}\) can be approximated, via Padé approximation, by the transfer function of an LTI system. The main advantage is that the \(G_i\) in (10) can then be expressed as moments of an expanded DS, so the projection matrix proposed in [2, 7] can be used directly to construct the ROM.

Lemma 1

\(e^{-\mathrm{sh}_{i}}\) is approximated by the \(\beta_{h_{i}}\)th-order transfer function of an LTI system

where

and \(\alpha_{h_{i}}\) and \(\beta_{h_{i}}\) with \(\alpha_{h_{i}}\leq \beta_{h_{i}}\) are positive integers.

Proof

From [19, p. 557], \(e^{-\mathrm{sh}_{i}}\) can be approximated by its \(\beta_{h_{i}}\)th-order Padé approximation,

where \(a_k\) and \(b_k\) are defined in (15) and (16). First, we assume that \(\alpha_{h_{i}}=\beta_{h_{i}}\). It is easy to obtain

It follows by [1, Theorem 3.5.1] that the controllable canonical realization of the second term in (17) is equivalent to

which gives (11), with \(\bar{C}_{h_{i}}\), \(\bar{A}_{h_{i}}\) and \(\bar{B}_{h_{i}}\) given in (12)–(14). In the case of \(\alpha_{h_{i}}=\beta_{h_{i}}-1\), i.e., \(b_{\beta _{h_{i}}}=0\), (17) becomes a \(\beta_{h_{i}}\)th order transfer function

which results in (11) for the case \(\alpha_{h_{i}}=\beta_{h_{i}}-1\), again by the controllable canonical realization. The case \(\alpha_{h_{i}}<\beta_{h_{i}}-1\) is handled in the same way as the case \(\alpha_{h_{i}}=\beta_{h_{i}}-1\) by setting \(b_{\alpha _{h_{i}}+1}=\cdots =b_{\beta _{h_{i}}-1}=0\). The conclusion holds. □
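Lemma 1 can be checked numerically. The sketch below uses the standard \([\alpha/\beta]\) Padé coefficients of the exponential (as given, e.g., in [19]) with \(x=-sh_i\), together with a controllable canonical realization that has ones on the superdiagonal and the negated monic denominator coefficients in the last row. Because equations (12)–(16) are not reproduced here, the normalization of \(a_k\), \(b_k\) and the ordering of the states are our assumptions and may differ from the paper's; any minimal realization has the same transfer function. The function names are hypothetical.

```python
import numpy as np
from math import factorial


def pade_delay(h, alpha, beta):
    """[alpha/beta] Padé approximant num(s)/den(s) of exp(-s h); ascending powers of s."""
    N = alpha + beta
    num = np.array([factorial(N - k) * factorial(alpha)
                    / (factorial(N) * factorial(k) * factorial(alpha - k)) * (-h) ** k
                    for k in range(alpha + 1)])
    den = np.array([factorial(N - k) * factorial(beta)
                    / (factorial(N) * factorial(k) * factorial(beta - k)) * h ** k
                    for k in range(beta + 1)])
    return num, den


def controllable_realization(num, den):
    """(A, B, C, D) in controllable canonical form with C (sI - A)^{-1} B + D = num(s)/den(s).

    Requires deg num <= deg den = beta; coefficients in ascending powers of s.
    """
    beta = len(den) - 1
    a = den / den[-1]                        # monic denominator: a[beta] == 1
    nn = np.zeros(beta + 1)
    nn[:len(num)] = num / den[-1]            # numerator on the same scale, padded to degree beta
    D = nn[beta]                             # direct feedthrough; zero when alpha < beta
    A = np.zeros((beta, beta))
    A[:-1, 1:] = np.eye(beta - 1)            # ones on the superdiagonal
    A[-1, :] = -a[:beta]                     # last row: -a_0, ..., -a_{beta-1}
    B = np.zeros((beta, 1))
    B[-1, 0] = 1.0
    C = (nn[:beta] - D * a[:beta]).reshape(1, beta)   # numerator of the strictly proper part
    return A, B, C, np.array([[D]])


def tf_eval(A, B, C, D, s):
    """Evaluate the scalar transfer function C (sI - A)^{-1} B + D at a (complex) point s."""
    return (C @ np.linalg.solve(s * np.eye(A.shape[0]) - A, B) + D)[0, 0]


if __name__ == "__main__":
    num, den = pade_delay(1.0, 4, 4)
    A, B, C, D = controllable_realization(num, den)
    s = 0.3j
    print(abs(tf_eval(A, B, C, D, s) - np.exp(-s)))   # small: [4/4] Padé error at |s h| = 0.3
```

The realization order equals the denominator degree \(\beta_{h_i}\), matching the order stated in Lemma 1; when \(\alpha_{h_i}<\beta_{h_i}\) the feedthrough term vanishes.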

The following proposition, which concerns the approximation of G(s), follows from Lemma 1.

Proposition 1

G(s) is approximated by

where

and \(\bar{C}_{h_{i}}\), \(\bar{A}_{h_{i}}\), \(\bar{B}_{h_{i}}\), \(\bar{D}_{h_{i}}\), \(r_{h_{i}}\), i=1,…,p, and \(\bar{C}_{d_{j}}\), \(\bar{A}_{d_{j}}\), \(\bar{B}_{d_{j}}\), \(\bar{D}_{d_{j}}\ \) and \(r_{d_{j}}\), j=1,…,q, are obtained from Lemma 1.

Proof

From Lemma 1, \(e^{-\mathrm{sh}_{i}}\) is approximated by the \(\beta_{h_{i}}\)th order transfer function

from which it follows that

where X(s) is the Laplace transform of x(t) and \(\tilde{C}_{h_{i}}\), \(\tilde{A}_{h_{i}}\), \(\tilde{B}_{h_{i}}\) and \(\tilde{D}_{h_{i}}\), i=1,…,p, are defined in (23) and (24). Hence \(x(t-h_i)\), i=1,…,p, can be treated as the output of a \(\beta_{h_{i}}\)th-order LTI system \(\varSigma_{h_{i}}\),

Similarly, it is easy to get

which can be treated as the output of a \(\beta_{d_{j}}\)th order LTI system \(\varSigma_{d_{j}}\),

where \(\tilde{C}_{d_{j}}\), \(\tilde{A}_{d_{j}}\), \(\tilde{B}_{d_{j}}\) and \(\tilde{D}_{d_{j}}\), j=1,…,q, are given in (25) and (26). Together with NS Σ, we have

By defining \(x_{s} ( t ) =[ x^{T} ( t ) \ x_{h_{1}}^{T} ( t ) \ \cdots \ x_{h_{p}}^{T} ( t ) \ x_{d_{1}}^{T} ( t ) \ \cdots \ x_{d_{q}}^{T} ( t )]^{T}\), the system in (28) can be rewritten as an expanded DS \(\varSigma_{s}\),

with \(\mathcal{E}\), \(\mathcal{A}\), \(\mathcal{B}\) and \(\mathcal{C}\) defined in (20)–(22). Hence the NS Σ can be approximated by the DS \(\varSigma_{s}\) in (29), i.e., \(G ( s ) \approx \mathcal{C} ( s\mathcal{E}-\mathcal{A} )^{-1}\mathcal{B}\), which gives (19). □
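The construction in Proposition 1 can be sketched for p=q=1. Since the display equation (1) is not reproduced in this text, the sketch ASSUMES the form \(E\dot{x}(t)-A_{d}\dot{x}(t-d)=Ax(t)+A_{h}x(t-h)+Bu(t)\), \(y(t)=Cx(t)\). Each delayed signal \(x(t-\tau)\) is replaced by the output \(Rz+Sx\) of a Padé filter \(\dot{z}=Pz+Qx\) applied componentwise (via a Kronecker product with \(I_n\)); differentiating this output gives \(\dot{x}(t-d)\approx R(Pz+Qx)+S\dot{x}\), which is where the block \(E-A_dS_d\) of the descriptor matrix comes from. The block layout may differ from (20)–(22), the names are hypothetical, and `scipy.signal.tf2ss` is used only for the scalar realization.

```python
import numpy as np
from math import factorial
from scipy.signal import tf2ss


def pade_delay_ss(tau, beta):
    """State-space (A, B, C, D) of the diagonal [beta/beta] Padé approximant of exp(-s*tau)."""
    k = np.arange(beta + 1)
    w = np.array([factorial(2 * beta - j) * factorial(beta)
                  / (factorial(2 * beta) * factorial(j) * factorial(beta - j)) for j in k])
    num, den = w * (-tau) ** k, w * tau ** k       # ascending powers of s
    return tf2ss(num[::-1], den[::-1])             # tf2ss expects descending powers


def expanded_ds(E, A, Ah, Ad, B, C, h, d, beta):
    """Delay-free DS (calE, calA, calB, calC) of order n(1 + 2 beta) for one state delay h and
    one neutral delay d, under the ASSUMED form  E x' - Ad x'(t-d) = A x + Ah x(t-h) + B u,  y = C x.
    """
    n = A.shape[0]
    I = np.eye(n)
    # kron(., I_n) applies the scalar Padé filter to every state component
    Ph, Qh, Rh, Sh = (np.kron(M, I) for M in pade_delay_ss(h, beta))   # x(t-h) ~ Rh z_h + Sh x
    Pd, Qd, Rd, Sd = (np.kron(M, I) for M in pade_delay_ss(d, beta))   # x(t-d) ~ Rd z_d + Sd x
    nz = beta * n
    Z = np.zeros((nz, nz))
    calE = np.eye(n + 2 * nz)
    calE[:n, :n] = E - Ad @ Sd
    calA = np.block([[A + Ah @ Sh + Ad @ Rd @ Qd, Ah @ Rh, Ad @ Rd @ Pd],
                     [Qh, Ph, Z],
                     [Qd, Z, Pd]])
    calB = np.vstack([B, np.zeros((2 * nz, B.shape[1]))])
    calC = np.hstack([C, np.zeros((C.shape[0], 2 * nz))])
    return calE, calA, calB, calC


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, beta, h, d = 4, 4, 0.5, 0.3
    E, A = np.eye(n), -3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
    Ah, Ad = 0.2 * rng.standard_normal((n, n)), 0.1 * rng.standard_normal((n, n))
    B, C = rng.standard_normal((n, 1)), rng.standard_normal((1, n))
    calE, calA, calB, calC = expanded_ds(E, A, Ah, Ad, B, C, h, d, beta)
    s = 0.5j
    G_ds = calC @ np.linalg.solve(s * calE - calA, calB)
    G_ns = C @ np.linalg.solve(s * E - A - np.exp(-s * h) * Ah - s * np.exp(-s * d) * Ad, B)
    print(calA.shape, abs(G_ds - G_ns).max())   # order n(1 + 2*beta) = 36; tiny difference
```

The order \(n(1+2\beta)\) of the expanded system agrees with \(\tilde{n}\) in Remark 1 for p=q=1 and \(\beta_{h_1}=\beta_{d_1}=\beta\).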

By matching the approximated moments in (19), we obtain the ROM immediately.

Theorem 1

The \(\hat{n}\)th-order reduced DS that approximates the NS Σ is given by

where V is obtained by

Proof

The projection matrix V in (30) is defined in the same way as in [2]. From (30) and by following the same steps as in [35], we have

Thus, the DS \(\hat{\varSigma}_{s}\) matches the first \(\hat{n}\) moments of the DS \(\varSigma_{s}\), which gives an approximation to the NS Σ. □

Remark 1

By the Padé approximation of \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\), the MOR problem for the NS Σ is transformed into the MOR problem for the delay-free DS \(\varSigma_{s}\). The main advantage is that standard state-space techniques can be applied directly to the resulting ROM for analysis and simulation. Furthermore, a reduced-order DS is more convenient in practice than a ROM with delays, because traditional simulation software is designed for delay-free systems. However, the order of the DS \(\varSigma_{s}\), \(\tilde{n}=n ( 1+\sum_{i=1}^{p}\beta_{h_{i}}+\sum_{j=1}^{q}\beta_{d_{j}} ) \) in (27), is determined by the orders of the Padé approximations of \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\) and is considerably higher than n, especially when p and q are large. Therefore, the result in Theorem 1 is more suitable for NSs with small p and q, or when the ROM is required to be delay-free.
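To make the size of \(\varSigma_{s}\) concrete, consider the setting of Example 1 in Sect. 4 (n=24, one state delay and one neutral delay, p=q=1, with eighth-order Padé approximations for both exponential terms):

```latex
\tilde{n}=n\Bigl(1+\sum_{i=1}^{p}\beta_{h_i}+\sum_{j=1}^{q}\beta_{d_j}\Bigr)
=24\,(1+8+8)=408 ,
```

which is the order of the transformed DS reported in that example, 17 times the order of the original NS.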

Remark 2

When \(A_{d_{j}}=0\), j=1,…,q, the NS Σ becomes a RS \(\varSigma_{\mathrm{rs}}\),

and the result in Theorem 1 also applies to the RS \(\varSigma_{\mathrm{rs}}\) after deleting the columns, rows and elements related to \(A_{d_{j}}\) from (20)–(22). Theorem 1 also holds when \(A_{h_{i}}=A_{d_{j}}=0\), in which case it reduces to the moment matching MOR result for the DS in [35].

3 ROM by Taylor Series Expansion

3.1 Moment Matching Around \(s_0=0\)

The following inverse formula is needed for the later development.

Lemma 2

(See [12], p. 679)

The inverse of \(X_0+sX_1+s^{2}X_2+\cdots+s^{r-1}X_{r-1}\) is given by

where

In order to approximate \(G_X(s)\) around \(s_0=0\),

is assumed to be invertible. When the NS Σ reduces to the DS \(\varSigma_{\mathrm{ds}}\) in (6), the assumption that \(\Gamma_0\) (which then equals −A) is nonsingular becomes the standard assumption for computing the moments of the DS in (7). In the following proposition, we approximate \(G_X(s)\) around \(s_0=0\) by combining the idea of approximating the exponential terms \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\), i=1,…,p, j=1,…,q, by their Taylor series expansions [42, p. 834] with the inverse formula in Lemma 2. The \(G_i\) in (10) are then expressed by matrices of the same dimension as those of the original NS Σ, and a lower-order NS is obtained by matching these \(G_i\).
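Whatever closed form is used in Lemma 2, its coefficients must satisfy the conditions obtained by matching powers of s in \((\sum_{j}s^{j}X_{j})(\sum_{k}s^{k}Y_{k})=I\), which give the recursion \(Y_0=X_0^{-1}\), \(Y_k=-X_0^{-1}\sum_{j=1}^{\min(k,r-1)}X_jY_{k-j}\) for \(k\ge 1\). The following NumPy sketch implements this recursion; it is an illustration under that reading, not a transcription of the formula in [12], and the function name is hypothetical.

```python
import numpy as np


def matpoly_inverse_series(X, k_max):
    """First k_max coefficients Y_k of (X_0 + s X_1 + ... + s^{r-1} X_{r-1})^{-1} = sum_k s^k Y_k.

    Matching powers of s in (sum_j s^j X_j)(sum_k s^k Y_k) = I gives
    Y_0 = X_0^{-1} and Y_k = -X_0^{-1} sum_{j=1}^{min(k, r-1)} X_j Y_{k-j} for k >= 1.
    X_0 must be nonsingular.
    """
    r = len(X)
    Y = [np.linalg.inv(X[0])]
    for k in range(1, k_max):
        acc = np.zeros_like(Y[0])
        for j in range(1, min(k, r - 1) + 1):
            acc += X[j] @ Y[k - j]
        Y.append(-np.linalg.solve(X[0], acc))
    return Y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 4
    X = [3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n)),
         rng.standard_normal((n, n)), rng.standard_normal((n, n))]
    Y = matpoly_inverse_series(X, 12)
    s = 0.01
    P = X[0] + s * X[1] + s**2 * X[2]
    S = sum(Yk * s**k for k, Yk in enumerate(Y))
    print(np.max(np.abs(S @ P - np.eye(n))))   # near machine precision for small |s|
```

Only one factorization of \(X_0\) is needed in principle, which is what keeps this approach cheap: every coefficient has the same size as the original matrices.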

Proposition 2

\(G_X(s)\) is approximated around \(s_0=0\) by

where

Proof

We first expand the exponential terms \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\), i=1,…,p, j=1,…,q, in Taylor series around \(s_0=0\) [42, p. 834],

which give

where \(\Gamma_k\), k=0,1,…, are defined in (32), (35) and (36). Truncating the right-hand side of (37) to its first \(r\geq \hat{n}\) terms gives

Then (33) follows directly by applying the inverse formula in Lemma 2 to \(G_{\mathrm{app}}(s)\). □
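Because (32), (35) and (36) are not reproduced in this text, the coefficients can be illustrated under an ASSUMED form of (1), namely \(E\dot{x}(t)-\sum_{j}A_{d_j}\dot{x}(t-d_j)=Ax(t)+\sum_{i}A_{h_i}x(t-h_i)+Bu(t)\), for which \(G_X(s)=\Gamma(s)^{-1}B\). Substituting the exponential series and collecting powers of s gives the expressions below. They reduce to \(\Gamma_0=-A\) and \(\Gamma_1=E\) in the delay-free case, consistent with the DS moments in (7), but the paper's own sign conventions may differ.

```latex
\begin{aligned}
\Gamma(s)&=sE-A-\sum_{i=1}^{p}A_{h_i}e^{-sh_i}-s\sum_{j=1}^{q}A_{d_j}e^{-sd_j}
=\sum_{k=0}^{\infty}\Gamma_k s^{k},\qquad
e^{-s\tau}=\sum_{k=0}^{\infty}\frac{(-\tau)^{k}}{k!}s^{k},\\
\Gamma_0&=-A-\sum_{i=1}^{p}A_{h_i},\qquad
\Gamma_1=E+\sum_{i=1}^{p}h_iA_{h_i}-\sum_{j=1}^{q}A_{d_j},\\
\Gamma_k&=-\sum_{i=1}^{p}\frac{(-h_i)^{k}}{k!}A_{h_i}
-\sum_{j=1}^{q}\frac{(-d_j)^{k-1}}{(k-1)!}A_{d_j},\qquad k\ge 2.
\end{aligned}
```

Every \(\Gamma_k\) is a linear combination of E, A, \(A_{h_i}\) and \(A_{d_j}\) with scalar weights, so a congruence \(V^{T}(\cdot)V\) applied to the system matrices maps \(\Gamma_k\) to \(V^{T}\Gamma_kV\).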

From Proposition 2, the Krylov subspace for the NS Σ is approximated by the coefficient matrices of \(G_{\mathrm{app}}(s)\) in (38), as stated in the following proposition.

Proposition 3

The Krylov subspace around 0 for the NS Σ is approximated by

Remark 3

In the case of \(A_{h_{i}}=A_{d_{j}}=0\), i=1,…,p, j=1,…,q, \(K ( 0,\varSigma ,\hat{n} ) \) becomes \(K ( 0,\varSigma_{\mathrm{ds}},\hat{n}) \) in (8). So, Proposition 3 not only provides an approximation to the Krylov subspace for NS, but also gives an extension of Krylov subspace from the DS [7] to the NS.

Similarly to the way the projection matrix V (with \(\operatorname{colspan} ( V ) \supseteq K ( 0,\varSigma_{\mathrm{ds}},\hat{n})\) and \(V^{T}V=I\)) is generated for MOR of the DS [35] or of the single-parameter linear system [12], the projection matrix V is obtained by

to get the reduced-order system \(\hat{\varSigma}\) in (40).

Theorem 2

The \(\hat{n}\)th reduced-order NS is given by

Proof

From Proposition 2, X(s) can be approximated by

where U(s) is the Laplace transform of u(t). Inspired by [12, 35], we seek a projection matrix V in (39) that matches the first \(\hat{n}\) terms of the approximated \(G_X(s)\), which are also the first \(\hat{n}\) coefficients of θ(s). Assuming \(\theta ( s ) =V\hat{\theta}( s ) \) and considering

we obtain

where Y(s) is the Laplace transform of y(t). From the expressions for \(\Gamma_k\), \(k=0,1,\ldots ,\hat{n}-1\), in (32), (35) and (36), it is easy to show that

Consequently, it follows that

which is equivalent to the reduced-order NS \(\hat{\varSigma}\), where \(\hat{x}( t ) \) is the inverse Laplace transform of \(\hat{\theta} ( s ) \). □
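A compact end-to-end sketch of Propositions 2 and 3 and Theorem 2 follows. It ASSUMES that the matrix inverted in \(G_X(s)\) is \(\Gamma(s)=sE-A-\sum_iA_{h_i}e^{-sh_i}-s\sum_jA_{d_j}e^{-sd_j}\) (one plausible reading of (1), not confirmed by the text shown here). It computes the Taylor coefficients \(\Gamma_k\), generates the state moments with a Lemma-2-type recursion, orthonormalizes them with a QR factorization, and projects every matrix of the NS while keeping the delays. Because every \(\Gamma_k\) is linear in the system matrices, the reduced coefficients are \(V^{T}\Gamma_kV\), and the first q output moments of the reduced NS coincide with those of the original NS. The helper names are hypothetical, and the QR step on the raw moment vectors is a simplification; for larger q an Arnoldi-type orthogonalization is numerically preferable.

```python
import numpy as np
from math import factorial


def gamma_coeffs(E, A, Ahs, hs, Ads, ds, r):
    """Gamma_0, ..., Gamma_{r-1} with Gamma(s) = sum_k s^k Gamma_k for the ASSUMED
    Gamma(s) = sE - A - sum_i Ah_i exp(-s h_i) - s sum_j Ad_j exp(-s d_j)."""
    out = []
    for k in range(r):
        Gk = -A if k == 0 else (E if k == 1 else np.zeros_like(A))
        Gk = Gk - sum((-h) ** k / factorial(k) * Ah for Ah, h in zip(Ahs, hs))
        if k >= 1:
            Gk = Gk - sum((-d) ** (k - 1) / factorial(k - 1) * Ad for Ad, d in zip(Ads, ds))
        out.append(Gk)
    return out


def state_moments(G, B, q):
    """W_0, ..., W_{q-1} with Gamma(s)^{-1} B = sum_k s^k W_k (Lemma-2-type recursion)."""
    W = [np.linalg.solve(G[0], B)]
    for k in range(1, q):
        acc = np.zeros_like(W[0])
        for j in range(1, min(k, len(G) - 1) + 1):
            acc += G[j] @ W[k - j]
        W.append(-np.linalg.solve(G[0], acc))
    return W


def reduce_ns(E, A, Ahs, hs, Ads, ds, B, C, q):
    """Project all NS matrices onto an orthonormal basis V of span{W_0, ..., W_{q-1}};
    the delays h_i, d_j are unchanged, so the ROM is again an NS."""
    W = state_moments(gamma_coeffs(E, A, Ahs, hs, Ads, ds, q), B, q)
    V, _ = np.linalg.qr(np.hstack(W))

    def proj(M):
        return V.T @ M @ V

    return (V, proj(E), proj(A), [proj(M) for M in Ahs], [proj(M) for M in Ads],
            V.T @ B, C @ V)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, q = 40, 5
    E, A = np.eye(n), -np.diag(np.linspace(2.0, 6.0, n))
    Ahs, hs = [0.05 * rng.standard_normal((n, n))], [0.5]
    Ads, ds = [0.05 * rng.standard_normal((n, n))], [0.2]
    B, C = rng.standard_normal((n, 1)), rng.standard_normal((1, n))
    V, Er, Ar, Ahr, Adr, Br, Cr = reduce_ns(E, A, Ahs, hs, Ads, ds, B, C, q)
    # output moments C W_k of the full NS and of the reduced NS
    full = [C @ W for W in state_moments(gamma_coeffs(E, A, Ahs, hs, Ads, ds, q), B, q)]
    red = [Cr @ W for W in state_moments(gamma_coeffs(Er, Ar, Ahr, hs, Adr, ds, q), Br, q)]
    print(max(float(np.max(np.abs(f - g))) for f, g in zip(full, red)))  # rounding-level mismatch
```

Note that the reduced model has order q times the number of inputs here, since whole moment blocks are kept.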

Corollary 1

The result in Theorem 2 still holds for the RS \(\varSigma_{\mathrm{rs}}\) in (31) by setting \(A_{d_{j}}=0\), j=1,…,q, in (35) and (36). In the case p=q=1, the projection matrix V in (39) is given by

Remark 4

The MOR result in [42] considers the reduction of a special kind of RS with \(C=B^{T}\). The moments of this special RS are approximated by the moments of a large-scale DS with system matrices given by

where r is defined in (38). This leads to two obvious shortcomings. First, because a low-order ROM may have a large error, the ROM may have to be of higher dimension than the original RS. Second, the high-order system matrices \(\bar{\mathcal{C}}\), \(\bar{\mathcal{G}}\) and \(\bar{\mathcal{L}}\) cause the method to fail for higher-order RSs, because storing them can be memory-demanding. Proposition 2 avoids this by using the inverse formula in Lemma 2 to produce moments of the same dimension as the original NS. The comparison with the result in [42, p. 834] is shown in Examples 2 and 3 in Sect. 4.

Remark 5

MOR problems with a ROM in NS form are also investigated in [43] and [45] by guaranteeing that the energy-to-peak gain or the \(H_{\infty}\) norm of the error system, respectively, is less than a given scalar, in terms of LMIs with inverse constraints. This problem is solved by the CCL algorithm [13], which transforms it into a minimization problem subject to the original LMIs and additional LMIs arising from the inverse constraints; these are in turn solved by the IPM. However, the IPM requires all matrix variables to be stacked into one very large vector variable [8]. For large matrix variables this can cause out-of-memory failures, so the IPM cannot handle large-scale LMIs. Moreover, solving LMIs with inverse constraints is computationally expensive because it requires solving a minimization problem. Although the LMI-based method provides a good approximation of the original NS by ensuring global accuracy, its high computational cost makes it inapplicable to high-order NSs with MCDs. The comparison with the methods in [43] and [45] is given in Example 4 in Sect. 4.

3.2 Extension to a Point \(s_0\neq 0\) and Multi-point Moment Matching

The result in Theorem 2 is extended to a nonzero point \(s_0\) in the following theorem. Assume that

is nonsingular, so that \(G_X(s)\) can be approximated around \(s_0\).

Theorem 3

The projection matrix V is obtained from the approximated Krylov subspace around \(s_0\)

and the \(\hat{n}\)th reduced-order NS is given by

where

Proof

By expanding \(e^{-\mathrm{sh}_{i}}\) and \(e^{-\mathrm{sd}_{j}}\), i=1,…,p, j=1,…,q, in Taylor series around \(s_0\),

we have

where \(\Upsilon_k\), k=0,1,…, are defined in (41), (45) and (46). The proof can then be completed in the same way as the proofs of Proposition 2 and Theorem 2. □
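For reference, the expansion coefficients around \(s_0\) can be reconstructed under the ASSUMED form \(\Gamma(s)=sE-A-\sum_iA_{h_i}e^{-sh_i}-s\sum_jA_{d_j}e^{-sd_j}\) of the matrix inverted in \(G_X(s)\). Writing \(e^{-s\tau}=e^{-s_0\tau}\sum_{k\ge 0}(-\tau)^{k}(s-s_0)^{k}/k!\) and \(s=s_0+(s-s_0)\), and collecting powers of \(s-s_0\), gives the expressions below. They may differ in grouping or sign convention from (41), (45) and (46), which are not reproduced here; setting \(s_0=0\) recovers the expansion around zero.

```latex
\begin{aligned}
\Upsilon_0&=\Gamma(s_0)=s_0E-A-\sum_{i=1}^{p}e^{-s_0h_i}A_{h_i}-s_0\sum_{j=1}^{q}e^{-s_0d_j}A_{d_j},\\
\Upsilon_1&=E+\sum_{i=1}^{p}h_i\,e^{-s_0h_i}A_{h_i}-\sum_{j=1}^{q}(1-s_0d_j)\,e^{-s_0d_j}A_{d_j},\\
\Upsilon_k&=-\sum_{i=1}^{p}\frac{(-h_i)^{k}}{k!}\,e^{-s_0h_i}A_{h_i}
-\sum_{j=1}^{q}\Bigl(s_0\frac{(-d_j)^{k}}{k!}+\frac{(-d_j)^{k-1}}{(k-1)!}\Bigr)e^{-s_0d_j}A_{d_j},
\qquad k\ge 2.
\end{aligned}
```

In particular \(\Upsilon_1=\Gamma'(s_0)\), so the nonsingularity assumption stated before Theorem 3 is exactly the invertibility of \(\Gamma(s_0)\).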

Theorem 3 can be further extended to the multi-point case.

Corollary 2

The projection matrix V in (42), obtained from the approximated Krylov subspaces around multiple points \(s_1, s_2,\ldots,s_g\), is given by

where \(K ( s_{i},\varSigma ,\hat{n} ) \), i=1,…,g, are defined in (43).
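Corollary 2 can be illustrated with the following NumPy sketch, which ASSUMES \(\Gamma(s)=sE-A-\sum_iA_{h_i}e^{-sh_i}-s\sum_jA_{d_j}e^{-sd_j}\) (one plausible reading of (1)) and real expansion points. For each point it computes a few Taylor coefficients of \(\Gamma(s)\), generates the corresponding state moments, stacks all of them and extracts an orthonormal basis with an SVD, discarding numerically dependent directions. Because \(V^{T}\Gamma(s)V\) is the matrix of the projected NS for every s, the reduced transfer function interpolates the original one (and, with two moments per point, its first derivative) at every expansion point. Complex points would require splitting the moments into real and imaginary parts, which is omitted here; all names are hypothetical.

```python
import numpy as np
from math import factorial


def ns_tf(E, A, Ahs, hs, Ads, ds, B, C, s):
    """C Gamma(s)^{-1} B with the ASSUMED Gamma(s) = sE - A - sum Ah e^{-sh} - s sum Ad e^{-sd}."""
    Gam = s * E - A
    for Ah, h in zip(Ahs, hs):
        Gam = Gam - np.exp(-s * h) * Ah
    for Ad, d in zip(Ads, ds):
        Gam = Gam - s * np.exp(-s * d) * Ad
    return C @ np.linalg.solve(Gam, B)


def upsilon_coeffs(E, A, Ahs, hs, Ads, ds, s0, r):
    """Taylor coefficients Upsilon_0, ..., Upsilon_{r-1} of Gamma(s) about a real point s0."""
    out = []
    for k in range(r):
        Uk = s0 * E - A if k == 0 else (E if k == 1 else np.zeros_like(A))
        for Ah, h in zip(Ahs, hs):
            Uk = Uk - np.exp(-s0 * h) * (-h) ** k / factorial(k) * Ah
        for Ad, d in zip(Ads, ds):
            c = s0 * (-d) ** k / factorial(k) + ((-d) ** (k - 1) / factorial(k - 1) if k >= 1 else 0.0)
            Uk = Uk - np.exp(-s0 * d) * c * Ad
        out.append(Uk)
    return out


def multipoint_basis(E, A, Ahs, hs, Ads, ds, B, points, k_per_point, tol=1e-10):
    """Orthonormal basis of the union of the approximated Krylov subspaces about each point."""
    cols = []
    for s0 in points:
        U = upsilon_coeffs(E, A, Ahs, hs, Ads, ds, s0, k_per_point)
        W = [np.linalg.solve(U[0], B)]
        for k in range(1, k_per_point):
            acc = np.zeros_like(W[0])
            for j in range(1, k + 1):
                acc = acc + U[j] @ W[k - j]
            W.append(-np.linalg.solve(U[0], acc))
        cols.extend(W)
    Q, sv, _ = np.linalg.svd(np.hstack(cols), full_matrices=False)
    return Q[:, sv > tol * sv[0]]   # drop numerically dependent directions


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 30
    E, A = np.eye(n), -np.diag(np.linspace(2.0, 6.0, n))
    Ahs, hs = [0.05 * rng.standard_normal((n, n))], [0.5]
    Ads, ds = [0.05 * rng.standard_normal((n, n))], [0.2]
    B, C = rng.standard_normal((n, 1)), rng.standard_normal((1, n))
    pts = [0.0, 1.0, 3.0]
    V = multipoint_basis(E, A, Ahs, hs, Ads, ds, B, pts, k_per_point=2)
    red = (V.T @ E @ V, V.T @ A @ V, [V.T @ M @ V for M in Ahs], hs,
           [V.T @ M @ V for M in Ads], ds, V.T @ B, C @ V)
    for s0 in pts:   # full and reduced values coincide at every expansion point
        print(s0, ns_tf(E, A, Ahs, hs, Ads, ds, B, C, s0), ns_tf(*red, s0))
```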

4 Numerical Examples

All computations in this section were performed on an Intel Core 2 Quad 2.66 GHz processor with 2.87 GB of memory. The first example shows that, for the same ROM order, the Taylor series expansion method gives a more accurate ROM than the Padé approximation method, although the latter has the advantage of producing a delay-free ROM, which is easier to simulate and analyze with standard state-space techniques than a system with delays.

Example 1

An artificial NS Σ of order 24, with two inputs, two outputs, a single delay in the state and a single delay in the derivative of the state, is to be reduced. Eighth-order Padé approximations of the exponential terms in the original NS give, by Proposition 1, a transformed DS of order 408, from which Theorem 1 yields a fourth-order ROM in DS form. If instead the exponential terms are approximated by their Taylor series truncated to the first 4 terms, Theorem 2 gives a fourth-order NS. The time domain responses, time domain errors and relative errors for the input \(u(t)=e^{-1.5t}\sin(2t)[1\ 1]^{T}\) are compared in Fig. 1. The frequency domain responses, in terms of the maximal singular value (MSV) of the transfer functions, are compared in Fig. 2. These figures show that the ROM from the Taylor series expansion method captures the original NS better than the ROM from the Padé approximation method. A higher-order ROM from the Padé approximation method may match the original system better (see Remark 1 for details).

The next example shows that multi-point moment matching outperforms single-point moment matching, especially when the frequency response of the original system has multiple local minima and maxima.

Example 2

A RS is constructed by taking \(E=I_{1006}\) and A, B and C from the FOM example in [10], and setting \(A_{h_{1}}=0.1I_{1006}\) and \(h_1=1\). The method in [42] fails on this example because it runs out of memory (see Remark 4 for details). Using Theorems 2 and 3 and Corollary 2, the time domain comparison in Fig. 3 with the input \(u(t)=e^{-t}\sin(8t)\) shows that the 16th-order RS obtained by multi-point moment matching approximates the original system better than the 16th-order RS obtained by matching moments around zero only. The frequency domain responses, frequency domain errors and frequency domain relative errors in Fig. 4 lead to the same conclusion.

Example 3

In this example, a network consisting of a transmission line with five lands and 50 conductors, which represents the delay element, and a circuit with RLC components is considered for reduction. The network is modeled by a RS of order 602 with four delays in the states. The method in [42] again cannot be applied because it runs out of memory. Using the method in Theorem 2, a reduced-order RS of order 30 is obtained by approximating the exponential terms by the first 30 terms of their Taylor series expansions. The time domain responses, time domain error and relative error for \(u(t)=\sin(10^{12}t)\) are plotted in Fig. 5. Moreover, Figs. 6 and 7 show the frequency domain responses, frequency domain errors and relative errors at low and high frequencies, respectively. The reduced-order RS clearly matches the original system very well.

The following example compares the proposed method with the existing LMI-based methods in [43, 45] for reducing NSs and with the method in [42] for reducing RSs.

Example 4

Four examples are used for comparison: the three examples above and a small PEEC model taken from [6, 24]. The system matrices A, \(A_{h_{1}}\) and \(A_{d_{1}}\) and the delays \(h_1\) and \(d_1\) are given in [6]. B and C are chosen to be

The time domain and frequency domain comparisons are given in Figs. 8 and 9, with the time domain input \(u(t)=\cos(5t)\). The ROM from the proposed method clearly matches the original system better than the ROM from the LMI-based method. Moreover, as shown in Table 1, the proposed method in Theorem 2, which constructs a projection matrix to match the approximated moments, takes less time than the LMI-based method. Table 1 also shows that the LMI-based method cannot reduce NSs of order greater than 24 because it runs out of memory (see Remark 5 for details), and that the method in [42] has the same problem when reducing the RS of order 1006. We conclude that the proposed method is of greater practical use, especially for reducing large-scale NSs.

5 Conclusions

In this paper, the moment matching method is used to obtain two different kinds of ROM that approximate a NS with MCDs, depending on how the exponential terms in the transfer function of the original NS are approximated. Padé approximation of the exponential terms yields a delay-free system in the form of a high-order DS, at the obvious price of higher storage and computational complexity. However, a delay-free ROM facilitates analysis and application with standard state-space techniques, as most traditional simulation software is designed for delay-free systems. The other ROM, obtained by replacing the exponential terms by their Taylor series expansions, has the same structure as the original NS. Its most important advantage is that the approximated moments have the same dimension as the original NS, which makes the method capable of reducing higher-order NSs. The proposed results apply directly to RSs by deleting the matrices related to the derivative of the delayed state. Numerical examples have demonstrated that the Taylor series expansion-based MOR method is much more suitable for reducing high-order NSs than existing MOR methods. Future research will focus on ROMs that preserve stability and passivity through additional constraints.

References

B. Anderson, S. Vongpanitlerd, Network Analysis and Synthesis: A Modern Systems Theory Approach (Dover, New York, 2006)

A.C. Antoulas, Approximation of Large-Scale Dynamical Systems (SIAM, Philadelphia, 2005)

A. Astolfi, Model reduction by moment matching for linear and nonlinear systems. IEEE Trans. Autom. Control 55, 2321–2336 (2010)

Z. Bai, Krylov subspace techniques for reduced-order modeling of large-scale dynamical systems. Appl. Numer. Math. 43, 9–44 (2002)

Z. Bai, Y. Su, Dimension reduction of large-scale second-order dynamical systems via a second-order Arnoldi method. SIAM J. Sci. Comput. 26, 1692–1709 (2005)

A. Bellen, N. Guglielmi, A.E. Ruehli, Methods for linear systems of circuit delay differential equations of neutral type. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 46, 212–216 (1999)

D.L. Boley, Krylov space methods on state-space control models. Circuits Syst. Signal Process. 13, 733–758 (1994)

S. Boyd, L. El Ghaoui, E. Feron, V. Balakrishnan, Linear Matrix Inequalities in System and Control Theory (SIAM, Philadelphia, 1994)

S. Cauet, F. Hutu, P. Coirault, Time-varying delay passivity analysis in 4 GHz antennas array design. Circuits Syst. Signal Process. 31, 93–106 (2012)

Y. Chahlaoui, P. Van Dooren, A collection of benchmark examples for model reduction of linear time invariant dynamical systems. Publishing Physics Web. http://www.icm.tu-bs.de/NICONET/benchmodred.html (2010)

Q. Chen, N. Wong, Efficient numerical modeling of random rough surface effects in interconnect resistance extraction. Int. J. Circuit Theory Appl. 37, 751–763 (2009)

L. Daniel, O.C. Siong, L.S. Chay, K.H. Lee, J. White, A multiparameter moment-matching model-reduction approach for generating geometrically parameterized interconnect performance models. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 23, 678–693 (2004)

L. El Ghaoui, F. Oustry, M. AitRami, A cone complementarity linearization algorithm for static output-feedback and related problems. IEEE Trans. Autom. Control 42, 1171–1176 (1997)

P. Feldmann, R.W. Freund, Efficient linear circuit analysis by Padé approximation via the Lanczos process. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 14, 639–649 (1995)

L. Fortuna, G. Nunnari, A. Gallo, Model Order Reduction Techniques, with Applications in Electrical Engineering (Springer, Berlin, 1992)

R.W. Freund, SPRIM: structure-preserving reduced-order interconnect macromodeling, in IEEE/ACM International Conference on Computer Aided Design (2004), pp. 80–87

K. Gallivan, E. Grimme, P. Van Dooren, A rational Lanczos algorithm for model reduction. Numer. Algorithms 12, 33–63 (1996)

H. Gao, T. Chen, J. Lam, A new delay system approach to network-based control. Automatica 44, 39–52 (2008)

G.H. Golub, C.F. Van Loan, Matrix Computations (Johns Hopkins University Press, Baltimore, 1989)

E. Grimme, Krylov projection methods for model reduction. PhD thesis, University of Illinois at Urbana-Champaign (1997)

E.J. Grimme, D.C. Sorensen, P. Van Dooren, Model reduction of state space systems via an implicitly restarted Lanczos method. Numer. Algorithms 12, 1–31 (1996)

T. Hamamoto, T. Song, T. Katayama, T. Shimamoto, Complexity reduction algorithm for hierarchical B-picture of H.264/SVC. Int. J. Innov. Comput., Inf. Control 7, 445–457 (2011)

Q.-L. Han, Robust stability of uncertain delay-differential systems of neutral type. Automatica 38, 719–723 (2002)

Q.-L. Han, Stability analysis for a partial element equivalent circuit (PEEC) model of neutral type. Int. J. Circuit Theory Appl. 33, 321–332 (2005)

H.-L. Hung, Y.F. Huang, Peak to average power ratio reduction of multicarrier transmission systems using electromagnetism-like method. Int. J. Innov. Comput., Inf. Control 7, 2037–2050 (2008)

S. Lall, J.E. Marsden, S. Glavaški, A subspace approach to balanced truncation for model reduction of nonlinear control systems. Int. J. Robust Nonlinear Control 12, 519–535 (2002)

J. Lam, Model reduction of delay systems using Padé approximants. Int. J. Control 57, 377–391 (1993)

J. Lam, H. Gao, C. Wang, Stability analysis for continuous systems with two additive time-varying delay components. Syst. Control Lett. 56, 16–24 (2007)

Y. Li, J. Lam, X. Luo, Convex optimization approaches to robust L1 fixed-order filtering for polytopic systems with multiple delays. Circuits Syst. Signal Process. 27, 1–22 (2008)

G.R. Naik, D.K. Kumar, Dimensional reduction using blind source separation for identifying sources. Int. J. Innov. Comput., Inf. Control 7, 989–1000 (2011)

N.M. Nakhla, A. Dounavis, R. Achar, M.S. Nakhla, DEPACT: Delay extraction-based passive compact transmission-line macromodeling algorithm. IEEE Trans. Adv. Packag. 28, 13–23 (2005)

S.-I. Niculescu, On delay-dependent stability under model transformations of some neutral linear systems. Int. J. Control 74, 609–617 (2001)

D. Ning, J. Roychowdhury, General-purpose nonlinear model-order reduction using piecewise-polynomial representations. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 27, 249–264 (2008)

G. Obinata, B.D.O. Anderson, Model Reduction for Control System Design (Springer, Berlin, 2001)

A. Odabasioglu, M. Celik, L.T. Pileggi, PRIMA: passive reduced-order interconnect macromodeling algorithm. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 17, 645–654 (1998)

J.R. Phillips, Projection-based approaches for model reduction of weakly nonlinear time-varying systems. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 22, 171–187 (2003)

J. Qiu, H. Yang, P. Shi, Y. Xia, Robust H∞ control for class of discrete-time Markovian jump systems with time-varying delays based on delta operator. Circuits Syst. Signal Process. 27, 627–643 (2008)

J.-P. Richard, Time-delay systems: an overview of some recent advances and open problems. Automatica 39, 1667–1694 (2003)

B. Song, S. Xu, Y. Zou, Delay-dependent robust H∞ filtering for uncertain neutral stochastic time-delay systems. Circuits Syst. Signal Process. 28, 241–256 (2009)

X. Su, L. Wu, P. Shi, Y.-D. Song, H∞ model reduction of Takagi-Sugeno fuzzy stochastic systems. IEEE Trans. Syst., Man, Cybern., B, Cybern. (online version: http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=06202714)

T.J. Tsai, J.S.H. Tsai, S.M. Guo, G. Chen, An optimal linear-quadratic digital tracker for analog neutral time-delay systems. IMA J. Math. Control Inf. 23, 43–66 (2006)

W.L. Tseng, C.Z. Chen, E. Gad, M. Nakhla, R. Achar, Passive order reduction for RLC circuits with delay elements. IEEE Trans. Adv. Packag. 30, 830–840 (2007)

Q. Wang, J. Lam, S. Xu, Delay-dependent energy-to-peak model reduction for neutral systems with time-varying delays, in Proceedings of the 7th Asia-Pacific Conference on Complex Systems (2004), pp. 448–461

Q. Wang, J. Lam, S. Xu, H. Gao, Delay-dependent and delay-independent energy-to-peak model approximation for systems with time-varying delay. Int. J. Syst. Sci. 36, 445–460 (2005)

Q. Wang, J. Lam, S. Xu, L. Zhang, Delay-dependent γ-suboptimal H∞ model reduction for neutral systems with time-varying delays. J. Dyn. Syst. Meas. Control 128, 364–399 (2006)

X. Wang, Q. Wang, Z. Zhang, Q. Chen, N. Wong, Balanced truncation for time-delay systems via approximate Gramians, in 16th Asia and South Pacific Design Automation Conference (ASP-DAC) (2011), pp. 55–60

Z. Wang, J. Lam, K.J. Burnham, Stability analysis and observer design for neutral delay system. IEEE Trans. Autom. Control 47, 478–483 (2002)

N. Wong, V. Balakrishnan, Fast positive-real balanced truncation via quadratic alternating direction implicit iteration. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 26, 1725–1731 (2007)

L. Wu, P. Shi, H. Gao, C. Wang, A new approach to robust H∞ filtering for uncertain systems with both discrete and distributed delays. Circuits Syst. Signal Process. 26, 229–247 (2007)

L. Wu, P. Shi, H. Gao, J. Wang, H∞ model reduction for linear parameter-varying systems with distributed delay. Int. J. Control 82, 408–422 (2009)

L. Wu, X. Su, P. Shi, J. Qiu, Model approximation for discrete-time state-delay systems in the T-S fuzzy framework. IEEE Trans. Fuzzy Syst. 19, 366–378 (2011)

L. Wu, W.X. Zheng, Weighted H∞ model reduction for linear switched systems with time-varying delay. Automatica 45, 186–193 (2009)

S. Xu, J. Lam, New positive realness conditions for uncertain discrete descriptor systems: analysis and synthesis. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 51, 1897–1905 (2004)

S. Xu, J. Lam, S. Huang, C. Yang, H∞ model reduction for linear time-delay systems: continuous-time case. Int. J. Control 74, 1062–1074 (2001)

S. Xu, J. Lam, On H∞ filtering for a class of uncertain nonlinear neutral systems. Circuits Syst. Signal Process. 23, 215–230 (2004)

S. Xu, J. Lam, H. Gao, Y. Zou, Robust H∞ filtering for uncertain discrete stochastic systems with time delays. Circuits Syst. Signal Process. 24, 753–770 (2005)

R. Yang, H. Gao, J. Lam, P. Shi, New stability criteria for neural networks with distributed and probabilistic delays. Circuits Syst. Signal Process. 28, 505–522 (2009)

Z. Zhang, N. Wong, Passivity test of immittance descriptor systems based on generalized Hamiltonian methods. IEEE Trans. Circuits Syst. II, Analog Digit. Signal Process. 57, 61–65 (2010)

W. Zhou, D. Tong, H. Lu, Q. Zhong, J. Fang, Time-delay dependent H∞ model reduction for uncertain stochastic systems: continuous-time case. Circuits Syst. Signal Process. 30, 941–961 (2011)

Acknowledgements

This work was supported by the General Research Fund (GRF) project 718711E.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Wang, Q., Wang, Y., Lam, E.Y. et al. Model Order Reduction for Neutral Systems by Moment Matching. Circuits Syst Signal Process 32, 1039–1063 (2013). https://doi.org/10.1007/s00034-012-9483-1
