Abstract

We establish variational estimates related to the problem of restricting the Fourier transform of a three-dimensional function to the two-dimensional Euclidean sphere. At the same time, we give a short survey of the recent field of maximal Fourier restriction theory.


1 Introduction

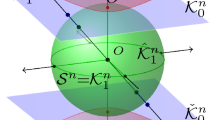

This short note serves primarily as a commentary on the very recent topic of pointwise estimates for the operator that restricts the Fourier transform to a hypersurface. We will concentrate exclusively on the case of the two-dimensional unit sphere \({\mathbb {S}}^2\) in three-dimensional Euclidean space \({\mathbb {R}}^3\). This both simplifies the exposition and allows us to treat a fairly general class of averaging measures.

Classical Fourier restriction theory seeks a priori \(L ^p\)-estimates for the operator \(f\mapsto {\widehat{f}}|_S\), where S is a hypersurface in Euclidean space. In the case of \({\mathbb {S}}^2\subset {\mathbb {R}}^3\), the endpoint Tomas–Stein inequality [17, 19] reads as follows:

$$\begin{aligned} \big \Vert {\widehat{f}}|_{{\mathbb {S}}^2} \big \Vert _{L ^2({\mathbb {S}}^2,\sigma )} \lesssim \Vert f\Vert _{L ^{4/3}({\mathbb {R}}^3)}. \end{aligned}$$(1.1)

Here \(\sigma \) denotes the standard surface measure on \({\mathbb {S}}^2\). It is well known that 4/3 is the largest exponent that can appear on the right-hand side of (1.1), provided that we keep the \(L ^2\)-norm on the left-hand side. Estimate (1.1) enables the Fourier restriction operator

$$\begin{aligned} {\mathcal {R}}:L ^{4/3}({\mathbb {R}}^3)\rightarrow L ^2({\mathbb {S}}^2,\sigma ) \end{aligned}$$(1.2)

to be defined via an approximation of the identity argument as follows. Fix a complex-valued Schwartz function \(\chi \) such that \(\int _{{\mathbb {R}}^3}\chi (x)\,d x=1\), and write \(\chi _{\varepsilon }\) for the \(L ^1\)-normalized dilate of \(\chi \), defined as

$$\begin{aligned} \chi _{\varepsilon }(x):=\varepsilon ^{-3}\chi (\varepsilon ^{-1}x) \end{aligned}$$

for \(x\in {\mathbb {R}}^3\) and \(\varepsilon >0\). Given \(f\in L ^{4/3}({\mathbb {R}}^3)\), thanks to (1.1), \({\mathcal {R}}f\) can be defined as the limit

$$\begin{aligned} {\mathcal {R}}f:=\lim _{\varepsilon \rightarrow 0^+}({\widehat{f}}*\chi _{\varepsilon })|_{{\mathbb {S}}^2} \end{aligned}$$

in the norm of the space \(L ^2({\mathbb {S}}^2,\sigma )\).

The study of maximal restriction theorems was recently initiated by Müller, Ricci, and Wright [13]. In that work, the authors considered general \(C ^2\) planar curves with nonnegative signed curvature equipped with the affine arclength measure, and established a maximal restriction theorem in the full range of exponents where the usual restriction estimate is known to hold. Shortly thereafter, Vitturi [20] provided an elementary argument that leads to a partial generalization to higher-dimensional spheres. In \({\mathbb {R}}^3\), Vitturi’s result covers the full Tomas–Stein range, whose endpoint estimate reads

$$\begin{aligned} \Big \Vert \sup _{\varepsilon >0}\big |({\widehat{f}}*\chi _{\varepsilon })(\omega )\big | \Big \Vert _{L _\omega ^{2}({\mathbb {S}}^2,\sigma )} \lesssim _{\chi } \Vert f\Vert _{L ^{4/3}({\mathbb {R}}^3)}. \end{aligned}$$(1.3)

An easy consequence of (1.3) and of the obvious convergence properties on the dense class of Schwartz functions is that the limit

$$\begin{aligned} \lim _{\varepsilon \rightarrow 0^+}({\widehat{f}}*\chi _{\varepsilon })(\omega ) \end{aligned}$$(1.4)

exists for each \(f\in L ^{4/3}({\mathbb {R}}^3)\) and for \(\sigma \)-almost every \(\omega \in {\mathbb {S}}^2\). This enables us to recover the operator (1.2) also in the pointwise sense, and not only in the \(L ^2\)-norm, which was the main motivation behind the paper [13].

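For the elegant proof of (1.3), Vitturi [20] used the following equivalent, non-oscillatory reformulation of the ordinary restriction estimate (1.1): for nonnegative measurable functions g on \({\mathbb {S}}^2\) and nonnegative \(h\in L ^2({\mathbb {R}}^3)\),

$$\begin{aligned} \int _{{\mathbb {S}}^2}\int _{{\mathbb {S}}^2} g(\omega )\,g(\omega ')\,h(\omega -\omega ')\,d \sigma (\omega )\,d \sigma (\omega ') \lesssim \Vert g\Vert _{L ^2({\mathbb {S}}^2,\sigma )}^2 \Vert h\Vert _{L ^2({\mathbb {R}}^3)}. \end{aligned}$$(1.5)

The proof of the equivalence between (1.1) and (1.5) amounts to passing to the adjoint operator (i.e., to a Fourier extension estimate) and expanding out the \(L ^4\)-norm using Plancherel’s identity. These steps are what make 4/3 and 2 the most convenient choice of exponents. The advantage of the expanded adjoint formulation (1.5) is that one can easily insert the iterated maximal function operator in h and simply invoke its boundedness on \(L ^2({\mathbb {R}}^3)\). We refer the reader to [20] for details. In some sense, we will follow a similar strategy below.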
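For nonnegative g and h, one way to write out the expansion described above is the following chain. Here \(\widetilde{\nu }(E):=\nu (-E)\) denotes the reflection of a measure \(\nu \) (our notation, introduced only for this sketch), the inequality in the first line is the Cauchy–Schwarz inequality, the second line uses Plancherel’s identity together with \(\widehat{\widetilde{g\sigma }}=\overline{\widehat{g\sigma }}\) (valid since g is real-valued), and the last step is the Fourier extension estimate dual to (1.1). This is a sketch of the standard argument rather than a quotation from [20].

$$\begin{aligned} \int _{{\mathbb {S}}^2}\int _{{\mathbb {S}}^2} g(\omega )g(\omega ')h(\omega -\omega ')\,d \sigma (\omega )\,d \sigma (\omega ') &= \int _{{\mathbb {R}}^3} h\,d \big (g\sigma *\widetilde{g\sigma }\big ) \le \Vert h\Vert _{L ^2({\mathbb {R}}^3)}\big \Vert g\sigma *\widetilde{g\sigma }\big \Vert _{L ^2({\mathbb {R}}^3)} \\ &= \Vert h\Vert _{L ^2({\mathbb {R}}^3)}\big \Vert |\widehat{g\sigma }|^2\big \Vert _{L ^2({\mathbb {R}}^3)} = \Vert h\Vert _{L ^2({\mathbb {R}}^3)}\Vert \widehat{g\sigma }\Vert _{L ^4({\mathbb {R}}^3)}^2 \lesssim \Vert h\Vert _{L ^2({\mathbb {R}}^3)}\Vert g\Vert _{L ^2({\mathbb {S}}^2,\sigma )}^2. \end{aligned}$$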

In this paper, we quantify the pointwise convergence result (1.4) in terms of the so-called variational norms. These were introduced by Lépingle [11] in the context of probability theory. Various modifications were then defined and used by Bourgain for numerous problems in harmonic analysis and ergodic theory; see for instance [5]. Recall that, given a function \(a:(0,\infty )\rightarrow {\mathbb {C}}\) and an exponent \(\varrho \in [1,\infty )\), the \(\varrho \)-variation seminorm of a is defined as

$$\begin{aligned} \Vert a\Vert _{\widetilde{V }^{\varrho }} := \sup _{\begin{array}{c} m\in {\mathbb {N}}\\ 0<\varepsilon _0<\varepsilon _1<\cdots <\varepsilon _m \end{array}} \Big ( \sum _{j=1}^{m} |a(\varepsilon _j)-a(\varepsilon _{j-1})|^{\varrho } \Big )^{1/\varrho }. \end{aligned}$$

In order to turn it into a \(\varrho \)-variation norm, we simply add the term \(|a(\varepsilon _0)|^{\varrho }\) as follows:

$$\begin{aligned} \Vert a\Vert _{V ^{\varrho }} := \sup _{\begin{array}{c} m\in {\mathbb {N}}\\ 0<\varepsilon _0<\varepsilon _1<\cdots <\varepsilon _m \end{array}} \Big ( |a(\varepsilon _0)|^{\varrho } + \sum _{j=1}^{m} |a(\varepsilon _j)-a(\varepsilon _{j-1})|^{\varrho } \Big )^{1/\varrho }. \end{aligned}$$

Since this convention is not standard in the literature, we use separate notation for the two quantities, as we will need both. Clearly, \(\Vert a\Vert _{V ^{\varrho }}\) controls both \(\sup _{\varepsilon >0}|a(\varepsilon )|\) and the number of “jumps” of \(a(\varepsilon )\) as \(\varepsilon \rightarrow 0^+\) (and even as \(\varepsilon \rightarrow \infty \), which is not the interesting case here). A particular instance of the main result of this paper (Theorem 1 below) is the following variational generalization of estimate (1.3):

$$\begin{aligned} \Big \Vert \big \Vert ({\widehat{f}}*\chi _{\varepsilon })(\omega ) \big \Vert _{V ^{\varrho }_{\varepsilon }} \Big \Vert _{L _\omega ^{2}({\mathbb {S}}^2,\sigma )} \lesssim _{\chi ,\varrho } \Vert f\Vert _{L ^{4/3}({\mathbb {R}}^3)} \end{aligned}$$(1.6)

when \(\varrho \in (2,\infty )\) and \(f\in L ^{4/3}({\mathbb {R}}^3)\). The reader can still consider \(\chi \) to be a fixed Schwartz function, but we are just about to discuss more general possible choices. Variational estimates like (1.6) for various averages and truncations of integral operators have been extensively studied; the papers [2, 6, 7, 9, 12] are just a sample from the available literature. In addition to quantifying mere convergence, such estimates establish convergence in the whole \(L ^p\)-space in an explicit and quantitative manner, without the need for pre-existing convergence results on a dense subspace. Later in the text, we will also use the biparameter \(\varrho \)-variation seminorm, defined for a function of two variables \(b:(0,\infty )\times (0,\infty )\rightarrow {\mathbb {C}}\) as
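$$\begin{aligned} \Vert b\Vert _{\widetilde{W }^{\varrho }} := \sup _{\begin{array}{c} m,n\in {\mathbb {N}}\\ 0<\varepsilon _0<\cdots <\varepsilon _m\\ 0<\eta _0<\cdots <\eta _n \end{array}} \Big ( \sum _{j=1}^{m}\sum _{k=1}^{n} \big |b(\varepsilon _j,\eta _k)-b(\varepsilon _{j-1},\eta _k)-b(\varepsilon _j,\eta _{k-1})+b(\varepsilon _{j-1},\eta _{k-1})\big |^{\varrho } \Big )^{1/\varrho }. \end{aligned}$$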
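For a function known only at finitely many values of \(\varepsilon \), the supremum in the definitions of \(\Vert \cdot \Vert _{\widetilde{V }^{\varrho }}\) and \(\Vert \cdot \Vert _{V ^{\varrho }}\), taken over partitions drawn from those sample points, can be computed exactly by dynamic programming in \(O(n^2)\) steps. The Python sketch below is our own illustration (the name `rho_variation` and the flag `include_start` are hypothetical and not taken from the cited literature); the samples are assumed to be listed in increasing order of \(\varepsilon \).

```python
def rho_variation(values, rho, include_start=False):
    """rho-variation of the finite sequence `values` (assumed ordered by epsilon).

    Returns the supremum over all subsequences k_0 < k_1 < ... < k_m of
    (sum_j |values[k_j] - values[k_{j-1}]|**rho)**(1/rho).  With
    include_start=True the term |values[k_0]|**rho is added, which mimics
    the norm V^rho rather than the seminorm.
    """
    if rho < 1:
        raise ValueError("rho must be at least 1")
    n = len(values)
    if n == 0:
        return 0.0
    # best[i] = largest achievable sum over chains that end at index i
    best = [abs(v) ** rho if include_start else 0.0 for v in values]
    for i in range(n):
        for j in range(i):
            candidate = best[j] + abs(values[i] - values[j]) ** rho
            if candidate > best[i]:
                best[i] = candidate
    return max(best) ** (1.0 / rho)


# Usage: the coarse partition {0, 2} beats the fine one {0, 1, 2} when rho = 2.
print(rho_variation([0.0, 1.0, 2.0], 2))                 # 2.0
print(rho_variation([0.0, 1.0, 2.0], 1))                 # 2.0
print(rho_variation([3.0, 3.0], 2, include_start=True))  # 3.0
```

The first example also shows that refining a partition need not increase the sum when \(\varrho >1\): the coarse partition of the samples 0, 1, 2 contributes \(2^2=4\), while the fine one contributes only \(1+1=2\).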
It is natural to consider more general averaging functions \(\chi \); this has already been suggested (albeit somewhat implicitly) in the papers [13, 20]. It is clear from the proof in [20] that the function \(\chi \) does not need to be smooth. One can, for instance, take \(\chi \) to be the \(L ^1\)-normalized indicator function of the unit ball in \({\mathbb {R}}^3\), in which case the quantities \(({\widehat{f}}*\chi _{\varepsilon })(\omega )\) become the usual Hardy–Littlewood averages of \({\widehat{f}}\) over the Euclidean balls \(B (\omega ,\varepsilon )\),

$$\begin{aligned} ({\widehat{f}}*\chi _{\varepsilon })(\omega ) = \frac{1}{|B (\omega ,\varepsilon )|}\int _{B (\omega ,\varepsilon )}{\widehat{f}}(y)\,d y. \end{aligned}$$

Moreover, Ramos [15] concluded that, for each \(f\in L ^{p}({\mathbb {R}}^3)\) and \(1\le p\le 4/3\), almost every point on the sphere is a Lebesgue point of \({\widehat{f}}\), i.e., for \(\sigma \)-a.e. \(\omega \in {\mathbb {S}}^2\), we have that

$$\begin{aligned} \lim _{\varepsilon \rightarrow 0^+}\frac{1}{|B (\omega ,\varepsilon )|}\int _{B (\omega ,\varepsilon )}\big |{\widehat{f}}(y)-({\mathcal {R}}f)(\omega )\big |\,d y = 0. \end{aligned}$$

Prior to [15], this had been confirmed for functions \(f\in L ^{p}({\mathbb {R}}^3)\), \(1\le p\le 8/7\), by Vitturi [20], who followed the two-dimensional argument of Müller, Ricci, and Wright [13].
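To make the Hardy–Littlewood averages above concrete, here is a small Monte Carlo sketch (our own illustration; the test function, the point \(\omega \), and the sample sizes are arbitrary choices). For \(F(y)=|y|^2\), the average over \(B (\omega ,\varepsilon )\) equals \(|\omega |^2+3\varepsilon ^2/5\), which tends to \(F(\omega )=1\) for \(\omega \in {\mathbb {S}}^2\) as \(\varepsilon \rightarrow 0^+\); the code compares a sampled average with this closed form.

```python
import random


def ball_average(F, center, radius, samples=50_000, seed=0):
    """Monte Carlo estimate of the average of F over the Euclidean ball B(center, radius) in R^3."""
    rng = random.Random(seed)
    total, accepted = 0.0, 0
    while accepted < samples:
        # Rejection sampling: draw z uniformly from [-1, 1]^3 and keep it if |z| <= 1.
        z = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        if sum(c * c for c in z) <= 1.0:
            total += F([p + radius * c for p, c in zip(center, z)])
            accepted += 1
    return total / accepted


def F(y):
    """Test function F(y) = |y|^2, whose ball averages are |center|^2 + 3 radius^2 / 5."""
    return sum(c * c for c in y)


omega = (0.0, 0.6, 0.8)  # a point on the unit sphere
for eps in (0.5, 0.1, 0.02):
    # sampled average versus the closed form 1 + 3 eps^2 / 5
    print(eps, ball_average(F, omega, eps), 1 + 3 * eps ** 2 / 5)
```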

Subsequent papers [10] and [14], which appeared after the first version of the present paper, generalize the averaging procedure even further, by convolving \({\widehat{f}}\) with certain averaging measures \(\mu \). In light of this more recent research, we now take the opportunity both to generalize (1.6) and to complete our short survey of maximal and variational Fourier restriction theories with the papers that appeared in the meantime. In what follows, \(\mu \) will be a finite complex measure defined on the Borel sets in \({\mathbb {R}}^3\); its dilates \(\mu _{\varepsilon }\) are now defined as

$$\begin{aligned} \mu _{\varepsilon }(E):=\mu (\varepsilon ^{-1}E) \end{aligned}$$

for every Borel set \(E\subseteq {\mathbb {R}}^3\) and \(\varepsilon >0\). For reasons of elegance, one can additionally assume that \(\mu \) is normalized by \(\mu ({\mathbb {R}}^3)=1\) and that it is even, i.e., centrally symmetric with respect to the origin, which means that

$$\begin{aligned} \mu (-E)=\mu (E) \end{aligned}$$

for each Borel set \(E\subseteq {\mathbb {R}}^3\).

In [10], one of the present authors showed analogues of (1.3) and (1.6) when \(\chi \) is replaced by a measure \(\mu \) whose Fourier transform \({\widehat{\mu }}\) is \(C ^\infty \) and satisfies the decay condition

$$\begin{aligned} |\nabla {\widehat{\mu }}(x)| = O\big (|x|^{-1-\delta }\big ) \quad \text {as } |x|\rightarrow \infty \end{aligned}$$(1.7)

for some \(\delta >0\). The following observation is worth making; it concerns only the cases of two or three dimensions, since the decay improves in higher dimensions. If one takes \(\mu \) to be the normalized spherical measure, i.e., \(\mu =\sigma /\sigma ({\mathbb {S}}^2)\), then the decay of \(|\nabla {\widehat{\mu }}(x)|\) as \(|x|\rightarrow \infty \) is only \(O(|x|^{-1})\); see [1]. Consequently, the results from [10] do not apply. This was one of the sources of motivation for Ramos [14], who reused Vitturi’s argument from [20] to conclude that the maximal estimate

$$\begin{aligned} \Big \Vert \sup _{\varepsilon >0}\big |({\widehat{f}}*\mu _{\varepsilon })(\omega )\big | \Big \Vert _{L _\omega ^{2}({\mathbb {S}}^2,\sigma )} \lesssim _{\mu } \Vert f\Vert _{L ^{4/3}({\mathbb {R}}^3)} \end{aligned}$$

holds as soon as the maximal operator \(h\mapsto \sup _{\varepsilon >0}|h*\mu _{\varepsilon }|\) is bounded on \(L ^2({\mathbb {R}}^3)\). Building on the work of Rubio de Francia [16], he further deduced the following sufficient condition in terms of the decay of \({\widehat{\mu }}\) and \(\nabla {\widehat{\mu }}\):

This condition includes the spherical measure as it satisfies \(|{\widehat{\mu }}(x)|=O(|x|^{-1})\) and \(|\nabla {\widehat{\mu }}(x)|=O(|x|^{-1})\) as \(|x|\rightarrow \infty \).
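The decay rates quoted for the normalized spherical measure can be checked from the closed form \({\widehat{\mu }}(x)=\sin (2\pi |x|)/(2\pi |x|)\), which follows under the normalization of Section 1.1 from Archimedes’ observation that the height \(t=\omega \cdot x/|x|\) is uniformly distributed on \([-1,1]\) with respect to \(\sigma \). The Python sketch below (our own illustration; all names are hypothetical) compares this formula with a direct quadrature of \(\tfrac{1}{2}\int _{-1}^{1}\cos (2\pi |x|t)\,d t\) and evaluates \(|x|\,|\nabla {\widehat{\mu }}(x)|\) at integer radii, where it equals 1; in particular, \(|\nabla {\widehat{\mu }}(x)|\) is not \(O(|x|^{-1-\delta })\).

```python
import math


def mu_hat_closed(r):
    """Closed form of the Fourier transform of sigma / sigma(S^2) at a point of norm r > 0."""
    return math.sin(2 * math.pi * r) / (2 * math.pi * r)


def mu_hat_quadrature(r, n=4000):
    """(1/2) * integral of cos(2 pi r t) over t in [-1, 1], by the composite Simpson rule (n even)."""
    h = 2.0 / n
    total = math.cos(-2 * math.pi * r) + math.cos(2 * math.pi * r)
    for k in range(1, n):
        weight = 4 if k % 2 else 2
        total += weight * math.cos(2 * math.pi * r * (-1.0 + k * h))
    return 0.5 * total * h / 3


def radial_derivative(r):
    """d/dr of mu_hat_closed(r); for a radial function its absolute value is |grad mu_hat|."""
    return math.cos(2 * math.pi * r) / r - math.sin(2 * math.pi * r) / (2 * math.pi * r ** 2)


for r in (0.7, 3.3, 10.0):
    print(r, mu_hat_closed(r), mu_hat_quadrature(r))
for r in (10.0, 100.0, 1000.0):
    print(r, r * abs(radial_derivative(r)))  # stays near 1 at integer radii
```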

The main result of this note is a variational estimate which generalizes (1.6) slightly beyond the previously covered cases of the averaging functions \(\chi \) or measures \(\mu \).

Theorem 1

Suppose that \(\varrho \in (1,\infty )\), and that \(\mu \) is a normalized, even complex measure, defined on the Borel subsets of \({\mathbb {R}}^3\), satisfying any of the following three conditions:

(a) \(-x\cdot \nabla {\widehat{\mu }}(x)\ge 0\) for each \(x\in {\mathbb {R}}^3\), and

$$\begin{aligned} \Big \Vert \big \Vert (h*\mu _{\varepsilon })(x) \big \Vert _{\widetilde{V }^{\varrho }_{\varepsilon }} \Big \Vert _{L _x^{2}({\mathbb {R}}^3)} \lesssim _{\mu ,\varrho } \Vert h\Vert _{L ^{2}({\mathbb {R}}^3)} \end{aligned}$$(1.8)

holds for each Schwartz function h;

(b) \(\varrho \in (2,\infty )\), while \({\widehat{\mu }}\) is \(C ^2\) and satisfies the decay condition (1.7);

(c) the inequality

$$\begin{aligned} \Big \Vert \big \Vert (h*\mu _{\varepsilon }*{\overline{\mu }}_{\eta })(x) \big \Vert _{\widetilde{W }^{\varrho }_{\varepsilon ,\eta }} \Big \Vert _{L _x^{2}({\mathbb {R}}^3)} \lesssim _{\mu ,\varrho } \Vert h\Vert _{L ^{2}({\mathbb {R}}^3)} \end{aligned}$$(1.9)

holds for each Schwartz function h.

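Then, for each Schwartz function f, the following estimate holds:

$$\begin{aligned} \Big \Vert \big \Vert ({\widehat{f}}*\mu _{\varepsilon })(\omega ) \big \Vert _{V ^{\varrho }_{\varepsilon }} \Big \Vert _{L _\omega ^{2}({\mathbb {S}}^2,\sigma )} \lesssim _{\mu ,\varrho } \Vert f\Vert _{L ^{4/3}({\mathbb {R}}^3)}. \end{aligned}$$(1.10)

Let us first clarify a minor technical issue. Theorem 1 states estimate (1.10) for Schwartz functions f only, but the estimate extends directly to all \(f\in L ^{4/3}({\mathbb {R}}^3)\) whenever \(\mu \) is absolutely continuous with respect to the Lebesgue measure. For singular measures \(\mu \), on the other hand, we could run into measurability issues on the left-hand side of (1.10).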

Condition (a) in Theorem 1 is quite restrictive, but it is satisfied at least when \(\varrho >2\) and the Radon–Nikodym density of \(\mu \) is a radial Gaussian function. Indeed, if \(d \mu (x)=\alpha ^3 e^{-\pi \alpha ^2|x|^2}\,d x\) for some \(\alpha \in (0,\infty )\), then \({\widehat{\mu }}(x)=e^{-\pi \alpha ^{-2}|x|^2}\), so that

$$\begin{aligned} -x\cdot \nabla {\widehat{\mu }}(x) = 2\pi \alpha ^{-2}|x|^2 e^{-\pi \alpha ^{-2}|x|^2} \end{aligned}$$(1.11)

is nonnegative, and (1.8) is a standard estimate by Bourgain [5, Lemma 3.28]. In fact, Bourgain formulated (1.8) for one-dimensional Schwartz averaging functions in [5], but the proof carries over to higher dimensions. Alternatively, one can invoke more general results from the subsequent literature, such as the work of Jones, Seeger, and Wright [9], which covered higher-dimensional convolutions, more general dilation structures, and both strong and weak-type variational estimates in a range of \(L ^p\)-spaces.
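The computation behind (1.11) can be checked numerically. The sketch below assumes the normalization of the Fourier transform fixed in Section 1.1, under which the Gaussian density \(\alpha ^3e^{-\pi \alpha ^2|x|^2}\) has Fourier transform \(e^{-\pi \alpha ^{-2}|x|^2}\); it approximates \(-x\cdot \nabla {\widehat{\mu }}(x)\) by central differences and compares the result with \(\psi (x/\alpha )\), where \(\psi (x)=2\pi |x|^2e^{-\pi |x|^2}\). The helper names and the step size are our own illustrative choices.

```python
import math


def mu_hat(x, alpha):
    """Fourier transform of the density alpha**3 * exp(-pi * alpha**2 * |x|**2)."""
    r2 = sum(c * c for c in x)
    return math.exp(-math.pi * r2 / alpha ** 2)


def theta_numeric(x, alpha, h=1e-6):
    """Approximate -x . grad(mu_hat)(x) with central differences of step h."""
    total = 0.0
    for k in range(3):
        xp, xm = list(x), list(x)
        xp[k] += h
        xm[k] -= h
        total += x[k] * (mu_hat(xp, alpha) - mu_hat(xm, alpha)) / (2 * h)
    return -total


def psi(x):
    """psi(x) = 2 pi |x|^2 exp(-pi |x|^2)."""
    r2 = sum(c * c for c in x)
    return 2 * math.pi * r2 * math.exp(-math.pi * r2)


alpha = 1.7
for x in [(0.3, -0.2, 0.5), (1.0, 2.0, -0.5), (0.0, 0.0, 0.0)]:
    approx = theta_numeric(x, alpha)
    exact = psi([c / alpha for c in x])
    print(f"{approx:.8f}  {exact:.8f}")  # should agree up to finite-difference error; both >= 0
```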

Theorem 1 under condition (b) was covered by the paper [10], up to minor technicalities, such as the fact that here we do not need \({\widehat{\mu }}\) to be smoother than \(C ^2\). However, [10] was concerned with more general surfaces and more general measures \(\sigma \) on them, while here we are able to give a more direct proof that is specific to the sphere and to the stated choice of the Lebesgue space exponents. In fact, as we have already noted, the present proof predates [10].

Condition (c) above is somewhat artificial and difficult to verify, but we include it since the proof that it implies (1.10) is the most straightforward of the three.

Maximal restriction estimates have found a nice application in the very recent work of Bilz [4], who used them to show that there exists a compact set \(E\subset {\mathbb {R}}^3\) of full Hausdorff dimension that does not allow any nontrivial a priori Fourier restriction estimates for any nontrivial Borel measure on E. We do not discuss the details here, but rather refer the interested reader to [4].

1.1 Notation

If \(A,B:X\rightarrow [0,\infty )\) are two functions (or functional expressions) such that, for each \(x\in X\), \(A(x)\le CB(x)\) for some unimportant constant \(0\le C<\infty \), then we write \(A(x)\lesssim B(x)\) or \(A(x) = O(B(x))\). If the constant C depends on a set of parameters P, we emphasize it notationally by writing \(A(x)\lesssim _P B(x)\) or \(A(x) = O_P(B(x))\).

We write a variable in the subscript of the letter denoting a function space whenever we need to emphasize with respect to which variable the corresponding (semi)norm is taken. For instance, \(\Vert g(\omega )\Vert _{L ^p_\omega }\) can be written in place of \(\Vert g\Vert _{L ^p}\), while \(\Vert g(\varepsilon )\Vert _{V ^\varrho _\varepsilon }\) can be written in place of \(\Vert g\Vert _{V ^\varrho }\), whenever the variables \(\omega ,\varepsilon \) need to be written explicitly.

The Fourier transform of a function \(f\in L ^1({\mathbb {R}}^3)\) is normalized as

$$\begin{aligned} {\widehat{f}}(y):=\int _{{\mathbb {R}}^3}f(x)\,e^{-2\pi i x\cdot y}\,d x \end{aligned}$$

for each \(y\in {\mathbb {R}}^3\). Here \(x\cdot y\) denotes the standard scalar product of vectors \(x,y\in {\mathbb {R}}^3\), and integration is performed with respect to the Lebesgue measure. The map \(f\mapsto {\widehat{f}}\) is then extended, as usual, by continuity to bounded linear operators \(L ^p({\mathbb {R}}^3)\rightarrow L ^{p'}({\mathbb {R}}^3)\) for each \(p\in (1,2]\) and \(p'=p/(p-1)\).
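As a sanity check of this normalization, one can verify numerically that the Gaussian \(e^{-\pi |x|^2}\) is its own Fourier transform; by the product structure of both the Gaussian and the exponential \(e^{-2\pi i x\cdot y}\), it suffices to do this in one dimension, and the dilated Gaussians used later follow by a change of variables. The sketch below is our own illustration; it uses a plain Riemann sum on a truncated interval, which is very accurate for smooth, rapidly decaying integrands such as this one.

```python
import cmath
import math


def fourier_1d(f, s, half_width=8.0, n=16001):
    """Riemann-sum approximation of the integral of f(t) exp(-2 pi i t s) over [-half_width, half_width]."""
    dt = 2 * half_width / (n - 1)
    total = 0j
    for k in range(n):
        t = -half_width + k * dt
        total += f(t) * cmath.exp(-2j * math.pi * t * s)
    return total * dt


def gaussian(t):
    return math.exp(-math.pi * t * t)


for s in (0.0, 0.5, 1.3):
    value = fourier_1d(gaussian, s)
    # real part should match exp(-pi s^2); imaginary part should be ~0 by symmetry
    print(s, round(value.real, 10), round(value.imag, 10), round(gaussian(s), 10))
```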

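More generally, the Fourier transform of a complex measure \(\mu \) is the function \({\widehat{\mu }}\) defined as

$$\begin{aligned} {\widehat{\mu }}(y):=\int _{{\mathbb {R}}^3}e^{-2\pi i x\cdot y}\,d \mu (x) \end{aligned}$$

for each \(y\in {\mathbb {R}}^3\).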

The set of complex-valued Schwartz functions on \({\mathbb {R}}^3\) will be denoted by \({\mathcal {S}}({\mathbb {R}}^3)\).

The remainder of this paper is devoted to the proof of Theorem 1.

2 Proof of Theorem 1

We need to establish (1.10) assuming any one of the three conditions from the statement of Theorem 1. Let us start with condition (a).

Proof of Theorem 1 assuming condition (a)

Start by observing that

that \({\widehat{f}}*\mu = \widehat{f{\widehat{\mu }}}\), and that the ordinary restriction estimate (1.1) applies to \(f{\widehat{\mu }}\) and yields

Thus, inequality (1.10) reduces to two applications of

which we proceed to establish. The desired estimate (2.1) unfolds as

The numbers \(\varepsilon _j\) in the above supremum can be restricted to a fixed interval \([\varepsilon _{\min },\varepsilon _{\max }]\) with \(0<\varepsilon _{\min }<\varepsilon _{\max }\), but the estimate needs to be established with a constant independent of \(\varepsilon _{\min }\) and \(\varepsilon _{\max }\). Afterwards, one simply applies the monotone convergence theorem, letting \(\varepsilon _{\min }\rightarrow 0^+\) and \(\varepsilon _{\max }\rightarrow \infty \). Moreover, adjoining \(\varepsilon _{\min }\) and \(\varepsilon _{\max }\) as new endpoints of a partition only adds terms to the sum and thus can only increase the left-hand side of (2.2); hence we may also assume that \(\varepsilon _0=\varepsilon _{\min }\) and \(\varepsilon _m=\varepsilon _{\max }\).

Next, by continuity, one may further restrict attention to rational numbers in the interval \([\varepsilon _{\min },\varepsilon _{\max }]\), and, by yet another application of the monotone convergence theorem, one may consider only finitely many values in that interval. In this way, no generality is lost in assuming that the supremum in (2.2) is achieved for some \(m\in {\mathbb {N}}\) and for some measurable functions \(\varepsilon _k:{\mathbb {S}}^2\rightarrow [\varepsilon _{\min },\varepsilon _{\max }]\), \(k\in \{0,1,\ldots ,m\}\), such that \(\varepsilon _0(\omega )\le \varepsilon _1(\omega )\le \cdots \le \varepsilon _m(\omega )\), \(\varepsilon _0(\omega )\equiv \varepsilon _{\min }\), and \(\varepsilon _m(\omega )\equiv \varepsilon _{\max }\). Estimate (2.2) then becomes

Once again, the implicit constant needs to be independent of m and the functions \(\varepsilon _k\). The reduction we just performed is an instance of the Kolmogorov–Seliverstov–Plessner linearization method used in [13].
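A toy, fully discrete version of this linearization may be helpful. In the Python sketch below (our own illustration; all names and data are hypothetical), \(\varepsilon \) ranges over a finite grid from \(\varepsilon _{\min }\) to \(\varepsilon _{\max }\), the sphere is replaced by finitely many labelled points \(\omega \), and for each \(\omega \) an optimal partition is chosen by dynamic programming, with the first and last grid points forced into it. All partitions are then padded to a common length \(m+1\) by repeating \(\varepsilon _{\min }\); repeated points contribute zero to the variation sum, which is one way to see that nondecreasing (rather than strictly increasing) functions \(\varepsilon _k\) are enough.

```python
def optimal_partition(values, rho):
    """Return (indices, value): indices 0 = k_0 < ... < k_m = len(values) - 1 maximizing
    sum_j |values[k_j] - values[k_{j-1}]|**rho, together with that maximum."""
    n = len(values)
    best = [float("-inf")] * n  # best[i]: largest sum over chains from index 0 to index i
    prev = [None] * n           # prev[i]: predecessor of i in an optimal chain
    best[0] = 0.0
    for i in range(1, n):
        for j in range(i):
            candidate = best[j] + abs(values[i] - values[j]) ** rho
            if candidate > best[i]:
                best[i], prev[i] = candidate, j
    chain = [n - 1]
    while prev[chain[-1]] is not None:
        chain.append(prev[chain[-1]])
    return chain[::-1], best[-1]


def linearize(samples, grid, rho):
    """Pick an optimal partition of `grid` for every sample point omega and pad all
    partitions to a common length m + 1 by repeating eps_min = grid[0]."""
    chosen = {omega: optimal_partition(values, rho)[0] for omega, values in samples.items()}
    m = max(len(idx) for idx in chosen.values()) - 1
    eps = {}
    for omega, idx in chosen.items():
        padded = [idx[0]] * (m + 1 - len(idx)) + idx  # repeated points add zero terms
        eps[omega] = [grid[k] for k in padded]        # nondecreasing, from grid[0] to grid[-1]
    return m, eps


grid = [0.5, 1.0, 1.5, 2.0]             # hypothetical finite set of epsilon values
samples = {"w1": [0.0, 1.0, 0.0, 1.0],  # hypothetical values a(eps) at two points omega
           "w2": [0.0, 0.0, 0.0, 2.0]}
m, eps = linearize(samples, grid, rho=2)
print(m, eps)  # 3 {'w1': [0.5, 1.0, 1.5, 2.0], 'w2': [0.5, 0.5, 0.5, 2.0]}
```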

Dualizing the mixed \(L _\omega ^2(\ell _j^\varrho )\)-norm, see [3], we turn the linearized estimate above into

where the bilinear form \(\Lambda \) is defined via

Here, \(\varrho '=\varrho /(\varrho -1)\) denotes the exponent conjugate to \(\varrho \) as usual, and \(g_j:{\mathbb {S}}^2\rightarrow {\mathbb {C}}\) are arbitrary measurable functions, \(j\in \{1,2,\ldots ,m\}\), gathered in a single vector-valued function \({\mathbf {g}}=(g_j)_{j=1}^{m}\). By elementary properties of the Fourier transform, \(\Lambda \) can be rewritten as

where \({\mathcal {E}}\) is a certain extension-type operator given by

By Hölder’s inequality, (2.3) is in turn equivalent to

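If we denote

$$\begin{aligned} \vartheta (x):=-x\cdot \nabla {\widehat{\mu }}(x), \end{aligned}$$(2.6)

then we also have

$$\begin{aligned} \frac{d }{d t}\,{\widehat{\mu }}(tx) = x\cdot (\nabla {\widehat{\mu }})(tx) = -\frac{\vartheta (tx)}{t}, \quad t>0, \end{aligned}$$

for any \(x\in {\mathbb {R}}^3\), which in turn implies

$$\begin{aligned} {\widehat{\mu }}(ax)-{\widehat{\mu }}(bx) = \int _a^b \vartheta (tx)\,\frac{d t}{t} \end{aligned}$$(2.7)

for any \(0<a<b\). Substituting this into the definition of \({\mathcal {E}}\) yields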

where, for each \(t\in [\varepsilon _{\min },\varepsilon _{\max })\) and each \(\omega \in {\mathbb {S}}^2\), we denote by \(j(t,\omega )\) the unique index \(j\in \{1,2,\ldots ,m\}\) such that \(t\in [\varepsilon _{j-1}(\omega ),\varepsilon _{j}(\omega ))\). Since \(\vartheta (x)\ge 0\), by the standing assumption in (a), we can apply the Cauchy–Schwarz inequality in the variable t to estimate

where

and

By (2.7),

From (2.8) and (2.10), we see that (2.5) will be established once we prove

Expanding out the square in the definition of \({\mathcal {B}}({\mathbf {g}})(x)\) yields

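For fixed \(\omega ,\omega '\in {\mathbb {S}}^2\), consider

$$\begin{aligned} J(\omega ,\omega ') := \big \{(j,j')\in \{1,\ldots ,m\}^2 : [\varepsilon _{j-1}(\omega ),\varepsilon _{j}(\omega ))\cap [\varepsilon _{j'-1}(\omega '),\varepsilon _{j'}(\omega '))\ne \emptyset \big \}. \end{aligned}$$

The intersection of two half-open intervals is either the empty set or again a half-open interval. Hence, for each pair \((j,j')\in J(\omega ,\omega ')\), there exist unique real numbers \(a(j,j',\omega ,\omega ')<b(j,j',\omega ,\omega ')\) such that

$$\begin{aligned} [\varepsilon _{j-1}(\omega ),\varepsilon _{j}(\omega ))\cap [\varepsilon _{j'-1}(\omega '),\varepsilon _{j'}(\omega ')) = [a(j,j',\omega ,\omega '),b(j,j',\omega ,\omega ')). \end{aligned}$$(2.13)

Clearly, the intervals (2.13) constitute a finite partition of \([\varepsilon _{\min },\varepsilon _{\max })\). Using (2.7), we can rewrite (2.12) as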

Taking \(h\in {\mathcal {S}}({\mathbb {R}}^3)\) and dualizing with \({\widehat{h}}\) leads to the form

By Plancherel’s identity, we have

so (2.11) will follow from

which we proceed to establish.
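The partition property behind (2.13) is elementary, but it is easy to check on an example: intersecting each interval \([\varepsilon _{j-1}(\omega ),\varepsilon _j(\omega ))\) with each interval \([\varepsilon _{j'-1}(\omega '),\varepsilon _{j'}(\omega '))\) and keeping the nonempty intersections yields a finite partition of \([\varepsilon _{\min },\varepsilon _{\max })\). The Python sketch below is our own illustration with hypothetical breakpoints; note that an empty interval (from a repeated breakpoint) simply produces no pieces.

```python
def half_open_intervals(points):
    """Consecutive intervals [points[j-1], points[j]) for j = 1, ..., m (possibly empty)."""
    return [(points[j - 1], points[j]) for j in range(1, len(points))]


def common_refinement(points, other_points):
    """Nonempty intersections [a, b) of the two families, tagged by the index pair (j, j')."""
    pieces = []
    for j, (a1, b1) in enumerate(half_open_intervals(points), start=1):
        for jp, (a2, b2) in enumerate(half_open_intervals(other_points), start=1):
            a, b = max(a1, a2), min(b1, b2)
            if a < b:  # [a1, b1) and [a2, b2) intersect exactly in [a, b)
                pieces.append(((j, jp), a, b))
    return sorted(pieces, key=lambda piece: piece[1])


# Hypothetical breakpoints eps_j(omega) and eps_j(omega'), both running from 1.0 to 4.0.
pieces = common_refinement([1.0, 1.0, 2.5, 4.0], [1.0, 2.0, 3.0, 4.0])
for (j, jp), a, b in pieces:
    print(j, jp, a, b)
```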

Using basic properties of the Fourier transform, the form \(\Theta \) can be rewritten as

for any Schwartz function h. Applying Hölder’s inequality to the sum in \((j,j')\), and recalling the definition of the \(\varrho \)-variation yields

By the usual Tomas–Stein restriction theorem in the formulation (1.5), applied with g replaced by \(\big (\sum _{j=1}^{m}|g_{j}|^{\varrho '}\big )^{1/\varrho '}\) and with h replaced by \(\Vert h*\mu _\varepsilon \Vert _{\widetilde{V }^\varrho _\varepsilon }\), we obtain

Invoking assumption (1.8) completes the proof of estimate (2.14), and therefore also that of (1.10). \(\square \)
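Identity (2.7), which was the starting point of the above argument, is just the fundamental theorem of calculus applied to \(t\mapsto {\widehat{\mu }}(tx)\). For the Gaussian example from (1.11) it can be confirmed numerically; the Python sketch below is our own illustration, with Simpson’s rule as an arbitrary choice of quadrature and hypothetical values of \(\alpha \), x, a, and b.

```python
import math

ALPHA = 1.3  # hypothetical Gaussian parameter alpha


def mu_hat(x):
    """Fourier transform exp(-pi |x|^2 / alpha^2) of the Gaussian density from (1.11)."""
    return math.exp(-math.pi * sum(c * c for c in x) / ALPHA ** 2)


def theta(x):
    """theta(x) = -x . grad(mu_hat)(x) = 2 pi alpha^-2 |x|^2 exp(-pi alpha^-2 |x|^2)."""
    r2 = sum(c * c for c in x) / ALPHA ** 2
    return 2 * math.pi * r2 * math.exp(-math.pi * r2)


def simpson(g, a, b, n=2000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


x = (0.4, -0.7, 0.2)
a, b = 0.3, 2.5
lhs = mu_hat([a * c for c in x]) - mu_hat([b * c for c in x])
rhs = simpson(lambda t: theta([t * c for c in x]) / t, a, b)
print(lhs, rhs)  # should agree up to quadrature error
```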

Next, we will impose condition (b) and reduce the proof to the previous one by replacing \(\mu \) with a superposition of “nicer” measures.

Proof of Theorem 1 assuming condition (b)

We can repeat the same steps as before, reducing (1.10) to (2.11), where \({\mathcal {B}}\) is as in (2.9) and \(\vartheta \) is defined by (2.6).

We have already noted that (1.8) is satisfied for measures \(\mu \) with Gaussian densities, i.e., when \(d \mu (x)=\alpha ^3 e^{-\pi \alpha ^2|x|^2}\,d x\) for some \(\alpha \in (0,\infty )\), and that in this case (1.11) equals \(\psi (x/\alpha )\), where

$$\begin{aligned} \psi (x):=2\pi |x|^2 e^{-\pi |x|^2}. \end{aligned}$$

Therefore, the previous proof of (2.11) specialized to this measure yields

Also note that the \(L ^1\)-normalization of the above Gaussians guarantees that the left-hand side of (1.8) does not depend on the parameter \(\alpha \). Consequently, the previous proof makes the constant in the bound (2.15) independent of \(\alpha \) as well. In this way, estimate (2.11) for a general measure \(\mu \) satisfying condition (b) will be a consequence of (2.15) and Minkowski’s inequality for integrals, provided that we can dominate \(\vartheta (x)\) pointwise as follows:

for each \(x\in {\mathbb {R}}^3\).
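The independence of \(\alpha \) claimed above can be made explicit as follows (our own short verification). Write \(\gamma \) for the measure with density \(e^{-\pi |x|^2}\); the Gaussian measure with density \(\alpha ^3 e^{-\pi \alpha ^2|x|^2}\) is then the dilate \(\gamma _{1/\alpha }\), dilates compose multiplicatively, and the substitution \(\eta =\varepsilon /\alpha \) maps increasing sequences in \((0,\infty )\) bijectively onto increasing sequences, so that

$$\begin{aligned} (\gamma _{1/\alpha })_{\varepsilon } = \gamma _{\varepsilon /\alpha } \qquad \text {and}\qquad \big \Vert (h*\gamma _{\varepsilon /\alpha })(x)\big \Vert _{\widetilde{V }^{\varrho }_{\varepsilon }} = \big \Vert (h*\gamma _{\eta })(x)\big \Vert _{\widetilde{V }^{\varrho }_{\eta }}. \end{aligned}$$

Hence the left-hand side of (1.8) is the same for every \(\alpha \in (0,\infty )\).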

Denote by \(\Psi (x)\) the right-hand side of (2.16), and observe that \(\Psi (0)=0\) and \(\Psi (x)>0\) for each \(x\ne 0\). By continuity and compactness, it suffices to show that the ratio \(|\vartheta (x)|/\Psi (x)\) remains bounded as \(|x|\rightarrow \infty \) or \(|x|\rightarrow 0^+\).

Substituting \(r=\pi \alpha ^{-2}|x|^2\), we may rewrite \(\Psi \) as

From (2.18), we see that \(\lim _{|x|\rightarrow \infty } \Psi (x)/|x|^{-\delta } \in (0,\infty )\), while decay condition (1.7) gives \(|\vartheta (x)|=O(|x|^{-\delta })\) as \(|x|\rightarrow \infty \).

On the other hand, using Taylor’s formula for the function \(x\mapsto e^{-\pi \alpha ^{-2} |x|^2}\) and substituting into (2.17), we easily obtain

on a neighborhood of the origin. Moreover, \({\widehat{\mu }}\) is \(C ^2\) and even since \(\mu \) is even, and so we have that \((\nabla {\widehat{\mu }})(0)=0\). Taylor’s formula then yields

on a neighborhood of the origin. It follows that \(|\vartheta (x)|/\Psi (x) = O_{\mu ,\delta }(1)\) for sufficiently small nonzero |x|, and this completes the proof of (2.16). \(\square \)

The Gaussian domination trick which we have just used can be attributed to Stein, see [18, Chapter V, §3.1]. It was generalized and used in a slightly different context by Durcik [8].

Proof of Theorem 1 assuming condition (c)

We can repeat the same steps as before that reduce (1.10) to (2.5). This time we define the form \(\Theta \) differently via

where \({\mathbf {g}}\) is as before and \(h\in {\mathcal {S}}({\mathbb {R}}^3)\). Again, by duality, we only need to establish (2.14).

Squaring out (2.4) and inserting that into the above definition of \(\Theta \), we obtain

i.e.,

Applying Hölder’s inequality to the sum in (j, k), and recalling the definition of the biparameter \(\varrho \)-variation seminorm yields

Using (1.5) we obtain

and it remains to invoke the assumption (1.9). This proves (2.14) and, thus, also completes the proof of the theorem assuming condition (c). \(\square \)

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover Publications Inc, New York (1992)

Akcoglu, M.A., Jones, R.L., Schwartz, P.O.: Variation in probability, ergodic theory and analysis. Illinois J. Math. 42(1), 154–177 (1998)

Benedek, A., Panzone, R.: The space \(L^p\), with mixed norm. Duke Math. J. 28, 301–324 (1961)

Bilz, C.: Large sets without Fourier restriction theorems. arXiv:2001.10016 (2020)

Bourgain, J.: Pointwise ergodic theorems for arithmetic sets. With an appendix by the author, H. Furstenberg, Y. Katznelson, and D. S. Ornstein. Inst. Hautes Études Sci. Publ. Math. 69, 5–45 (1989)

Bourgain, J., Mirek, M., Stein, E.M., Wróbel, B.: On dimension-free variational inequalities for averaging operators in \({\mathbb{R}}^d\). Geom. Funct. Anal. 28(1), 58–99 (2018)

Campbell, J.T., Jones, R.L., Reinhold, K., Wierdl, M.: Oscillation and variation for the Hilbert transform. Duke Math. J. 105(1), 59–83 (2000)

Durcik, P.: An \(L^4\) estimate for a singular entangled quadrilinear form. Math. Res. Lett. 22(5), 1317–1332 (2015)

Jones, R.L., Seeger, A., Wright, J.: Strong variational and jump inequalities in harmonic analysis. Trans. Amer. Math. Soc. 360(12), 6711–6742 (2008)

Kovač, V.: Fourier restriction implies maximal and variational Fourier restriction. J. Funct. Anal. 277(10), 3355–3372 (2019)

Lépingle, D.: La variation d’ordre \(p\) des semi-martingales. Z. Wahrscheinlichkeitstheorie und Verw. Gebiete 36(4), 295–316 (1976)

Mirek, M., Stein, E.M., Zorin-Kranich, P.: A bootstrapping approach to jump inequalities and their applications. Anal. PDE 13(2), 527–558 (2020)

Müller, D., Ricci, F., Wright, J.: A maximal restriction theorem and Lebesgue points of functions in \({\cal{F}}(L^p)\). Rev. Mat. Iberoam. 35(3), 693–702 (2019)

Ramos, J.P.G.: Low-dimensional maximal restriction principles for the Fourier transform. arXiv:1904.10858 (2019)

Ramos, J.P.G.: Maximal restriction estimates and the maximal function of the Fourier transform. Proc. Amer. Math. Soc. 148(3), 1131–1138 (2020)

Rubio de Francia, J.L.: Maximal functions and Fourier transforms. Duke Math. J. 53(2), 395–404 (1986)

Stein, E.M.: Harmonic Analysis: Real-Variable Methods, Orthogonality, and Oscillatory Integrals. Princeton Math. Ser., vol. 43. Princeton Univ. Press, Princeton (1993)

Stein, E.M.: Singular Integrals and Differentiability Properties of Functions. Princeton Math. Ser., vol. 30. Princeton Univ. Press, Princeton (1970)

Tomas, P.: A restriction theorem for the Fourier transform. Bull. Amer. Math. Soc. 81(2), 477–478 (1975)

Vitturi, M.: A note on maximal Fourier restriction for spheres in all dimensions. arXiv:1703.09495 (2017)

Acknowledgements

The authors are grateful to Polona Durcik and Jim Wright for useful discussions surrounding the problem, and to the anonymous referee for a careful reading of the manuscript and valuable suggestions. V.K. was supported in part by the Croatian Science Foundation under the project UIP-2017-05-4129 (MUNHANAP). He also acknowledges partial support of the DAAD–MZO bilateral grant Multilinear singular integrals and applications and that of the Fulbright Scholar Program. D.O.S. acknowledges support by the EPSRC New Investigator Award Sharp Fourier Restriction Theory, grant no. EP/T001364/1, and the College Early Career Travel Fund of the University of Birmingham. This work was started during a pleasant visit of the second author to the University of Zagreb, whose hospitality is greatly appreciated.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kovač, V., Oliveira e Silva, D. A variational restriction theorem. Arch. Math. 117, 65–78 (2021). https://doi.org/10.1007/s00013-021-01604-1
