Abstract

Breast cancer is one of the major causes of death in women. Computer Aided Diagnosis (CAD) systems are being developed to assist radiologists in early diagnosis. Micro-calcifications can be an early symptom of breast cancer. Besides detection, classification of micro-calcifications as benign or malignant is essential in a complete CAD system. We have developed a novel method for the classification of benign and malignant micro-calcifications using an improved Fisher Linear Discriminant Analysis (LDA) approach for the linear transformation of segmented micro-calcification data, in combination with a Support Vector Machine (SVM) variant to classify between the two classes. The results indicate an average accuracy of 96%, which is comparable to state-of-the-art methods in the literature.

Classification of Micro-calcification in Mammograms using Scalable Linear Fisher Discriminant Analysis

1 Background

Machine learning is widely used to solve problems involving high-dimensional data. In many cases, the dimension of the data is much larger than the sample size, which is referred to as the undersampling problem [1]. High dimensionality and undersampling occur in many applications [2, 3]. One solution to the undersampling problem is dimensionality reduction [3, 4].

Principal component analysis (PCA) is a procedure to convert a number of correlated variables into fewer variables called principal components [5], and is commonly used in the fields of pattern recognition and computer vision [6, 7]. The purpose of PCA is to transform data to a low-dimensional space, after which a classification method can be applied. Fisher Linear Discriminant Analysis (LDA) has been around for a long time, with applications in face recognition [3, 8], marketing [9], and biomedical studies [10]. LDA is a classical approach used for feature extraction and dimensionality reduction [11, 12]. The objective of conventional LDA is to find a linear transformation that maximizes the class separation while keeping the in-class variance small [5]. One of the major problems associated with LDA is the singularity issue [13]: LDA requires the scatter matrices of the training data to be non-singular, but the training samples come from a high-dimensional space and in most cases the sample size is smaller than this dimension, which makes the scatter matrices rank-deficient and therefore singular. Many LDA extensions have been proposed to resolve this singularity issue. PCA + LDA [3] and LDA/QR [4] are two such two-stage extensions. The purpose of the two-stage approach is to convert the data into an intermediate form before applying the actual LDA. In these two-stage algorithms, information that would be beneficial for the subsequent LDA may be lost in the first dimensionality reduction stage [14].
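The singularity issue can be reproduced on synthetic data. The sketch below is illustrative only: the sizes `n` and `m` and the random data are assumptions, not values from this study. It shows that the total scatter matrix has rank at most n − 1, so it cannot be inverted when the sample size is smaller than the dimension.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 5, 20                      # hypothetical: 5 samples, 20 features (n < m)
D = rng.normal(size=(n, m))       # rows are samples

c = D.mean(axis=0)                # global mean
centred = D - c
S_t = centred.T @ centred / n     # total scatter matrix, shape (m, m)

rank = np.linalg.matrix_rank(S_t)
print(f"S_t is {m}x{m} with rank {rank}")   # the rank cannot exceed n - 1
```

Because centring removes one degree of freedom, at most n − 1 directions carry variance, regardless of how large m is.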

Zhang et al. [14] proposed a fast two-stage LDA algorithm as an alternative to the PCA+LDA or LDA/QR solutions. They showed through theoretical analysis that their algorithm outperforms the other two-stage algorithms [3, 4] with the same scalability. They also provided a theoretical bound on the approximation error of two-stage LDA. We propose a novel application of the scalable-LDA approach [14] for the classification of breast calcifications. To our knowledge, this method of feature extraction through dimensionality reduction has never been used for the problem of classifying micro-calcifications. The proposed method provides a way of encoding binary calcification data as a single value, which is a one-dimensional representation of the high-dimensional micro-calcification data. Instead of using a large number of features, the proposed classification approach uses only a single feature to distinguish between benign and malignant micro-calcifications. In terms of classification accuracy, the algorithm gives good results compared to other state-of-the-art approaches developed for the classification of malignant and benign micro-calcifications (an overview of these approaches, as well as a comparison with the current method, can be found in Section 6.1).

2 Dataset

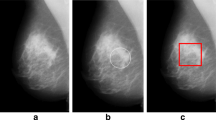

We used data from the Digital Database for Screening Mammography (DDSM) [15], which contains identified micro-calcifications. The mammograms in the DDSM database were digitized by one of four scanners: DBA M2100 ImageClear (42 μm per pixel, 16 bits), Howtek 960 (43.5 μm per pixel, 12 bits), Lumisys 200 Laser (50 μm per pixel, 12 bits), and Howtek MultiRad850 (43.5 μm per pixel, 12 bits). Patches containing micro-calcifications were extracted from the whole mammograms according to the available information on the position of micro-calcification clusters. In addition, we excluded cases with overlapping mass regions. Subsequently, the micro-calcification clusters were either automatically detected [16] or manually annotated by expert radiologists. The automatic approach for segmenting micro-calcification clusters [16] involves local feature extraction using filter banks (including delta, Gaussian and Laplacian filters). After training to select salient features, a boosting classifier is used to detect individual micro-calcifications. The segmented images represent the micro-calcifications in binary form, where 0's indicate the absence and 1's the presence of micro-calcifications. The images in this dataset are of variable sizes. The average image size is 482 × 450, whereas the maximum height over all images in the dataset is 2754 and the maximum width is 3778. The dataset contains 288 RoIs in total (139 malignant and 149 benign). Each of the 288 RoIs belongs to a different woman. Some sample variations in the size of the RoIs used in this study can be seen in Figs. 1 and 2, covering the benign and malignant classes, respectively.

3 Fisher discriminant analysis

Given a data matrix \(D=[d_{1}, d_{2}, \ldots, d_{n}]^{T} \in \mathbb {R}^{n\times m}\), where \(d_{i}\) is an m-dimensional vector, let the data matrix D be partitioned into k classes as \(D^{T} = [D_{1}^{T}, D_{2}^{T}, \ldots, D_{k}^{T}]\), where \(D_{i} \in \mathbb {R}^{n_{i}\times m}\) and \({\sum }_{i = 1}^{k} n_{i} = n\). The objective of conventional LDA is to compute the optimal linear transformation \(G \in \mathbb {R}^{m\times l}\) such that the class structure of the original space is preserved in the low-dimensional space. So, G maps each \(d_{i}\) of D in the m-dimensional space to a vector \(y_{i}\) in the l-dimensional space.

For discriminant analysis [12], two scatter matrices (between class and total scatter matrices) are defined as:
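In the standard formulation used by Ye [19], which is assumed here to match the notation above (including the \(1/n\) scaling), these matrices read:

\[
S_{b} = \frac{1}{n}\sum_{i=1}^{k} n_{i}\,(c_{i}-c)(c_{i}-c)^{T}, \qquad
S_{t} = \frac{1}{n}\sum_{j=1}^{n} (d_{j}-c)(d_{j}-c)^{T}
\]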

where \(c_{i} = \frac {1}{n_{i}} {\sum }_{d \in D_{i}} d \) is the mean of the ith class and \(c=\frac {1}{n} {\sum }_{d \in D} d \) is the mean of the whole data set. In the low-dimensional space, obtained as a result of linear transformation G, the scatter matrices become:
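Writing the low-dimensional scatter matrices with a superscript L (a notational assumption), this is:

\[
S_{b}^{L} = G^{T} S_{b}\, G, \qquad S_{t}^{L} = G^{T} S_{t}\, G
\]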

The calculation of the scatter matrices can be simplified by using the precursors \(H_{b}\) and \(H_{t}\):
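A common choice of precursors [19], assumed here and consistent with the dimensions reported in Section 4.3 (\(H_{b}\) has one column per class, \(H_{t}\) one column per sample), is:

\[
H_{b} = \frac{1}{\sqrt{n}}\left[\sqrt{n_{1}}\,(c_{1}-c), \ldots, \sqrt{n_{k}}\,(c_{k}-c)\right] \in \mathbb{R}^{m\times k}, \qquad
H_{t} = \frac{1}{\sqrt{n}}\left(D^{T} - c\,e^{T}\right) \in \mathbb{R}^{m\times n}
\]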

where \(e = [1,\ldots,1]^{T} \in \mathbb {R}^{n}\). The scatter matrices \(S_{b}\) and \(S_{t}\) can then be expressed as:
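For precursors scaled by \(1/\sqrt{n}\) (an assumption about the normalization), the factorization is:

\[
S_{b} = H_{b} H_{b}^{T}, \qquad S_{t} = H_{t} H_{t}^{T}
\]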

An optimal transformation G can be obtained by using the optimization from classical discriminant analysis [12]:
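The classical criterion is usually written in trace form; the exact variant used in [12] is assumed here to be:

\[
G = \arg\max_{G}\ \operatorname{trace}\left( \left(G^{T} S_{t}\, G\right)^{-1} G^{T} S_{b}\, G \right)
\]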

The solution to this optimization can be obtained by applying eigen-decomposition to the matrix \(S_{t}^{-1}S_{b}\), if \(S_{t}\) is non-singular [12]. However, if \(S_{t}\) is singular, we can use the eigen-decomposition of \(S_{t}^{\dagger}S_{b}\), where \(S_{t}^{\dagger}\) is the pseudo-inverse of \(S_{t}\). The use of the pseudo-inverse for LDA has been studied in the literature [17, 18]. Ye [19] proposed an SVD-based solution for the eigen-decomposition (Algorithm 1), according to which the optimal transformation consists of the top q eigenvectors of \(S_{t}^{\dagger}S_{b}\), where q is equal to rank(\(H_{b}\)), which in most cases is equal to k − 1 (k is the number of classes). Zhang et al. [14] proposed a two-stage LDA algorithm as a scalable version of the conventional LDA. At the first stage of their algorithm, they introduced a linear transformation \(Z \in \mathbb {R}^{m \times r}\) to reduce the data dimensionality to some intermediate dimension r, and then applied conventional LDA on the reduced total scatter matrix \(\tilde {S_{t}}=Z^{T} S_{t} Z\) and reduced between-class scatter matrix \(\tilde {S_{b}} = Z^{T} S_{b} Z\) (by representing them through the precursors \(\tilde {H_{b}}\) and \(\tilde {H_{t}}\)) in order to get the linear transformation \(\tilde {G}\). In the final stage they produced the reduced transformation as \(\hat {G} = Z \tilde {G}\). The pseudocode of this approach is presented in Algorithm 2.
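One way to realize an SVD-based solution of this kind is sketched below. It is an illustration under assumptions, not a transcription of Algorithm 1: the function name `lda_from_precursors`, the tolerance `tol`, the \(1/\sqrt{n}\) scaling of the precursors and the toy data are all hypothetical. The sketch returns the top q eigenvectors of \(S_{t}^{\dagger}S_{b}\) without ever forming the m × m scatter matrices.

```python
import numpy as np

def lda_from_precursors(H_b, H_t, q, tol=1e-10):
    """Top-q eigenvectors of pinv(S_t) @ S_b, where S_b = H_b @ H_b.T
    and S_t = H_t @ H_t.T, computed from two thin SVDs."""
    # Thin SVD of H_t; drop directions with negligible singular values.
    U, s, _ = np.linalg.svd(H_t, full_matrices=False)
    keep = s > tol * s.max()
    U, s = U[:, keep], s[keep]

    # Whiten H_b with respect to the total scatter: B = diag(1/s) U^T H_b.
    B = (U.T @ H_b) / s[:, None]

    # Left singular vectors of B are eigenvectors of B B^T.
    P, _, _ = np.linalg.svd(B, full_matrices=False)

    # Map back to the original space: G = U diag(1/s) P_q.
    return (U / s) @ P[:, :q]

# Toy two-class data (hypothetical sizes).
rng = np.random.default_rng(0)
n1, n2, m = 6, 5, 30
D = np.vstack([rng.normal(0.0, 1.0, (n1, m)), rng.normal(1.0, 1.0, (n2, m))])
n = n1 + n2
c = D.mean(axis=0)
c1, c2 = D[:n1].mean(axis=0), D[n1:].mean(axis=0)
H_b = np.column_stack([np.sqrt(n1) * (c1 - c), np.sqrt(n2) * (c2 - c)]) / np.sqrt(n)
H_t = (D - c).T / np.sqrt(n)

G = lda_from_precursors(H_b, H_t, q=1)
print(G.shape)
```

Working on the precursors keeps the cost tied to the number of samples and classes rather than to m, which is what makes the approach usable when m is in the millions.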

4 Scalable LDA

In this section, we will explain the overall experimental setup for the Scalable-LDA approach implementation on the given dataset of segmented micro-calcifications with the aim of classifying them as benign or malignant micro-calcifications. The flow of the whole process can be seen in Fig. 3.

4.1 Resizing the RoIs

As explained in Section 2, we have 288 RoIs from the DDSM database. The basic purpose of this research was to devise a way to distinguish between the two classes of micro-calcification (i.e., benign versus malignant). Algorithm 2 requires all images to be of equal size. We therefore resized all the images to max-height × max-width, so that no information is lost from any image within the dataset. We did this resizing by retaining the original image data I(x,y) at the center and filling the extra (max-height − \(I_{x}\)) rows and (max-width − \(I_{y}\)) columns with the value 0. The purpose of using the proposed 0-padding technique instead of another resizing approach (e.g. interpolation) is to retain the original data. Resizing through 0-padding keeps the data at the centre of the image frame without adding bits to the original data or removing image information. After resizing, all the images in the dataset have a size of 2754 × 3778.
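The padding step can be sketched as follows. The function name `pad_to_centre` and the toy array are hypothetical, and the handling of an odd number of spare rows or columns (the extra one goes to the bottom or right) is an assumption, since the text does not specify it.

```python
import numpy as np

def pad_to_centre(image, target_height, target_width):
    """Place a 2-D binary image at the centre of a zero-filled canvas."""
    h, w = image.shape
    if h > target_height or w > target_width:
        raise ValueError("image is larger than the target canvas")
    top = (target_height - h) // 2
    left = (target_width - w) // 2
    canvas = np.zeros((target_height, target_width), dtype=image.dtype)
    canvas[top:top + h, left:left + w] = image   # original pixels copied unchanged
    return canvas

roi = np.array([[1, 0, 1],
                [0, 1, 0]], dtype=np.uint8)
padded = pad_to_centre(roi, 4, 7)   # toy canvas; the study used 2754 x 3778
```

Unlike interpolation, this leaves every original pixel value untouched, so the number of 1's in the image is preserved.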

4.2 Getting the intermediate dimensions [two-stage LDA]

Subsequently, we vectorize each image by converting it from 2754 × 3778 to 10404612 × 1 dimensions. In order to apply Fisher LDA to a dataset with this large dimensionality, we need to reduce the dimensionality of the data. As explained in Section 2, we have 149 images from the benign class and 139 from the malignant class, and hence 149 and 139 feature vectors from the benign and malignant classes, respectively. Here, \(D_{benign}\) denotes the data belonging to the benign class and \(D_{malignant}\) the data from the malignant class, which are:
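With the benign vectors indexed first (an assumed ordering that matches the stacking of the data matrix described next), the two class matrices can be written as:

\[
D_{benign} = [d_{1}, \ldots, d_{149}]^{T} \in \mathbb{R}^{149 \times 10404612}, \qquad
D_{malignant} = [d_{150}, \ldots, d_{288}]^{T} \in \mathbb{R}^{139 \times 10404612}
\]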

The total dataset is then represented as a data matrix \(D^{T} = [D_{benign}^{T}, D_{malignant}^{T}]\), where the size of \(D_{benign}\) is 149 × 10404612 and that of \(D_{malignant}\) is 139 × 10404612, according to the number of samples in each class. The size of the final data matrix D is 288 × 10404612 (containing data vectors from both the benign and malignant classes). As the sample size is much smaller than the dimensionality of the feature space, we cannot apply conventional LDA to solve this problem. To execute Algorithm 2, we need to compute the precursors \(H_{b}\) and \(H_{t}\) (Eqs. 3 and 4), which requires the means of the two classes. These precursors are required by Algorithms 1 and 2 to obtain the intermediate and final linear transformations.

We compute the means for the datasets \(D_{benign}\), \(D_{malignant}\) and D as:
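Following the class-mean and global-mean definitions of Section 3, and using the class sizes given above, these are:

\[
c_{benign} = \frac{1}{149}\sum_{d \in D_{benign}} d, \qquad
c_{malignant} = \frac{1}{139}\sum_{d \in D_{malignant}} d, \qquad
c_{total} = \frac{1}{288}\sum_{d \in D} d
\]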

By using the means \(c_{benign}\), \(c_{malignant}\) and \(c_{total}\), we compute the precursors through Eqs. 1 and 2. We run Algorithm 2 with r = 23 for the first stage (details of finding the optimal value of r can be found in Section 5). For Algorithm 2 to perform well, r should satisfy r ≫ q [14], and a value of 23 is much larger than q (q = 1 for our data, as q = number of classes − 1). After executing steps 1–4, we obtained the linear transformation \(Z \in \mathbb {R}^{10404612 \times 23}\), which reduces the dimensionality to 23.
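A minimal sketch of the precursor computation from class means is given below. The function name `precursors`, the \(1/\sqrt{n}\) scaling and the toy data are assumptions made for illustration; the real data matrix is 288 × 10404612.

```python
import numpy as np

def precursors(D, labels):
    """Build H_b (m x k) and H_t (m x n) from an n x m data matrix."""
    n = D.shape[0]
    c_total = D.mean(axis=0)
    columns = []
    for cls in np.unique(labels):
        members = D[labels == cls]
        c_cls = members.mean(axis=0)
        # One column per class: sqrt(n_i) * (class mean - global mean).
        columns.append(np.sqrt(len(members)) * (c_cls - c_total))
    H_b = np.column_stack(columns) / np.sqrt(n)
    H_t = (D - c_total).T / np.sqrt(n)
    return H_b, H_t

rng = np.random.default_rng(1)
D = rng.integers(0, 2, size=(8, 50)).astype(float)   # toy binary vectors
labels = np.array([0, 0, 0, 0, 0, 1, 1, 1])          # 0 = benign, 1 = malignant (toy labels)
H_b, H_t = precursors(D, labels)
print(H_b.shape, H_t.shape)   # (50, 2) (50, 8)
```

For two classes, \(H_{b}\) has two columns and \(H_{t}\) has one column per sample, so both remain thin even when m is very large.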

4.3 Final linear transformation [two-stage LDA]

After obtaining the intermediate linear transformation Z, the next step is to find the final optimal transformation. After executing step 5 of Algorithm 2, we obtained \(\tilde {H_{b}}\) and \(\tilde {H_{t}}\) (having dimensionality 23 × 2 and 23 × 288, respectively), which are used in step 6 of Algorithm 2, where we used the method proposed in Algorithm 1 for performing LDA (using the precursors \(\tilde {H_{b}}\) and \(\tilde {H_{t}}\)) instead of conventional LDA. The result of this LDA operation at step 6 of Algorithm 2 is a linear transformation \(\tilde {G}\) of dimensionality 23 × 1, whereas the transformation matrix Z from step 4 has dimensionality 10404612 × 23.

After obtaining the linear transformation matrices Z and \(\tilde {G}\), the next step is to obtain the final optimal transformation \(\hat {G}\), which is computed by multiplying the transformation matrices Z (10404612 × 23) and \(\tilde {G}\) (23 × 1). The dimensionality of this final optimal transformation matrix is 10404612 × 1.

4.4 Transforming the data to the reduced dimension

Next we transform our data (each sample being represented as a vector \([1 \times 10404612]^{T}\)) using the linear transformation \(\hat {G}\). We compute the transformation as \(\hat {G}^{T} d_{i}\), where \(d_{i} \in \{d_{1}, d_{2}, \ldots, d_{q}, d_{q+1}, d_{q+2}, \ldots, d_{q+l}\}\), so that \(\hat {G}\) maps each vector d from D to a one-dimensional space. The result of this projection is a single value for each data vector. The next step is to check whether the projected data are linearly separable, and to use this to classify the data into the benign and malignant classes.
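Sections 4.2 to 4.4 can be sketched end to end on toy data as shown below. Several parts are assumptions: the construction of Z (a random projection of \(H_{t}\) followed by QR orthonormalization) is a stand-in chosen for illustration and may differ from steps 1–4 of Algorithm 2 in [14]; the helper `lda_from_precursors`, the \(1/\sqrt{n}\) scaling and all sizes are hypothetical.

```python
import numpy as np

def lda_from_precursors(H_b, H_t, q, tol=1e-10):
    """Top-q eigenvectors of pinv(S_t) S_b, with S = H H^T, via two thin SVDs."""
    U, s, _ = np.linalg.svd(H_t, full_matrices=False)
    keep = s > tol * s.max()
    U, s = U[:, keep], s[keep]
    B = (U.T @ H_b) / s[:, None]
    P, _, _ = np.linalg.svd(B, full_matrices=False)
    return (U / s) @ P[:, :q]

rng = np.random.default_rng(0)
# Toy sizes; the study used 149 benign, 139 malignant, m = 10404612, r = 23.
n_benign, n_malignant, m, r = 7, 5, 200, 6
D = np.vstack([rng.normal(+0.5, 1.0, (n_benign, m)),
               rng.normal(-0.5, 1.0, (n_malignant, m))])
n = n_benign + n_malignant

# Precursors from the class means and the global mean.
c = D.mean(axis=0)
c_b, c_m = D[:n_benign].mean(axis=0), D[n_benign:].mean(axis=0)
H_b = np.column_stack([np.sqrt(n_benign) * (c_b - c),
                       np.sqrt(n_malignant) * (c_m - c)]) / np.sqrt(n)
H_t = (D - c).T / np.sqrt(n)

# Stage 1 (stand-in): orthonormal basis Z of a random r-dimensional
# subspace of the range of H_t.
Z, _ = np.linalg.qr(H_t @ rng.normal(size=(n, r)))

# Stage 2: LDA on the reduced precursors, then map back with Z.
H_b_tilde, H_t_tilde = Z.T @ H_b, Z.T @ H_t                # (r x 2) and (r x n)
G_tilde = lda_from_precursors(H_b_tilde, H_t_tilde, q=1)   # (r x 1)
G_hat = Z @ G_tilde                                        # (m x 1)

# Projection: one value per sample.
y = (D @ G_hat).ravel()
```

The shapes mirror those reported in the text: with r = 23 and m = 10404612, Z is 10404612 × 23, the reduced transformation is 23 × 1, and the final transformation is 10404612 × 1.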

5 Performance evaluation

We classified our projected data with five different classifiers: Support Vector Machine (SVM), Bayesian Network (BN), K-Nearest Neighbour (K-NN), Decision Table (DT) and ADTree. We used Weka [20] for all these experiments (developer version 3.7.2) and left all the classifiers' parameters at their default settings.

As the projected data are one-dimensional, one way to classify them is to use a Support Vector Machine (SVM) [5]. We used Sequential Minimal Optimization (SMO), which is available in Weka [20], as an efficient way to solve the SVM problem [21]. We used a 10-run 10-fold cross-validation (10-FCV) scheme for the performance evaluation of our results.
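The evaluation protocol (10 runs of 10-fold cross-validation on one-dimensional data) can be sketched as follows. This is not the Weka SMO setup used in the study: the classifier here is a simple midpoint threshold between the two training class means, the folds are not stratified, and the function name and synthetic data are hypothetical.

```python
import numpy as np

def repeated_kfold_accuracy(x, y, runs=10, folds=10, seed=0):
    """Mean accuracy of a 1-D midpoint-threshold classifier over runs x folds."""
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(runs):
        order = rng.permutation(len(x))          # new shuffle for every run
        for test_idx in np.array_split(order, folds):
            train_mask = np.ones(len(x), dtype=bool)
            train_mask[test_idx] = False
            x_tr, y_tr = x[train_mask], y[train_mask]
            mean0, mean1 = x_tr[y_tr == 0].mean(), x_tr[y_tr == 1].mean()
            threshold = (mean0 + mean1) / 2.0
            # Predict class 1 on whichever side of the threshold its mean lies.
            pred = (x[test_idx] > threshold) == (mean1 > mean0)
            scores.append(np.mean(pred.astype(int) == y[test_idx]))
    return float(np.mean(scores))

rng = np.random.default_rng(42)
x = np.concatenate([rng.normal(2.0, 0.5, 20), rng.normal(-2.0, 0.5, 20)])
y = np.concatenate([np.zeros(20, dtype=int), np.ones(20, dtype=int)])
acc = repeated_kfold_accuracy(x, y)
print(f"mean accuracy over 10 x 10 folds: {acc:.3f}")
```

Repeating the 10-fold split with different shuffles reduces the dependence of the reported accuracy on any single partition of the data.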

Optimizing the parameter r

As explained in Section 4.2, we selected r = 23. To select this value, we executed the algorithm for a range of values of r starting from 1. After projecting the data to a single dimension, we applied SMO for each value of r from 1 to 29. At r = 23, we found stable and reliable classification results (classification accuracy equal to 85.57%) using the SMO classifier. The classification results achieved for each value of r can be seen in Fig. 4.

Classification results for the SMO classifier as well as for 4 other classifiers, i.e., BN, KNN, DT, and ADTree (using 10-runs 10-FCV) on the transformed data by using the Scalable-LDA approach can be found in the first column of Table 1.

The other four classifiers improved on the SMO (SVM) result; averaged over all five classifiers, the accuracy was 96%.

In addition to classification accuracy, another commonly used evaluation metric is area under the ROC curve (AUC) (normally denoted as Az), which is used to explain the diagnostic ability of the classifier [22]. The values of Az by using 10-FCV classification scheme for 5 classifiers: SMO, BN, KNN, DT, ADTree are 0.853, 0.975, 0.972, 0.975, and 0.985, respectively, which indicates the stability of the classifiers (excluding SMO).
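For reference, the area under the ROC curve can be computed directly from classifier scores through the Mann-Whitney statistic, as in the sketch below. The function name and the scores are hypothetical; the \(A_{z}\) values reported above were obtained with Weka.

```python
import numpy as np

def auc_from_scores(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.
    labels: 1 for the positive class, 0 for the negative class."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    # Count positive/negative pairs ranked correctly; ties count one half.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]   # hypothetical classifier scores
labels = [1, 1, 1, 0, 0, 0]
az = auc_from_scores(scores, labels)       # 8 of 9 pairs ordered correctly
```

A value of 1 means every positive sample is scored above every negative one, whereas 0.5 corresponds to chance-level ranking.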

About projected data

While examining the projected data, we found that values ≥ 0 are classified as benign, whereas values < 0 are classified as malignant, as shown in Fig. 5. The results in Fig. 5 cover 139 images from the malignant and 149 images from the benign class. It can also be clearly seen from Fig. 5 that, apart from a small number of cases, the linearly transformed micro-calcification data are linearly separable.
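The decision rule observed above amounts to thresholding the projected value at zero. A minimal sketch, with a hypothetical function name and made-up projected values:

```python
import numpy as np

def classify_by_sign(projected):
    """Label projected values: >= 0 -> 'benign', < 0 -> 'malignant'."""
    projected = np.asarray(projected, dtype=float)
    return np.where(projected >= 0, "benign", "malignant")

values = np.array([0.8, -1.2, 0.0, -0.05])   # hypothetical projected values
labels = classify_by_sign(values)
```

Note that the sign of an LDA direction is arbitrary in general, so which class falls on the positive side depends on the particular transformation obtained.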

Fig. 5 Transformed micro-calcification data. Almost all the malignant images have been transformed to the negative axis, whereas the benign images have been transformed to the positive axis. Only a small number of images have been misclassified; these are shown at the left-hand side of each curve for the benign and malignant classes

Figures 6 and 7 show sample cases (for both the benign and malignant classes) from the referenced dataset that have been correctly classified by the developed approach. The projected value for each of these RoIs can also be seen in the figure captions.

6 Discussion on misclassified data

We examined the instances that were misclassified by the classifiers used in our experiments (SMO, BN, KNN, DT and ADTree). We found 3 instances that were misclassified by all the classifiers (1 from the benign and 2 from the malignant class). Upon investigating the corresponding images, the benign image is very similar in appearance to the malignant images (having a dense cluster of micro-calcifications), one malignant image is similar to benign RoIs, and the other malignant image has no detected micro-calcifications. These three misclassified RoIs are shown in Fig. 8.

As stated in Section 1, several two-stage approaches have also been proposed for LDA implementation [3, 4], which reduce the dimensionality of the data at the first stage before applying the actual LDA algorithm. We applied one such version of LDA to our micro-calcification data for benign and malignant classification: the data were first transformed to a low-dimensional space by using PCA, and LDA was then applied to obtain the final linear transformation.

We used the Gram matrix (Appendix A) for the computation of the eigenvectors required for PCA. If D represents a data matrix, then the Gram matrix for D is \(DD^{T}\). The Gram matrix has been used in the literature for solving such eigenvalue problems and for dimensionality reduction of large data sets [23, 24]. As our data matrix D has dimension n × m with m ≫ n (for our dataset m = 10404612, n = 288), it is far cheaper to compute the eigenvectors of the n × n matrix \(DD^{T}\) than those of the m × m matrix \(D^{T}D\). The relationship between the two matrices \(D^{T}D\) and \(DD^{T}\) can be found in Appendix B. After computing the eigenvectors ν of \(DD^{T}\), we left-multiply ν by \(D^{T}\) in order to get the eigenvectors of \(D^{T}D\). Before forming \(DD^{T}\), we normalized the data matrix D to zero mean, so that \(D^{T}D\) is proportional to the covariance matrix of the data.
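The Gram-matrix route to the principal directions can be sketched as below. The toy sizes and the number of retained components are assumptions; the point is that only an n × n eigenproblem is solved.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 6, 40                          # toy sizes; the study had n = 288, m = 10404612
D = rng.normal(size=(n, m))
D = D - D.mean(axis=0)                # centre each feature to zero mean

gram = D @ D.T                        # n x n, cheap when n << m
eigvals, V = np.linalg.eigh(gram)     # eigenvalues in ascending order
order = np.argsort(eigvals)[::-1][:3] # keep the 3 largest (hypothetical choice)
eigvals, V = eigvals[order], V[:, order]

U = D.T @ V                           # left-multiply by D^T: eigenvectors of D^T D
U /= np.linalg.norm(U, axis=0)        # normalise each column to unit length

reduced = D @ U                       # data projected onto the 3 principal directions
```

The columns of `U` are eigenvectors of \(D^{T}D\) with the same non-zero eigenvalues as the Gram matrix, obtained without ever forming the m × m matrix.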

First we applied PCA to our data, setting the intermediate dimension to 10. We tried to set this dimension to 23, in order to be consistent with the Scalable-LDA approach (Section 4), but due to memory limitations we had to set the intermediate dimension to 10. It should be noted that we used the Gram matrix for computing the eigenvectors, in order to cope with the memory requirements of computing the eigenvectors of \(D^{T}D\). After that we transformed this reduced-dimensional data to another linear space by using LDA. We used the LDA algorithm available in sklearn, a machine learning library for Python. The Python version used in this experiment was 2.7.0. The results of transforming the data matrix D to a single dimension after applying LDA can be seen in Fig. 9. As can be clearly seen from the figure, the data are not linearly separable. We used the same classifiers (SMO, BN, KNN, DT, and ADTree) and the average classification accuracy was 52%, which is far lower than the accuracy achieved by the developed approach (i.e. 96%), with details provided in Table 1. The proposed scalable-LDA approach with the intermediate dimension r set to 10 resulted in a classification accuracy of 75% using the SMO classifier, whereas the results for the BN, KNN, DT and ADTree classifiers were similar to those in Table 1.

Fig. 9 Transformed micro-calcification data obtained by using the PCA-LDA approach. As can be seen, the data could not be classified correctly based on the transformed data. This is also in accordance with the results presented in column 2 of Table 1, according to which an average classification accuracy of 52% could be achieved from the data transformed by the PCA-LDA approach

6.1 Comparative analysis

Multiple methods have been developed in the past for the purpose of classifying benign and malignant micro-calcifications [25, 26]. Some of these approaches rely on the features extracted from individual calcifications [27, 28], whereas some focused on extracting global features from clusters of calcifications [29,30,31,32]. Ma et al. [27], suggested the roughness of the individual micro-calcification as a discriminatory property for classifying benign and malignant micro-calcifications. Similarly, other shape features (measures of compactness, moments and Fourier descriptors) have also been studied in the past [28] to measure the roughness of the contours of calcifications and then used as a measure of classifying the benign and malignant micro-calcifications. In a work related to extracting cluster level features of micro-calcifications [29], 23 features were extracted from micro-calcifications. The features were categorized into three general types as: intensity statistics, shape features and linear structure features. They also used balanced learning and optimized decision making for the classification of the micro-calcification clusters. Final results used two classifiers (Artificial Neural Network (ANN) and Support Vector Machine (SVM)) for classifying benign and malignant micro-calcifications. In addition, topological models have also been studied in the past for modeling and classification of micro-calcifications [30,31,32]. Both fixed-scale [32] and multi-scale approaches [30, 31] have been proposed in the past for classifying the micro-calcifications with promising results. In addition, the topological models are also useful for radiologists/doctors for visual interpretation of the underlying micro-calcification’s structure.

Apart from the methods developed for manual feature extraction (either for individual calcifications or at cluster level), to our knowledge no method has been developed so far that focuses on automatic feature extraction through dimensionality reduction techniques for classifying benign and malignant micro-calcifications. The work in this paper presents a novel application of feature extraction through dimensionality reduction techniques for classifying micro-calcifications as benign or malignant. The results are comparable with other state-of-the-art approaches developed to solve the same problem. A detailed comparison of the proposed technique with the state-of-the-art approaches is shown in Table 2.

The performance measures used for the comparison are Classification Accuracy and area under the ROC curve (Az). The comparison has been made with 6 existing methods. For the current work, average values for the classification accuracy and Az are reported for the 5 classifiers used in the experiments.

7 Future work

The basic purpose of this research was to develop a method to classify binary images which contain benign or malignant micro-calcifications. In the future, we will try to use this scalable-LDA approach for the classification of normal and abnormal mammographic images from the DDSM database. This will require converting the mammographic images into a binary representation before applying the scalable-LDA approach.

Although the results are satisfactory (average accuracy 96%), other methods exist in the literature for making LDA/PCA scalable [3, 4, 33], and we will do some comparative work by applying these approaches to the same dataset.

8 Conclusions

We executed a two-stage LDA approach on binary data representing benign and malignant micro-calcifications. The idea was to project the data onto a low-dimensional space and then use the resulting information to classify the data into two classes, but undersampling caused problems for conventional LDA. By implementing a two-stage LDA approach, we achieved results that are comparable to state-of-the-art approaches. The current method also presents a way to encode binary micro-calcification data as a single value. We achieved an accuracy of 96% on average for 5 classifiers, with the best performance at 98.6%, by applying a scalable LDA approach for the classification of benign and malignant micro-calcifications. We compared the results with those obtained by applying PCA-LDA to the same data, which indicated a clear difference between the two approaches (Table 1, column 2).

Data availability

The data that support the findings of this study are available from Prof. Reyer Zwiggelaar, Aberystwyth University but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of Prof. Reyer Zwiggelaar, Aberystwyth University.

References

[1] Krzanowski WJ, Jonathan P, McCarthy WV, Thomas MR (1995) Discriminant analysis with singular covariance matrices: methods and applications to spectroscopic data. Appl Stat 44:101–115

[2] Berry MW, Dumais ST, O’Brien GW (1995) Using linear algebra for intelligent information retrieval. SIAM Rev 37(4):573–595

[3] Belhumeur PN, Kriegman DJ, Hespanha JP (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

[4] Ye J, Li Q (2005) A two-stage linear discriminant analysis via QR-decomposition. IEEE Trans Pattern Anal Mach Intell 27(6):929–941

[5] Bishop CM (2006) Pattern recognition and machine learning. Springer, New York

[6] Li C, Diao Y, Ma H, Li Y (2008) A statistical PCA method for face recognition. In: Second international symposium on intelligent information technology application, 2008 IITA’08, vol 3. IEEE, pp 376–380

[7] Karamizadeh S, Abdullah SM, Manaf AA, Zamani M, Hooman A (2013) An overview of principal component analysis. J Signal Inform Process 4(3B):173

[8] Etemad K, Chellappa R (1997) Discriminant analysis for recognition of human face images. JOSA A 14(8):1724–1733

[9] Crask MR, Perreault WD Jr (1977) Validation of discriminant analysis in marketing research. J Mark Res 14:60–68

[10] Dudoit S, Fridlyand J, Speed TP (2002) Comparison of discrimination methods for the classification of tumors using gene expression data. J Am Stat Assoc 97(457):77–87

[11] Duda RO, Hart PE, Stork DG (2000) Pattern classification, 2nd edn. Wiley, New York, p 153

[12] Fukunaga K (1990) Introduction to statistical pattern recognition

[13] Thomaz CE, Kitani EC, Gillies DF (2006) A maximum uncertainty LDA-based approach for limited sample size problems-with application to face recognition. J Braz Comput Soc 12(2):7–18

[14] Tu B, Zhang Z, Wang S, Qian H (2014) Making fisher discriminant analysis scalable. In: Proceedings of the 31st international conference on machine learning, pp 964–972

[15] Heath M, Bowyer K, Kopans D, Moore R, Kegelmeyer WP (2000) The digital database for screening mammography. In: Proceedings of the 5th international workshop on digital mammography, pp 212–218

[16] Oliver A, Torrent A, Lladó X, Tortajada M, Tortajada L, Sentis M, Freixenet J, Zwiggelaar R (2012) Automatic microcalcification and cluster detection for digital and digitised mammograms. Knowl-Based Syst 28:68–75

[17] Raudys S, Duin R (1998) Expected classification error of the Fisher linear classifier with pseudo-inverse covariance matrix. Pattern Recogn Lett 19(5):385–392

[18] Skurichina M, Duin R (1996) Stabilizing classifiers for very small sample sizes. In: Proceedings of the 13th international conference on pattern recognition, 1996, vol 2. IEEE, pp 891–896

[19] Ye J (2005) Characterization of a family of algorithms for generalized discriminant analysis on undersampled problems. J Mach Learn Res 6:483–502

[20] Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The WEKA data mining software: an update. ACM SIGKDD Explorations Newsletter 11(1):10–18

[21] Platt JC (1999) Fast training of support vector machines using sequential minimal optimization. Advances in Kernel Methods, pp 185–208

[22] Beck JR, Shultz EK (1986) The use of relative operating characteristic (ROC) curves in test performance evaluation. Arch Pathol Lab Med 110(1):13–20

[23] Saul LK, Weinberger KQ, Ham JH, Sha F, Lee DD (2006) Spectral methods for dimensionality reduction. Semisupervised Learning:293–308

[24] Weinberger KQ, Saul L (2006) Unsupervised learning of image manifolds by semidefinite programming. Int J Comput Vis 70(1):77–90

[25] Elter M, Horsch A (2009) CADx of mammographic masses and clustered microcalcifications: a review. Med Phys 36(6):2052–2068

[26] Cheng HD, Cai X, Chen X, Hu L, Lou X (2003) Computer-aided detection and classification of microcalcifications in mammograms: a survey. Pattern Recogn 36(12):2967–2991

[27] Ma Y, Tay PC, Adams RD, Zhang JZ (2010) A novel shape feature to classify microcalcifications. In: 2010 17th IEEE international conference on image processing (ICIP). IEEE, pp 2265–2268

[28] Shen L, Rangayyan RM, Desautels JL (1994) Application of shape analysis to mammographic calcifications. IEEE Trans Med Imaging 13(2):263–274

[29] Ren J (2012) ANN vs SVM: which one performs better in classification of MCCs in mammogram imaging. Knowl-Based Syst 26:144–153

[30] Chen Z, Strange H, Oliver A, Denton ER, Boggis C, Zwiggelaar R (2015) Topological modeling and classification of mammographic microcalcification clusters. IEEE Trans Biomed Eng 62(4):1203–1214

[31] Strange H, Chen Z, Denton ER, Zwiggelaar R (2014) Modelling mammographic microcalcification clusters using persistent mereotopology. Pattern Recogn Lett 47:157–163

[32] Suhail Z, Denton ER, Zwiggelaar R (2017) Tree-based modelling for the classification of mammographic benign and malignant micro-calcification clusters. Multimedia Tools and Applications, pp 1–14

[33] Zabalza J, Ren J, Yang M, Zhang Y, Wang J, Marshall S, Han J (2014) Novel folded-PCA for improved feature extraction and data reduction with hyperspectral imaging and SAR in remote sensing. ISPRS J Photogramm Remote Sens 93:112–122

Acknowledgements

The authors would like to thank Dr. N. Mac Parthaláin for providing assistance to solve the classification problem as well as discussion regarding feature selection and optimization. The authors would also like to acknowledge Sandy Spence and Alun Jones for providing extensive hardware support to complete the experiments.

Funding

ZS carried out the implementation as well as the analysis and results evaluation. ED did the data augmentation and provided the class labels for the dataset. RZ suggested the overall model to solve the classification problem along with the overall coordination. All authors contributed to the written manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Appendices

Appendix A: Gram matrix

Consider m vectors \(x_{1}, \ldots, x_{m} \in \mathbb {R}^{n}\). The Gram matrix for this data collection is the m × m matrix G with elements \(G_{ij} = {x_{i}^{T}}x_{j}\). With the matrix \(X = [x_{1}, \ldots, x_{m}]\), the Gram matrix can be expressed as

\[
G = X^{T}X
\]

Appendix B: Eigenvectors from Gram matrix

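Let A be a matrix of dimension m × n. The Gram matrix of A can be computed as \(AA^{T}\). If ν is an eigenvector of the Gram matrix \(AA^{T}\) with eigenvalue λ, then

\[
AA^{T}\nu = \lambda \nu
\]

Left-multiplying both sides by \(A^{T}\), we get

\[
A^{T}AA^{T}\nu = \lambda A^{T}\nu
\]

Then by re-arranging terms:

\[
\left(A^{T}A\right)\left(A^{T}\nu\right) = \lambda \left(A^{T}\nu\right)
\]

Therefore, if ν is an eigenvector of \(AA^{T}\), then \(A^{T}\nu\) is an eigenvector of \(A^{T}A\) with the same eigenvalue λ (provided \(A^{T}\nu \neq 0\)).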

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Suhail, Z., Denton, E.R.E. & Zwiggelaar, R. Classification of micro-calcification in mammograms using scalable linear Fisher discriminant analysis. Med Biol Eng Comput 56, 1475–1485 (2018). https://doi.org/10.1007/s11517-017-1774-z
