Abstract

With rapid technological progress, the adoption of digital technology in human resource management (HRM) has become a crucial step towards the vision of digital organizations. Over the last four decades, a substantial body of empirical research has been dedicated to explaining the phenomenon of digital HRM. This research has applied a wide array of theories, constructs, and measures to explain the adoption of digital HRM in organizations, resulting in fragmented theoretical foundations and inconsistent empirical evaluations. We provide a comprehensive overview of theories applied in digital HRM adoption research and propose an adjusted version of the unified theory of acceptance and use of technology as a consolidating theory to explain adoption across settings. We empirically validate this theory by combining evidence from 134 primary studies yielding 768 effect sizes via meta-analytic structural equation modelling. Moderator analyses assessing the influence of research setting and sample on effects show significant differences between the private and public sectors. The findings highlight opportunities for future research and implications for practitioners.

1 Introduction

During recent decades, research on the adoption of digital technology in supporting HR activities has relied on various theoretical foundations that have evolved independently (Bondarouk and Ruël 2009; Larkin 2017; Strohmeier 2007, 2020). This plethora of theoretical tangents leaves theory in the field of digital Human Resource Management (HRM) in a fragmented state (Martin and Reddington 2010; Ruël et al. 2011; Strohmeier 2012) and may even lead authors to “cherry-pick” the model that best fits their data (Venkatesh et al. 2003). Merging these tangents under the umbrella of a unifying theory not only allows the integration of existing evidence but also offers a common theoretical understanding, or baseline, that future research can build on. Our study pursues this goal by presenting an integrative overview of theory applied in digital HRM adoption research. We show that a modified version of the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh et al. 2003) consolidates a large proportion of the individual theories applied in digital HRM adoption research. We then systematically and meta-analytically synthesize the field’s quantitative evidence to empirically validate UTAUT as a unifying theory, motivating, discussing, and testing potential mediators and moderators along the way.

Adoption by end-users has frequently been proposed as the crucial link between the technical implementation of digital HRM and its contribution to organizational effectiveness (Ruël and Bondarouk 2014). At the same time, there is no consensus on the key constructs that explain such adoption. This lack of a common theoretical basis severely limits the comparability, let alone the reproducibility, of current results and leads to inconsistent empirical evaluations (Bondarouk et al. 2017). Accordingly, for almost fifteen years now, reviews in the field have repeatedly called for a comprehensive examination of adoption factors, which is still missing (Bondarouk et al. 2017; Ruël and Bondarouk 2014; Strohmeier 2007). Proposing a unifying, generalizable theory that includes a predefined set of constructs, provides recommended operationalizations, and offers specific testable hypotheses is a vital step to address this issue. Earlier works have made a case for UTAUT as a candidate for such a unifying concept in digital HRM by applying the theory in individual studies (e.g., Laumer et al. 2010; Obeidat 2016; Ruta 2005). However, to serve this consolidating purpose, it remains to be shown both that UTAUT integrates earlier research efforts on the phenomenon and that its validity holds across digital HRM settings. To this end, we take a theory-driven approach to show that UTAUT integrates earlier research efforts and meta-analytically confirm the validity of the paradigm across digital HRM settings. In doing so, we complement the set of exclusively narrative and qualitative reviews in the field (e.g., Bondarouk et al. 2017; Strohmeier 2007; Tursunbayeva et al. 2017), provide the much-needed common theoretical basis, and offer guidance for more consistent and reproducible future research. Beyond this general objective, we make two additional contributions that address the idiosyncrasies of UTAUT in digital HRM.

First, UTAUT has been proposed in different versions, including a varying set of constructs when applied in various contexts. The discussions center around an appropriate set of predictors (e.g., Sykes et al. 2009; Venkatesh et al. 2012) as well as suitable mediators to explain usage (e.g., Chang et al. 2016; Rondan-Cataluña et al. 2015). For instance, concerning user attitudes, scholars have argued for (e.g., Dwivedi et al. 2019; Huseynov and Özkan Yıldırım 2019; Oshlyansky et al. 2007) as well as against (e.g., Chang et al. 2016; Venkatesh et al. 2012; Yi et al. 2006) the inclusion of this construct as a mediator. Moreover, different variants of the theory have been gradually introduced, including a version based on meta-analytic results (meta-UTAUT; Dwivedi et al. 2019, 2020). As the choice of constructs may significantly improve the explanatory power of the theory, it is of vital importance to motivate and test adjustments to the UTAUT framework to guide future evaluations. Here, we aim to test whether the original set of predictors is suitable to explain adoption in digital HRM and whether common mediators, namely attitudes and intentions, emerge as relevant in our field.

Second, within the UTAUT literature, there has been an ongoing debate on a suitable set of moderators (Dwivedi et al. 2019; Venkatesh 2022). For example, both individual characteristics and environmental variables may moderate relationships, depending on context. This discussion is especially relevant in digital HRM because the field combines research in the preceding concepts of Human Resource Information Systems (HRIS) (e.g., DeSanctis 1986; Mathys and LaVan 1982; Mayer 1971; Tomeski and Lazarus 1974) and Electronic Human Resource Management (e-HRM) as well as its subsets such as e-recruiting or organizational e-learning (e.g., Lengnick-Hall and Moritz 2003; Lepak and Snell 1998; Ruël et al. 2004; Strohmeier 2007). Accordingly, scholars have studied technology adoption across various HR functions, organizational settings, and various groups of stakeholders (Strohmeier 2007). For this reason, research may greatly benefit from comparisons of adoption across contexts. Yet, the options for comparing associations in different settings and samples are severely limited within a single study. In contrast, summarizing evidence across numerous studies meta-analytically allows assessing the influence of various study characteristics on relationships between constructs. More specifically, such tests may reinforce the value of a unifying theory by attesting to its generalizability.

In brief, our review summarizes theory in empirical research as a basis (1) to meta-analytically test the validity of UTAUT as an overarching and consolidating theory to explain digital HRM adoption across settings, (2) to propose field-specific adjustments to the theory, and (3) to examine whether study-level characteristics moderate relationships between individual constructs. This allows us to test existing field-specific hypotheses, explore the influence of other possible moderators, and offer a baseline theory that guides future research towards a more unified view of the phenomenon.

2 Motivation—technology adoption research in digital human resource management

2.1 Overview of theoretical foundations

The field of digital HRM can be characterized as a confluence of two streams of scholarly work, research on Information Systems (IS) and research on Human Resources (HR). Additionally, some theoretical perspectives in both streams trace their origins back to psychology, e.g., the Theory of Reasoned Action (Fishbein and Ajzen 1975) or the Theory of Planned Behavior (Ajzen 1991). In empirical digital HRM studies, scholars have drawn primarily and extensively on dominant IS paradigms (Marler et al. 2009; Marler and Dulebohn 2005). While all these theories aim to explain the adoption and use of digital HRM in some capacity, they rely on varying sets of constructs and operationalizations. Yet, for our present goal of summarizing evidence across primary studies, we should choose a theory that (1) allows us to include as much primary research as possible, (2) without sacrificing the integrity of primary study constructs, i.e., primary study constructs should have conceptual equivalents in the unifying theory.

An informed decision in this matter requires a more in-depth examination of theory applied in digital HRM research. To this end, we conducted a scoping review (cf. Pham et al. 2014). As frequently suggested, we use this scoping review as a preliminary analysis to inform our systematic review (Arksey and O’Malley 2005) by summarizing earlier research findings and by mapping the heterogeneous body of empirical literature on digital HRM (Mays et al. 2001). We collected empirical studies that examined digital HRM adoption and reported some sort of effect size. The studies were either cited in one of the earlier, narrative digital HRM reviews or resulted from an initial search in the Web of Science. We did not enforce any additional inclusion criteria for this scoping procedure. This yielded a sample of 171 studies. Note that this set should not be seen as an exhaustive list of digital HRM adoption research but rather as a comprehensive and, for the purpose at hand, representative sample of empirical studies in our field. Out of the 171 studies, only 36 employed some sort of digital HRM-specific theory. The remaining 135 contributions adapted theoretical frameworks from IS research, with some researchers even combining multiple frameworks. Table 1 summarizes the prevalence of individual theories in this 135-study sample. For each theory, the prevalence in empirical digital HRM literature, i.e., the number and percentage of applications of the respective paradigm, is listed in the rightmost column. As some studies combined two theories, the total number of applications sums up to 162.

Our scoping review yields three important insights. First, we see a clear dominance of IS theories over field-specific paradigms. Almost 80% of the studies in our scoping review (135 out of 171) relied on established paradigms from IS research. A simple and plausible explanation for this dominance is the relative maturity of IS theories, as each model has been evaluated across various IS subfields. These theories also include a predefined set of constructs, provide recommended operationalizations, and come with various testable propositions. While this general trend of merely adapting existing theories limits theory building, it also makes constructs comparable across studies. A second observation is the dominance of the original version of TAM (Davis 1989) in quantitative research on digital HRM antecedents. This potentially poses problems because TAM, in its original form, has been extensively criticized for its poor explanatory and predictive power and for its disputed heuristic value (Bagozzi 2007; Chuttur 2009; Legris et al. 2003; Venkatesh 2000). Following this reasoning, we decided against testing TAM in our study, even though it emerges as the most prevalent theory. Last, this multitude of frameworks confirms the fragmented state of theory in digital HRM. Yet, our tabulation also suggests that efforts to consolidate theory may be fruitful. Table 1 demonstrates that most theories applied in digital HRM either (1) are one of the eight theories that were unified to form the original UTAUT or (2) have at least some conceptually equivalent constructs in UTAUT. To illustrate this claim, our summary (Table 1) also shows the primary study constructs and their respective UTAUT equivalents for each applied theory.

Given these similarities, both conceptual and empirical, it seems appropriate to test the validity of UTAUT as an overarching and consolidating theory to explain digital HRM adoption. Additionally, by summarizing primary study constructs under the umbrella of meta-analytic constructs, i.e., UTAUT constructs, all theories prevalent in digital HRM are consolidated in a fashion similar to Venkatesh et al.’s original article. More broadly, we are interested in the explanatory value of UTAUT in the field of digital HRM. Thus, we not only examine the bivariate relationships between all UTAUT constructs but also use meta-analytic structural equation modelling to evaluate the fit of the model to the meta-analytically pooled results.

2.2 Field specific adjustments to UTAUT

We made two adjustments to the original UTAUT for the present study, the introduction of user attitudes as a central construct and the inclusion of moderators specific to digital HRM research.

In line with earlier contributions, we hypothesize that user attitudes mediate the relationships between all exogenous constructs and behavioral intention while having a merely indirect effect on usage. The inclusion of attitude has been proposed in general IS theories (Ajzen 1991; Fishbein and Ajzen 1975), in research studying technology adoption and use (Kim et al. 2009; Taylor and Todd 1995; Yang and Yoo 2004), as well as in UTAUT studies specifically (e.g., Dwivedi et al. 2019; Huseynov and Özkan Yıldırım 2019; Oshlyansky et al. 2007). In addition, it has been argued that an individual’s behavior towards using a new technology is shaped by attitude and that the construct must be considered to adequately explain intentions and use behavior (Chong 2013; Rana et al. 2017). Consequently, attitude is introduced as (1) a direct antecedent of behavioral intention (Dwivedi et al. 2017; Fishbein and Ajzen 1975) and (2) a direct consequence of performance expectancy, effort expectancy, social influence, and facilitating conditions (Dwivedi et al. 2020; Šumak et al. 2010). Conceptually, we hypothesize that digital HRM users form attitudes towards the innovation based on their perceptions, the opinions of others, and the support provided. These attitudes are assumed to be linked to the users’ intentions to use the innovation and, in turn, to influence their actual use behavior (Guimaraes and Igbaria 1997). The inclusion of attitude is also consistent with a recently introduced approach to UTAUT based on meta-analytic results (meta-UTAUT; Dwivedi et al. 2019, 2020). Our proposed model corresponds to meta-UTAUT with one exception. The original UTAUT hypothesized no direct influence of facilitating conditions on behavioral intentions when effort expectancy is also included in the model (Venkatesh et al. 2003). We argue that this claim might still hold because the empirical evidence cited to justify the inclusion of this path is based exclusively on models with intentions as the only dependent variable (Eckhardt et al. 2009; Foon and Fah 2011; Yeow and Loo 2009). Thus, when trying to explain actual usage, as in the present study, we do not hypothesize a direct path between facilitating conditions and behavioral intentions.

Our second adjustment aims at investigating the dynamic nature of technology adoption and use in more detail. To this end, we take a contingency approach to applying UTAUT by examining a wide range of potential moderators (cf. Venkatesh et al. 2003). More specifically, our study considers three sets of characteristics. First, digital HRM research has introduced various study-level characteristics that may influence relationships between digital HRM usage and its antecedents. As these potential moderators have been proposed specifically in our field of research, we apply a confirmatory approach to test their presence. In particular, authors have proposed the sector of the organization (Panayotopoulou et al. 2007; Shilpa and Gopal 2011; Strohmeier and Kabst 2009), the background of study respondents (Bondarouk et al. 2009; Bondarouk and Ruël 2009; Bos-Nehles and Meijerink 2018), and regional context (Florkowski and Olivas‐Luján 2006; Ramirez and Zapata-Cantú 2008; Strohmeier and Kabst 2009) as potentially influential characteristics. Sector characteristics can facilitate or inhibit digital HRM adoption because the use of technology and employees’ computer literacy vary greatly across sectors (Strohmeier and Kabst 2009). Similarly, respondents with an HR background are expected to assess the usefulness of a prospective digital HRM system or service more accurately than employees without such a background (Bondarouk and Ruël 2009). Concerning study region, users in “Western” and “Eastern” economies, with their respective cultural differences, may perceive adoption factors differently (Bondarouk and Ruël 2009); e.g., users in collectivist cultures may be more affected by social influence regarding system use. Second, following the original UTAUT model, we also assessed the influence of respondents’ gender and age on relationships. However, since users’ prior experience and the voluntariness of use are scarcely researched in the field of digital HRM, we could not include these characteristics in our moderator analysis. We complement this second, exploratory set of moderators with publication year to capture possible changes in outcomes over time. Finally, we examine the impact of methodological characteristics such as the functional scope of the implementation (see below), peer-review status, and level of analysis on effect sizes.

In sum, from a theory-building perspective, our first adjustment allows us to evaluate whether the inclusion of attitudes helps to explain usage in our field and whether future contributions would benefit from measuring this construct. In addition, our second adjustment may inform future theory-building efforts by indicating whether different models, or different links between constructs, are needed to explain digital HRM usage in a particular context or for specific user backgrounds. The adjusted unifying research model is visualized in Fig. 1.

2.3 Measures—UTAUT constructs in the field of digital HRM

Next, we briefly discuss how the individual UTAUT constructs are operationalized in digital HRM research. Hereby, we aim to show that the aforementioned commonalities across theories also extend to the respective measurement items. Besides the direct application of UTAUT scales (e.g., Eckhardt et al. 2009; Laumer et al. 2010; Obeidat 2016; Wong and Huang 2011), digital HRM research uses various representative study constructs to capture the individual concepts. Following the classification of Venkatesh et al. (2003), we summarize these definitions under the umbrella of the corresponding UTAUT constructs. Construct definitions and representative constructs are presented with example measures in Table 2.

Given the prevalence of TAM in digital HRM research, it is not surprising that performance and effort expectancy are frequently measured in the form of perceived usefulness (Chen 2010; Marler et al. 2009; Roca et al. 2006) and perceived ease of use (Konradt et al. 2006; Wahyudi and Park 2014; Yusliza and Ramayah 2012), respectively. The same holds for the construct of subjective norm as a substitute for social influence (Iyer et al. 2020; Laumer et al. 2010; Lin 2010). Facilitating conditions are, by definition, a relatively broad concept. Yet, the most common operationalization in digital HRM assesses the compatibility with existing aspects of work, values, and needs or, alternatively, the degree of organizational support (Al-Dmour 2014; Parry and Wilson 2009; Purnomo and Lee 2013). In comparison to these exogenous constructs, the measures for attitude and behavioral intention are fairly consistent. Scales for attitude vary between more general measures (Maier et al. 2013; Voermans and van Veldhoven 2007; Yoo et al. 2012) and more field-specific ones, e.g., attitudes towards using recruitment websites (van Birgelen et al. 2008). Scales for behavioral intention show little variation across primary studies (Erdoğmuş and Esen 2011; Luor et al. 2009; Marler et al. 2006). Finally, since the construct of digital HRM usage shows various idiosyncratic properties, it requires a more detailed definition.

Following Burton-Jones and Gallivan (2007), we define digital HRM usage as the degree of applying digital technologies by an individual or collective actor to perform HRM tasks. Most commonly, this main construct is measured as frequency of use on various scales (Njoku 2016; Sabir et al. 2015; Wickramasinghe 2010). Other operationalizations capturing the level of implementation and application, e.g., what HR functions are supported by digital HRM, are less common (Al-Dmour 2014; Geetha 2017; Morsy and El Demerdash 2017).

This conceptualization is rather inclusive in that it incorporates use behavior for different user types with varying functional scope (e.g., broader e-HRM adoption vs narrower e-recruiting adoption). Thus, it is potentially subject to a common criticism of meta-analysis, namely that authors compare “apples and oranges” (Durlak and Lipsey 1991; Sharpe 1997).

Importantly, we aim to derive conclusions for the research area of digital HRM as a whole rather than for one specific type of digital HRM. As such, we argue that it is not only valid but necessary to include all available evidence across usage types. All types of usage examined in primary studies share the overarching objective of supporting HR activities via digital technologies. Moreover, definitions of this construct, where they vary, still remain within the boundaries of our well-confined subfield. By comparison, we argue that earlier meta-analytic contributions synthesizing general IS research across subfields necessarily introduce even broader usage constructs.

Nevertheless, we specifically address this concern by introducing the functional scope of implementation as a domain-specific moderator in both of our analyses. In particular, we examine potential variations between two groups of contributions to evaluate our decision to pool results across multiple types of digital HRM usage. On one side, we find studies in which the digital HRM implementation affects an array of HR functions, e.g., a joint migration to digital selection, compensation, and performance management. In contrast, other studies have investigated the impact on a single HR function in isolation, e.g., the introduction of a dedicated e-recruiting website or an employee self-service. Note that the current body of literature only allows such cursory examinations of differences. More detailed and theoretically meaningful tests, e.g., comparing results from e-recruiting studies with those from e-performance management studies, are not yet possible because the number of studies in each subgroup is very limited. Consequently, such analyses would lack adequate statistical power due to insufficient studies per subgroup as well as unbalanced subgroups (cf. Cuijpers et al. 2021).

3 Method

To test our proposed theory, we performed meta-analytic structural equation modelling (MASEM), which combines the strengths of meta-analysis and structural equation modelling (Jak and Cheung 2019). In particular, the approach allows us to evaluate the fit of our hypothesized model to meta-analytically pooled data. Recently, Jeyaraj and Dwivedi (2020) introduced a categorization of meta-analyses in IS based on outcome measures (derived metrics or reported effect sizes) and research approach (exploratory or confirmatory). Even though our research approach shows traits of an exploratory meta-analysis, e.g., meta-regression analyses that reveal new knowledge regarding moderators, we consider our study a confirmatory meta-analysis with reported effect sizes.

3.1 Search strategy

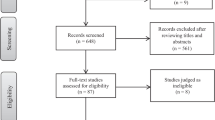

Our general sampling frame covered all empirical evidence published in English on the usage of digital HRM and its antecedents. We did not limit the search to a specific time period. To identify suitable primary studies, we first performed various keyword searches in several electronic databases, including Web of Science, Business Source Premier, JSTOR, EBSCOHost, and Google Scholar. We supplemented the database searches with backward and forward snowballing, i.e., we both examined the references of a relevant study and inspected the articles that cited that study (Jalali and Wohlin 2012). Likewise, we consulted highly cited digital HRM reviews for suitable primary research. Finally, to mitigate publication bias, we additionally searched “grey literature” (Durlak and Lipsey 1991; Rothstein et al. 2005), in particular relevant doctoral dissertations (e.g., via ProQuest Dissertations and Theses), conference proceedings, and working papers (e.g., via ResearchGate and SSRN). Search keywords revolved around the core construct of digital HRM usage across various HR functions (e.g., e-HRM, HRIS, e-recruiting, organizational e-learning, and e-performance management), UTAUT constructs including their representative primary study constructs (see Table 2), and the desired outcome characteristics (e.g., correlation, quantitative, and empirical). This set of keywords was iteratively updated to ensure that no relevant evidence was missed. A list of search terms is provided in the supplementary material (Appendix B). The search process was concluded in December 2021 and yielded 3,545 results after removing duplicates. Preliminary title and abstract screening left a sample of 1,594 candidate studies. These studies had to pass four preset criteria to be included in the final analysis. First, a document had to report quantitative results and analyze primary data. Thus, reviews and other syntheses of existing research were excluded, although suitable primary studies were extracted from them. Second, at least one relationship between any two UTAUT constructs as defined above had to be analyzed. Third, in case of sample overlap, i.e., two studies analyzing the same dataset, we included the study that reported more usable effect sizes; this rule also applied to partial overlap. Finally, a document had to report sufficient information to code the effect sizes, in our case the correlation coefficient and the sample size. When the correlation coefficient was not reported, we had to ensure that a reliable conversion to this metric was possible. A PRISMA statement (Moher et al. 2009) documenting the search process is provided in the supplementary material (Appendix B).

The final sample included 134 studies with 140 independent datasets. These studies reported 768 effect sizes with a total sample size of 40,595.

3.2 Coding strategy

The chosen effect size metric for our study was the Pearson product-moment correlation coefficient. In the rare instances (k = 2 studies) in which authors reported another effect size metric, i.e., standardized mean differences, those were transformed using conventional procedures (cf. Borenstein et al. 2009; Lipsey and Wilson 2001). For each study, we coded all information needed for further analyses, i.e., effect sizes and sample sizes, but also the reliabilities, means, and standard deviations of all examined constructs.
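The text does not spell out which conversion formula was applied. A common choice, given in Borenstein et al. (2009), is \(r = d/\sqrt{d^2 + a}\) with \(a = (n_1 + n_2)^2/(n_1 n_2)\), which reduces to \(a = 4\) for equal group sizes. The Python sketch below assumes that formula; the function name `d_to_r` and the fallback to equal groups when \(n_1\) and \(n_2\) are unreported are illustrative choices, not part of the documented procedure.

```python
import math
from typing import Optional


def d_to_r(d: float, n1: Optional[int] = None, n2: Optional[int] = None) -> float:
    """Convert a standardized mean difference d into a correlation r.

    Uses r = d / sqrt(d**2 + a) with a = (n1 + n2)**2 / (n1 * n2),
    which reduces to a = 4 when both groups have the same size.
    """
    if n1 is None or n2 is None:
        a = 4.0  # assumption: treat groups as equal-sized when sizes are not reported
    else:
        a = (n1 + n2) ** 2 / (n1 * n2)
    return d / math.sqrt(d ** 2 + a)


# A moderate effect (d = 0.5) in two groups of 50 respondents each
print(round(d_to_r(0.5, 50, 50), 4))  # 0.2425
```

With unequal groups, \(a\) grows above 4, so the same \(d\) maps to a slightly smaller \(r\).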

In addition, we gathered all potentially relevant study characteristics, including the sector of the target organization, respondent group, study region, publication year, functional scope of the study, peer-review status, level of analysis, as well as age and gender of the study population. For sector, we distinguished between studies conducted in the private sector (e.g., manufacturing or logistics) and those conducted in the public sector (e.g., government or education). If a study gathered data across sectors, it was only classified as private or public if all target organizations operated in the respective sector. Respondent group described whether or not all study respondents had some sort of HR background (HR managers or HR professionals). Study region was coded as Western (North America, Europe, and Australia), Eastern (Middle East and Asia), or African. As discussed above, functional scope was coded as either composite (i.e., spanning multiple HR functions) or single (i.e., focusing on a specific HR function). For peer-review status, we applied a more fine-grained categorization to capture not only the effect of peer review but also that of an outlet’s impact factor on effect sizes. Studies were grouped into three categories: (1) non-peer-reviewed sources, (2) sources from outlets that claim to perform a peer-review procedure but have no impact factor and are not recorded in the 2020 Journal Citation Reports (JCR) (Clarivate Analytics 2020), and (3) sources published in outlets listed in the JCR with a 5-year mean impact factor (cf. Schrag et al. 2011). Following common recommendations, we addressed possible differences between individual- and organizational-level studies by introducing level of analysis as a moderator (Collins et al. 2004; DeGroot et al. 2000; Gully et al. 1995). If effect sizes differed substantially between levels of analysis, separate meta-analyses would be required for each level; otherwise, pooling effect sizes across levels is feasible (Ostroff and Harrison 1999, p. 267). Finally, we recorded the mean age of respondents and coded gender as the percentage of female respondents in a study.

To avoid synthesizing stochastically dependent effect sizes, special attention was required when a study reported multiple effect sizes for the same relationship (Gleser and Olkin 2009). When the independence assumption was tenable, e.g., when a study simply replicated its analysis for samples drawn from multiple firms, all reported effect sizes were included in the meta-analyses. If this assumption was violated (k = 2 studies), we computed an unweighted average of the effect sizes, so that the study contributed only one (summary) effect size for the relationship in question.
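The averaging rule can be sketched as follows. This is a minimal illustration, assuming a hypothetical list of `(study_id, relationship, r)` tuples that have already been judged dependent; deciding whether the independence assumption is tenable remains a manual coding decision and is not automated here.

```python
from collections import defaultdict
from statistics import mean


def collapse_dependent(effects):
    """Average dependent effect sizes so each study contributes one r per relationship.

    `effects` holds (study_id, relationship, r) tuples already judged dependent.
    Independent subsamples (e.g., separate firms) should carry distinct study_ids
    beforehand so that they are kept apart rather than averaged.
    """
    groups = defaultdict(list)
    for study_id, relationship, r in effects:
        groups[(study_id, relationship)].append(r)
    # Unweighted mean of the dependent estimates, as described in the text
    return {key: mean(values) for key, values in groups.items()}


effects = [
    ("S1", ("PE", "BI"), 0.40),
    ("S1", ("PE", "BI"), 0.50),  # second, dependent estimate from the same sample
    ("S2", ("PE", "BI"), 0.30),
]
print(collapse_dependent(effects))
```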

Initial coding reliability was established by having three coders code a randomly chosen 10% subset of all included studies. Intercoder reliability was calculated for all information and characteristics listed above. Reference details such as author, title, and publication year were excluded from the calculation because perfect agreement is expected for these characteristics. For effect sizes, agreement required coders (1) to map the primary study constructs to the same meta-analytic constructs and (2) to report the same reliabilities for both constructs (if available). The agreement rate between the three coders was 87.5%, and Fleiss’ kappa was 0.882, suggesting “almost perfect agreement” (J. R. Landis and Koch 1977, p. 165). Disagreements were resolved in a subsequent discussion before the first author coded the remaining documents.
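For readers less familiar with Fleiss’ kappa, the sketch below computes it from an item-by-category count table: per-item agreement is the share of agreeing coder pairs, chance agreement comes from the marginal category proportions, and \(\kappa = (\bar{P} - \bar{P}_e)/(1 - \bar{P}_e)\). The counts in the example are invented for illustration and are not the study’s coding data.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for an item-by-category table of rating counts.

    counts[i][j] is the number of coders who assigned item i to category j;
    every item must have been rated by the same number of coders (at least two).
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_categories = len(counts[0])
    # Observed agreement per item: share of coder pairs that agree
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items
    # Chance agreement from the marginal proportion of each category
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    p_e = sum(p * p for p in p_cat)
    return (p_bar - p_e) / (1 - p_e)


# Four items, three coders, two categories (invented counts)
counts = [[3, 0], [2, 1], [0, 3], [1, 2]]
print(round(fleiss_kappa(counts), 4))  # 0.3333
```

The formula is undefined when all ratings fall into a single category (\(\bar{P}_e = 1\)), so such degenerate tables need separate handling.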

Effect sizes were then corrected for measurement error in both constructs (Hunter and Schmidt 2014). Since studies frequently reported reliabilities for their constructs, this attenuation correction was performed at the level of the individual study. Effect sizes were not corrected if correlations were taken from primary studies that had already corrected for attenuation (e.g., to assess discriminant validity). If no reliability information was reported, we imputed the mean reliability of the respective construct across all studies for this correction (e.g., Giluk 2009; see Footnote 1). We did not correct for other statistical artifacts such as range restriction or dichotomization of continuous variables, as these were not prevalent in our sample of studies. Since the necessity of reliability corrections is subject to debate in MASEM (Michel et al. 2011), we report results for both uncorrected and reliability-corrected correlations.
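The correction described above amounts to \(r_c = r_{xy}/\sqrt{r_{xx}\,r_{yy}}\) (Hunter and Schmidt 2014), with a construct’s mean reported reliability substituted when a study omits it. The Python sketch below illustrates this logic under those assumptions; the function names and the `already_corrected` flag are hypothetical.

```python
import math
from statistics import mean
from typing import Optional, Sequence


def mean_reliability(reported: Sequence[Optional[float]]) -> float:
    """Mean of the reliabilities actually reported for one construct across studies."""
    return mean(a for a in reported if a is not None)


def disattenuate(r: float, rel_x: Optional[float], rel_y: Optional[float],
                 mean_rel_x: float, mean_rel_y: float,
                 already_corrected: bool = False) -> float:
    """Correct r for measurement error in both constructs: r / sqrt(rxx * ryy).

    Missing reliabilities (None) are replaced by the construct's mean reliability;
    correlations that a primary study already disattenuated are returned unchanged.
    """
    if already_corrected:
        return r
    rxx = rel_x if rel_x is not None else mean_rel_x
    ryy = rel_y if rel_y is not None else mean_rel_y
    return r / math.sqrt(rxx * ryy)


# Hypothetical values: one study reports both reliabilities, another reports none
mean_pe = mean_reliability([0.85, None, 0.75])
print(disattenuate(0.40, 0.81, 0.64, mean_pe, 0.80))
print(disattenuate(0.40, None, None, mean_pe, 0.80))
```

Running the same inputs with and without the correction gives the two sets of correlations (uncorrected and corrected) reported in parallel.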

3.3 Meta-analytic structural equation modelling (MASEM)

The essential idea behind MASEM has been summarized as follows (Cheung 2015a): In a first step, correlation matrices from primary studies are combined to form a pooled correlation matrix. In the second step, hypothesized structural equation models are tested using this pooled matrix as input. Earlier MASEM studies, in particular in management research, have used a univariate approach (R. S. Landis 2013; Viswesvaran and Ones 1995). Here, correlations from primary studies are usually synthesized via pairwise aggregation, i.e., by performing a separate meta-analysis for each bivariate relationship. The resulting meta-analytically populated correlation matrix is then used as input for a structural equation model. An essential drawback of the univariate approach is that pairwise aggregation does not account for possible dependencies between the pairs of correlations, i.e., the elements of the matrix (Cheung 2015a; Riley 2009). Multivariate approaches such as two-stage structural equation modelling (TSSEM, Cheung and Chan 2005) are therefore preferred, as they account for these dependencies by estimating the pooled correlation matrix with a multivariate random-effects meta-analysis (Cheung 2013). In the present study, we thus applied TSSEM. To test the stability of the results, we also applied the univariate approach to MASEM and compared its results with those from TSSEM. Given our broad definition of digital HRM usage, we expected substantial between-study heterogeneity and intended to draw unconditional conclusions about a universe of similar but not identical studies. All results were therefore obtained using a random-effects approach to meta-analysis (Hedges and Vevea 1998).

3.3.1 Stage 1—Meta-analyses

Following the TSSEM approach, we synthesized the correlation matrices (one per independent sample) using a random-effects multivariate meta-analysis with maximum likelihood (ML) estimation of between-study heterogeneity (Cheung 2015a; Hardy and Thompson 1996). Besides the pooled estimates, we report an estimate of the amount of heterogeneity (\(\hat{\tau}^2\), the estimated variance of the true correlations) and the I2 statistic (Higgins et al. 2003) for each bivariate relationship. Unlike traditional meta-analyses, TSSEM approaches commonly use “raw” correlations instead of Fisher z scores (Fisher 1915) obtained through the associated variance-stabilizing transformation (e.g., Dwivedi et al. 2019; Joseph et al. 2007; Sabherwal et al. 2006). The resulting 21 pooled effect size estimates were then used to populate the pooled correlation matrix.
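For a single bivariate relationship, the heterogeneity quantities reported here can be illustrated with a univariate sketch. This is not the study’s estimator: the code swaps in the simpler DerSimonian-Laird moment estimator in place of the multivariate ML estimation of TSSEM, approximates the sampling variance of a raw correlation as (1 - r^2)^2 / (n - 1), and computes I2 with the Q-based formula (Q - df) / Q, which can differ from model-based I2 values. The function name and input values are hypothetical.

```python
def random_effects_dl(rs, ns):
    """Univariate random-effects meta-analysis of raw correlations using the
    DerSimonian-Laird estimator. Returns (pooled r, tau^2, I^2)."""
    # Approximate sampling variance of a raw correlation
    variances = [(1 - r * r) ** 2 / (n - 1) for r, n in zip(rs, ns)]
    w = [1 / v for v in variances]
    sum_w = sum(w)
    r_fixed = sum(wi * ri for wi, ri in zip(w, rs)) / sum_w

    # Cochran's Q and its degrees of freedom
    q = sum(wi * (ri - r_fixed) ** 2 for wi, ri in zip(w, rs))
    df = len(rs) - 1

    # Moment estimator of the between-study variance, truncated at zero
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # I^2: share of total variability attributable to heterogeneity
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # Random-effects weights add tau^2 to each sampling variance
    w_re = [1 / (v + tau2) for v in variances]
    r_pooled = sum(wi * ri for wi, ri in zip(w_re, rs)) / sum(w_re)
    return r_pooled, tau2, i2


# Hypothetical correlations and sample sizes for one bivariate relationship
r_pooled, tau2, i2 = random_effects_dl([0.20, 0.60, 0.40, 0.70], [200, 150, 300, 120])
print(f"r = {r_pooled:.3f}, tau^2 = {tau2:.3f}, I^2 = {100 * i2:.1f}%")
```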

3.3.2 Stage 2—Structural equation modelling (SEM)

The results obtained in stage 1 were then used for the SEM analysis. The pooled correlation matrix, together with its estimated asymptotic sampling covariance matrix, was used as input for the stage 2 analysis (Cheung 2014). These matrices are treated as observed matrices, and the approach uses weighted least squares (WLS) estimation, i.e., it weights the elements of the pooled correlation matrix by their precision when fitting models, so that goodness-of-fit indices can be calculated. Note that this weighting makes the choice of an appropriate sample size (e.g., the harmonic mean) unnecessary (Cheung 2014). In our case, as for most MASEM studies in the social sciences, no correlations at the item level were available. Thus, all constructs were treated as observed variables in this modelling stage, so that we performed, strictly speaking, a path analysis. To evaluate model fit, we report χ2 test statistics and common fit indices, including CFI, RMSEA, and SRMR, and consult the commonly associated thresholds. Following earlier MASEM studies in IS research, we then inspected critical ratios (CRs) to identify non-significant path estimates (Dwivedi et al. 2019, 2021; Otto et al. 2020; Sabherwal et al. 2006).
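To make “path analysis on a pooled correlation matrix” concrete: for one endogenous construct, standardized path coefficients solve R_xx β = r_xy, and the explained variance is R² = Σ β_j r_jy. The sketch below uses ordinary (unweighted) normal equations with a small hand-written solver so that it needs only the standard library; it does not reproduce the WLS estimator of TSSEM or its fit statistics, and the helper names and correlations are hypothetical.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def standardized_paths(corr, names, outcome, predictors):
    """Standardized coefficients and R^2 for `outcome` regressed on
    `predictors`, computed from a correlation matrix alone."""
    idx = {name: i for i, name in enumerate(names)}
    rxx = [[corr[idx[p]][idx[q]] for q in predictors] for p in predictors]
    rxy = [corr[idx[p]][idx[outcome]] for p in predictors]
    beta = solve(rxx, rxy)
    r2 = sum(b * r for b, r in zip(beta, rxy))
    return dict(zip(predictors, beta)), r2


# Hypothetical pooled correlations: intention (INT), facilitating conditions (FC), usage (USE)
names = ["INT", "FC", "USE"]
corr = [
    [1.00, 0.40, 0.56],
    [0.40, 1.00, 0.45],
    [0.56, 0.45, 1.00],
]
paths, r2 = standardized_paths(corr, names, "USE", ["INT", "FC"])
print({k: round(v, 3) for k, v in paths.items()}, round(r2, 3))
```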

3.4 Moderator analyses

We assessed moderator effects of study characteristics on effect sizes in two steps. First, we fitted mixed-effects (meta-regression) models for all bivariate relationships to explain at least part of the heterogeneity in true correlations (Raudenbush 2009). As for the meta-analyses, REML estimation was used to estimate the amount of residual heterogeneity in the effect sizes. Additionally, to achieve more accurate control of Type I error rates, we applied the Knapp and Hartung adjustment to the test statistics (Knapp and Hartung 2003; Viechtbauer et al. 2014). Because of the low ratio of studies to covariates and the resulting limited statistical power, we tested each moderator individually instead of including all moderators in one model (Viechtbauer 2007). If multiple tests for a single moderator were statistically significant, we took this as evidence against the hypothesis of homogeneity across all studies.

In the second step, we applied one-stage MASEM (OSMASEM, Jak and Cheung 2019) to assess the influence of each categorical study characteristic by regressing model parameters on moderator variables. OSMASEM can be seen as an extension of TSSEM that allows between-study heterogeneity to be examined at the level of individual (direct) path coefficients in the path model. We assessed the fit of our proposed model and tested moderation for all hypothesized direct paths.

For each moderator, differences were assessed via a likelihood ratio test comparing the base model without the moderator against the model with the moderator. We performed these OSMASEM analyses for all field-specific moderators, i.e., sector, respondent group, region, and functional scope.

3.5 Outliers and publication bias

To assess the possible presence of publication bias, we calculated a fail-safe k value (Orwin 1983; Rosenthal 1979), performed a regression test for funnel plot asymmetry (Egger et al. 1997; Sterne and Egger 2005), and inspected the associated funnel plot for each bivariate relationship. Due to the limitations of Rosenthal’s fail-safe k (Borenstein et al. 2009; Orwin 1983), we calculated each fail-safe k value based on Orwin’s approach with a target effect size of r = 0.1 (Orwin 1983). The value then represents the number of studies (samples) averaging null results that would have to be added to the given set of effect sizes to reduce the unweighted average correlation to this negligible target value.
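Orwin’s fail-safe k follows directly from this definition: with k observed effects averaging r̄, adding N null results (r = 0) gives an unweighted mean of k·r̄ / (k + N), so N = k(r̄ - r_target) / r_target. The minimal sketch below implements this textbook formula, rounding up to whole samples; the function name and the correlations are hypothetical.

```python
import math


def orwin_failsafe_k(effects, target=0.1):
    """Orwin's fail-safe k: number of additional samples with a null result
    (r = 0) needed to pull the unweighted mean correlation down to `target`."""
    k = len(effects)
    mean_r = sum(effects) / k
    if mean_r <= target:
        return 0
    # k * (mean - target) / target, rounded up; the tiny offset guards against
    # float noise turning an exact integer into the next integer
    return math.ceil(k * (mean_r - target) / target - 1e-9)


# Hypothetical correlations for one relationship
print(orwin_failsafe_k([0.25, 0.35, 0.45]))  # 8
```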

To identify potential outliers, we consulted several influence diagnostics based on leave-one-out analysis, i.e., repeatedly fitting the model while leaving out one study at a time. This shows how the overall result and the heterogeneity change when each study is omitted. The different diagnostic measures are compared against common cut-offs to determine whether a study is an outlier candidate (Viechtbauer and Cheung 2010). We report results with and without outliers.

Analyses of publication bias and outliers were conducted at the level of each univariate meta-analysis, using the variance-stabilizing Fisher z transformation of the correlations (with pooled estimates back-transformed to the correlation scale).
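The sketch below combines the two ingredients named above: the Fisher z transformation with back-transformation, and a leave-one-out loop. For brevity it pools with fixed-effect inverse-variance weights (var(z) = 1 / (n - 3)); the influence diagnostics used in the study (Viechtbauer and Cheung 2010) are computed on random-effects models and include further measures, so this only illustrates the leave-one-out logic. The function names and data are hypothetical.

```python
import math


def pooled_r(rs, ns):
    """Fixed-effect pooled correlation: average Fisher z values with weights
    n - 3 (the inverse of var(z)), then back-transform to the r scale."""
    weights = [n - 3 for n in ns]
    z_bar = sum(w * math.atanh(r) for w, r in zip(weights, rs)) / sum(weights)
    return math.tanh(z_bar)


def leave_one_out(rs, ns):
    """Pooled correlation recomputed with each sample omitted in turn."""
    return [
        pooled_r(rs[:i] + rs[i + 1:], ns[:i] + ns[i + 1:])
        for i in range(len(rs))
    ]


rs = [0.40, 0.42, 0.38, 0.85]  # hypothetical; the last sample looks extreme
ns = [200, 180, 220, 150]
full = pooled_r(rs, ns)
for i, r_without in enumerate(leave_one_out(rs, ns)):
    print(f"without sample {i}: r = {r_without:.3f} (shift {r_without - full:+.3f})")
```

The sample whose omission shifts the pooled estimate most (here the last one) is the natural outlier candidate to inspect further.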

3.6 Robustness checks

To test the robustness of our decisions, we replicated our analysis in various scenarios. First, to validate our choice of MASEM approach, we applied a univariate approach in addition to TSSEM and compared the results. Second, to validate our choice of using corrected correlations, we replicated the TSSEM analysis using uncorrected correlations. In addition, we calculated a covariance matrix from our stage 1 correlation matrix and used it as input for the stage 2 analysis. The covariance matrix was calculated from the pooled correlation matrix using the pooled standard deviation of each construct (Cheung and Chan 2009; Dwivedi et al. 2019). These pooled values were calculated by averaging all standard deviations reported in primary studies. Where scales deviated from the standard measurement scale (5-point Likert scale), the standard deviations were transformed accordingly. Third, we replicated the analysis with outlying effect sizes removed. Finally, we replicated the analysis with grey literature excluded. Besides lending credibility to our chosen methods, these calculations also highlight opportunities that the MASEM toolset offers future studies in IS literature.
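A minimal sketch of the correlation-to-covariance step follows (cov_ij = r_ij · sd_i · sd_j). The exact transformation applied to non-5-point scales is not specified above, so the code assumes a linear rescaling of the scale range (an SD on a 7-point scale is multiplied by 4/6) and an unweighted average of SDs; the helper names and values are hypothetical.

```python
from statistics import mean


def rescale_sd(sd, points, target_points=5):
    """Rescale a standard deviation from a `points`-point scale to a
    `target_points`-point scale, ASSUMING a linear mapping of the scale range
    (1..points onto 1..target_points)."""
    return sd * (target_points - 1) / (points - 1)


def cor_to_cov(corr, sds):
    """Covariance matrix from a correlation matrix and standard deviations:
    cov_ij = r_ij * sd_i * sd_j."""
    k = len(sds)
    return [[corr[i][j] * sds[i] * sds[j] for j in range(k)] for i in range(k)]


# Hypothetical reported SDs per construct: (SD, number of scale points)
reported = {
    "PE": [(0.90, 5), (1.50, 7)],
    "INT": [(0.80, 5), (1.00, 5)],
}
# Unweighted average of the (rescaled) SDs, as described in the text
pooled_sds = {
    name: mean(rescale_sd(sd, pts) for sd, pts in values)
    for name, values in reported.items()
}
pooled_corr = [[1.0, 0.5], [0.5, 1.0]]
cov = cor_to_cov(pooled_corr, [pooled_sds["PE"], pooled_sds["INT"]])
print(cov)
```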

All analyses were conducted in R (version 4.2.2, R Core Team 2021). The TSSEM approach and the moderator analyses via OSMASEM were implemented using metaSEM (version 1.3.0, Cheung 2015b). Meta-regressions, tests for publication bias, and outlier analyses were performed using the metafor package (version 3.8-1, Viechtbauer 2010). The path analysis for the univariate approach in the robustness checks was conducted using lavaan (version 0.6-13, Rosseel 2012).

4 Results

4.1 Descriptive statistics

The included studies were conducted between 1997 and 2021. The mean age of respondents was 32.87 years. On average, samples comprised 45.16% female respondents. In 25.71% of studies, respondents had some kind of HR background. Of the samples, 60.71% were collected in Eastern, 26.43% in Western, and 8.57% in African countries. More samples were collected from firms in the private sector (44.29%) than from organizations in the public sector (30.71%). For the remaining studies, the sector was either not specified or data collection spanned multiple sectors. The outlets were mostly journals (77.14%), followed by conference proceedings (11.43%). Two thirds (66.43%) of documents were peer-reviewed. The level of analysis was the individual in 80.60% and the organization in the remaining 19.40% of the studies. Slightly fewer than half of the contributions (45.55%) investigated implementations affecting multiple HR functions, while the rest (54.45%) focused on a single HR function.

4.2 Stage 1 results

Stage 1 results for all 21 bivariate relationships between the 7 UTAUT constructs are summarized in Table 3. Besides the pooled correlation estimate used to populate the pooled correlation matrix, we show the number of effect sizes and the cumulative sample size for each relationship. Moreover, we provide statistics to assess the presence of between-study heterogeneity and fail-safe k values for each meta-analysis. Pooled correlation estimates were generally moderate, ranging from 0.354 to 0.626. The largest pooled correlations were found between intention and attitude (r = 0.626), intention and usage (r = 0.562), and intention and performance expectancy (r = 0.555). The number of effect sizes used to calculate the pooled estimate varied greatly between cells, ranging from 6 to 94. Without exception, correlations for all relationships were highly heterogeneous, as indicated by high τ2 estimates [0.010–0.048] and I2 statistics [81.96–95.37%]. This substantial variation in effect sizes across studies suggests the presence of study-level moderators.

Fail-safe k values based on Orwin’s approach were high, ranging from 20 to 503, suggesting that a substantial number of additional unpublished studies reporting null effects would be needed to render a hypothetical pooled correlation negligible (r < 0.1). Egger’s regression test indicated funnel plot asymmetry for only three relationships, which prompted a detailed inspection of the associated funnel plots. Note that regression tests do not test for publication bias directly; they test for funnel plot asymmetry, for which publication bias is only one of many possible reasons. In almost all asymmetric funnel plots, we noticed a pattern of missing studies in the lower right area, i.e., missing studies with large reported effect sizes and larger standard errors. This pattern makes publication bias an unlikely cause of the asymmetry, because it is improbable that studies finding large (“good”) effects would have remained unpublished, even with small sample sizes (Sterne et al. 2011). Influential case diagnostics indicated outliers for three relationships (one effect size each for EE-SI and SI-ATT, and two effect sizes for ATT-USE). Removing these outlying effect sizes did not change any conclusion (see Footnote 2). Stage 2 results are reported with outliers included.
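For reference, the classical formulation of Egger’s test regresses the standard normal deviate of each effect (effect / SE) on its precision (1 / SE) by ordinary least squares; an intercept far from zero, judged against a t distribution with k - 2 degrees of freedom, indicates funnel plot asymmetry. The sketch below implements only that classical version. metafor’s regtest, used in the study, by default fits a related but different weighted meta-regression with the standard error as predictor. The function name and data are hypothetical.

```python
import math


def egger_test(effects, ses):
    """Classical Egger regression test (Egger et al. 1997): OLS regression of
    the standard normal deviate (effect / SE) on precision (1 / SE).
    Returns the intercept, its t statistic, and the degrees of freedom."""
    y = [e / s for e, s in zip(effects, ses)]
    x = [1 / s for s in ses]
    k = len(y)
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    s2 = sum(r * r for r in residuals) / (k - 2)  # residual variance
    se_intercept = math.sqrt(s2 * (1 / k + x_bar ** 2 / sxx))
    return intercept, intercept / se_intercept, k - 2


# Hypothetical data with a built-in small-study effect: effects grow with SE
ses = [0.05, 0.10, 0.15, 0.20, 0.25]
noise = [0.01, -0.01, 0.01, -0.01, 0.01]
effects = [0.30 + 0.8 * s + d for s, d in zip(ses, noise)]
b0, t, df = egger_test(effects, ses)
print(f"intercept = {b0:.3f}, t({df}) = {t:.2f}")
```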

4.3 Stage 2 results

After transforming the pooled correlation matrix obtained in stage one to a pooled covariance matrix, we used this data as input for our path analysis. We tested both the original UTAUT model without the attitude construct and our proposed model with attitude as an additional mediator construct. The results are depicted in Table 4.

Model fit for the original UTAUT model was good (χ2(4) = 5.520, p = 0.24, CFI = 0.99, RMSEA = 0.003, SRMR = 0.021), while the fit for our proposed model was excellent (χ2(5) = 3.844, p = 0.57, CFI = 1.00, RMSEA = 0.000, SRMR = 0.016). Following our approach of model modification, we eliminated the non-significant path between effort expectancy and intention based on its CR (β = 0.052, p > 0.05). For this emergent model, all path estimates were significant and the model showed excellent fit (χ2(6) = 4.868, p = 0.56, CFI = 1.00, RMSEA = 0.000, SRMR = 0.017). Since its fit is equivalent to that of the hypothesized model, we prefer this more parsimonious model (more degrees of freedom), which also explains more variance in intention. The emergent model is visualized in Fig. 2 and explains about 40.8% of the variance in attitude, 51.3% in intention, and 40.9% in usage.

4.4 Moderator analyses

The results of the meta-regressions for all coded study characteristics across all bivariate relationships are summarized in Table 5 as follows: for each individual significant test, we list the name of the associated study characteristic in the corresponding cell of the matrix. For example, since the meta-regression for study sector was significant at the 5% level for the relationship between effort expectancy and intention, the identifier (sector) and the associated p-value of the F-statistic (p = 0.009) are listed in the corresponding cell of Table 5. Significant tests for categorical moderators are listed in the cells below the diagonal, while significant tests for continuous moderators are listed in the cells above the diagonal. We obtained the highest number of significant tests for study sector and gender (4), followed by year (3). Peer-review status (1) and level of analysis (2) had a significant influence in only a few relationships. Functional scope, respondent group, and mean age were not significant moderators in any relationship. Notably, we found no bivariate relationship for which more than one moderator test was significant.

These results were largely corroborated by the OSMASEM analyses. Since we regressed all parameters corresponding to the nine direct effects in our emergent model on the moderator variables, the change in degrees of freedom is nine for all likelihood ratio tests. Sector emerged as the only moderator variable that moderated individual parameters in the model. In particular, the coefficients for the path between facilitating conditions and usage (β = 0.298, p = 0.019, stronger for the public sector) and for the path between intentions and usage (β = −0.176, p = 0.017, weaker for the public sector) were moderated by sector. Yet, the likelihood ratio test comparing the models with and without the moderator was not significant (ΔLL(Δdf = 9) = 16.61, p = 0.055). Respondent group (ΔLL(Δdf = 9) = 10.60, p = 0.303), study region (ΔLL(Δdf = 9) = 8.42, p = 0.492), and functional scope (ΔLL(Δdf = 9) = 9.11, p = 0.427) neither showed a significant test statistic nor moderated any individual parameter. All moderated paths are highlighted in Fig. 2.
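The reported likelihood ratio results can be checked against a χ2 distribution with Δdf = 9. The sketch below treats the reported ΔLL values as likelihood ratio χ2 statistics (our reading, which is consistent with the reported p values) and computes upper-tail probabilities from the series expansion of the regularized incomplete gamma function, so it needs only the Python standard library. The function name is hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square distribution with
    `df` degrees of freedom, via the series for the regularized lower
    incomplete gamma function P(df/2, x/2)."""
    if x <= 0:
        return 1.0
    a, h = df / 2.0, x / 2.0
    term = 1.0 / a  # n = 0 term of sum_n h^n / (a (a+1) ... (a+n))
    total = term
    n = 1
    while term > 1e-15 * total:
        term *= h / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(h) - h - math.lgamma(a)) * total
    return min(1.0, max(0.0, 1.0 - lower))


# Reported statistics, read here as likelihood ratio chi-square values (df = 9)
reported = {
    "sector": 16.61,
    "respondent group": 10.60,
    "region": 8.42,
    "functional scope": 9.11,
}
for moderator, stat in reported.items():
    print(f"{moderator}: p = {chi2_sf(stat, 9):.3f}")
```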

Accordingly, we estimated separate pooled correlation matrices for effect sizes collected in private sector and public sector organizations, i.e., we performed a separate random-effects TSSEM analysis for each subgroup (see Footnote 3). In total, the private sector matrix was constructed from 312 effect sizes, while the public sector matrix included only 237 effect sizes. The total sample sizes were 12,686 (private) and 11,050 (public). For the stage 2 analysis, we then fitted our emergent model (without the deleted path) to both pooled subgroup correlation matrices. For the private sector subgroup, the fit was excellent, even better than for our main model with all collected data (χ2(6) = 0.479, p = 0.99, CFI = 1.000, RMSEA = 0.000, SRMR = 0.007). However, the fit of the public sector model was considerably weaker (χ2(6) = 12.90, p < 0.05, CFI = 0.985, RMSEA = 0.010, SRMR = 0.038). This was also reflected in the explained variance of the endogenous constructs: the private sector data explained about 3.1% more variance in attitude, about 11.5% more variance in intention, and about the same variance in usage. We refrained from testing model modifications beyond the UTAUT framework, e.g., eliminating entire constructs from the model, because such alterations would lack theoretical justification.

4.5 Robustness checks (Footnote 4)

First, performing the MASEM with a univariate approach instead of TSSEM, i.e., synthesizing z-transformed correlations from primary studies via pairwise aggregation, yielded comparable back-transformed pooled correlations in the first stage analysis. The pooled correlations ranged from 0.375 to 0.663. Notably, between-study heterogeneity was somewhat higher in this approach, as indicated by τ2 estimates [0.015–0.132] and I2 statistics [76.77–97.31%]. Fitting our emergent model in the second stage analysis, using the harmonic mean of cell sample sizes (Burke and Landis 2003; Colquitt et al. 2000; N = 6588), yielded acceptable fit (χ2(6) = 122.491, p < 0.05, CFI = 0.990, RMSEA = 0.054, SRMR = 0.018). If we perform TSSEM with a covariance matrix instead of the correlation matrix and the harmonic mean as sample size, we obtain very similar results (χ2(6) = 131.698, p < 0.05, CFI = 0.988, RMSEA = 0.056, SRMR = 0.022). Second, recalculating TSSEM with uncorrected instead of reliability-corrected correlations leads to lower pooled correlations and a slightly worse fit of our emergent model (χ2(6) = 11.547, p = 0.07, CFI = 0.995, RMSEA = 0.005, SRMR = 0.024). Third, since only four of the 768 effect sizes emerged as potential outliers, stage 2 results are unaffected (to the second decimal place) by their removal. Finally, re-estimating the model without grey literature left 111 independent samples and yielded almost identical fit (χ2(6) = 4.77, p = 0.57, CFI = 1.00, RMSEA = 0.000, SRMR = 0.018). An analysis of grey literature only was not possible due to the low number of independent samples (k = 29).
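In the univariate approach, the second-stage model needs a single sample size even though each cell of the pooled matrix rests on a different cumulative N; the harmonic mean of the cell sample sizes is a common choice (Burke and Landis 2003; Colquitt et al. 2000). A minimal sketch with hypothetical cell sizes shows how the harmonic mean is pulled toward the smaller cells, which keeps the implied precision conservative compared with the arithmetic mean:

```python
from statistics import harmonic_mean, mean

# Hypothetical cumulative sample sizes for the cells of a pooled matrix
cell_ns = [1000, 5000, 20000]

n_harmonic = harmonic_mean(cell_ns)  # 3 / (1/1000 + 1/5000 + 1/20000)
print(round(n_harmonic), round(mean(cell_ns)))  # 2400 8667
```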

5 Discussion

Our study set out to motivate the need for a unifying theoretical basis, with three goals in mind: to test and discuss the validity, benefits, and implications of using UTAUT as such a basis; to examine the need for field-specific adjustments to the theory; and to provide insights regarding generalizability and differences between research contexts. Our contributions to each goal are summarized below.

5.1 Validating a common baseline—implications

Our study complements and extends earlier narrative reviews in that it quantifies the strength of associations between digital HRM antecedents. Specifically, we offer empirical support for an adjusted version of UTAUT as a suitable theory to explain digital HRM adoption across settings. With that, we provide a unifying basis that future efforts can expand on. The implications of this finding are intriguing, both from a theoretical and from a practitioner’s point of view.

From a theoretical perspective, we gain several important insights. Overall, the empirical validation of our proposed theory is intended to advance theory in digital HRM research, as it yields (a) a predefined set of relevant constructs, (b) a summary of applicable operationalizations for these constructs, and (c) a collection of testable hypotheses. Accordingly, future digital HRM studies may use UTAUT as a foundation and common baseline that can be extended for various settings. We explicitly do not suggest UTAUT as a one-size-fits-all solution for future evaluations. Rather, we expect upcoming research to adapt and extend this common baseline. Using such a unifying theory will not only contribute to the comparability of future evaluations but will also encourage authors to move away from the original TAM and the explanatory and predictive limitations that come with it. In addition, it may prevent authors from “cherry-picking” the model that best fits their data while neglecting other important constructs, and will help move the field towards more consistent and reproducible research.

From the digital HRM practitioner’s perspective, insights on relevant constructs linked to digital HRM usage can inform adoption decisions and direct possible interventions before, during, and after implementation. Managing user intentions emerges as a necessary requirement for successful adoption. This may be accomplished by thoroughly educating and informing users while simultaneously involving them in all project stages. Here, firms may also direct their interventions towards managing user perceptions, social influences, and other facilitating preconditions. Raising high expectations of performance can be crucial for a successful adoption and continued usage of digital HRM, possibly even more so than for similar innovations in the area of information systems. Designing digital HRM services in a way that clearly conveys their accessibility and utility paves the way for a smooth implementation. Regarding expected effort, our suggestions are in line with earlier IS contributions, i.e., firms are encouraged to align system capabilities with user requirements by pursuing clean and easy-to-use designs (Martín and Herrero 2012; Rana et al. 2015). Moreover, encouraging users to share digital HRM recommendations and best practices, e.g., via virtual communities (Šumak et al. 2010), may shape social influences by presenting digital HRM usage as a generally endorsed and rewarding behavior. In terms of facilitating conditions, a construct that was mainly operationalized using measures of compatibility and perceived resources, it is vital that organizations (1) provide sufficient resources for a successful implementation, (2) clearly convey what they try to achieve through digital HRM, and (3) align digital HRM with the current values and demands of potential users. Beyond these recommendations, practitioners are well advised to continually adapt to changes in user perceptions and intentions during all stages of adoption. Firms should actively seek feedback from users, listen to their concerns, and convey the organizational vision of digital HRM across all hierarchical levels.

5.2 Necessity of field-specific extensions

Second, our analysis supports the notion that easier-to-use, useful, more compatible, and peer-endorsed digital HRM systems and services are associated with more positive attitudes and intentions and, in turn, a greater extent of usage. In addition, we find interrelations between all predictors; for example, systems perceived as easier to use are also expected to perform better, or vice versa. This confirms our proposed set of common predictors to guide future evaluations. In contrast, comparing our proposed model with the original UTAUT model does not conclusively answer the open question of how important attitudes are in explaining digital HRM usage. Results suggest that model fit improves when attitudes are included, especially for uncorrected correlations and covariance inputs, yet the differences are relatively small. Thus, we cannot argue that the attitude construct is critically necessary. Nonetheless, UTAUT (in both the original and our emergent variant) explains digital HRM adoption very well.

5.3 Generalizability across contexts

Last, our study contributes to the debate on whether digital HRM and its antecedents are subject to contextual influences. Among the study characteristics examined, we obtain the most convincing evidence for variations across sectors. This result is in line with earlier digital HRM contributions in that it supports the hypothesis that sectoral differences influence effects (Panayotopoulou et al. 2007; Shilpa and Gopal 2011; Strohmeier and Kabst 2009). The bivariate relationships show that direct associations with digital HRM usage are comparable across sectors to some extent, yet the public sector data do not show good model fit. This may indicate that, while the measured antecedents are adequate, a model other than UTAUT might be more appropriate to explain digital HRM usage in the public sector. Importantly, facilitating conditions emerge as a stronger predictor of usage in the public sector, while usage intentions are more relevant in the private sector. This is plausible because existing resources are usually the limiting factor in public sector organizations: organizational and technical infrastructure needs to be compatible with the innovation, yet infrastructure is rarely upgraded explicitly to facilitate such an innovation. In contrast, in the private sector, intentions, especially those of decision makers and stakeholders, are a critical requirement for business decisions such as a digital HRM implementation. Future research should continue to investigate possible differences between digital HRM adoption in the private and public sectors.

For the other potential moderators, we find no evidence of such an influence. No single study characteristic emerges as an overall influential moderator. This is in itself an important finding, as it leaves open the possibility that perceptions of digital HRM adoption are comparable for various stakeholders in different regions of the world. Thus, our unifying theory may not only offer a common understanding in one particular context but can also serve as a starting point for theory building across various contexts. Authors are still encouraged to explicitly discuss possible variations if their data extend across multiple subgroups. To gain an even more nuanced view of contextual influences, researchers may use our proposed model to evaluate whether the relationships are consistent across different study contexts.

6 Limitations

The findings of our analysis are subject to some limitations. First and foremost, the exclusively cross-sectional nature of the evidence included in our synthesis prohibits any causal inference. Various authors agree that conclusions regarding causality in MASEM, or in meta-analysis more generally, are misplaced unless all included studies have applied experimental designs (Bamberg and Möser 2007; Becker and Schram 1994; Bergh et al. 2016; Landis 2013; Tang and Cheung 2016). Accordingly, we explicitly avoid causal claims. Note that this issue is not limited to our field of research. Neither digital HRM nor general IS research is likely to exchange classic cross-sectional evaluations for experimental designs in the near future. Thus, the inability to argue for causality will remain an inherent limitation of most upcoming syntheses in IS research. Second, like previous IS meta-analyses (Dwivedi et al. 2019; King and He 2006; Sabherwal et al. 2006), our synthesis assumes that a comparison of effect sizes across similar construct definitions and operationalizations is meaningful. We address this issue by providing a concise overview of representative constructs and measures for each umbrella construct. Yet, as motivated before, pooling operationalizations across HR functions within a general usage construct precludes detailed conclusions for different types of digital HRM usage. Finally, the moderator analyses in our study left a substantial amount of heterogeneity in relationships unexplained. Accordingly, research may still need to consider additional moderators to explain variations in relationships linked to digital HRM adoption and use in organizations more comprehensively. To this end, we offer some suggestions for potential research directions.

7 Conclusion

Our study identified and reviewed similarities across common theories, constructs, and measurement items in the field of digital HRM. Informed by this discussion, we developed an adjusted version of UTAUT as a consolidating theoretical framework to synthesize research on digital HRM antecedents. Using a combination of meta-analysis and structural equation modelling, we empirically validated our theory by combining evidence from 134 primary studies. In doing so, we proposed various extensions to complement the meta-analytic toolset in IS research. Our analysis yielded valuable insights for research and practice but also highlighted research opportunities. Our recommendations may help practitioners to prevent failed digital HRM implementations. Both our overview of applied theory and our analysis of empirical evidence suggest that there is still much foundational work to be done before digital HRM can be considered a mature research area. We developed and validated a theory that future research may use as a basis to explain acceptance and use across research settings as well as for specific types of digital HRM. With that, we provide a crucial first step towards tying up the loose ends of theory in digital HRM, thus helping to guide the field towards more consistent and reproducible empirical evaluations.

Data availability

The authors confirm that the data supporting the findings of this study are available within the article’s supplementary materials.

Notes

1. All mean reliabilities are reported in the supplementary material (Appendix E).

2. All test statistics for publication bias and results with and without outliers can be found in the supplementary material (Appendix C).

3. The stage 1 correlation matrices for both subgroups are available in the supplementary material (Appendix C).

4. All statistics described in this section are available in the supplementary material (Appendix D). This includes the stage 1 and stage 2 results of the univariate analysis and the associated path diagram. Note that using the OSMASEM approach without any moderator on the full data necessarily yields exactly the same results as TSSEM.

References

Ajzen I (1991) The theory of planned behavior. Organ Behav Hum Decis Process 50(2):179–211. https://doi.org/10.1016/0749-5978(91)90020-T

Al-Dmour R (2014) An integration model for identifying the determinants of the adoption and implementation level of HRIS applications and Its effectiveness in business organisations in Jordan. Doctoral dissertation, Brunel University

Arksey H, O’Malley L (2005) Scoping studies: towards a methodological framework. Int J Soc Res Methodol 8(1):19–32. https://doi.org/10.1080/1364557032000119616

Bagozzi R (2007) The legacy of the technology acceptance model and a proposal for a paradigm shift. J Assoc Inf Syst 8(4):244–254. https://doi.org/10.17705/1jais.00122

Bamberg S, Möser G (2007) Twenty years after Hines, Hungerford, and Tomera: a new meta-analysis of psycho-social determinants of pro-environmental behaviour. J Environ Psychol 27(1):14–25. https://doi.org/10.1016/j.jenvp.2006.12.002

Becker BJ, Schram CM (1994) Examining explanatory models through research synthesis. The annual meeting of the American Educational Research Association in San Francisco, CA, Mar 27, 1989

Bergh DD, Aguinis H, Heavey C, Ketchen DJ, Boyd BK, Su P, Lau CL, Joo H (2016) Using meta-analytic structural equation modeling to advance strategic management research: Guidelines and an empirical illustration via the strategic leadership-performance relationship. Strateg Manag J 37(3):477–497. https://doi.org/10.1002/smj.2338

Bermúdez-Edo M, Hurtado-Torres N, Aragón-Correa JA (2010) The importance of trusting beliefs linked to the corporate website for diffusion of recruiting-related online innovations. Inf Technol Manag 11(4):177–189. https://doi.org/10.1007/s10799-010-0074-1

Bondarouk T, Ruël HJM (2009) Electronic human resource management: challenges in the digital era. Int J Hum Resour Manag 20(3):505–514. https://doi.org/10.1080/09585190802707235

Bondarouk T, Looise JK, Lempsink B (2009) Framing the implementation of HRM innovation: HR professionals vs line managers in a construction company. Pers Rev 38(5):472–491. https://doi.org/10.1108/00483480910978009

Bondarouk T, Parry E, Furtmueller E (2017) Electronic HRM: four decades of research on adoption and consequences. Int J Hum Resour Manag 28(1):98–131. https://doi.org/10.1080/09585192.2016.1245672

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR (2009) Introduction to meta-analysis. Wiley. https://doi.org/10.1002/9780470743386

Bos-Nehles AC, Meijerink JG (2018) HRM implementation by multiple HRM actors: a social exchange perspective. Int J Hum Resour Manag 29(22):3068–3092. https://doi.org/10.1080/09585192.2018.1443958

Braddy PW, Meade AW, Kroustalis CM (2008) Online recruiting: the effects of organizational familiarity, website usability, and website attractiveness on viewers’ impressions of organizations. Comput Hum Behav 24(6):2992–3001. https://doi.org/10.1016/j.chb.2008.05.005

Burke MJ, Landis RS (2003) Methodological and conceptual challenges in conducting and interpreting meta-analyses. In: Murphy KR (ed) Validity generalization: a critical review. Lawrence Erlbaum, pp 287–309

Burton-Jones A, Gallivan MJ (2007) Toward a deeper understanding of system usage in organizations: a multilevel perspective. MIS Q 31(4):657–679. https://doi.org/10.2307/25148815

Chang HH, Fu CS, Jain HT (2016) Modifying UTAUT and innovation diffusion theory to reveal online shopping behavior: familiarity and perceived risk as mediators. Inf Dev 32(5):1757–1773. https://doi.org/10.1177/0266666915623317

Chen H-J (2010) Linking employees’ e-learning system use to their overall job outcomes: an empirical study based on the IS success model. Comput Educ 55(4):1628–1639. https://doi.org/10.1016/j.compedu.2010.07.005

Cheung MW-L (2013) Multivariate meta-analysis as structural equation models. Struct Equ Model 20(3):429–454

Cheung MW-L (2014) Fixed- and random-effects meta-analytic structural equation modeling: examples and analyses in R. Behav Res Methods 46(1):29–40. https://doi.org/10.3758/s13428-013-0361-y

Cheung MW-L (2015a) Meta-analysis: a structural equation modeling approach. Wiley

Cheung MW-L (2015b) metaSEM: an R package for meta-analysis using structural equation modeling. Front Psychol 5:1521. https://doi.org/10.3389/fpsyg.2014.01521

Cheung MW-L, Chan W (2005) Meta-analytic structural equation modeling: a two-stage approach. Psychol Methods 10(1):40–64. https://doi.org/10.1037/1082-989X.10.1.40

Cheung MW-L, Chan W (2009) A two-stage approach to synthesizing covariance matrices in meta-analytic structural equation modeling. Struct Equ Model 16(1):28–53. https://doi.org/10.1080/10705510802561295

Chong AY-L (2013) Predicting m-commerce adoption determinants: a neural network approach. Expert Syst Appl 40(2):523–530. https://doi.org/10.1016/j.eswa.2012.07.068

Chuttur MY (2009) Overview of the technology acceptance model: origins, developments and future directions. Work Pap Inf Syst 9(37):9–37

Cober RT, Brown DJ, Levy PE, Cober AB, Keeping LM (2003) Organizational web sites: web site content and style as determinants of organizational attraction. Int J Sel Assess 11(2–3):158–169. https://doi.org/10.1111/1468-2389.00239

Collins CJ, Hanges PJ, Locke EA (2004) The relationship of achievement motivation to entrepreneurial behavior: a meta-analysis. Hum Perform 17(1):95–117. https://doi.org/10.1207/S15327043HUP1701_5

Colquitt JA, LePine JA, Noe RA (2000) Toward an integrative theory of training motivation: a meta-analytic path analysis of 20 years of research. J Appl Psychol 85(5):678–707. https://doi.org/10.1037/0021-9010.85.5.678

Cudeck R (1989) Analysis of correlation matrices using covariance structure models. Psychol Bull 105(2):317–327

Cuijpers P, Griffin JW, Furukawa TA (2021) The lack of statistical power of subgroup analyses in meta-analyses: a cautionary note. Epidemiol Psychiatr Sci 30:e78. https://doi.org/10.1017/S2045796021000664

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13(3):319–340. https://doi.org/10.2307/249008

DeGroot T, Kiker DS, Cross TC (2000) A meta-analysis to review organizational outcomes related to charismatic leadership. Can J Admin Sci 17(4):356–372. https://doi.org/10.1111/j.1936-4490.2000.tb00234.x

DeSanctis G (1986) Human resource information systems: a current assessment. MIS Q 10(1):15–27. https://doi.org/10.2307/248875

Durlak JA, Lipsey MW (1991) A practitioner’s guide to meta-analysis. Am J Community Psychol 19(3):291–332. https://doi.org/10.1007/BF00938026

Dwivedi YK, Rana NP, Janssen M, Lal B, Williams MD, Clement M (2017) An empirical validation of a unified model of electronic government adoption (UMEGA). Gov Inf Q 34(2):211–230

Dwivedi YK, Rana NP, Jeyaraj A, Clement M, Williams MD (2019) Re-examining the unified theory of acceptance and use of technology (UTAUT): towards a revised theoretical model. Inf Syst Front 21(3):719–734. https://doi.org/10.1007/s10796-017-9774-y

Dwivedi YK, Rana NP, Tamilmani K, Raman R (2020) A meta-analysis based modified unified theory of acceptance and use of technology (meta-UTAUT): a review of emerging literature. Curr Opin Psychol 36:13–18. https://doi.org/10.1016/j.copsyc.2020.03.008

Dwivedi YK, Ismagilova E, Sarker P, Jeyaraj A, Jadil Y, Hughes L (2021) A meta-analytic structural equation model for understanding social commerce adoption. Inf Syst Front. https://doi.org/10.1007/s10796-021-10172-2

Eckhardt A, Laumer S, Weitzel T (2009) Who influences whom? Analyzing workplace referents’ social influence on IT adoption and non-adoption. J Inf Technol 24(1):11–24. https://doi.org/10.1057/jit.2008.31

Egger M, Smith GD, Phillips AN (1997) Meta-analysis: principles and procedures. BMJ 315(7121):1533–1537. https://doi.org/10.1136/bmj.315.7121.1533

Erdoğmuş N, Esen M (2011) An investigation of the effects of technology readiness on technology acceptance in e-HRM. Proc Soc Behav Sci 24:487–495. https://doi.org/10.1016/j.sbspro.2011.09.131

Fishbein M, Ajzen I (1975) Belief, attitude, intention and behavior: an introduction to theory and research. Addison-Wesley

Fisher RA (1915) Frequency distribution of the values of the correlation coefficient in samples from an indefinitely large population. Biometrika 10(4):507–521. https://doi.org/10.2307/2331838

Florkowski GW, Olivas-Luján MR (2006) The diffusion of human-resource information-technology innovations in US and non-US firms. Pers Rev 35(6):684–710. https://doi.org/10.1108/00483480610702737

Foon YS, Fah BCY (2011) Internet banking adoption in Kuala Lumpur: an application of UTAUT model. Int J Bus Manag 6(4):161

Geetha R (2017) Multi-dimensional perspective of e-HRM: a diagnostic study of select auto-component firms. DHARANA-Bhavan’s Int J Bus 8(2):60–72

Giluk TL (2009) Mindfulness, big five personality, and affect: a meta-analysis. Pers Individ Differ 47(8):805–811. https://doi.org/10.1016/j.paid.2009.06.026

Gleser LJ, Olkin I (2009) Stochastically dependent effect sizes. In: The handbook of research synthesis and meta-analysis, 2nd edn. Russell Sage Foundation, pp 357–376

Goodhue DL, Thompson RL (1995) Task-technology fit and individual performance. MIS Q 19(2):213–236

Guimaraes T, Igbaria M (1997) Client/server system success: exploring the human side. Decis Sci 28(4):851–876

Gully SM, Devine DJ, Whitney DJ (1995) A meta-analysis of cohesion and performance: effects of level of analysis and task interdependence. Small Group Res 26(4):497–520. https://doi.org/10.1177/1046496495264003

Hardy R, Thompson S (1996) A likelihood approach to meta-analysis with random effects. Stat Med 15(6):619–629

Hedges LV, Vevea JL (1998) Fixed- and random-effects models in meta-analysis. Psychol Methods 3(4):486–504. https://doi.org/10.1037/1082-989X.3.4.486

Higgins JP, Thompson SG, Deeks JJ, Altman DG (2003) Measuring inconsistency in meta-analyses. BMJ 327(7414):557–560. https://doi.org/10.1136/bmj.327.7414.557

Huang K-Y, Chuang Y-R (2016) A task–technology fit view of job search website impact on performance effects: an empirical analysis from Taiwan. Cogent Bus Manag 3(1):18. https://doi.org/10.1080/23311975.2016.1253943

Huang K-Y, Chuang Y-R (2017) Aggregated model of TTF with UTAUT2 in an employment website context. J Data Sci 15(2):187–204. https://doi.org/10.6339/JDS.201704_15(2).0001

Hunter JE, Schmidt FL (2014) Methods of meta-analysis: correcting error and bias in research findings, 3rd edn. SAGE

Huseynov F, Özkan Yıldırım S (2019) Online consumer typologies and their shopping behaviors in B2C E-commerce platforms. SAGE Open 9(2):2158244019854639. https://doi.org/10.1177/2158244019854639

Iyer S, Pani AK, Gurunathan L (2020) User adoption of eHRM—an empirical investigation of individual adoption factors using technology acceptance model. In: International working conference on transfer and diffusion of IT, pp 231–248

Jak S, Cheung MW-L (2019) Meta-analytic structural equation modeling with moderating effects on SEM parameters. Psychol Methods 25(4):430–455. https://doi.org/10.1037/met0000245

Jalali S, Wohlin C (2012) Systematic literature studies: Database searches versus backward snowballing. In: Proceedings of the ACM-IEEE international symposium on empirical software engineering and measurement-ESEM ’12, p 29. https://doi.org/10.1145/2372251.2372257

Jan P-T, Lu H-P, Chou T-C (2012) The adoption of e-learning: an institutional theory perspective. Turkish Online J Educ Technol 11(3):326–343

Jeyaraj A, Dwivedi YK (2020) Meta-analysis in information systems research: review and recommendations. Int J Inf Manag 55:102226. https://doi.org/10.1016/j.ijinfomgt.2020.102226

Joseph D, Ng K-Y, Koh C, Ang S (2007) Turnover of information technology professionals: a narrative review, meta-analytic structural equation modeling, and model development. MIS Q 31(3):547–577. https://doi.org/10.2307/25148807

Kassim NM, Ramayah T, Kurnia S (2012) Antecedents and outcomes of human resource information system (HRIS) use. Int J Product Perform Manag 61(6):603–623. https://doi.org/10.1108/17410401211249184

Kim YJ, Chun JU, Song J (2009) Investigating the role of attitude in technology acceptance from an attitude strength perspective. Int J Inf Manag 29(1):67–77

King WR, He J (2006) A meta-analysis of the technology acceptance model. Inf Manag 43(6):740–755. https://doi.org/10.1016/j.im.2006.05.003

Knapp G, Hartung J (2003) Improved tests for a random effects meta-regression with a single covariate. Stat Med 22(17):2693–2710. https://doi.org/10.1002/sim.1482

Konradt U, Christophersen T, Schaeffer-Kuelz U (2006) Predicting user satisfaction, strain and system usage of employee self-services. Int J Hum Comput Stud 64(11):1141–1153. https://doi.org/10.1016/j.ijhcs.2006.07.001

Landis RS (2013) Successfully combining meta-analysis and structural equation modeling: recommendations and strategies. J Bus Psychol 28(3):251–261. https://doi.org/10.1007/s10869-013-9285-x

Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33(1):159–174. https://doi.org/10.2307/2529310

Larkin J (2017) HR digital disruption: the biggest wave of transformation in decades. Strategic HR Rev 16:55–59

Laumer S, Eckhardt A, Trunk N (2010) Do as your parents say? Analyzing IT adoption influencing factors for full and under age applicants. Inf Syst Front 12(2):169–183. https://doi.org/10.1007/s10796-008-9136-x

Legris P, Ingham J, Collerette P (2003) Why do people use information technology? A critical review of the technology acceptance model. Inf Manag 40(3):191–204. https://doi.org/10.1016/S0378-7206(01)00143-4

Lengnick-Hall ML, Moritz S (2003) The impact of e-HR on the human resource management function. J Labor Res 24(3):365–379. https://doi.org/10.1007/s12122-003-1001-6

Lepak DP, Snell SA (1998) Virtual HR: strategic human resource management in the 21st century. Hum Resour Manag Rev 8(3):215–234. https://doi.org/10.1016/S1053-4822(98)90003-1

Lin H-F (2010) Applicability of the extended theory of planned behavior in predicting job seeker intentions to use job-search websites. Int J Sel Assess 18(1):64–74. https://doi.org/10.1111/j.1468-2389.2010.00489.x

Lippert SK, Forman H (2006) A supply chain study of technology trust and antecedents to technology internalization consequences. Int J Phys Distrib Logist Manag 36(4):271–288. https://doi.org/10.1108/09600030610672046

Lipsey MW, Wilson DB (2001) Practical meta-analysis. Sage Publications

Luor T, Hu C, Lu H-P (2009) ‘Mind the gap’: an empirical study of the gap between intention and actual usage of corporate e-learning programmes in the financial industry. Br J Educ Technol 40(4):713–732. https://doi.org/10.1111/j.1467-8535.2008.00853.x

Maier C, Laumer S, Eckhardt A, Weitzel T (2013) Analyzing the impact of HRIS implementations on HR personnel’s job satisfaction and turnover intention. J Strateg Inf Syst 22(3):193–207. https://doi.org/10.1016/j.jsis.2012.09.001

Marler JH, Dulebohn JH (2005) A model of employee self-service technology acceptance. In: Research in personnel and human resources management, vol 24. Emerald, pp 137–180. https://doi.org/10.1016/S0742-7301(05)24004-5

Marler JH, Liang X, Dulebohn JH (2006) Training and effective employee information technology use. J Manag 32(5):721–743. https://doi.org/10.1177/0149206306292388

Marler JH, Fisher SL, Ke W (2009) Employee self-service technology acceptance: a comparison of pre-implementation and post-implementation relationships. Pers Psychol 62(2):327–358. https://doi.org/10.1111/j.1744-6570.2009.01140.x

Martin G, Reddington M (2010) Theorizing the links between e-HR and strategic HRM: a model, case illustration and reflections. Int J Hum Resour Manag 21(10):1553–1574. https://doi.org/10.1080/09585192.2010.500483

Martín HS, Herrero Á (2012) Influence of the user’s psychological factors on the online purchase intention in rural tourism: integrating innovativeness to the UTAUT framework. Tour Manag 33(2):341–350. https://doi.org/10.1016/j.tourman.2011.04.003