Abstract

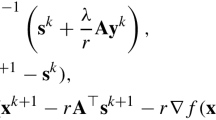

In this paper, the problem of minimizing a function f(x) subject to a constraint φ(x) = 0 is considered. Here, f is a scalar, x an n-vector, and φ a q-vector, with q < n. The use of the augmented penalty function is explored in connection with the ordinary gradient algorithm. The augmented penalty function W(x, λ, k) is defined to be the linear combination of the augmented function F(x, λ) and the constraint error P(x), where the q-vector λ is the Lagrange multiplier and the scalar k is the penalty constant.
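For orientation, the three functions named above can be written out explicitly. The abstract does not give their forms, so the block below assumes the customary definitions (augmented function as the Lagrangian, constraint error as the squared norm of φ); the full paper should be consulted for the exact expressions.

```latex
% Assumed forms; not stated in the abstract itself.
\begin{align}
  F(x,\lambda)   &= f(x) + \lambda^{T}\varphi(x), \\
  P(x)           &= \varphi^{T}(x)\,\varphi(x), \\
  W(x,\lambda,k) &= F(x,\lambda) + k\,P(x)
                  = f(x) + \lambda^{T}\varphi(x) + k\,\varphi^{T}(x)\,\varphi(x).
\end{align}
```

Under these assumptions, W reduces to F whenever the constraint is satisfied, since P(x) = 0 there.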

The ordinary gradient algorithm is constructed in such a way that the following properties are satisfied in toto or in part: (a) descent property on the augmented penalty function, (b) descent property on the augmented function, (c) descent property on the constraint error, (d) constraint satisfaction on the average, or (e) individual constraint satisfaction. Properties (d) and (e) are employed to first order only.

With the above considerations in mind, two classes of algorithms are developed. For algorithms of Class I, the multiplier is determined so that the error in the optimum condition is minimized for given x; for algorithms of Class II, the multiplier is determined so that the constraint is satisfied to first order.
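The Class I multiplier choice can be illustrated with a small sketch. It is not the authors' implementation: it assumes W = f + λᵀφ + k φᵀφ, takes the "error in the optimum condition" to be the squared norm of the gradient of f + λᵀφ (so that λ is a least-squares solution), and uses a fixed stepsize `alpha` in place of any stepsize search. The test problem (minimize x1² + x2² subject to x1 + x2 − 2 = 0) and all function names are hypothetical.

```python
# Sketch only: ordinary gradient steps on an assumed W = f + lam*phi + k*phi^2
# for one scalar constraint (q = 1) in two variables (n = 2).

def f_grad(x):
    # Gradient of the hypothetical objective f(x) = x1^2 + x2^2.
    return [2.0 * x[0], 2.0 * x[1]]

def phi(x):
    # Hypothetical scalar constraint phi(x) = x1 + x2 - 2.
    return x[0] + x[1] - 2.0

def phi_grad(x):
    # Gradient of phi with respect to x.
    return [1.0, 1.0]

def class_one_multiplier(fx, px):
    # Least-squares multiplier: minimizes |fx + lam * px|^2 over lam
    # (assumed meaning of "error in the optimum condition").
    num = sum(p * g for p, g in zip(px, fx))
    den = sum(p * p for p in px)
    return -num / den

def gradient_iteration(x, k=1.0, alpha=0.2, iterations=100):
    # Penalty constant k held fixed; fixed stepsize alpha is an assumption.
    lam = 0.0
    for _ in range(iterations):
        fx, px, c = f_grad(x), phi_grad(x), phi(x)
        lam = class_one_multiplier(fx, px)
        # Gradient of W with respect to x for the current multiplier.
        wx = [g + lam * p + 2.0 * k * c * p for g, p in zip(fx, px)]
        x = [xi - alpha * wi for xi, wi in zip(x, wx)]
    return x, lam

x_final, lam_final = gradient_iteration([2.0, -1.0])
```

For this linear-quadratic test problem the iterates approach x = (1, 1) with multiplier close to −2, which satisfies both the constraint and the stationarity condition of f + λφ. A Class II variant would instead pick the multiplier so that φ plus its first-order change along the step vanishes; that is not shown here.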

Algorithms of Class I have properties (a), (b), (c) and include Algorithms (I-α) and (I-β). In the former algorithm, the penalty constant is held unchanged for all iterations; in the latter, the penalty constant is updated at each iteration so as to ensure satisfaction of property (d).

Algorithms of Class II have properties (a), (c), (e) and include Algorithms (II-α) and (II-β). In the former algorithm, the penalty constant is held unchanged for all iterations; in the latter, the penalty constant is updated at each iteration so as to ensure satisfaction of property (b).

Four numerical examples are presented. They show that algorithms of Class II exhibit faster convergence than algorithms of Class I and that algorithms of type (β) exhibit faster convergence than algorithms of type (α). Therefore, Algorithm (II-β) is the best among those analyzed. This is due to the fact that, in Algorithm (II-β), individual constraint satisfaction is enforced and that descent properties hold for the augmented penalty function, the augmented function, and the constraint error.

Additional information

This research was supported by the National Science Foundation, Grant No. GP-27271. The authors are indebted to Dr. J. N. Damoulakis for analytical and numerical assistance. Discussions with Professor H. Y. Huang are acknowledged.

Cite this article

Miele, A., Cragg, E.E., Iyer, R.R. et al. Use of the augmented penalty function in mathematical programming problems, part 1. J Optim Theory Appl 8, 115–130 (1971). https://doi.org/10.1007/BF00928472

DOI: https://doi.org/10.1007/BF00928472