Abstract

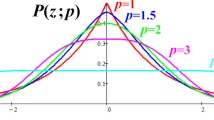

The $\chi^2$ goodness-of-fit test for the hypothesis $H_0: P = P_0$ (a distribution with density $f_0(x)$) uses an $m$-partition $\mathcal{C} = \{C_1, \ldots, C_m\}$ of the sample space and checks the hypothetical class probabilities $P_0(C_1), \ldots, P_0(C_m)$. The asymptotic power of this test is characterized by the noncentrality parameter

$$\delta_{\mathcal{C}}^2(P_1, P_0) = \sum_{i=1}^{m} \frac{\bigl(P_1(C_i) - P_0(C_i)\bigr)^2}{P_0(C_i)},$$

where $P_1$ is a given alternative distribution. In this paper, we show how an optimally efficient partition $\mathcal{C}$, i.e. one with maximum value of $\delta_{\mathcal{C}}^2(P_1, P_0)$, can be obtained for a given number $m$ of classes. In fact, this problem can be embedded into the general framework of maximizing a $\phi$-divergence measure $I_{\mathcal{C}}(P_1, P_0; \phi)$ over all $m$-partitions $\mathcal{C}$ of $\mathbb{R}^p$, where $\phi(\cdot)$ is a convex function on $\mathbb{R}^1$. Our algorithm is an adaptation of the well-known $k$-means clustering technique and uses the support lines of $\phi$. Since $\phi$-divergence measures quite generally characterize the performance of tests for distinguishing between two alternatives $P_0$ and $P_1$ (e.g. in the Neyman-Pearson or a Bayesian framework), the methods given here can be used to obtain partitions with maximum discriminating power for the resulting discretized distributions $P_0(C_i)$, $P_1(C_i)$, $i = 1, \ldots, m$. A series of numerical examples is presented.
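The abstract describes the algorithm only in words, so the following is a minimal Python sketch of one way a $k$-means-style, support-line scheme could look; it is not necessarily the exact procedure of the paper. It assumes the standard Csiszár form $I_{\mathcal{C}}(P_1, P_0; \phi) = \sum_{i=1}^m P_0(C_i)\,\phi\bigl(P_1(C_i)/P_0(C_i)\bigr)$; with $\phi(\lambda) = (\lambda - 1)^2$ each term equals $(P_1(C_i) - P_0(C_i))^2/P_0(C_i)$, so $I_{\mathcal{C}}$ reduces to $\delta_{\mathcal{C}}^2$. For a differentiable convex $\phi$, the sketch alternates two steps: given a partition, each class gets the touching point $t_i = P_1(C_i)/P_0(C_i)$; given the $t_i$, each point $x$ moves to the class whose support line $\phi(t_i) + \phi'(t_i)(\lambda - t_i)$ is highest at the likelihood ratio $\lambda(x) = f_1(x)/f_0(x)$. The one-dimensional grid, the example $P_0 = N(0,1)$ versus $P_1 = N(1,1)$, the equal-mass starting partition, and all function names (`discretize`, `maximize_divergence`, etc.) are hypothetical choices made for illustration.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def discretize(f0, f1, lo=-8.0, hi=8.0, n=1601):
    """Replace P0 and P1 by normalized point masses on a uniform grid (sketch assumption)."""
    xs = [lo + (hi - lo) * j / (n - 1) for j in range(n)]
    w0 = [f0(x) for x in xs]
    w1 = [f1(x) for x in xs]
    s0, s1 = sum(w0), sum(w1)
    return xs, [w / s0 for w in w0], [w / s1 for w in w1]


def class_masses(labels, p0, p1, m):
    """Return the class probabilities P0(C_i) and P1(C_i), i = 0..m-1."""
    q0, q1 = [0.0] * m, [0.0] * m
    for lab, a, b in zip(labels, p0, p1):
        q0[lab] += a
        q1[lab] += b
    return q0, q1


def divergence(labels, p0, p1, m, phi):
    """I_C(P1, P0; phi) = sum_i P0(C_i) * phi(P1(C_i) / P0(C_i)); empty classes add 0."""
    q0, q1 = class_masses(labels, p0, p1, m)
    return sum(a * phi(b / a) for a, b in zip(q0, q1) if a > 0.0)


def chi2_noncentrality(labels, p0, p1, m):
    """delta_C^2 = sum_i (P1(C_i) - P0(C_i))^2 / P0(C_i), as in the abstract."""
    q0, q1 = class_masses(labels, p0, p1, m)
    return sum((b - a) ** 2 / a for a, b in zip(q0, q1) if a > 0.0)


def maximize_divergence(p0, p1, m, phi, dphi, max_iter=200):
    """k-means-style alternation; returns final labels and I_C after each step."""
    lam = [b / a for a, b in zip(p0, p1)]  # likelihood ratio f1/f0 at each grid point
    # Initial partition: points sorted by lambda, cut into m groups of ~equal P0 mass.
    labels, cum = [0] * len(lam), 0.0
    for j in sorted(range(len(lam)), key=lam.__getitem__):
        labels[j] = min(int(cum * m), m - 1)
        cum += p0[j]
    history = [divergence(labels, p0, p1, m, phi)]
    for _ in range(max_iter):
        # Step 1: touching point of each class's support line, t_i = P1(C_i) / P0(C_i).
        q0, q1 = class_masses(labels, p0, p1, m)
        ts = [b / a for a, b in zip(q0, q1) if a > 0.0]  # empty classes are dropped
        # Step 2: move each point to the class whose support line
        # phi(t_i) + phi'(t_i) * (lambda - t_i) is highest at that point's lambda.
        new = [max(range(len(ts)),
                   key=lambda i: phi(ts[i]) + dphi(ts[i]) * (lj - ts[i]))
               for lj in lam]
        history.append(divergence(new, p0, p1, m, phi))
        if new == labels:
            break
        labels = new
    return labels, history


# Example: H0 = N(0, 1) against the alternative N(1, 1), m = 4, chi-square phi.
xs, p0, p1 = discretize(normal_pdf, lambda x: normal_pdf(x, mu=1.0))
labels, history = maximize_divergence(
    p0, p1, 4, phi=lambda t: (t - 1.0) ** 2, dphi=lambda t: 2.0 * (t - 1.0))
cuts = [xs[j + 1] for j in range(len(xs) - 1) if labels[j] != labels[j + 1]]
print("initial I_C:", round(history[0], 4), "final I_C:", round(history[-1], 4))
print("approximate class boundaries in x:", [round(c, 2) for c in cuts])
```

Because $\phi$ lies above each of its support lines, neither step can decrease $I_{\mathcal{C}}$, so the iteration stops after finitely many steps, in general at a locally (not necessarily globally) optimal partition. Since each class collects the $\lambda$-values where one affine function is maximal, the classes are intervals in $\lambda$; for a location alternative such as the one above, $\lambda(x)$ is increasing in $x$, so they are also intervals in $x$.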




Copyright information

© 1992 Springer-Verlag Berlin · Heidelberg

About this paper

Cite this paper

Bock, H.H. (1992). A Clustering Technique for Maximizing φ-Divergence, Noncentrality and Discriminating Power. In: Schader, M. (ed.) Analyzing and Modeling Data and Knowledge. Studies in Classification, Data Analysis, and Knowledge Organization. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-46757-8_3


DOI: https://doi.org/10.1007/978-3-642-46757-8_3

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-54708-2

Online ISBN: 978-3-642-46757-8

eBook Packages: Springer Book Archive