Abstract

The rapid growth of the Chinese consumer market has created a demand for new wearable products that fit Chinese users. The industry requires accurate digital data on Chinese head and facial shapes to develop such products, and 3D head models that express the dimensions of Chinese head anthropometry are of great importance in various product design processes. Previous 3D statistical models were mostly fitted using dimensional frames built from head and facial landmarks, which cannot adequately represent complex facial geometry. This paper proposes a 3D head fitting method for the 5th, 25th, 50th, 75th and 95th percentiles using a progressive scanning algorithm based on differential approximation. The resulting high-precision point cloud data were transformed into mesh models, and the facial details were integrated and improved by 3D facial feature frameworks and artistic digital sculpting. The 3D digital models and 3D printed models constructed for the different percentiles can be used as design-aided tools for physiological comfort optimization in wearable product design and in other ergonomic evaluation procedures.

1 Introduction

Traditional 1D anthropometric data have been the primary source of the information adopted by ergonomists for the dimensioning of head and facial tools [1, 2]. Features such as head-breadth, head-length and head-circumference are commonly utilized during the product lifecycle from product design to ergonomic evaluation procedures [3,4,5]. Although these data are easy to understand and use, they only provide univariate analysis of key dimensions that cannot accurately capture the complex parts of human face shapes. Therefore, traditional data collection methods cannot fulfill the demand of current wearable product design that highly emphasizes the importance of the head and facial structures. For example, the design of virtual reality (VR) headsets requires not only features such as head-circumference, but also information about the head-shapes and complex structures including eyes, noses and ears to perfectly fit the human head and face to provide better user experiences.

The application of 3D scanning technology in product design has contributed to creating more user-friendly products, which provide a better fit, satisfaction, and safety for users. To design headgear for Australian cyclists, a hair thickness offset method was introduced to describe the true head shape more accurately [6]. Some researchers used a machine learning-based method to design customizable 3D respiratory masks [2]. Sizing systems and representative models were generated from the Civilian American and European Surface Anthropometry Resource (CAESAR) database to design various head products [7,8,9]. A framework model was applied to a database of facial motion data for anthropometric measurements related to the design of face masks [10]. Products on the market depend mostly on existing Western human-body datasets (such as CAESAR), and the same products are sold to Chinese users. However, researchers have demonstrated that Chinese head shapes differ significantly from those of other ethnic groups [11,12,13]. It is widely assumed that well-known VR products such as the Oculus Rift and Google Daydream were not designed for the Chinese population: their ergonomic designs do not suit Chinese heads and noses, which leads to a poor immersive experience. Therefore, up-to-date, accurate and usable Chinese head and facial data are key to wearable products designed for the Chinese market.

Market demand in China is currently very large, and the design process needs to consider Chinese anthropometric data to make products more suitable for Chinese consumers. The Chinese national standard of head-and-face dimensions for adults was first published in 1986 and was slightly modified in 2009. However, no head forms were fitted based on these data for product design and testing [14]. Ball et al. [15, 16] created 10 head forms from 2000 subjects in the SizeChina project using 3D scanned data and principal component analysis to design helmets for Chinese people. However, these head forms are primarily intended to describe the head shape only; the low accuracy and resolution of their face-shape fitting cannot express the complicated facial details needed to design head-related products such as masks, goggles, and head-mounted displays. Zhuang et al. [17, 18] collected 2D dimensions and 3D models of 3000 Chinese civilian workers in 2006. After editing, landmarking, and measurement, 5 head models were fitted based on a clustering analysis of 350 3D scans, and the differences in Chinese head shapes over the last 30 years were compared. However, most of these methods did not aim to fit a template or generic model for anthropometric purposes. The models are comparatively simple, and their accuracy and resolution were not specified in those studies. In addition, most of these studies were based on few human scans and did not provide convincing statistical results that account for variation within the Chinese population.

The 3D anthropometric data describe the complete shape characteristics of the head surface but are complicated to interpret due to the abundance of information they contain. Compared to traditional measurements, fitting heads obtained by scan models can provide more comprehensive morphological diversity in a target population for designers. Such fitting heads would facilitate the optimal design of products and improve the development of related standards and protocols for better ergonomic evaluations.

Chinese national standard GB/T 12985-91 [19] stipulates the general rules for using percentiles of body dimensions in the product design process. A given percentile of a specific dimension is the value below which that percentage of the measurements for a certain population fall. In the industrial design process, various products require different percentiles of human body dimensions as upper and lower limits. Therefore, to facilitate wearable products oriented to the Chinese population, this research adopted a dataset of 1900 Chinese head models, extracted 33 head and facial feature points from each model, and then built 3D head frameworks from different percentiles of these features. Furthermore, high-precision 3D head fitting was accomplished for each percentile by a progressive scanning algorithm integrated with these head frameworks. Finally, artistic digital sculpting was performed to add facial detail to each 3D model and improve its appearance (refer to Fig. 1).

2 Methods

2.1 Data Collection

The 3D head dataset adopted in this research was from the anthropometric survey accomplished by Hunan University in 2017. The major goal of that project was to construct a scalable, interactive and minable repository of high-resolution Chinese facial data available to designers and researchers. As such, 1900 male and female adult subjects between the ages of 18 and 45 were surveyed from 7 representative cities in China, including Harbin, Beijing, Xi’an, Chengdu, Guangzhou, Hangzhou and Changsha.

During the survey process, participants filled out a questionnaire that recorded basic information including age, gender, family background, and the location where they grew up. Traditional anthropometric measurements were also recorded, which included height, weight and 3 head measurements. A wig cap was fitted to improve scan quality in the hair region during the 3D scanning. Fourteen landmarks were manually marked directly on each participant’s face to identify the critical bone structures [15]. Participants were scanned using the Artec Eva™ 3D scanner, and high-resolution data were automatically captured as point cloud data.

2.2 Data Processing

In total, 1900 raw scans were collected during the scanning. The scans required extensive post-processing before they were suitable for use in the development of design tools. Each scan data file was processed according to the steps shown in Fig. 2, which include (a) data alignment, (b) irrelevant features removal, (c) global registration, (d) sharp fusion, (e) feature smoothing, and (f) texture mapping.

During the data processing procedure, 30 models were abandoned due to irreparable damage such as missing textures, unfixable holes, closed eye(s), or a disordered model. Finally, a total of 1870 head models were adopted for the 3D head fitting. Given all the 3D head models, \(S\) stands for the set of head and facial surfaces, and \(H_{S}\) denotes the set of all points on a specific surface. This gives us \( H_{S} \subset S \). The elements in \(H_{S}\) can be represented as \( P_{Si} = \left\{ \left( X_{Si}, Y_{Si}, Z_{Si} \right) \mid i = 1, 2, \cdots, N_{P} \right\} \).

Data Alignment.

In the 3D scanning process, different scan angles, offsets or rotations of participants cause the coordinates to deviate among models. The statistical analysis in this research was based on the coordinates of all feature points extracted from each scan to finish the 3D head and facial fitting. This requires the feature extraction to be performed within a common spatial coordinate system, which was established by data alignment. First, the Frankfurt plane was defined according to the coordinates of 3 reference points on each head model: the left tragion, the right tragion and the left infraorbitale (or right infraorbitale). This plane was taken as the XOY plane of the coordinate system. The positive direction of the X axis is set to the direction of the apex nasi, while the positive direction of the Y axis is set to the direction of the right tragion. Letting \(H_{A}\) represent the set of all points on the aligned head and facial models, we get \( H_{A} \subset S \). After the data alignment, each element \(P_{Si}\) from \(H_{S}\) was transformed to \( P_{Ai} = \left\{ \left( X_{Ai}, Y_{Ai}, Z_{Ai} \right) \mid i = 1, 2, \cdots, N_{P} \right\} \).
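A minimal sketch of how such an alignment frame could be built from the reference points is shown below. The text fixes only the XOY plane and the directions of the X and Y axes; the origin (midpoint of the two tragions), the sign of the Z axis (toward the vertex) and the function names `frankfurt_frame` and `align_point` are assumptions made for illustration, not the authors' implementation.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _unit(a):
    norm = math.sqrt(_dot(a, a))
    return tuple(x / norm for x in a)

def frankfurt_frame(left_tragion, right_tragion, infraorbitale, pronasale, vertex):
    """Return (origin, x_axis, y_axis, z_axis) for one scan."""
    # Assumption: the origin is the midpoint between the two tragions.
    origin = tuple((l + r) / 2.0 for l, r in zip(left_tragion, right_tragion))
    # Y axis points from the origin toward the right tragion (as in the text).
    y_axis = _unit(_sub(right_tragion, origin))
    # Z axis is the normal of the Frankfurt plane through the 3 reference points.
    z_axis = _unit(_cross(_sub(right_tragion, left_tragion),
                          _sub(infraorbitale, left_tragion)))
    # Assumption: positive Z points toward the vertex (top of the head).
    if _dot(z_axis, _sub(vertex, origin)) < 0:
        z_axis = tuple(-c for c in z_axis)
    # X axis lies in the plane, perpendicular to Y, on the side of the nose tip.
    x_axis = _unit(_cross(y_axis, z_axis))
    if _dot(x_axis, _sub(pronasale, origin)) < 0:
        x_axis = tuple(-c for c in x_axis)
    return origin, x_axis, y_axis, z_axis

def align_point(point, frame):
    """Express a scanner-space point in the head coordinate system."""
    origin, x_axis, y_axis, z_axis = frame
    rel = _sub(point, origin)
    return (_dot(rel, x_axis), _dot(rel, y_axis), _dot(rel, z_axis))
```

With X toward the nose, Y toward the right tragion and Z toward the vertex, the resulting frame is left-handed; that only affects sign conventions, not the distances used in the percentile statistics.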

Landmark Selection.

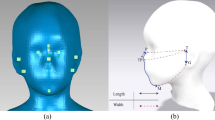

Landmarking is a common method for representing individual human features. Anatomical features that lie on the underlying skull are not visible and must be located by palpation, so they had to be marked by anthropometry experts. To create consistent head and face models, in this research, 14 anatomical landmarks were manually marked on each participant’s face before 3D scanning. They are Chin, Glabella, Frontotemporale (left and right), Infraorbitale (left and right), Pronasale, Sellion, Tragion (left and right), Zygofrontale (left and right) and Lat. Zygomatic (left and right). The selection of the landmarks was mainly based on the anthropometric analysis in [20]. Because of the complicated head and facial structures, these anatomical landmarks alone could not provide sufficiently detailed data for analyzing the human face. Therefore, 19 more landmarks indicating identifiable facial features were located and extracted in Geomagic Wrap 2017. The name and location of the final 33 landmarks for all subjects are shown in Fig. 3 (No. 2, 3, 4, 6, 7, 8, 9, 10, 21, 22, 23, 24, 29 and 30 are manually marked landmarks, and the rest are physical landmarks). The feature set can be defined as \(H_{AL}\), while \( P_{AL} = \left\{ \left( X_{ALi}, Y_{ALi}, Z_{ALi} \right) \mid i = 1, 2, \cdots, 33 \right\} \) denotes all the elements.

2.3 Anthropometric Face Frame Construction

Percentile Computing of Feature Points Coordinate.

The definition of human head dimensions requires the locations of, and distances among, all sampling points in space. Once the coordinate system is unified, the Euclidean distance from each sampling point to the origin of the system can be obtained by \( d = \sqrt {X^{2} + Y^{2} + Z^{2} } \). Therefore, computing the percentiles of head dimensions can be transformed into computing the percentiles of the absolute values of the feature point coordinates along the X, Y and Z directions.

For example, given a certain feature point \(P_{AL}\), the \(p\) percentile along the X axis can be computed as follows. First, the absolute values of the x-coordinates of \(P_{AL}\) for the \(n\) scan models were sorted in ascending order to get the sorted list \( B = \left[ B_{1}, B_{2}, \cdots, B_{n} \right] \). Then, let \(l\) and \(m\) represent the integral and decimal parts of \( n \times p \), respectively. Lastly, the \(p\) percentile of the feature point \(P_{AL}\) along the X axis is obtained by \( B_{AL} = \left( 1 - m \right) \times B_{l - 1} + m \times B_{l} \).
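The interpolation rule above can be sketched in a few lines of Python. The function name `interpolated_percentile`, the 1-based reading of the indices \(l - 1\) and \(l\), and the clamping of out-of-range indices are assumptions; the text does not state how the original C++ program indexes the sorted list or handles the ends.

```python
import math

def interpolated_percentile(values, p):
    """p percentile (0 < p < 1) of |values| using (1 - m) * B[l-1] + m * B[l]."""
    b = sorted(abs(v) for v in values)   # sorted absolute coordinates, ascending
    n = len(b)
    l = math.floor(n * p)                # integral part of n * p
    m = n * p - l                        # decimal part of n * p

    def b_at(k):
        # Assumption: indices are 1-based and clamped to the valid range [1, n].
        k = min(max(k, 1), n)
        return b[k - 1]

    return (1 - m) * b_at(l - 1) + m * b_at(l)

# Example: hypothetical absolute x-coordinates from 10 scans.
coords = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
quartile = interpolated_percentile(coords, 0.25)   # 0.5 * B_1 + 0.5 * B_2
```

Read literally with 1-based indices, the rule returns the fourth sorted value for `p = 0.5` on ten samples; a 0-based reading would shift the result by one element, so the indexing convention is worth confirming before reusing the formula.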

In the computing process, due to inevitable coordinate deviations caused by data alignment and feature extraction, the coordinate values along the X and Y axes could be positive or negative for feature points such as the vertex, glabella, sellion, pronasale, subnasale, chin, menton, otobasion superius (left and right), tragion (left and right) and otobasion inferius (left and right) that can be mapped to the Z axis. The means of the coordinate values for these feature points were taken in this research to balance the error. Meanwhile, the feature point vertex was computed by percentiles only on the Z axis because it was the peak of all 33 feature points, and its coordinate errors along the X and Y axes cannot be ignored.

Face Frame Construction.

The 5th, 25th, 50th, 75th and 95th percentiles of the 33 feature points were separately computed, and all the points were marked based on their spatial locations and connected by lines according to the facial morphology in Rhinoceros™ 5.14 for each percentile. Anthropometric facial frames embodying the characteristics of all features were constructed for each percentile (refer to Fig. 4).

2.4 Head and Facial Model Fitting

Due to the complexity and irregular nature of human heads, the facial frame can only approximate facial information with its 33 feature points and cannot adequately express the surface characteristics. Furthermore, the frame ignores most of the details of the frontal, parietal and occipital areas of the head. In this paper, a progressive scanning algorithm based on differential approximation is proposed to subdivide the scan models, and the set of sampling points for the 3D head fitting is obtained from the percentiles of their coordinates.

Feature Region Division.

Wearable product design often requires dimensions from various regions of the human head (such as the Glabella-Infraorbitale distance) as references. The height of each region on the face varies significantly because of the large differences in appearance among the 1900 individuals. To account for the facial features of every individual, this paper vertically divided the head and face into several regions and performed the 3D fitting based on feature mapping for the corresponding regions from all head models.

Progressive Scanning.

According to 4 facial organs (including the forehead, eyes, nose and mouth) and the common areas for wearable product design, the head and face were vertically divided into 4 regions by the Z axis coordinates of the vertex, glabella, Frankfurt plane, subnasale and menton. Then, each region was scanned with a number of layers determined by the required accuracy and the height proportions of the different regions of the head. In this paper, each scan model was divided into 528, 192, 144 and 384 layers from top to bottom, for a total of 1248 layers, and \( L_{j},\ j \in [-528, 720) \) represents all layers on the head and facial surface. Let \(d_{1}\), \(d_{2}\), \(d_{3}\) and \(d_{4}\) represent the furthest distance from each facial region to the Frankfurt plane along the Z axis. Each layer’s Z axis coordinate is then given by Eq. (1), and the facial region division is shown in Fig. 5.

Contour Sampling.

In the progressive scanning process, a closed and continuous contour was obtained for each layer. We took the intersection of the Z axis with a specific layer as the center, started from the positive direction of the X axis and rotated a line clockwise with an angular increment of \( \frac{\pi}{864} \) (refer to Fig. 6). For each rotation, the intersection point between the line and the contour was sampled. \(H_{F}\) denotes the set of all sampling points on the head and facial surface, \( H_{F} \subset H_{S} \), and \(H_{FL}\) represents the intersection point set on layer \(L\). The number of sampling points for each layer was \( N_{FL} = 2\pi \div \frac{\pi}{864} = 1728 \), with elements \( P_{FLi} = \left\{ \left( r_{FLi}, \theta_{FLi}, Z_{j} \right) \mid i = 1, 2, \cdots, 1728 \right\} \); the distance \(r_{FLi}\) can be denoted as below.
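As an illustration of the sampling step, the sketch below casts a ray from the layer center at each of the 1728 angles and records where it meets the contour. It assumes the contour of a layer is available as a closed polygon of (x, y) vertices around the Z axis, that "clockwise" is meant as seen with X to the right and Y up, and that the outermost hit is kept when a ray crosses the contour more than once; `sample_contour` is a hypothetical name and none of these details are confirmed by the text.

```python
import math

def sample_contour(contour, n_samples=1728):
    """Sample a closed polygonal contour at equal angular steps.

    contour: list of (x, y) vertices of one layer, enclosing the origin.
    Returns a list of (r, theta) pairs; r is None if the ray hits nothing.
    """
    step = 2.0 * math.pi / n_samples          # pi / 864 for 1728 samples
    edges = list(zip(contour, contour[1:] + contour[:1]))
    samples = []
    for i in range(n_samples):
        theta = i * step
        # Clockwise rotation starting from the positive X axis.
        dx, dy = math.cos(theta), -math.sin(theta)
        best = None
        for (x1, y1), (x2, y2) in edges:
            ex, ey = x2 - x1, y2 - y1
            denom = dx * ey - dy * ex
            if abs(denom) < 1e-12:            # ray parallel to this edge
                continue
            # Solve t * (dx, dy) = (x1, y1) + s * (ex, ey).
            t = (x1 * ey - y1 * ex) / denom   # distance along the ray
            s = (x1 * dy - y1 * dx) / denom   # position along the edge
            if t > 0 and -1e-9 <= s <= 1 + 1e-9:
                # Assumption: keep the outermost intersection.
                if best is None or t > best:
                    best = t
        samples.append((best, theta))
    return samples

# Example with a hypothetical rectangular "contour" around the origin.
rect = [(1.0, 1.0), (-1.0, 1.0), (-1.0, -3.0), (1.0, -3.0)]
polar = sample_contour(rect)
```

Each returned radius is the in-plane distance from the layer center to the sampled contour point, which is the quantity that is later ranked across scans.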

Percentile Computing of Contour Sampling Points.

During the contour sampling for all head scans, the \(p\) percentile of the distance \(r_{j\alpha k}\) was computed for layer \(j\) with angle \(\alpha\). The method is as follows. The \(r_{j\alpha k}\) of \(n\) scans were sorted in ascending order to obtain \( C_{n} = \left[ r_{j\alpha 1}, r_{j\alpha 2}, \cdots, r_{j\alpha n} \right] \). Then, let \(l\) and \(m\), respectively, represent the integral and decimal parts of \( n \times p \). Therefore, the \(p\) percentile of \(r_{j\alpha k}\) can be denoted by \( C_{k} = \left( 1 - m \right) \times C_{l - 1} + m \times C_{l} \). \(H_{T}\) represents the point set obtained by 3D fitting, and its elements can be written as \( P_{T} = \left\{ \left( X_{j\alpha}, Y_{j\alpha}, Z_{j} \right) \mid j \in [-528, 720),\ \alpha \in [0, 2\pi) \right\} \),

where

Height Computing of Sampling Layers.

The 3D head fitting requires the Z coordinate of each sampling layer, which is determined by the division of the feature regions. The Z coordinate of each layer can therefore be computed by dividing the percentiles of \(d_{a}\), \( a = 1, 2, 3, 4 \), equally according to the number of sampling layers. The \(d_{ai}\) of \(n\) scans were sorted in ascending order to obtain \( D_{n} = \left[ d_{a1}, d_{a2}, \cdots, d_{an} \right] \). Then, let \(l\) and \(m\), respectively, represent the integral and decimal parts of \( n \times p \). Therefore, the \(p\) percentile of \(d_{ai}\) can be denoted by \( D_{a} = \left( 1 - m \right) \times D_{l - 1} + m \times D_{l} \). Thus, the \(p\) percentiles of the furthest distance along the Z axis from each region to the Frankfurt plane can be obtained as \(D_{1}\), \(D_{2}\), \(D_{3}\) and \(D_{4}\). The elements of \(H_{T}\) can be written as \( P_{T} = \left\{ \left( X_{j\alpha}, Y_{j\alpha}, Z_{j} \right) \mid j \in [-528, 720),\ \alpha \in [0, 2\pi) \right\} \), where

The whole computing process is shown in Fig. 7.
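The equation for the layer heights is not reproduced in this text, so the following is only one plausible reading of "dividing the percentiles equally according to the number of sampling layers". It assumes that \(D_{1}\) to \(D_{4}\) are the percentile distances of the vertex, glabella, subnasale and menton from the Frankfurt plane, that Z is positive above the plane, and that layers are evenly spaced within each region; `layer_heights` and the example distances are hypothetical.

```python
def layer_heights(d1, d2, d3, d4, counts=(528, 192, 144, 384)):
    """Map each layer index j in [-528, 720) to a Z coordinate.

    d1..d4: percentile distances (same unit) of the vertex, glabella,
    subnasale and menton from the Frankfurt plane (assumed meaning).
    counts: layers per region from top to bottom, as given in the text.
    """
    n1, n2, n3, n4 = counts
    z = {}
    # Region 2: Frankfurt plane up to the glabella, j = 0 .. n2 - 1.
    for j in range(0, n2):
        z[j] = j * d2 / n2
    # Region 1: glabella up to (but excluding) the vertex.
    for j in range(n2, n2 + n1):
        z[j] = d2 + (j - n2) * (d1 - d2) / n1
    # Region 3: below the Frankfurt plane down to the subnasale.
    for j in range(-n3, 0):
        z[j] = j * d3 / n3
    # Region 4: subnasale down to the menton.
    for j in range(-(n3 + n4), -n3):
        z[j] = -d3 + (j + n3) * (d4 - d3) / n4
    return z

# Example with made-up percentile distances in millimetres.
heights = layer_heights(120.0, 30.0, 45.0, 110.0)
```

Under these assumptions the 720 non-negative indices cover the two regions above the Frankfurt plane and the 528 negative indices cover the two regions below it, which matches the index range \([-528, 720)\) used in the text.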

Head and Facial Model Fitting.

Let \(H_{T}\) be the point set of the head and facial fitting results. The number of its elements is \( N_{P} = \left( 528 + 720 \right) \times 1728 = 2156544 \). The C++ program based on the 3D head and facial fitting methods was implemented in Microsoft Visual Studio 2015. It was run in the National Super Computing Center in Changsha, considering the huge memory consumption of 1900 3D models. Users can input parameters (including the sample size, the number of scan layers for each feature region, the number of samples for each layer and the specific percentiles) to get the fitting result as a *.txt file with the coordinates of all sampling points. The coordinates of all points were imported into Geomagic Wrap 2017 to get the surface model for each percentile using the wrap function. The results include the fitted point models of all sampling points and the mesh models generated from them, which are shown in Fig. 8.

2.5 Head and Facial Model Fitting

The sampling point coordinates of the surface model obtained by 3D fitting correspond to the percentile values across all subjects at the corresponding positions. However, the fitting model emphasizes the extraction of percentiles for the entire head and face, and therefore contains inevitable errors in facial details caused by individual differences during the feature mapping process. Adjustments were thus made to the fitting model according to the coordinates of the 33 feature points from the face frame. Because of the noise caused by the huge volume of data in the point cloud of the fitting model, artistic digital sculpting was performed during the model fusion, and more facial details were created on each 3D model to improve the appearance. Finally, the ear model was joined with the head fitting model according to the coordinates of the otobasion superius, tragion and otobasion inferius on each side (refer to Fig. 9).

3 Result

This paper presents two types of Chinese head and facial models: the high-resolution fitting model and the 3D printing reference model. The former can be imported into 3D modeling software for dynamic ergonomics analysis and testing, while the latter can be used as a product design reference and for product fitting evaluations. 3D models with different percentiles can be obtained according to specific design scenarios in the future. All fitting models and 3D printing reference models were verified against the original statistical data to ensure the accuracy and reliability of the data (refer to Fig. 10).

4 Conclusion

Traditional 3D anthropometric head models were constructed by frames composed of feature points and straight lines between them, which cannot adequately express the complicated surface details of human heads and faces. This paper proposed a 3D fitting algorithm based on progressive scanning for different facial feature regions and contour sampling on each scanning layer. The fitting meshes were reconstructed on the sampling points through 3D software and integrated with 3D facial frames built by percentiles of 33 feature point coordinates to improve the accuracy. Lastly, artistic digital sculpting was appropriately performed to create more facial details on each 3D model to improve the appearance.

The 3D head and facial fitting algorithm proposed in this paper is significant for research on contemporary Chinese head sizes and for wearable product designs oriented to the Chinese market (such as smart glasses, VR and AR headsets, and face masks). Product designers only need to select the 3D model with the specific percentiles they require to define the design boundary in the corresponding scenarios. This does not require professional statistical knowledge or time spent analyzing large volumes of anthropometric data. In addition, 3D printing models with different percentiles can also be used as design-aided tools for product comfort analysis and other ergonomics evaluation. So far, the methods and models proposed in this research have been applied in several companies for the design of the next generation of wearable devices.

In the future, the algorithm can also be generalized to other anthropometric issues, and the fitting models can be extended to other body parts to guide the product design in that area. Furthermore, our algorithm can be modified to support the Chinese head size research for different age groups, genders and cities.

References

1. Donelson, S.M., Gordon, C.C.: Anthropometric Survey of U.S. Army Personnel: Pilot Summary Statistics, 1988 (1991). https://doi.org/10.21236/ada241952

2. Chu, C.H., Huang, S.H., Yang, C.K., Tseng, C.Y.: Design customization of respiratory mask based on 3D face anthropometric data. Int. J. Precis. Eng. Manuf. 16, 487–494 (2015). https://doi.org/10.1007/s12541-015-0066-5

3. Bradtmiller, B.: Head-and-face anthropometric survey of U.S. respirator users. J. Occup. Environ. Hyg. 2, 567 (2005). https://doi.org/10.17226/11815

4. Kolich, M.: Automobile seat comfort: occupant preferences vs. anthropometric accommodation. Appl. Ergon. 34, 177–184 (2003). https://doi.org/10.1016/s0003-6870(02)00142-4

5. Robinette, K.M., Daanen, H.A.: Precision of the CAESAR scan-extracted measurements. Appl. Ergon. 37, 259 (2006). https://doi.org/10.1016/j.apergo.2005.07.009

6. Perret-Ellena, T., Skals, S.L., Subic, A., Mustafa, H., Pang, T.Y.: 3D anthropometric investigation of head and face characteristics of Australian cyclists. Procedia Eng. 112, 98–103 (2015). https://doi.org/10.1016/j.proeng.2015.07.182

7. Lee, W., Yang, X., Jung, H., You, H., Goto, L., Molenbroek, J.F.M., Goossens, R.H.M.: Application of massive 3D head and facial scan datasets in ergonomic head-product design. 1, 344 (2016). https://doi.org/10.1504/ijdh.2016.10005368

8. Lee, W., Lee, B., Kim, S., Jung, H., Bok, I., Kim, C., Kwon, O., Choi, T., You, H.: Development of headforms and an anthropometric sizing analysis system for head-related product designs. 59 (2015). https://doi.org/10.1177/1541931215591308

9. Lee, W., Goto, L., Molenbroek, J.F.M., Goossens, R.H.M., Wang, C.C.C.: A shape-based sizing system for facial wearable product design. In: International Digital Human Modeling Symposium (2017)

10. Bolkart, T., Bose, P., Shu, C., Wuhrer, S.: A general framework to generate sizing systems from 3D motion data applied to face mask design. In: Second International Conference on 3D Vision, pp. 425–431. IEEE, Tokyo (2014). https://doi.org/10.1109/3dv.2014.43

11. Ball, R., Shu, C., Xi, P., Rioux, M., Luximon, Y., Molenbroek, J.: A comparison between Chinese and Caucasian head shapes. Appl. Ergon. 41, 832–839 (2010). https://doi.org/10.1016/j.apergo.2010.02.002

12. Yang, L., Shen, H., Wu, G.: Racial differences in respirator fit testing: a pilot study of whether American fit panels are representative of Chinese faces. Ann. Occup. Hyg. 51, 415 (2007). https://doi.org/10.1093/annhyg/mem005

13. Niezgoda, G., Zhuang, Z.: Development of headforms for ISO eye and face protection standards. Procedia Manuf. 3, 5761–5768 (2015). https://doi.org/10.1016/j.promfg.2015.07.822

14. Standards Administration of the People Republic of China, GB/T 5703-2010: Basic human body measurements for technological design. Standard Press of China, Beijing (2011). (in Chinese)

15. Ball, R., Molenbroek, J.F.M.: Measuring Chinese heads and faces (2008)

16. Ball, R.: 3-D design tools from the SizeChina project. Ergon. Des. Mag. Hum. Factors Appl. 17, 8–13 (2009). https://doi.org/10.1518/106480409X12487281219931

17. Yu, Y., Benson, S., Cheng, W., Hsiao, J., Liu, Y., Zhuang, Z., Chen, W.: Digital 3-D headforms representative of Chinese workers. Ann. Occup. Hyg. 56, 113–122 (2012). https://doi.org/10.1093/annhyg/mer074

18. Du, L., Zhuang, Z., Guan, H., Xing, J., Tang, X., Wang, L., Wang, Z., Wang, H., Liu, Y., Su, W.: Head-and-face anthropometric survey of Chinese workers. Ann. Occup. Hyg. 52, 773–782 (2008). https://doi.org/10.1093/annhyg/men056

19. Standards Administration of the People Republic of China, GB/T 12985-91: General rules of using percentiles of the body dimensions for products design. Standard Press of China, Beijing (1991)

20. Yan, L., Ball, R., Justice, L.: The Chinese face: a 3D anthropometric analysis. In: Proceeding of the TMCE, Italy (2010)

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Wang, H., Chen, W., Li, Y., Yu, Y., Yang, W., Ball, R. (2018). A 3D Head Model Fitting Method Using Chinese Head Anthropometric Data. In: Rau, PL. (eds) Cross-Cultural Design. Methods, Tools, and Users. CCD 2018. Lecture Notes in Computer Science(), vol 10911. Springer, Cham. https://doi.org/10.1007/978-3-319-92141-9_16

DOI: https://doi.org/10.1007/978-3-319-92141-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92140-2

Online ISBN: 978-3-319-92141-9

eBook Packages: Computer Science, Computer Science (R0)