Abstract

In the field of digital geometry, numerous advances have recently been made to efficiently represent a simple polygonal shape: from dominant points or a curvature-based representation, a binary shape can be represented efficiently even in the presence of noise. In this article, we exploit recent results on such digital contour representations and propose an image vectorization algorithm that allows geometric quality control. All the results presented in this paper can also be reproduced online.

1 Introduction

Image vectorization is a classic problem with potential impact and applications in computer graphics. Taking a raw bitmap image as input, this process recovers geometrical primitives such as straight segments, arcs of circles and Bézier curves. Such a transformation is exploited in design software such as Illustrator or Inkscape, in particular when users need to import bitmap images. This domain is large and also concerns document analysis and a variety of graphical objects such as engineering drawings [1], symbols [2], line drawings [3], or circular arcs [4]. Related to these applications, different theoretical advances are regularly proposed in the domains of pattern recognition and digital geometry. In particular, work on digital contour representations covers various methodologies: dominant point detection [5, 6], relaxed straightness properties [7], multi-scale analysis [8], irregular isothetic grids and meaningful scales [9], or curvature-based approaches [10].

These last advances are limited to digital shapes (i.e. shapes represented within a crisp binary image). Indeed, even if a digital contour can be extracted from a non-binary image, adapting these methods to other types of images, such as greyscale and color images, is not straightforward. Other methods are designed to handle greyscale images; for example, Swaminarayan and Prasad proposed a method to vectorize an image by using its contours (from simple Canny or Sobel filters) and by performing a Delaunay triangulation [11]. Despite its interest, this approach does not take into account the geometrical characteristics of edge contours or other information obtained from low intensity variations. More oriented toward segmentation, Lecot and Levy introduced an algorithm based on Mumford and Shah’s minimization process to decompose an image into a set of vector primitives and gradients [12]. Inspired by this technique, which includes a triangulation process, other methods were proposed to represent a digital image with a gradient mesh representation that preserves the topological image structure [13]. We can also mention the method proposed by Demaret et al. [14], which is based on linear splines over adaptive Delaunay triangulations, and the work of Xia et al. [15], which exploits a triangular mesh followed by a Bézier-curve patch-based representation. In a more interactive setting, the vectorization method of Price and Barrett [16] was designed for interactive image editing by exploiting graph cut segmentation and hierarchical object selections.

In this article, we propose to investigate the solutions exploiting the geometric nature of image contours, and apply these advanced representations to handle greyscale images. In particular, we will mainly focus on recent digital-geometry based approaches of different natures: (i) the approach based on dominant point detection by exploiting the maximal straight segment primitives [6, 17], (ii) the one from the digital level layers with the algorithms proposed by Provot et al. [18, 19], (iii) the one using the Fréchet distance [20, 21], and (iv) the curvature based polygonalization [10].

In the next section (Sect. 2), we first present a strategy to reconstruct the image by considering the greyscale image intensities. Different strategies are presented with pros and cons. Afterwards, in Sect. 3, we recall different polygonalization methods. Then, we present the main results and comparisons obtained with different polygonalization techniques (Sect. 4).

2 Vectorizing Images from Level Set Contours

The proposed method is composed of two main steps. The first one extracts the level set contours of the image. This step is parameterized by the choice of the intensity step (\(\delta _I\)), which defines the intensity variation between two consecutive intensity levels. From these contours, the geometric information can then be extracted and selected by polygonalization algorithms (as described in Sect. 3). The second step concerns the vectorial image reconstruction from these contours.

Step 1: Extracting Level Set Contours and Geometrical Information

The aim of this step is to extract geometrical information from the image intensity levels. More precisely, we propose to extract all the level set contours by using different intensity thresholds defined from an intensity step \(\delta _I\). For this purpose, we apply a simple boundary extraction method defined from an image intensity predicate and from a connectivity definition. The method is based on the definition of the Khalimsky space and can work in N dimensions [22]. This algorithm has the advantage of being implemented in the DGtal library [23] and can be tested online [24].
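The thresholding idea behind this step can be illustrated with a minimal sketch. It is not the Khalimsky-space boundary tracker of DGtal: it only marks, for each threshold, the pixels of the thresholded set that have a 4-neighbour outside the set. The function names, the predicate "intensity at least t" and the 4-connectivity are assumptions made for the illustration.

```python
def level_thresholds(delta_i, max_intensity=255):
    """Thresholds delta_i, 2*delta_i, ... that do not exceed max_intensity."""
    return list(range(delta_i, max_intensity + 1, delta_i))


def threshold_mask(image, t):
    """Intensity predicate (assumed here): True where the pixel value is >= t."""
    return [[value >= t for value in row] for row in image]


def boundary_pixels(mask):
    """Pixels of the mask having at least one 4-neighbour outside the mask.

    Positions outside the image domain are treated as background.
    """
    h, w = len(mask), len(mask[0])
    result = set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if not (0 <= nx < w and 0 <= ny < h) or not mask[ny][nx]:
                    result.add((x, y))
                    break
    return result


# Toy greyscale image: a 3x3 block of intensity 100 with a brighter centre.
image = [
    [0,   0,   0,   0, 0],
    [0, 100, 100, 100, 0],
    [0, 100, 200, 100, 0],
    [0, 100, 100, 100, 0],
    [0,   0,   0,   0, 0],
]

# One boundary set per intensity level, here with an intensity step of 100.
contours = {t: boundary_pixels(threshold_mask(image, t))
            for t in level_thresholds(100)}
```

With an intensity step of 100, two levels are produced (100 and 200); a smaller step yields more contours and therefore a finer but heavier vectorial representation.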

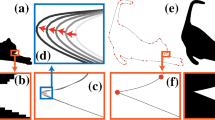

Figure 1 illustrates such a level set extraction (Fig. 1(b, c)) with a basic contour sampling that selects one contour point every 20 pixels (Fig. 1(d)). Naturally, the more advanced contour polygonalization algorithms described in Sect. 3 will be exploited to provide better contours, with relevant geometrical properties.

Step 2: Vectorial Image Reconstruction

We explore several choices for the reconstruction of the resulting vectorial images: the application of a triangulation based process, a reconstruction from sequence of intensity intervals, and a component tree representation.

Representation from Delaunay triangulation: A first solution was inspired by the method proposed by Swaminarayan and Prasad [11], which uses a Delaunay triangulation defined on the edges detected in the image. However, the strategy is different, since the triangulation is performed after extracting the main geometric features with methods based on dominant points, curvature or the Fréchet distance. The first direct integration of the triangulation was performed by using the Triangle software [25] and by reconstructing the image with the mean intensity represented at the triangle barycenter. Even if such a strategy appears promising, the quality is degraded when we take a closer look at the digital structure, as illustrated for the DGCI logo in Fig. 2. To obtain a better reconstruction quality, the level set intensity has to be integrated into the reconstruction structure in order to make a better decision for the triangle intensity value.

Representation by filling single intensity intervals: Filling the polygonal region boundaries of the input image is another possibility to obtain a better image reconstruction. For instance, the image in Fig. 4(a) can be reconstructed by filling the inside of the region \(\mathcal {R}_0\) defined from its borders (\(\delta ^0_{\mathcal {R}_0}\), \(\delta ^1_{\mathcal {R}_0}\), and \(\delta ^2_{\mathcal {R}_0}\)) and in the same way for the other regions \(\mathcal {R}_1\), \(\mathcal {R}_2\), \(\mathcal {R}_3\), \(\mathcal {R}_4\) and \(\mathcal {R}_5\). However, such a strategy depends on the quality of the polygonalization, which gives no guarantee that the polygonal contours of a region and of its adjacent regions have corresponding vertices. For instance, the polygonal representations of \(\delta ^2_{\mathcal {R}_0}\) and \(\delta ^0_{\mathcal {R}_2}\) do not necessarily share the same vertices. Such limitations are illustrated in Fig. 3(a–e), where numerous defects appear as areas that are not filled by any color.

Component tree based representation: To overcome the limitations of the previous reconstruction, we propose to exploit a mathematical morphology based representation defined on the component tree [26, 27]. This method represents an image by considering the successive intensity levels and by maintaining a tree that encodes the inclusions of connected components. As an illustration, Fig. 4(d) shows such a representation with the root \(\mathcal {R}_0\) associated to the first level; it contains all the pixels of the image (with values greater than or equal to 0). The edge between the nodes \(\mathcal {R}_4\) and \(\mathcal {R}_2\) indicates that the region \(\mathcal {R}_4\) is included in the region \(\mathcal {R}_2\).

With such a representation, each region is, by definition, included in its ancestors. As a consequence, a reconstruction starting from the root region can be executed without leaving any non-filled areas (even with poor-quality polygonalization algorithms). As shown in Fig. 3(f–j), the reconstruction results contain no unfilled region.
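The following minimal sketch illustrates why a root-to-leaf reconstruction leaves no unfilled pixel. It builds a small component tree from upper threshold sets and repaints the image parents first. It works on pixel sets rather than on polygonalized contours, uses a naive construction rather than the quasi-linear algorithm of [26], and all function names and the 4-connectivity are assumptions made for the illustration.

```python
def connected_components(mask):
    """4-connected components of a boolean grid, each as a frozenset of (x, y)."""
    h, w = len(mask), len(mask[0])
    seen, comps = set(), []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (x, y) in seen:
                continue
            stack, comp = [(x, y)], set()
            seen.add((x, y))
            while stack:
                cx, cy = stack.pop()
                comp.add((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and mask[ny][nx] and (nx, ny) not in seen):
                        seen.add((nx, ny))
                        stack.append((nx, ny))
            comps.append(frozenset(comp))
    return comps


def build_component_tree(image, levels):
    """Nodes are dicts {level, pixels, parent}; with levels[0] == 0, node 0 is the root."""
    nodes = []
    previous = []  # indices of the nodes created at the previous level
    for level in levels:
        mask = [[v >= level for v in row] for row in image]
        current = []
        for comp in connected_components(mask):
            # Upper threshold sets are nested, so exactly one previous node contains comp.
            parent = next((i for i in previous if comp <= nodes[i]["pixels"]), None)
            nodes.append({"level": level, "pixels": comp, "parent": parent})
            current.append(len(nodes) - 1)
        previous = current
    return nodes


def reconstruct(nodes, width, height):
    """Paint nodes in creation order (parents first), so children overwrite parents."""
    canvas = [[None] * width for _ in range(height)]
    for node in nodes:
        for x, y in node["pixels"]:
            canvas[y][x] = node["level"]
    return canvas


image = [
    [0,   0,   0,   0, 0],
    [0, 100, 100, 100, 0],
    [0, 100, 200, 100, 0],
    [0, 100, 100, 100, 0],
    [0,   0,   0,   0, 0],
]
nodes = build_component_tree(image, levels=[0, 100, 200])
canvas = reconstruct(nodes, width=5, height=5)
```

Because the root covers the whole image domain, every pixel receives a value before any child region is painted; an imprecise child contour can only change where one level ends, not create a hole.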

Algorithm 1 describes the global process of the proposed method where we can use the different polygonalization methods that are detailed in the next section.

3 Overview of Polygonalization Methods

In the proposed vectorization method, the polygonalization step can play an important role in the resulting image quality. Before showing some results, we give an overview of several methods based on different principles.

3.1 Dominant Points Based Polygonalization

The polygonalization method proposed by Nguyen and Debled-Rennesson finds the characteristic points of a contour, called dominant points, and builds from them a polygon representing the contour. The method relies on a discrete curve structure based on the width \(\nu \) tangential cover [28] (see Fig. 5(a)). This structure is widely used for studying the geometrical characteristics of a discrete curve. More precisely, the width \(\nu \) tangential cover of a contour is composed of the sequence of all maximal blurred segments of width \(\nu \) [29] along the contour. Using this structure, the method proposed in [6, 17] detects the dominant points of a contour. The key idea is that the candidate dominant points are located in the common zones of successive maximal blurred segments. By a simple angle measure, the dominant point in each common zone is determined as the point having the smallest angle with respect to the left and right endpoints of the left and right maximal blurred segments constituting the common zone. Then, the polygon representing the contour is constructed from the detected dominant points (see Fig. 5(b)). In the next section, the acronym DPP refers to this method.
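The angle criterion used inside a common zone can be sketched as follows. The sketch assumes that the common zone and the two endpoints have already been obtained from the tangential cover, which is not computed here; the function names and the toy coordinates are hypothetical.

```python
import math


def angle_at(p, a, b):
    """Angle in radians at point p between the rays p->a and p->b."""
    v1 = (a[0] - p[0], a[1] - p[1])
    v2 = (b[0] - p[0], b[1] - p[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return math.pi  # degenerate case: treat the point as flat
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos_t)))


def dominant_point(common_zone, left_end, right_end):
    """Point of the common zone seeing the two endpoints under the smallest angle."""
    return min(common_zone, key=lambda p: angle_at(p, left_end, right_end))


# Toy L-shaped contour piece: the corner (5, 0) should be selected.
zone = [(4, 0), (5, 0), (5, 1)]
corner = dominant_point(zone, left_end=(0, 0), right_end=(5, 5))
```

On this toy input the corner sees the two endpoints under a right angle, while its two neighbours see them under a wider angle, so the corner is retained.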

3.2 DLL Based Polygonalization [18, 19]

A method of curve decomposition using the notion of digital level layers (DLL) has been proposed in [18, 19]. Roughly speaking, a DLL is an analytical primitive modeled by a double inequality \(-\omega \le f(x) \le \omega \). Thanks to this analytic model, the method handles a large class of primitives such as lines, circles and conics.
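As a small illustration of the double inequality, the sketch below tests whether points belong to a DLL for two choices of \(f\): a line and a circle. It only checks membership for a given \(f\) and \(\omega\); the recognition algorithm of [18, 19], which finds such an \(f\) for a set of points, is not reproduced. The helper names and the numeric values are assumptions.

```python
def in_dll(f, omega, point):
    """True if the point satisfies -omega <= f(point) <= omega."""
    return -omega <= f(*point) <= omega


def line(a, b, c):
    """f(x, y) = a*x + b*y + c, whose zero set is a straight line."""
    return lambda x, y: a * x + b * y + c


def circle(cx, cy, r):
    """f(x, y) = (x - cx)^2 + (y - cy)^2 - r^2, whose zero set is a circle."""
    return lambda x, y: (x - cx) ** 2 + (y - cy) ** 2 - r ** 2


# Digital points roughly following the line x - 2y = 0.
segment = [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2)]
all_on_line_layer = all(in_dll(line(1, -2, 0), 1, p) for p in segment)

# Digital points roughly following the circle of radius 5 centred at the origin.
arc = [(5, 0), (5, 1), (4, 3), (3, 4)]
all_on_circle_layer = all(in_dll(circle(0, 0, 5), 2, p) for p in arc)
```

Changing only the function \(f\) switches the primitive, which is what allows the same decomposition scheme to be run with lines, circles or conics.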

Based on a DLL recognition algorithm, the method decomposes a given curve into consecutive DLLs and, in this way, obtains an analytical representation of the curve. Figure 6 illustrates the DLL decomposition using line, circle and conic primitives. As the shape contains many line segments and arcs, the decompositions based on circle and conic primitives use fewer primitives than the one based on line primitives.

3.3 Fréchet Based Polygon Representation

Using the exact Fréchet distance, the algorithm proposed in [20, 21] computes the polygonal simplification of a curve. More precisely, given a polygonal curve P, the algorithm simplifies P by finding the shortcuts in P such that the Fréchet distance between the shortcut and the associated original part of the curve is less than a given error e. As a consequence, this method guarantees a minimum number of vertices in the simplified curve according to the Fréchet distance and a maximal allowed error e (see Fig. 5(c)).
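The shortcut principle can be sketched with a simplified stand-in. Instead of the exact Fréchet distance and the near-linear algorithm of [20, 21], the code below uses the discrete Fréchet distance computed by dynamic programming on densely resampled curves, together with a greedy search for the farthest admissible shortcut. Consequently it gives no guarantee of a minimal number of vertices; the function names and the sampling step are assumptions.

```python
import math


def discrete_frechet(p, q):
    """Discrete Fréchet distance between two point sequences (dynamic programming)."""
    n, m = len(p), len(q)
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                ca[i][j] = max(min(ca[i - 1][j], ca[i][j - 1], ca[i - 1][j - 1]), d)
    return ca[n - 1][m - 1]


def sample_segment(a, b, step):
    """Points regularly spaced along segment [a, b], at most `step` apart."""
    n = max(1, math.ceil(math.dist(a, b) / step))
    return [(a[0] + (b[0] - a[0]) * k / n, a[1] + (b[1] - a[1]) * k / n)
            for k in range(n + 1)]


def densify(polyline, step):
    """Resample every edge of a polyline with sample_segment."""
    points = [polyline[0]]
    for a, b in zip(polyline, polyline[1:]):
        points.extend(sample_segment(a, b, step)[1:])
    return points


def simplify(curve, e, step=0.25):
    """Greedy simplification: from each kept vertex, jump to the farthest vertex
    whose shortcut stays within (discrete Fréchet) distance e of the skipped part."""
    result, i = [curve[0]], 0
    while i < len(curve) - 1:
        j = len(curve) - 1
        while j > i + 1:  # j == i + 1 is always admissible (distance 0)
            shortcut = sample_segment(curve[i], curve[j], step)
            part = densify(curve[i:j + 1], step)
            if discrete_frechet(shortcut, part) <= e:
                break
            j -= 1
        result.append(curve[j])
        i = j
    return result


# L-shaped digital curve: a small error keeps the corner, a large one removes it.
curve = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (3, 3)]
tight = simplify(curve, e=0.5)
loose = simplify(curve, e=3.0)
```

The error e plays the role of the scale parameter: increasing it removes more vertices at the cost of a coarser contour.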

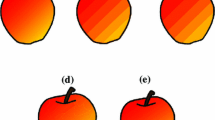

3.4 Visual Curvature Based Polygon Representation

The method was introduced by Liu, Latecki and Liu [10]. It is based on counting the extreme points of a height function defined along several directions. In particular, the convex-hull points are directly visible as extreme points of the function, and a scale parameter makes it possible to retain only the extreme points surrounded by large enough concave or convex parts. Thus, the non-significant parts can be filtered out with the scale parameter. The main advantage of this estimator is its feature detection, which gives an interesting multi-scale contour representation even if the curvature estimation is only qualitative. Figure 7 illustrates some examples.
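The counting principle can be illustrated with a strongly simplified sketch: for each direction, the height function is the projection of the contour points on that direction, and each point accumulates the number of directions for which it is a strict local extremum. The scale parameter and the normalization used in [10] are deliberately omitted, and the function name, the number of directions and the tolerance are assumptions.

```python
import math


def extreme_counts(contour, n_directions=8, eps=1e-9):
    """For each point of a closed contour, count the directions in which it is a
    strict local extremum of the height function (projection on the direction)."""
    n = len(contour)
    counts = [0] * n
    for k in range(n_directions):
        theta = math.pi * k / n_directions
        dx, dy = math.cos(theta), math.sin(theta)
        heights = [x * dx + y * dy for x, y in contour]
        for i in range(n):
            d_prev = heights[i] - heights[i - 1]        # index -1 wraps around
            d_next = heights[i] - heights[(i + 1) % n]
            is_max = d_prev > eps and d_next > eps
            is_min = d_prev < -eps and d_next < -eps
            if is_max or is_min:
                counts[i] += 1
    return counts


# Square contour sampled at its corners and edge midpoints.
square = [(0, 0), (2, 0), (4, 0), (4, 2), (4, 4), (2, 4), (0, 4), (0, 2)]
counts = extreme_counts(square)
```

Points lying on straight parts are never extreme, whereas corners are extreme for a range of directions; thresholding the counts therefore gives a crude corner detector in the spirit of the method.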

4 Results and Comparisons

In this section, we present some results obtained with the proposed method using the previous polygonalization algorithms. Figure 8 shows the results with their execution times (t) in relation to the number of polygon points (#). The comparisons were obtained by varying the scale parameter (when it exists: \(\nu \) for DPP, e for Fréchet) and the intensity interval size s (\(s=255/iStep\), with iStep of Algorithm 1). From the presented results, we can observe that the Fréchet polygonalization method provides a faster reconstruction but produces more irregular contours. Comparing DLL and DPP, the DLL results look, at low scale, slightly smoother than the others (Fig. 8(a) and (f)). The execution time mainly depends on the choice of the input parameter s and on the choice of the method (see the time measures given in Fig. 8). Due to page limitation, the results obtained with the visual-curvature based polygonalization method are not presented, but they can be obtained from an online demonstration available at:

http://ipol-geometry.loria.fr/~kerautre/ipol_demo/DGIV_IPOLDemo/

To conclude this section, we also performed comparisons with Inkscape, a free drawing program [30] (Fig. 9). We can observe that our representation provides fine results, with a lighter PDF file size.

5 Conclusion and Perspectives

Exploiting recent advances from the representation of digital contours, we have proposed a new framework to construct a vectorial representation from an input bitmap image. The proposed algorithm is simple, can integrate many other polygonalization algorithms and can be reproduced online. Moreover, we propose a vectorial representation of the image with a competitive efficiency, w.r.t. Inkscape [30]. This work opens new perspectives with for instance the integration of the meaningful scale detection to filter only the significant intensity levels [31]. Also, we would like to evaluate the robustness of our approach with different polygonalization algorithms, by testing images corrupted by noise.

References

[1] Tombre, K.: Analysis of engineering drawings: state of the art and challenges. In: Tombre, K., Chhabra, A.K. (eds.) GREC 1997. LNCS, vol. 1389, pp. 257–264. Springer, Heidelberg (1998). doi:10.1007/3-540-64381-8_54

[2] Cordella, L., Vento, M.: Symbol recognition in documents: a collection of techniques? Int. J. Doc. Anal. Recognit. 3, 73–88 (2000)

[3] Hilaire, X., Tombre, K.: Robust and accurate vectorization of line drawings. IEEE Trans. Pattern Anal. Mach. Intell. 28, 890–904 (2006)

[4] Nguyen, T.P., Debled-Rennesson, I.: Arc segmentation in linear time. In: Real, P., Diaz-Pernil, D., Molina-Abril, H., Berciano, A., Kropatsch, W. (eds.) CAIP 2011. LNCS, vol. 6854, pp. 84–92. Springer, Heidelberg (2011). doi:10.1007/978-3-642-23672-3_11

[5] Marji, M., Siy, P.: Polygonal representation of digital planar curves through dominant point detection – a nonparametric algorithm. Pattern Recognit. 37, 2113–2130 (2004)

[6] Nguyen, T.P., Debled-Rennesson, I.: A discrete geometry approach for dominant point detection. Pattern Recognit. 44, 32–44 (2011)

[7] Bhowmick, P., Bhattacharya, B.B.: Fast polygonal approximation of digital curves using relaxed straightness properties. IEEE Trans. Pattern Anal. Mach. Intell. 29, 1590–1602 (2007)

[8] Feschet, F.: Multiscale analysis from 1D parametric geometric decomposition of shapes. In: International Conference on Pattern Recognition, pp. 2102–2105 (2010)

[9] Vacavant, A., Roussillon, T., Kerautret, B., Lachaud, J.O.: A combined multi-scale/irregular algorithm for the vectorization of noisy digital contours. Comput. Vis. Image Underst. 117, 438–450 (2013)

[10] Liu, H., Latecki, L.J., Liu, W.: A unified curvature definition for regular, polygonal, and digital planar curves. Int. J. Comput. Vis. 80, 104–124 (2008)

[11] Swaminarayan, S., Prasad, L.: Rapid automated polygonal image decomposition. In: 35th IEEE Applied Imagery and Pattern Recognition Workshop (AIPR 2006), p. 28 (2006)

[12] Lecot, G., Levy, B.: Ardeco: automatic region detection and conversion. In: 17th Eurographics Symposium on Rendering-EGSR 2006, pp. 349–360 (2006)

[13] Sun, J., Liang, L., Shum, H.Y.: Image vectorization using optimized gradient meshes. ACM Trans. Graph. 26, 11 (2007)

[14] Demaret, L., Dyn, N., Iske, A.: Image compression by linear splines over adaptive triangulations. Signal Process. 86, 1604–1616 (2006)

[15] Xia, T., Liao, B., Yu, Y.: Patch-based image vectorization with automatic curvilinear feature alignment. ACM Trans. Graph. 28, 115 (2009)

[16] Price, B., Barrett, W.: Object-based vectorization for interactive image editing. Vis. Comput. 22, 661–670 (2006)

[17] Ngo, P., Nasser, H., Debled-Rennesson, I.: Efficient dominant point detection based on discrete curve structure. In: Barneva, R.P., Bhattacharya, B.B., Brimkov, V.E. (eds.) IWCIA 2015. LNCS, vol. 9448, pp. 143–156. Springer, Cham (2015). doi:10.1007/978-3-319-26145-4_11

[18] Gérard, Y., Provot, L., Feschet, F.: Introduction to digital level layers. In: Debled-Rennesson, I., Domenjoud, E., Kerautret, B., Even, P. (eds.) DGCI 2011. LNCS, vol. 6607, pp. 83–94. Springer, Heidelberg (2011). doi:10.1007/978-3-642-19867-0_7

[19] Provot, L., Gérard, Y., Feschet, F.: Digital level layers for digital curve decomposition and vectorization. Image Process. On Line 4, 169–186 (2014)

[20] Sivignon, I.: A near-linear time guaranteed algorithm for digital curve simplification under the Fréchet distance. In: Debled-Rennesson, I., Domenjoud, E., Kerautret, B., Even, P. (eds.) DGCI 2011. LNCS, vol. 6607, pp. 333–345. Springer, Heidelberg (2011). doi:10.1007/978-3-642-19867-0_28

[21] Sivignon, I.: A near-linear time guaranteed algorithm for digital curve simplification under the Fréchet distance. Image Process. On Line 4, 116–127 (2014)

[22] Lachaud, J.O.: Coding Cells of Digital Spaces: A Framework to Write Generic Digital Topology Algorithms, vol. 12, pp. 337–348. Elsevier (2003)

[23] DGTal-Team: DGtal: digital geometry tools and algorithms library (2017). http://dgtal.org

[24] Coeurjolly, D., Kerautret, B., Lachaud, J.O.: Extraction of connected region boundary in multidimensional images. Image Process. On Line 4, 30–43 (2014)

[25] Shewchuk, J.R.: Delaunay refinement algorithms for triangular mesh generation. Comput. Geom. 22, 21–74 (2002)

[26] Najman, L., Couprie, M.: Building the component tree in quasi-linear time. IEEE Trans. Image Process. 15, 3531–3539 (2006)

[27] Bertrand, G.: On the dynamics. Image Vis. Comput. 25, 447–454 (2007)

[28] Faure, A., Buzer, L., Feschet, F.: Tangential cover for thick digital curves. Pattern Recognit. 42, 2279–2287 (2009)

[29] Debled-Rennesson, I., Feschet, F., Rouyer-Degli, J.: Optimal blurred segments decomposition of noisy shapes in linear time. Comput. Graph. 30, 30–36 (2006)

[31] Kerautret, B., Lachaud, J.O.: Meaningful scales detection along digital contours for unsupervised local noise estimation. IEEE Trans. Pattern Anal. Mach. Intell. 34, 2379–2392 (2012)

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Kerautret, B., Ngo, P., Kenmochi, Y., Vacavant, A. (2017). Greyscale Image Vectorization from Geometric Digital Contour Representations. In: Kropatsch, W., Artner, N., Janusch, I. (eds) Discrete Geometry for Computer Imagery. DGCI 2017. Lecture Notes in Computer Science(), vol 10502. Springer, Cham. https://doi.org/10.1007/978-3-319-66272-5_26

DOI: https://doi.org/10.1007/978-3-319-66272-5_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66271-8

Online ISBN: 978-3-319-66272-5

eBook Packages: Computer Science, Computer Science (R0)