Abstract

Recently, kernel methods have been widely employed to solve machine learning problems such as classification and clustering. Although there are many existing graph kernel methods for comparing patterns represented by undirected graphs, the corresponding methods for directed structures are less developed. In this paper, to fill this gap in the literature, we draw on graph kernels and graph complexity measures and present an information theoretic kernel method for assessing the similarity between a pair of directed graphs. In particular, we show how the Jensen-Shannon divergence, which is a mutual information measure that gauges the difference between probability distributions, together with the recently developed directed graph von Neumann entropy, can be used to compute the graph kernel. In the experiments, we show that our kernel method provides an efficient tool for classifying directed graphs with different structures and analyzing real-world complex data.

1 Introduction

Graph kernels have recently evolved into a rapidly developing branch of pattern recognition. Broadly speaking, there are two main advantages of kernel methods, namely (a) they can bypass the need for constructing an explicit high-dimensional feature space when dealing with high-dimensional data and (b) they allow standard machine learning techniques to be applied to complex data, which bridges the gap between structural and statistical pattern recognition [1]. However, although there are a great number of kernel methods aimed at quantifying the similarity between structures underpinned by undirected graphs, there is very little work on solving the corresponding problems for directed graphs. This is unfortunate since many real-world complex systems such as citation networks, communications networks, neural networks and financial networks give rise to structures that are directed [2].

Motivated by the need to fill this gap in the literature, in this paper we propose an information theoretic kernel method for measuring the structural similarity between a pair of directed graphs. Specifically, our goal is to develop a symmetric and positive definite function that maps two directed patterns to a real value, by exploiting the information theoretic kernels that are related to the Jensen-Shannon divergence and a recently developed directed graph structural complexity measure, namely the von Neumann entropy.

1.1 Related Literature

Recently, information theoretic kernels on probability distributions have attracted a great deal of attention and have been extensively applied to the classification of structured data [3]. Martins et al. [4] have generalized the recent research advances, and have proposed a new family of nonextensive information theoretic kernels on probability distributions. Such kernels have proved to have a strong link with the Jensen-Shannon divergence, which measures the mutual information between two probability distributions, and which is quantified by gauging the difference between entropies associated with those probability distributions [1].

Graph kernels, on the other hand, aim to assess the similarity between pairs of graphs by computing an inner product on graphs [5]. For example, the classical random walk kernel [6] compares two labeled graphs by counting the number of paths produced by random walks on those graphs. However, the random walk kernel has a serious disadvantage, namely “tottering” [7], caused by the possibility that a random walk can visit the same cycle of vertices repeatedly on a graph. An effective technique to avoid tottering is the backtrackless walk kernel developed by Aziz et al. [8], who have explored a set of new graph characterizations based on backtrackless walks and prime cycles.

Turning attention to the directed graph complexity measures, Ye et al. [2] have developed a novel entropy for quantifying the structural complexity of directed networks. Moreover, Berwanger et al. [9] have proposed a new parameter for the complexity of infinite directed graphs by measuring the extent to which cycles in graphs are intertwined. Recently, Escolano et al. [10] have extended the heat diffusion-thermodynamic depth approach from undirected networks to directed networks and have obtained a means of quantifying the complexity of structural patterns encoded by directed graphs.

1.2 Outline

The remainder of the paper is structured as follows. Section 2 details the development of an information theoretic kernel for quantifying the structural similarity between a pair of directed graphs. In particular, we show that the graph kernel can be computed in terms of the Jensen-Shannon divergence between the von Neumann entropy of the disjoint union of two graphs and the entropies of the individual graphs. In Sect. 3, we show the effectiveness of our method by exploring its experimental performance on both artificial and realistic directed network data. Finally, Sect. 4 summarizes the contribution presented in this paper and points out future research directions.

2 Jensen-Shannon Divergence Kernel for Directed Graphs

In this section, we start from the Jensen-Shannon divergence and explore how this similarity measure can be used to construct a graph kernel method for two directed graphs. In particular, the kernel can be computed in terms of the entropies of two individual graphs and the entropy of their composite graph. We then introduce the recently developed von Neumann entropy which quantifies the structural complexity of directed graphs. This in turn allows us to develop the mathematical expression for the Jensen-Shannon divergence kernel.

2.1 Jensen-Shannon Divergence

Generally speaking, the Jensen-Shannon divergence is a mutual information measure for assessing the similarity between two probability distributions. Mathematically, let \(M_{+}^1(X)\) be a set of probability distributions, where X is a set equipped with some \(\sigma \)-algebra of measurable subsets. Then the Jensen-Shannon divergence \(D_{JS}: M_{+}^1(X) \times M_{+}^1(X) \rightarrow [0,+\infty )\) between probability distributions P and Q is computed as:

\[ D_{JS}(P,Q) = \frac{1}{2} D_{KL}(P \Vert M) + \frac{1}{2} D_{KL}(Q \Vert M), \]

where \(D_{KL}\) denotes the classical Kullback-Leibler divergence and \(M = \frac{P+Q}{2}\). More generally, let \(\pi _p\) and \(\pi _q\) (\(\pi _p+\pi _q = 1\) and \(\pi _p,\pi _q\ge 0\)) be the weights of the probability distributions P and Q respectively. Then the generalization of the Jensen-Shannon divergence can be defined as:

\[ D_{JS}(P,Q) = H_S(\pi _p P + \pi _q Q) - \pi _p H_S(P) - \pi _q H_S(Q), \]

where \(H_S\) is used to denote the Shannon entropy of the probability distribution.
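As a minimal illustration of the weighted definition, the following sketch computes the Jensen-Shannon divergence between two discrete distributions as the Shannon entropy of their mixture minus the weighted entropies of the two distributions. The function names and the use of the natural logarithm are assumptions made for this example.

```python
import numpy as np

def shannon_entropy(p):
    """Shannon entropy (natural log) of a discrete distribution; 0*log(0) is treated as 0."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-(nz * np.log(nz)).sum())

def js_divergence(p, q, pi_p=0.5, pi_q=0.5):
    """Weighted Jensen-Shannon divergence: H(pi_p*P + pi_q*Q) - pi_p*H(P) - pi_q*H(Q)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mixture = pi_p * p + pi_q * q
    return shannon_entropy(mixture) - pi_p * shannon_entropy(p) - pi_q * shannon_entropy(q)

# Two distributions with disjoint support are maximally different.
d_disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])
# Identical distributions have zero divergence.
d_same = js_divergence([0.2, 0.8], [0.2, 0.8])
```

With equal weights, the divergence is symmetric in its two arguments and bounded above by ln 2, which is attained for distributions with disjoint support.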

To proceed, we introduce the Jensen-Shannon kernel method for undirected graphs developed by Bai and Hancock [1]. Given a pair of undirected graphs \(\mathcal {G}_1 = (\mathcal {V}_1, \mathcal {E}_1)\) and \(\mathcal {G}_2 = (\mathcal {V}_2, \mathcal {E}_2)\) where \(\mathcal {V}\) represents the vertex set and \(\mathcal {E}\) denotes the edge set of the graph, the Jensen-Shannon divergence between these two graphs is expressed by:

\[ D_{JS}(\mathcal {G}_1,\mathcal {G}_2) = H(\mathcal {G}_1 \oplus \mathcal {G}_2) - \frac{H(\mathcal {G}_1) + H(\mathcal {G}_2)}{2}, \]

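where \(\mathcal {G}_1 \oplus \mathcal {G}_2\) denotes the disjoint union graph of graphs \(\mathcal {G}_1\) and \(\mathcal {G}_2\) and \(H(\cdot )\) is the graph entropy. With this definition to hand and making use of the von Neumann entropy for undirected graphs [11], the Jensen-Shannon diffusion kernel \(k_{JS}: \mathcal {G} \times \mathcal {G} \rightarrow [0,+\infty )\) is then

\[ k_{JS}(\mathcal {G}_1,\mathcal {G}_2) = \exp \{-\lambda D_{JS}(\mathcal {G}_1,\mathcal {G}_2)\} = \exp \left\{ \lambda \frac{H_{VN}(\mathcal {G}_1) + H_{VN}(\mathcal {G}_2)}{2} - \lambda H_{VN}(\mathcal {G}_1 \oplus \mathcal {G}_2) \right\}, \]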

where \(H_{VN}\) is the undirected graph von Neumann entropy, and \(\lambda \) is a decay factor \(0<\lambda \le 1\). Clearly, the diffusion kernel is exclusively dependent on the individual entropies of the two graphs being compared as the union graph entropy can also be expressed in terms of those entropies.

2.2 von Neumann Entropy for Directed Graphs

Given a directed graph \(\mathcal {G} = (\mathcal {V}, \mathcal {E})\) consisting of a vertex set \(\mathcal {V}\) together with an edge set \(\mathcal {E}\subseteq \mathcal {V} \times \mathcal {V}\), its adjacency matrix A is defined as

\[ A_{uv} = \begin{cases} 1 & \text{if } (u,v)\in \mathcal {E} \\ 0 & \text{otherwise.} \end{cases} \]

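The in-degree and out-degree at vertex u are respectively given as

\[ d_u^{in} = \sum _{v\in \mathcal {V}} A_{vu}, \qquad d_u^{out} = \sum _{v\in \mathcal {V}} A_{uv}. \]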

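The transition matrix P of a graph is a matrix describing the transitions of a Markov chain on the graph. On a directed graph, P is given as

\[ P_{uv} = \begin{cases} \frac{1}{d_u^{out}} & \text{if } (u,v)\in \mathcal {E} \\ 0 & \text{otherwise.} \end{cases} \]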

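As stated in [12], the normalized Laplacian matrix of a directed graph can be defined as

\[ \tilde{L} = I - \frac{\varPhi ^{1/2} P \varPhi ^{-1/2} + \varPhi ^{-1/2} P^T \varPhi ^{1/2}}{2}, \]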

where I is the identity matrix and \(\varPhi =diag(\phi (1), \phi (2),\cdots )\) in which \(\phi \) represents the unique left eigenvector of P associated with eigenvalue 1, i.e., the Perron vector satisfying \(\phi P = \phi \). Clearly, the normalized Laplacian matrix is Hermitian, i.e., \(\tilde{L}=\tilde{L}^T\) where \(\tilde{L}^T\) denotes the conjugated transpose of \(\tilde{L}\).
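The construction can be sketched numerically as follows. This is a minimal sketch, assuming a strongly connected graph without self-loops, given as a dense 0/1 adjacency matrix, and assuming the form L = I - (Phi^{1/2} P Phi^{-1/2} + Phi^{-1/2} P^T Phi^{1/2})/2 for the normalized Laplacian of [12]. The function name `directed_normalized_laplacian` is introduced here for illustration only.

```python
import numpy as np

def directed_normalized_laplacian(A):
    """Return (L, P, phi) for a strongly connected directed graph with adjacency matrix A."""
    A = np.asarray(A, dtype=float)
    d_out = A.sum(axis=1)
    if np.any(d_out == 0):
        raise ValueError("every vertex needs at least one outgoing edge")
    P = A / d_out[:, None]                      # P_uv = 1/d_u^out on edges, 0 elsewhere

    # phi is the left eigenvector of P for eigenvalue 1, scaled to sum to 1.
    vals, vecs = np.linalg.eig(P.T)
    phi = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    phi = phi / phi.sum()
    if np.any(phi <= 0):
        raise ValueError("graph does not appear to be strongly connected")

    s = np.sqrt(phi)
    M = s[:, None] * P / s[None, :]             # Phi^{1/2} P Phi^{-1/2}
    L = np.eye(len(A)) - 0.5 * (M + M.T)        # M.T equals Phi^{-1/2} P^T Phi^{1/2}
    return L, P, phi

# Small example: edges 0->1, 0->2, 1->2, 2->0.
A = np.array([[0, 1, 1],
              [0, 0, 1],
              [1, 0, 0]])
L, P, phi = directed_normalized_laplacian(A)
```

For this example the stationary distribution is (0.4, 0.2, 0.4), and the resulting matrix is real and symmetric, as the text states.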

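Ye et al. [2] have shown that using the normalized Laplacian matrix to interpret the density matrix, the von Neumann entropy of directed graphs is the Shannon entropy associated with the normalized Laplacian eigenvalues, i.e.,

\[ H_{VN} = -\sum _{i=1}^{|\mathcal {V}|} \frac{\tilde{\lambda }_i}{|\mathcal {V}|} \ln \frac{\tilde{\lambda }_i}{|\mathcal {V}|}, \]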

where \(\tilde{\lambda }_i, i=1,\cdots ,|\mathcal {V}|\) are the eigenvalues of the normalized Laplacian matrix \(\tilde{L}\). Unfortunately, for large graphs this is not a viable proposition since the time required to solve the eigensystem is cubic in the number of vertices. To overcome this problem we extend the analysis of Han et al. [11] from undirected to directed graphs. To do this we again make use of the quadratic approximation to the Shannon entropy, \(-x\ln x \approx x(1-x)\), in order to obtain a simplified expression for the von Neumann entropy of a directed graph, which can be computed in a time that is quadratic in the number of vertices. Our starting point is the quadratic approximation to the von Neumann entropy in terms of the traces of the normalized Laplacian and the squared normalized Laplacian

\[ H_{VN} = \frac{Tr[\tilde{L}]}{|\mathcal {V}|} - \frac{Tr[\tilde{L}^2]}{|\mathcal {V}|^2}. \]
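To make the difference between the two quantities concrete, the sketch below evaluates both the eigenvalue-based entropy and its quadratic approximation Tr[L]/|V| - Tr[L^2]/|V|^2 for a given normalized Laplacian. It is a sketch under stated assumptions: the natural logarithm is assumed, zero eigenvalues contribute nothing, and the function names are hypothetical. The example uses a directed 4-cycle, for which the transition matrix is a permutation matrix and the stationary distribution is uniform, so the Laplacian can be written down directly.

```python
import numpy as np

def von_neumann_entropy_exact(L):
    """Shannon entropy of the eigenvalues of L scaled by 1/|V| (cubic-time eigensolve)."""
    n = L.shape[0]
    lam = np.linalg.eigvalsh(L) / n
    lam = lam[lam > 1e-12]                      # treat 0*log(0) as 0
    return float(-(lam * np.log(lam)).sum())

def von_neumann_entropy_quadratic(L):
    """Quadratic approximation Tr[L]/|V| - Tr[L^2]/|V|^2."""
    n = L.shape[0]
    return float(np.trace(L) / n - np.trace(L @ L) / n ** 2)

# Directed 4-cycle 0->1->2->3->0: P is a cyclic shift and phi is uniform,
# so the normalized Laplacian reduces to I - (P + P^T)/2.
P = np.roll(np.eye(4), 1, axis=1)
L_cycle = np.eye(4) - 0.5 * (P + P.T)

h_exact = von_neumann_entropy_exact(L_cycle)
h_quad = von_neumann_entropy_quadratic(L_cycle)
```

The two values differ (the approximation replaces the entropy by a quadratic function), but the quadratic form needs only traces and no eigendecomposition.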

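To simplify this expression a step further, we show the computation of the traces for the case of a directed graph. This is not a straightforward task, and requires that we distinguish between the in-degree and out-degree of vertices. We first consider Chung’s expression for the normalized Laplacian of directed graphs and write

\[ Tr[\tilde{L}] = Tr\left[ I - \frac{\varPhi ^{1/2} P \varPhi ^{-1/2} + \varPhi ^{-1/2} P^T \varPhi ^{1/2}}{2} \right] = |\mathcal {V}| - \frac{1}{2} Tr[\varPhi ^{1/2} P \varPhi ^{-1/2}] - \frac{1}{2} Tr[\varPhi ^{-1/2} P^T \varPhi ^{1/2}]. \]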

Since the matrix trace is invariant under cyclic permutations, we have

\[ Tr[\tilde{L}] = |\mathcal {V}| - \frac{1}{2} Tr[P] - \frac{1}{2} Tr[P^T]. \]

The diagonal elements of the transition matrix P are all zeros, hence we obtain

\[ Tr[\tilde{L}] = |\mathcal {V}|, \]

which is exactly the same as in the case of undirected graphs.

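Next we turn our attention to \(Tr[\tilde{L}^2]\). Expanding the square and again using the cyclic invariance of the trace together with \(Tr[P]=0\), we obtain

\[ Tr[\tilde{L}^2] = Tr\left[ I - \left( \varPhi ^{1/2} P \varPhi ^{-1/2} + \varPhi ^{-1/2} P^T \varPhi ^{1/2} \right) + \frac{1}{4} \left( \varPhi ^{1/2} P \varPhi ^{-1/2} + \varPhi ^{-1/2} P^T \varPhi ^{1/2} \right) ^2 \right] = |\mathcal {V}| + \frac{1}{2} \left( Tr[P^2] + Tr[P \varPhi ^{-1} P^T \varPhi ] \right) , \]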

which is different to the result obtained in the case of undirected graphs.
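The two trace results can be checked numerically on a small example. The sketch below assumes the form L = I - (Phi^{1/2} P Phi^{-1/2} + Phi^{-1/2} P^T Phi^{1/2})/2 for the normalized Laplacian and verifies that Tr[L] = |V| and Tr[L^2] = |V| + (Tr[P^2] + Tr[P Phi^{-1} P^T Phi])/2. The helper name `walk_matrices` and the example graph are illustrative assumptions; the graph is chosen to be strongly connected and to contain one bidirectional edge so that Tr[P^2] is nonzero.

```python
import numpy as np

def walk_matrices(A):
    """Transition matrix, stationary distribution and normalized Laplacian of a digraph."""
    A = np.asarray(A, dtype=float)
    P = A / A.sum(axis=1, keepdims=True)
    vals, vecs = np.linalg.eig(P.T)
    phi = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    phi = phi / phi.sum()                       # left eigenvector for eigenvalue 1
    s = np.sqrt(phi)
    M = s[:, None] * P / s[None, :]             # Phi^{1/2} P Phi^{-1/2}
    return P, phi, np.eye(len(A)) - 0.5 * (M + M.T)

# Edges: 0->1, 0->2, 1->2, 2->0, 2->3, 3->0 (0<->2 is bidirectional).
A = np.array([[0, 1, 1, 0],
              [0, 0, 1, 0],
              [1, 0, 0, 1],
              [1, 0, 0, 0]])
P, phi, L = walk_matrices(A)
n = len(A)

trace_L = np.trace(L)
trace_L2 = np.trace(L @ L)
# Right-hand side of the second identity.
rhs = n + 0.5 * (np.trace(P @ P) + np.trace(P @ np.diag(1.0 / phi) @ P.T @ np.diag(phi)))
```

Here `trace_L` equals the number of vertices and `trace_L2` agrees with `rhs` up to floating-point error.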

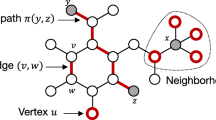

To continue the development we first partition the edge set \(\mathcal {E}\) of the graph \(\mathcal {G}\) into two disjoint subsets \(\mathcal {E}_1\) and \(\mathcal {E}_2\), where \(\mathcal {E}_1=\{(u,v)|(u,v)\in \mathcal {E}\) and \((v,u)\notin \mathcal {E}\}\) contains the unidirectional edges and \(\mathcal {E}_2=\{(u,v)|(u,v)\in \mathcal {E}\) and \((v,u)\in \mathcal {E}\}\) contains the bidirectional edges, so that \(\mathcal {E}_1\cup \mathcal {E}_2=\mathcal {E}\) and \(\mathcal {E}_1\cap \mathcal {E}_2=\emptyset \). Then according to the definition of the transition matrix, we find

\[ Tr[P^2] = \sum _{u\in \mathcal {V}} \sum _{v\in \mathcal {V}} P_{uv} P_{vu} = \sum _{(u,v)\in \mathcal {E}_2} \frac{1}{d_u^{out} d_v^{out}}. \]

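Using the fact that \(\varPhi =diag(\phi (1),\phi (2),\cdots )\) we have

\[ Tr[P \varPhi ^{-1} P^T \varPhi ] = \sum _{u\in \mathcal {V}} \sum _{v\in \mathcal {V}} P_{uv}^2 \frac{\phi (u)}{\phi (v)} = \sum _{(u,v)\in \mathcal {E}} \frac{\phi (u)}{\phi (v) (d_u^{out})^2}. \]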

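Then, approximating the ratio \(\phi (u)/\phi (v)\) by the in-degree ratio \(d_u^{in}/d_v^{in}\) as in [2], we can approximate the von Neumann entropy of a directed graph in terms of the in-degree and out-degree of the vertices as follows

\[ H_{VN} = 1 - \frac{1}{|\mathcal {V}|} - \frac{1}{2|\mathcal {V}|^2} \left\{ \sum _{(u,v)\in \mathcal {E}} \frac{d_u^{in}}{d_v^{in} (d_u^{out})^2} + \sum _{(u,v)\in \mathcal {E}_2} \frac{1}{d_u^{out} d_v^{out}} \right\} \]

or, equivalently,

\[ H_{VN} = 1 - \frac{1}{|\mathcal {V}|} - \frac{1}{2|\mathcal {V}|^2} \left\{ \sum _{(u,v)\in \mathcal {E}} \left( \frac{d_u^{in}}{d_v^{in} (d_u^{out})^2} + \frac{1}{d_u^{out} d_v^{out}} \right) - \sum _{(u,v)\in \mathcal {E}_1} \frac{1}{d_u^{out} d_v^{out}} \right\} . \]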

Clearly, both entropy approximations contain two terms. The first is the graph size while the second one depends on the in-degree and out-degree statistics of each pair of vertices connected by an edge. Moreover, the computational complexity of these expressions is quadratic in the graph size.
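A degree-based approximation of this kind can be sketched in a few lines. The code below assumes the form H = 1 - 1/|V| - (1/(2|V|^2)) * [sum over edges (u,v) of d_u^in / (d_v^in (d_u^out)^2) + sum over bidirectional edges of 1/(d_u^out d_v^out)], following the approximation reported in [2], and assumes a graph without self-loops given as a dense 0/1 adjacency matrix. The function name is hypothetical.

```python
import numpy as np

def approx_von_neumann_entropy(A):
    """Degree-based approximate von Neumann entropy of a directed graph (no self-loops)."""
    A = np.asarray(A) != 0
    n = A.shape[0]
    d_out = A.sum(axis=1).astype(float)         # row sums: out-degrees
    d_in = A.sum(axis=0).astype(float)          # column sums: in-degrees
    total = 0.0
    for u, v in zip(*np.nonzero(A)):            # every directed edge (u, v)
        total += d_in[u] / (d_in[v] * d_out[u] ** 2)
        if A[v, u]:                             # edge is bidirectional
            total += 1.0 / (d_out[u] * d_out[v])
    return 1.0 - 1.0 / n - total / (2.0 * n ** 2)

# Directed 4-cycle: every edge contributes 1 and no edge is bidirectional.
cycle4 = np.roll(np.eye(4, dtype=int), 1, axis=1)
h_cycle = approx_von_neumann_entropy(cycle4)

# Two vertices joined in both directions.
pair = np.array([[0, 1], [1, 0]])
h_pair = approx_von_neumann_entropy(pair)
```

Scanning the adjacency matrix for edges costs time quadratic in the number of vertices, in line with the complexity stated above; the loop itself visits each edge once.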

2.3 Jensen-Shannon Divergence Kernel for Directed Graphs

It is interesting to note that the von Neumann entropy is related to the sum of the entropy contribution from each directed edge, and this allows us to compute a local entropy measure for edge \((u,v)\in \mathcal {E}\),

If this edge is bidirectional, i.e., \((u,v)\in \mathcal {E}_2\), then we add an additional quantity to \(I_{uv}\),

Clearly, the local entropy measure represents the entropy associated with each directed edge. More importantly, it avoids the bias caused by graph size: a directed edge is characterized by the in-degree and out-degree statistics of its end vertices, and not by the number of vertices or edges in the graph.

In our analysis, since we are measuring the similarity between the structures of two graphs, the vertex and edge information plays a significant role in the comparison. As a result, we use the following measure for quantifying the complexity of a directed graph, which is affected by the vertex and edge information,

We let \(\mathcal {G}_1 \oplus \mathcal {G}_2 = \{\mathcal {V}_1 \bigcup \mathcal {V}_2, \mathcal {E}_1 \bigcup \mathcal {E}_2\}\), i.e., the disjoint union graph (or composite graph) of \(\mathcal {G}_1\) and \(\mathcal {G}_2\). Then, we compute the Jensen-Shannon divergence between \(\mathcal {G}_1\) and \(\mathcal {G}_2\) as

As a consequence, we finally have the Jensen-Shannon diffusion kernel

The decay factor \(\lambda \) is set to 1 for simplicity. Clearly, since the Jensen-Shannon divergence is a dissimilarity measure and is symmetric, the diffusion kernel associated with the divergence is positive definite. Moreover, for a pair of graphs with N vertices, the computational complexity of the kernel is \(O(N^2)\).
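The overall computation can be sketched as follows, assuming the divergence takes the same form as in the undirected case, i.e., the entropy of the disjoint union minus the mean entropy of the two graphs, and that the kernel is exp(-lambda * divergence). The entropy is passed in as a callable so that any graph complexity measure can be plugged in; the degree-based function used in the example is only a stand-in and is an assumption of this sketch, as are all function names.

```python
import numpy as np

def disjoint_union(A1, A2):
    """Adjacency matrix of the disjoint union: a block-diagonal matrix."""
    n1, n2 = len(A1), len(A2)
    U = np.zeros((n1 + n2, n1 + n2))
    U[:n1, :n1] = A1
    U[n1:, n1:] = A2
    return U

def js_diffusion_kernel(A1, A2, entropy, lam=1.0):
    """exp(-lam * D_JS), with D_JS = H(G1 + G2) - (H(G1) + H(G2)) / 2."""
    d_js = entropy(disjoint_union(A1, A2)) - 0.5 * (entropy(A1) + entropy(A2))
    return float(np.exp(-lam * d_js))

def degree_entropy(A):
    """Stand-in entropy: degree-based approximation of the von Neumann entropy."""
    A = np.asarray(A) != 0
    n = A.shape[0]
    d_out, d_in = A.sum(axis=1).astype(float), A.sum(axis=0).astype(float)
    total = 0.0
    for u, v in zip(*np.nonzero(A)):
        total += d_in[u] / (d_in[v] * d_out[u] ** 2)
        if A[v, u]:
            total += 1.0 / (d_out[u] * d_out[v])
    return 1.0 - 1.0 / n - total / (2.0 * n ** 2)

# Example: directed 3-cycle versus directed 4-cycle.
g3 = np.roll(np.eye(3), 1, axis=1)
g4 = np.roll(np.eye(4), 1, axis=1)
k34 = js_diffusion_kernel(g3, g4, degree_entropy)
k43 = js_diffusion_kernel(g4, g3, degree_entropy)
```

Because the degree sequences of a disjoint union are just the concatenation of the degree sequences of its parts, the union never needs to be materialized in an optimized implementation; it is built explicitly here for clarity.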

3 Experiments

In this section, we evaluate the experimental performance of the proposed directed graph Jensen-Shannon divergence kernel. Specifically, we first explore the graph classification performance of our method on a set of random graphs generated from different models. Then we apply our method to the real-world data, namely the NYSE stock market networks, in order to explore whether the kernel method can be used to analyze the complex data effectively.

3.1 Datasets

We commence by giving a brief overview of the datasets used for experiments in this paper. We use two different datasets: the first consists of synthetically generated graphs, while the second is extracted from a real-world financial system.

Random Directed Graph Dataset. Contains a large number of directed graphs which are randomly generated according to two different directed random graph models, namely (a) the classical Erdős-Rényi model and (b) the Barabási-Albert model [13]. The different directed graphs in the database are created using a variety of model parameters, e.g., the graph size and the connection probability in the Erdős-Rényi model and the number of added connections at each time step in the Barabási-Albert model.

NYSE Stock Market Network Dataset. Is extracted from a database consisting of the daily prices of 3799 stocks traded on the New York Stock Exchange (NYSE). In our analysis we employ the correlation-based network to represent the structure of the stock market since many meaningful economic insights can be extracted from the stock correlation matrices [14]. To construct the dynamic network, 347 stocks that have historical data from January 1986 to February 2011 are selected. Then, we use a time window of 20 days (i.e., 4 weeks) and move this window along time to obtain a sequence (from day 20 to day 6004) in which each temporal window contains a time-series of the daily return stock values over a 20-day period. We represent trades between different stocks as a network. For each time window, we compute the cross-correlation coefficients between the time-series for each pair of stocks, and create connections between them if the maximum absolute value of the correlation coefficient is among the highest 5 % of the total cross correlation coefficients. This yields a time-varying stock market network with a fixed number of 347 vertices and varying edge structure for each of 5976 trading days.
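One way to build such a network for a single time window is sketched below. The text does not spell out how edge direction is assigned, so this sketch makes an explicit assumption: an edge u -> v is scored by the largest absolute correlation between the returns of u and the returns of v shifted forward by 1 to `max_lag` days, and the edges with scores in the top 5% are kept. The function name, the `max_lag` parameter, and the direction rule are all hypothetical choices for illustration, not the procedure used to build the dataset.

```python
import numpy as np

def lagged_correlation_network(returns, max_lag=3, top_fraction=0.05):
    """Directed 0/1 adjacency matrix from a (days x stocks) window of returns.

    Assumes no series is constant within the window (nonzero standard deviation).
    """
    T, n = returns.shape
    score = np.zeros((n, n))
    for lag in range(1, max_lag + 1):
        lead = returns[:T - lag]                # x_u[t]
        follow = returns[lag:]                  # x_v[t + lag]
        a = (lead - lead.mean(axis=0)) / lead.std(axis=0)
        b = (follow - follow.mean(axis=0)) / follow.std(axis=0)
        corr = np.abs(a.T @ b) / (T - lag)      # |corr(x_u[t], x_v[t + lag])|
        score = np.maximum(score, corr)         # keep the maximum over lags
    off_diagonal = ~np.eye(n, dtype=bool)
    threshold = np.quantile(score[off_diagonal], 1.0 - top_fraction)
    return ((score >= threshold) & off_diagonal).astype(int)

# Synthetic stand-in for one 20-day window of 10 stocks.
rng = np.random.default_rng(0)
window = rng.normal(size=(20, 10))
A_window = lagged_correlation_network(window)
```

Sliding the window one day at a time and repeating the construction would give one adjacency matrix per day, i.e., a time-varying network on a fixed vertex set.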

3.2 Graph Classification

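To investigate the classification performance of our proposed kernel method, we first apply it to the Random Directed Graph Dataset. Given a dataset consisting of N graphs, we construct an \(N \times N\) kernel matrix

\[ K = \begin{pmatrix} k_{11} & k_{12} & \cdots & k_{1N} \\ k_{21} & k_{22} & \cdots & k_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ k_{N1} & k_{N2} & \cdots & k_{NN} \end{pmatrix} \]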

in which \(k_{ij}, \;i,j = 1,2,\cdots ,N\), denotes the graph kernel value between graphs \(\mathcal {G}_i\) and \(\mathcal {G}_j\). In our case, we have \(k_{ij} = k_{JS}(\mathcal {G}_i,\mathcal {G}_j)\). With the kernel matrix to hand, we then perform the kernel principal component analysis (kernel PCA) [15] in order to extract the most important information contained in the matrix and embed the data to a low-dimensional principal component space.
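The embedding step can be sketched with plain NumPy: the kernel matrix is double-centered, its leading eigenvectors are extracted, and each eigenvector is scaled by the square root of its eigenvalue. This is a minimal sketch of standard kernel PCA on a precomputed kernel matrix; the function name is an assumption, and the example kernel matrix is a synthetic linear kernel rather than one computed from graphs.

```python
import numpy as np

def kernel_pca(K, n_components=2):
    """Embed N items into n_components dimensions from an N x N kernel matrix."""
    K = np.asarray(K, dtype=float)
    N = K.shape[0]
    J = np.eye(N) - np.ones((N, N)) / N         # centering matrix
    Kc = J @ K @ J                              # kernel of the centered features
    Kc = 0.5 * (Kc + Kc.T)                      # remove numerical asymmetry
    vals, vecs = np.linalg.eigh(Kc)             # eigenvalues in ascending order
    order = np.argsort(vals)[::-1][:n_components]
    vals, vecs = vals[order], vecs[:, order]
    return vecs * np.sqrt(np.clip(vals, 0.0, None))

# Synthetic check: a linear kernel on 2-D points is recovered exactly by 2 components.
rng = np.random.default_rng(1)
X = rng.normal(size=(6, 2))
K = X @ X.T
Y = kernel_pca(K, n_components=2)
```

Each row of `Y` gives the coordinates of one item in the principal component space, which is what is plotted when visualizing a kernel matrix.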

Figure 1 gives two kernel PCA plots of kernel matrix computed from (a) Jensen-Shannon divergence kernel and (b) shortest-path kernel [7]. In particular, the kernels are computed from 600 graphs that belong to six groups (100 graphs in each group): (a) Erdős-Rényi (ER) graphs with \(n=30\) vertices and connection probability \(p=0.3\); (b) Barabási-Albert (BA) graphs with \(n=30\) vertices and average degree \(\bar{k}=9\); (c) ER graphs with \(n=100\) and \(p=0.3\); (d) BA graphs with \(n=100\) and \(\bar{k}=30\); (e) ER graphs with \(n=30\) and \(p=0.6\); and (f) BA graphs with \(n=30\) and \(\bar{k}=18\). From the left-hand panel, the six groups are clearly separated very well, implying that the Jensen-Shannon divergence kernel is effective in comparing not only the structural properties of directed graphs that belong to different classes, but also the structure difference between graphs generated from the same model. However, the panel on the right-hand side suggests that the shortest-path kernel cannot efficiently distinguish the difference between graphs of the six groups since the graphs cannot be clearly separated.

To better evaluate the properties of the proposed kernel method, we proceed to compare the classification results of the Jensen-Shannon divergence kernels developed for directed and undirected graphs. To this end, we employ the undirected Jensen-Shannon divergence kernel, repeat the above analysis on the undirected version of the graphs in the Random Directed Graph Dataset, and report the result in Fig. 2. The undirected kernel method does not separate different groups of graphs well as there are a large number of data points overlapping in the kernel principal component space. Moreover, the BA graphs represented by yellow stars and the ER graphs represented by cyan squares (\(n=30\) and \(\bar{k}=18\)) are completely mixed. This comparison clearly indicates that for directed and undirected random graphs that are generated using the same model and parameters, the directed graph kernel method is more effective in classifying them than its undirected analogue.

3.3 Financial Data Analysis

We turn our attention to the financial data, and explore whether the proposed kernel method can be used as an efficient tool for visualizing and studying the time evolution of the financial networks. To commence, we select two financial crisis time series from the data, namely (a) Black Monday (from day 80 to day 120) and (b) the September 11 attacks (from day 3600 to day 3650), and construct the corresponding kernel matrix for each. Then, in Fig. 3 we show the path of the financial network in the PCA space during each of the selected financial crises. The number beside each data point represents the day number in the time-series. From the left-hand panel we observe that before Black Monday, the network structure remains relatively stable. However, during Black Monday (day 117), the network experiences a considerable change in structure since the graph Jensen-Shannon divergence kernel changes significantly. After the crisis, the network structure returns to its normal state. A similar observation can also be made concerning the September 11 attacks, shown in the right-hand panel. The stock network again undergoes a significant structural change, which is also followed by a quick recovery.

4 Conclusion

In this paper, we present a novel kernel method for comparing the structures of a pair of directed graphs. In essence, this kernel is based on the Jensen-Shannon divergence between two probability distributions associated with graphs, which are represented by the recently developed von Neumann entropy for directed graphs. Specifically, the entropy approximation formula allows the entropy to be expressed in terms of a sum over all edge-based entropies of a directed graph, which in turn allows the kernel to be computed from the edge entropy sum of two individual graphs and their disjoint union graph. In the experiments, we have evaluated the effectiveness of our kernel method in terms of classifying structures that belong to various classes and analyzing time-varying realistic networks. In the future, it would be interesting to see what features the Jensen-Shannon divergence kernel reveals in additional domains, such as human functional magnetic resonance imaging data. Another interesting line of investigation would be to explore whether the kernel method can be extended to the domains of edge-weighted graphs, labeled graphs and hypergraphs.

References

1. Bai, L., Hancock, E.R.: Graph kernels from the Jensen-Shannon divergence. J. Math. Imaging Vis. 47(1–2), 60–69 (2013)

2. Ye, C., Wilson, R.C., Comin, C.H., Costa, L.D.F., Hancock, E.R.: Approximate von Neumann entropy for directed graphs. Phys. Rev. E 89, 052804 (2014)

3. Cuturi, M., Fukumizu, K., Vert, J.P.: Semigroup kernels on measures. J. Mach. Learn. Res. 6, 1169–1198 (2005)

4. Martins, A.F.T., Smith, N.A., Xing, E.P., Aguiar, P.M.Q., Figueiredo, M.A.T.: Nonextensive information theoretic kernels on measures. J. Mach. Learn. Res. 10, 935–975 (2009)

5. Vishwanathan, S.V.N., Schraudolph, N.N., Kondor, R., Borgwardt, K.M.: Graph kernels. J. Mach. Learn. Res. 11, 1201–1242 (2010)

6. Kashima, H., Tsuda, K., Inokuchi, A.: Marginalized kernels between labeled graphs. In: Proceedings of the 20th International Conference on Machine Learning, pp. 321–328 (2003)

7. Borgwardt, K.M., Kriegel, H.P.: Shortest-path kernels on graphs. In: Proceedings of the 5th IEEE International Conference on Data Mining, pp. 74–81 (2005)

8. Aziz, F., Wilson, R.C., Hancock, E.R.: Backtrackless walks on a graph. IEEE Trans. Neural Netw. Learn. Syst. 24(6), 977–989 (2013)

9. Berwanger, D., Grädel, E., Kaiser, L., Rabinovich, R.: Entanglement and the complexity of directed graphs. Theor. Comput. Sci. 463, 2–25 (2012)

10. Escolano, F., Hancock, E.R., Lozano, M.A.: Heat diffusion: thermodynamic depth complexity of networks. Phys. Rev. E 85, 036206 (2012)

11. Han, L., Escolano, F., Hancock, E.R., Wilson, R.C.: Graph characterizations from von Neumann entropy. Pattern Recogn. Lett. 33, 1958–1967 (2012)

12. Chung, F.: Laplacians and the Cheeger inequality for directed graphs. Ann. Comb. 9, 1–19 (2005)

13. Barabási, A.L., Albert, R.: Emergence of scaling in random networks. Science 286, 509–512 (1999)

14. Battiston, S., Caldarelli, G.: Systemic risk in financial networks. J. Financ. Manag. Mark. Inst. 1, 129–154 (2013)

15. Schölkopf, B., Smola, A., Müller, K.R.: Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput. 10, 1299–1319 (1998)

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Ye, C., Wilson, R.C., Hancock, E.R. (2016). A Jensen-Shannon Divergence Kernel for Directed Graphs. In: Robles-Kelly, A., Loog, M., Biggio, B., Escolano, F., Wilson, R. (eds) Structural, Syntactic, and Statistical Pattern Recognition. S+SSPR 2016. Lecture Notes in Computer Science(), vol 10029. Springer, Cham. https://doi.org/10.1007/978-3-319-49055-7_18

DOI: https://doi.org/10.1007/978-3-319-49055-7_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-49054-0

Online ISBN: 978-3-319-49055-7

eBook Packages: Computer Science, Computer Science (R0)