Abstract

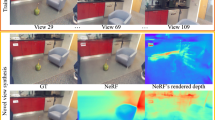

Providing omnidirectional depth along with RGB information is important for numerous applications. However, because omnidirectional RGB-D data is not always available, synthesizing RGB-D panorama data from limited information about a scene can be useful. Prior works have tried to synthesize RGB panorama images from perspective RGB images; however, they suffer from limited image quality and cannot be directly extended to RGB-D panorama synthesis. In this paper, we study a new problem: RGB-D panorama synthesis under various configurations of cameras and depth sensors. Accordingly, we propose a novel bi-modal (RGB-D) panorama synthesis (BIPS) framework. In particular, we focus on indoor environments, where an RGB-D panorama can provide a complete 3D model for many applications. We design a generator that fuses the bi-modal information and train it via residual depth-aided adversarial learning (RDAL). RDAL enables the synthesis of realistic indoor layout structures and interiors by jointly inferring the RGB panorama, layout depth, and residual depth. In addition, as there is no evaluation metric tailored to RGB-D panorama synthesis, we propose a novel metric (FAED) to effectively evaluate its perceptual quality. Extensive experiments show that our method synthesizes high-quality indoor RGB-D panoramas and provides more realistic 3D indoor models than prior methods. Code is available at https://github.com/chang9711/BIPS.
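The relationship between layout depth and residual depth can be illustrated with a toy example. The sketch below is not taken from the BIPS code; it assumes that residual depth is defined as the per-pixel difference between the full scene depth and the depth of the empty room layout, so that the two add back up to the full depth. The names `DepthMap`, `residual_depth`, and `compose_depth` are hypothetical and chosen only for illustration.

```python
from typing import List

# Hypothetical representation: rows of per-pixel depth values in metres.
DepthMap = List[List[float]]


def residual_depth(total: DepthMap, layout: DepthMap) -> DepthMap:
    """Per-pixel residual, assuming residual = total depth - layout depth."""
    return [
        [t - l for t, l in zip(t_row, l_row)]
        for t_row, l_row in zip(total, layout)
    ]


def compose_depth(layout: DepthMap, residual: DepthMap) -> DepthMap:
    """Recover the full depth map by adding the residual back onto the layout."""
    return [
        [l + r for l, r in zip(l_row, r_row)]
        for l_row, r_row in zip(layout, residual)
    ]


# Toy 2x3 "panorama": walls 4 m from the camera everywhere,
# with a piece of furniture 1.5 m away covering one pixel.
layout = [[4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]
total = [[4.0, 1.5, 4.0], [4.0, 4.0, 4.0]]

residual = residual_depth(total, layout)
reconstructed = compose_depth(layout, residual)
```

Under this assumed definition, the residual is zero wherever the room structure itself is visible and nonzero only where interior objects occlude it, which is one way to read why the abstract separates layout structure from interiors.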

C. Oh, W. Cho, Y. Chae and D. Park: equal contribution.

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF2022R1A2B5B03002636). This research was conducted while Wonjune Cho and Lin Wang were with KAIST.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Oh, C., Cho, W., Chae, Y., Park, D., Wang, L., Yoon, KJ. (2022). BIPS: Bi-modal Indoor Panorama Synthesis via Residual Depth-Aided Adversarial Learning. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13676. Springer, Cham. https://doi.org/10.1007/978-3-031-19787-1_20

DOI: https://doi.org/10.1007/978-3-031-19787-1_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19786-4

Online ISBN: 978-3-031-19787-1

eBook Packages: Computer Science (R0)