Abstract

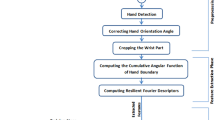

Automated translation from the sign languages used by hearing-impaired people worldwide is an important but still unresolved task for ensuring universal communication in society. In this paper, we propose an original approach to recognizing gestures of Russian Sign Language based on the combined use of the linguistic Hamburg Notation System (HamNoSys) and the OpenPose library for tracking human movements. Our software, built on a specially constructed and trained artificial neural network (ANN) model, recognizes the two main components commonly identified in gestures: handshape and location (hand orientation, movement, and the non-manual component are not yet considered). The recognition accuracy obtained in the experimental validation was 100% for adult signers and about 76% for children. Details of the software architecture and of the image recognition process using skeletal data are provided.
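The abstract does not specify how the OpenPose skeletal data are prepared before they reach the ANN. As a minimal sketch, assuming OpenPose's 21-point hand model (index 0 = wrist, 9 = middle-finger MCP joint) and BODY_25 body model (0 = nose, 1 = neck, 8 = mid-hip) in 2D image coordinates, the Python below shows one plausible preprocessing step for each recognized component: a position- and scale-invariant handshape feature vector, and a coarse body-relative location. The zone names, the thresholds, and the nearest-prototype classifier (used here as a stand-in for the trained ANN) are hypothetical illustrations, not the authors' implementation; real HamNoSys location symbols are considerably finer-grained.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# OpenPose hand model: 21 keypoints; 0 = wrist, 9 = middle-finger MCP joint.
WRIST, MIDDLE_MCP = 0, 9
# OpenPose BODY_25 indices used here: 0 = nose, 1 = neck, 8 = mid-hip.
NOSE, NECK, MID_HIP = 0, 1, 8


def normalize_hand(hand: List[Point]) -> List[float]:
    """Translate keypoints so the wrist is the origin and divide by the
    wrist-to-middle-MCP distance, so features do not depend on where the
    hand is in the frame or how large it appears."""
    if len(hand) != 21:
        raise ValueError("expected 21 hand keypoints")
    wx, wy = hand[WRIST]
    mx, my = hand[MIDDLE_MCP]
    scale = math.hypot(mx - wx, my - wy)
    if scale == 0:
        raise ValueError("degenerate hand: wrist and middle MCP coincide")
    features: List[float] = []
    for x, y in hand:
        features.extend(((x - wx) / scale, (y - wy) / scale))
    return features


def nearest_handshape(features: List[float],
                      prototypes: Dict[str, List[float]]) -> str:
    """Hypothetical stand-in for the ANN: return the label of the
    prototype feature vector closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(features, prototypes[label]))


def coarse_location(wrist: Point, body: Dict[int, Point]) -> str:
    """Map the wrist to a hypothetical vertical zone using body landmarks.
    Image y grows downwards, as in OpenPose output."""
    y = wrist[1]
    nose_y, neck_y, hip_y = body[NOSE][1], body[NECK][1], body[MID_HIP][1]
    # Rough top of the head: one nose-to-neck distance above the nose.
    if y < nose_y - (neck_y - nose_y):
        return "above_head"
    if y < neck_y:
        return "face"
    if y < hip_y:
        return "chest"
    return "below_waist"
```

Normalizing relative to the wrist keeps the handshape features independent of where the signer stands and how close they are to the camera; in a trained system, such vectors would be fed to a learned classifier rather than compared with fixed prototypes.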




Acknowledgment

The reported study was funded by RFBR and DST under research project No. 19-57-45006.


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Prikhodko, A., Grif, M., Bakaev, M. (2020). Sign Language Recognition Based on Notations and Neural Networks. In: Alexandrov, D.A., Boukhanovsky, A.V., Chugunov, A.V., Kabanov, Y., Koltsova, O., Musabirov, I. (eds) Digital Transformation and Global Society. DTGS 2020. Communications in Computer and Information Science, vol 1242. Springer, Cham. https://doi.org/10.1007/978-3-030-65218-0_34


DOI: https://doi.org/10.1007/978-3-030-65218-0_34

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-65217-3

Online ISBN: 978-3-030-65218-0

eBook Packages: Computer Science, Computer Science (R0)