Abstract

Epileptic focus localization is critical to the success of surgical resection of epileptogenic tissue. The key challenge in focus localization is the accurate classification of focal and non-focal intracranial electroencephalogram (iEEG) signals. In this paper, we introduce a new method based on the short-time Fourier transform (STFT) and convolutional neural networks (CNN) to improve the classification accuracy. More specifically, STFT is employed to obtain the time-frequency spectrograms of iEEG signals, from which a CNN extracts features and performs classification. The time-frequency spectrograms are normalized with Z-score normalization before being fed into the network. Experimental results show that our method differentiates focal from non-focal iEEG signals with an average classification accuracy of 91.8%.

1 Introduction

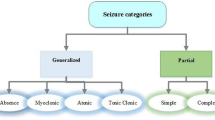

According to the World Health Organization, epilepsy is one of the most common neurological diseases globally, affecting approximately 50 million people worldwide [1]. Epilepsy is a chronic disorder of the brain that results from excessive electrical discharges in a group of brain cells. Different parts of the brain can be the site of such discharges [1]. Recent studies have shown that up to 70% of patients can be successfully treated with anti-epileptic drugs (AEDs) [1]. For patients with drug-resistant focal epilepsy, resection of epileptogenic tissue is one of the most promising treatments for controlling epileptic seizures. Hence, it is very important to determine the seizure focus before surgical therapy. Because focal iEEG signals are more stationary and less random than non-focal iEEG signals, iEEG can be used to identify the location of the focus [2].

Because symptoms are not certain to be present in the EEG signal at all times, interictal iEEG from epilepsy patients is monitored or recorded over the long term. This process generates such large amounts of data that visual inspection of the iEEG recordings by neurological experts is time-consuming, which can delay the patient’s course of treatment by hours or even weeks. Therefore, many methods for automatic detection, such as the classification of normal, interictal and seizure signals [3], have been proposed to assist neurologists by accelerating the reading process and thereby reducing workload. For drug-resistant focal epilepsy, automatic localization of the seizure focus from the interictal iEEG signal is required. In order to determine the epileptic seizure focus from the iEEG signals, it is essential to extract the most discriminative features and then classify them as focal or non-focal.

For feature extraction, commonly used methods include entropy, empirical mode decomposition (EMD) [4] and time-frequency analysis such as the Fourier transform and wavelet transforms (WT) [5]. In particular, time-frequency features extracted with STFT have been shown to be suitable for classifying EEG signals in epilepsy [6]. For classification, automatic classification of EEG by machine learning techniques has become popular in recent years, including support vector machines (SVM) [4], the K-nearest-neighbor method (KNN) [7] and deep learning methods such as CNNs and recurrent neural networks (RNN) [8]. Several computer-aided solutions based on deep learning that use the raw iEEG time-series signal as input have been proposed to localize the seizure focus in epilepsy [9]. However, the performance of the combination of the time-frequency domain and CNN has not been widely tested for this task.

In this paper, inspired by the success of CNNs on raw EEG time-series signals [9], we propose a deep learning approach for the classification of focal and non-focal iEEG signals that combines time-frequency analysis and a CNN: simple features are extracted from the time-frequency domain with STFT, discriminative features are learned with convolutional layers, and classification is performed with a fully connected layer.

2 Dataset

The iEEG signals used in this study are obtained from the publicly available Bern-Barcelona iEEG dataset provided by Andrzejak et al. at the Department of Neurology of the University of Bern [2]. The signals were collected from five epilepsy patients who underwent long-term intracranial EEG recordings. All patients suffered from long-standing drug-resistant temporal lobe epilepsy and were candidates for surgery.

Signals recorded at epileptogenic zones were labeled as focal signals; all other signals were labeled as non-focal. The dataset consists of 3750 pairs of focal iEEG signals and 3750 pairs of non-focal iEEG signals. Each pair contains signals recorded from adjacent channels, with a duration of 20 s and a sampling frequency of 512 Hz. To ensure that no seizure activity is included, the iEEG signals recorded during seizures and within three hours after the last seizure were excluded.

Examples of a pair of focal and a pair of non-focal iEEG signals are shown in Fig. 1.

3 Method

The method is divided into two key parts. First, STFT and Z-score normalization are applied successively to preprocess the iEEG signal and obtain feature arrays in the time-frequency domain. Then the feature arrays are fed into the CNN to classify the iEEG signal as focal or non-focal.

The proposed method for classifying iEEG signals into focal and non-focal is shown in Fig. 2.

3.1 Short Time Fourier Transform (STFT)

Because the iEEG signal is non-stationary, it is difficult to extract the key features with the Fourier transform alone [10]; STFT, one of the most commonly used time-frequency analysis methods, is therefore applied. First, the iEEG signal is transformed into a time-frequency spectrogram by STFT, and then the spectrogram is converted into a 2D array that is input to the CNN for training or testing.

For STFT, the process is to divide a longer time signal into shorter segments of equal length and then use the Fourier transform to compute the Fourier spectrum of each shorter segment.

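Given a signal x(t), its time-frequency representation at each time point is obtained by formula (1):

\[ X(\tau ,\omega ) = \int_{-\infty }^{\infty } x(t)\, w(t-\tau )\, e^{-j\omega t}\, dt \qquad (1) \]

where w(t) is the Hann window function centered around zero, \(\tau \) is the time position of the window and \(\omega \) is the angular frequency.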

Examples of spectrograms of iEEG signals are shown in Fig. 3.
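As an illustration of the segment-and-transform procedure described above, the following is a minimal NumPy sketch, not the authors' implementation. The window length (512 samples) and hop size (102 samples) are assumptions chosen only because they reproduce the 257 \(\times \) 101 spectrogram size reported later for a 20 s signal sampled at 512 Hz; the paper does not state these parameters. The test signal is a synthetic 10 Hz tone rather than iEEG data.

```python
import numpy as np

FS = 512        # sampling frequency in Hz (from the dataset description)
DURATION = 20   # signal length in seconds (from the dataset description)
NPERSEG = 512   # ASSUMED window length: 512 samples give 257 frequency bins
HOP = 102       # ASSUMED hop size: gives 101 frames for 10240 samples


def stft_magnitude(x, nperseg=NPERSEG, hop=HOP):
    """Return the magnitude spectrogram of x with shape (freq_bins, frames)."""
    window = np.hanning(nperseg)                      # Hann window w(t)
    padded = np.pad(x, nperseg // 2, mode="reflect")  # centre the first frame on t = 0
    n_frames = 1 + (len(padded) - nperseg) // hop
    # Cut the signal into equal-length, overlapping, windowed segments
    frames = np.stack(
        [padded[i * hop:i * hop + nperseg] * window for i in range(n_frames)],
        axis=1,
    )
    # One-sided Fourier spectrum of every segment (the input is real-valued)
    return np.abs(np.fft.rfft(frames, axis=0))


t = np.arange(FS * DURATION) / FS
signal = np.sin(2 * np.pi * 10.0 * t)   # synthetic 10 Hz tone, not iEEG data
spectrogram = stft_magnitude(signal)
peak_bin = int(spectrogram.mean(axis=1).argmax())
print(spectrogram.shape, peak_bin * FS / NPERSEG)
```

With a 512-sample window at 512 Hz, each frequency bin is 1 Hz wide, so the strongest bin of the synthetic tone can be read directly as a frequency in Hz.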

3.2 Z-Score Normalization

Before being fed into the neural network, the data are normalized to improve the accuracy of the network and increase convergence speed. Z-score normalization is employed in this study based on the mean and standard deviation of the original spectrogram array.

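The Z-score normalization is defined as:

\[ z = \frac{x - \bar{x}}{S} \qquad (2) \]

where x is an element of the original data, z is the corresponding normalized value, \(\bar{x}\) is the mean of the dataset and S is the standard deviation of the dataset.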
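A minimal sketch of this normalization in NumPy follows. It assumes that the mean and standard deviation are computed per spectrogram array and that the population standard deviation is used; the text does not settle either point. The small `eps` term is a hypothetical safeguard against division by zero, and the input is random stand-in data.

```python
import numpy as np


def zscore(spectrogram, eps=1e-12):
    """Normalize one spectrogram array to zero mean and unit standard deviation."""
    mean = spectrogram.mean()
    std = spectrogram.std()   # population standard deviation (ddof=0), an ASSUMPTION
    return (spectrogram - mean) / (std + eps)


rng = np.random.default_rng(0)
example = rng.gamma(shape=2.0, scale=3.0, size=(257, 101))  # stand-in for a spectrogram
normalized = zscore(example)
print(normalized.mean(), normalized.std())
```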

3.3 Convolutional Neural Network (CNN)

The CNN architecture contains three different types of layers: convolutional layers, pooling layers, and fully connected layers.

Convolutional Layer: The preprocessed STFT data are used as input to the convolutional layers. In a convolutional layer, the input time-frequency spectrogram is convolved with a learnable filter (kernel), which is a matrix, and the stride controls how far the filter shifts across the input at each step. The output of the convolution, also known as the feature map, is obtained after adding a bias and applying a non-linear activation function.

Pooling Layer: In the pooling layer, also known as the down-sampling layer, feature maps from the preceding layer are down-sampled to lower the computational complexity and prevent overfitting. Of the many kinds of pooling operation, max-pooling is used in this study: it takes the maximum value of each region of the feature map and consequently reduces the number of output neurons.

Fully Connected Layer: In the fully connected layer, all the 2D feature maps from the preceding layer are flattened into a one-dimensional feature vector that serves as the input of this layer. In this study, the output is obtained by taking the dot product between the feature vector and a learnable weight vector, adding a learnable bias and then applying the activation function.
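The three layer types can be illustrated with a small NumPy forward pass. This is a sketch under stated assumptions rather than the trained network: the activation is assumed to be ReLU (the paper does not name it), the padding follows the TensorFlow-style "same" convention, the weights are random, and a single feature map is used for brevity. The function names are hypothetical.

```python
import numpy as np


def conv2d_same(x, kernel, bias=0.0):
    """Slide a k x k kernel over x with stride 1 and zero padding, then apply ReLU."""
    k = kernel.shape[0]
    padded = np.pad(x, k // 2)                     # zero padding keeps the input size
    out = np.empty(x.shape)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            # Cross-correlation, as deep learning frameworks implement "convolution"
            out[i, j] = np.sum(padded[i:i + k, j:j + k] * kernel) + bias
    return np.maximum(out, 0.0)                    # ReLU is an ASSUMED activation


def max_pool_same(x, size=3, stride=2):
    """Take the maximum of each size x size region, moving by `stride`."""
    h, w = x.shape
    out_h, out_w = -(-h // stride), -(-w // stride)          # ceiling division
    pad_h = max((out_h - 1) * stride + size - h, 0)
    pad_w = max((out_w - 1) * stride + size - w, 0)
    padded = np.pad(
        x,
        ((pad_h // 2, pad_h - pad_h // 2), (pad_w // 2, pad_w - pad_w // 2)),
        constant_values=-np.inf,                   # padding never wins the maximum
    )
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = padded[i * stride:i * stride + size,
                               j * stride:j * stride + size].max()
    return out


def dense(features, weights, bias):
    """Dot product of the flattened features with the weights, plus a bias."""
    return features @ weights + bias


rng = np.random.default_rng(0)
spectrogram = rng.standard_normal((257, 101))      # stand-in for a normalized spectrogram
kernel = rng.standard_normal((3, 3)) * 0.1
feature_map = conv2d_same(spectrogram, kernel)
pooled = max_pool_same(feature_map)
weights = rng.standard_normal((pooled.size, 2)) * 0.01
logits = dense(pooled.ravel(), weights, np.zeros(2))
print(feature_map.shape, pooled.shape, logits.shape)
```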

CNN Architecture: The preprocessed spectrogram produced by STFT, a time-frequency spectrogram of size 257 \(\times \) 101, is the input on which the CNN performs convolution. Local features are extracted individually based on the local correlation within the time-frequency domain, and overall features are built by connecting the local features. The CNN architecture is shown in Fig. 4. The network consists of five convolutional layers (C1 to C5), each followed by a pooling layer (P1 to P5), followed by six fully connected layers, and the output layer includes two neurons corresponding to the focal and non-focal iEEG signals. The training process builds relationships between iEEG signals and labels. The specific training process is as follows [11]:

1. The time-frequency spectrogram of size 257 \(\times \) 101 is convolved in C1 with a 3 \(\times \) 3 filter sliding with stride 1 to produce 10 feature maps, each the same size as the input, that represent the input spectrogram.

2. P1 applies a pooling operation with a 3 \(\times \) 3 window sliding with stride 2 to each of the 10 feature maps output by C1, and its output size is 129 \(\times \) 51.

3. C2 to C5 and P2 to P5 are similar to C1 and P1, except that their input and output sizes are determined by the preceding layer and the number of feature maps increases exponentially. The final output is 160 feature maps of size 9 \(\times \) 4.

4. The fully connected layer has 160 \(\times \) 9 \(\times \) 4 neurons connected to the feature maps obtained from P5, and the output layer has two neurons connected to the fully connected layer for classification. Finally, the network is trained so that each signal corresponds to one label.
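The layer sizes quoted in the steps above can be checked with a short calculation. The sketch below assumes "same"-style padding, so that a stride-1 convolution keeps the input size and a stride-2 pooling produces the ceiling of half the input size, and it assumes that the number of feature maps doubles at every stage. The doubling is consistent with 10 maps in C1 and 160 maps in the final output, but it is not stated explicitly in the text.

```python
import math


def trace_shapes(height=257, width=101, first_maps=10, n_stages=5):
    """List (layer, feature maps, height, width) for C1/P1 through C5/P5."""
    shapes = []
    maps = first_maps
    for stage in range(1, n_stages + 1):
        # Convolution with stride 1 and 'same' padding keeps height and width
        shapes.append((f"C{stage}", maps, height, width))
        # Pooling with stride 2 and 'same' padding halves each side, rounding up
        height, width = math.ceil(height / 2), math.ceil(width / 2)
        shapes.append((f"P{stage}", maps, height, width))
        maps *= 2   # ASSUMED doubling of feature maps between stages
    return shapes


shapes = trace_shapes()
for name, maps, h, w in shapes:
    print(f"{name}: {maps} feature maps of size {h} x {w}")

_, last_maps, last_h, last_w = shapes[-1]
flattened = last_maps * last_h * last_w   # inputs to the fully connected layer
print("flattened size:", flattened)
```

Under these assumptions the trace reproduces the reported 129 \(\times \) 51 output of P1 and the final 160 feature maps of size 9 \(\times \) 4.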

4 Experimental Result and Discussion

The proposed algorithm was implemented on a workstation with an Intel Core i7-5930K CPU (3.50 GHz, 12 threads), a GeForce GTX 1080 graphics processing unit (GPU) and 128 GB of random-access memory (RAM), using the Python programming language and the TensorFlow framework.

Ten-fold cross-validation is used in this study: 90% of the dataset is used as the training set (including 10% as the validation set), while the remaining 10% is used as the test set. Because computing each update on the full training set requires a lot of computational overhead, stochastic gradient descent with mini-batches is used in this paper. In each training epoch, 100 batches of 120 samples each are randomly drawn and fed into the network, and the network is validated on the validation set after each epoch.
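The splitting and batching scheme can be sketched with the Python standard library alone. Several details here are assumptions: the validation set is taken to be one tenth of the whole dataset (leaving 80% for weight updates), each of the 7500 signal pairs is treated as one sample, and batches are drawn independently without replacement within a batch. The function names are hypothetical.

```python
import random


def ten_fold_splits(n_samples, n_folds=10, seed=0):
    """Yield (train, validation, test) index lists, one triple per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[k::n_folds] for k in range(n_folds)]
    for k in range(n_folds):
        val_k = (k + 1) % n_folds          # ASSUMED: the next fold serves as validation
        train = [i for f in range(n_folds) if f not in (k, val_k) for i in folds[f]]
        yield train, folds[val_k], folds[k]


def sample_batches(train, n_batches=100, batch_size=120, seed=0):
    """Yield the random mini-batches used in one training epoch."""
    rng = random.Random(seed)
    for _ in range(n_batches):
        yield rng.sample(train, batch_size)


N_SAMPLES = 7500   # 3750 focal + 3750 non-focal pairs, ASSUMED one sample per pair
splits = list(ten_fold_splits(N_SAMPLES))
train, val, test = splits[0]
batches = list(sample_batches(train))
print(len(train), len(val), len(test), len(batches), len(batches[0]))
```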

The validation accuracy for each of the ten folds is shown in Fig. 5.

Compared with the published works recorded in Table 1 [12], our proposed method does not achieve the best classification accuracy, but it still obtains an accuracy of 91.8%. The advantage of this method is that it requires less preprocessing for feature extraction and selection than other methods such as EMD and entropy.

5 Conclusion

Since manual visual inspection of iEEG is a time-consuming process, an effective classifier that automates the detection of the epileptic focus has the potential to reduce delays in treatment. In this paper, we propose a new recognition method for iEEG-based localization of epileptic foci based on STFT and CNN with additional preprocessing, and we implement a 15-layer CNN model for automated iEEG signal classification. The accuracy of 91.8% demonstrates that this method is effective for localizing the focal epileptic seizure area while requiring only an efficient and fast preprocessing step.

References

1. World Health Organization: Epilepsy (2019). https://www.who.int/news-room/fact-sheets/detail/epilepsy

2. Andrzejak, R.G., Schindler, K., Rummel, C.: Nonrandomness, nonlinear dependence, and nonstationarity of electroencephalographic recordings from epilepsy patients. Phys. Rev. E 86(4), 046206 (2012)

3. Schirrmeister, R.T., et al.: Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38(11), 5391–5420 (2017)

4. Itakura, T., Tanaka, T.: Epileptic focus localization based on bivariate empirical mode decomposition and entropy. In: Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pp. 1426–1429. IEEE (2017)

5. Dash, D.P., Kolekar, M.H.: A discrete-wavelet-transform-and Hidden-Markov-Model-Based approach for epileptic focus localization. In: Biomedical Signal and Image Processing in Patient Care, pp. 34–45. IGI Global (2018)

6. Kıymık, M.K., Güler, İ., Dizibüyük, A., Akın, M.: Comparison of STFT and wavelet transform methods in determining epileptic seizure activity in EEG signals for real-time application. Comput. Biol. Med. 35(7), 603–616 (2005)

7. Bhattacharyya, A., Pachori, R.B., Upadhyay, A., Acharya, U.R.: Tunable-Q wavelet transform based multiscale entropy measure for automated classification of epileptic EEG signals. Appl. Sci. 7(4), 385 (2017)

8. Roy, S., Kiral-Kornek, I., Harrer, S.: ChronoNet: a deep recurrent neural network for abnormal EEG identification. arXiv preprint arXiv:1802.00308 (2018)

9. Acharya, U.R., Oh, S.L., Hagiwara, Y., Tan, J.H., Adeli, H.: Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 100, 270–278 (2018)

10. Tzallas, A.T., Tsipouras, M.G., Fotiadis, D.I.: Epileptic seizure detection in EEGs using time-frequency analysis. IEEE Trans. Inf. Technol. Biomed. 13(5), 703–710 (2009)

11. Wang, X., Huang, G., Zhou, Z., Gao, J.: Radar emitter recognition based on the short time Fourier transform and convolutional neural networks. In: 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), pp. 1–5. IEEE (2017)

12. Acharya, U.R., et al.: Characterization of focal EEG signals: a review. Future Gener. Comput. Syst. (2018)

13. Sharma, R., Pachori, R.B., Acharya, U.R.: Application of entropy measures on intrinsic mode functions for the automated identification of focal electroencephalogram signals. Entropy 17(2), 669–691 (2015)

14. Deivasigamani, S., Senthilpari, C., Yong, W.H.: Classification of focal and nonfocal EEG signals using ANFIS classifier for epilepsy detection. Int. J. Imaging Syst. Technol. 26(4), 277–283 (2016)

15. Das, A.B., Bhuiyan, M.I.H.: Discrimination and classification of focal and non-focal EEG signals using entropy-based features in the EMD-DWT domain. Biomed. Signal Process. Control 29, 11–21 (2016)

16. Sharma, R., Kumar, M., Pachori, R.B., Acharya, U.R.: Decision support system for focal EEG signals using tunable-Q wavelet transform. J. Comput. Sci. 20, 52–60 (2017)

17. Gupta, V., Priya, T., Yadav, A.K., Pachori, R.B., Acharya, U.R.: Automated detection of focal EEG signals using features extracted from flexible analytic wavelet transform. Pattern Recogn. Lett. 94, 180–188 (2017)

18. Sriraam, N., Raghu, S.: Classification of focal and non focal epileptic seizures using multi-features and SVM classifier. J. Med. Syst. 41(10), 160 (2017)

19. Arunkumar, N., et al.: Classification of focal and non focal EEG using entropies. Pattern Recogn. Lett. 94, 112–117 (2017)

Acknowledgement

This work was supported by JST CREST Grant Number JPMJCR1784, JSPS KAKENHI (Grant No. 17K00326 and 18K04178).

Copyright information

© 2019 IFIP International Federation for Information Processing

About this paper

Cite this paper

Sui, L., Zhao, X., Zhao, Q., Tanaka, T., Cao, J. (2019). Localization of Epileptic Foci by Using Convolutional Neural Network Based on iEEG. In: MacIntyre, J., Maglogiannis, I., Iliadis, L., Pimenidis, E. (eds) Artificial Intelligence Applications and Innovations. AIAI 2019. IFIP Advances in Information and Communication Technology, vol 559. Springer, Cham. https://doi.org/10.1007/978-3-030-19823-7_27

DOI: https://doi.org/10.1007/978-3-030-19823-7_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-19822-0

Online ISBN: 978-3-030-19823-7

eBook Packages: Computer Science (R0)