Abstract

These proceedings of the IFIP WG 8.2 reflect the response of the research community to the theme selected for the 2018 working conference: “Living with Monsters? Social Implications of Algorithmic Phenomena, Hybrid Agency and the Performativity of Technology”.

1 Introduction

These proceedings of the IFIP WG 8.2 reflect the response of the research community to the theme selected for the 2018 working conference: “Living with Monsters? Social Implications of Algorithmic Phenomena, Hybrid Agency and the Performativity of Technology”. The IFIP WG 8.2 community has played an important role in the Information Systems (IS) field by promoting methodological diversity and engagement with organizational and social theory. Historically, it has acted as a focus for IS researchers who share these interests, sustaining a lively and reflexive debate around methodological questions. In recent years, this community has developed an interest in processual, performative and relational aspects of inquiries into agency and materiality. This working conference builds on these methodological and theoretical focal points.

As evolving digital worlds generate both hopes and fears, we wanted to mobilize the research community to reflect on the implications of digital technologies. Early in our field’s history, Norbert Wiener emphasized the ambivalence of automation’s potential and urged us to consider the possible social consequences of technological development [1]. In a year when the CEO of one of the world’s largest tech giants had to testify before both the US Congress and the EU Parliament¹, the social implications of digital technologies are a pertinent topic for a working conference in the IFIP WG 8.2 community.

The monster metaphor allows us to reframe and question both our object of research and ourselves [2]. It draws attention to the ambivalence of technology as our creation. Algorithms that use big data not only identify suspicious credit card transactions and predict the spread of epidemics; they also raise concerns about mass surveillance and perpetuated biases. Social media platforms allow us to stay connected with family and friends, but they also commoditize relationships and produce new forms of sociality. Platform architectures are immensely flexible for connecting supply and demand, but they are also at risk of monopolization and may become the basis for totalitarian societal structures.

We have realized that digital technologies are not mere strategic assets, supporting tools, or representational technologies; instead, they are profoundly performative [3]. They serve as critical infrastructures and are deeply implicated in our daily lives. The complexity and opacity of today’s interconnected digital assemblages reduce anyone’s ability to fathom, let alone control them. For instance, stock market flash crashes, induced by algorithmic trading, are highly visible examples of the limits of oversight and control.

Cautionary tales of technology have often employed monster notions, such as the sorcerer’s apprentice, the juggernaut, and the Frankenstein figure [4]. The complex hybrid assemblages that have become so crucial for our everyday lives are our own creations, but they are not under anyone’s apparent control. In fact, we might even be controlled by them [5]. Consequently, we ask: “Are we living with monsters?”

The monster, emphasizing the unintended consequences of technologies, can also encourage a reflection on what it is we do when we contribute to the creation of technologies, and what our roles and duties are as researchers. The monster figure has stimulated such moral reflection ever since Mary Wollstonecraft Shelley’s paradigmatic novel Frankenstein or The Modern Prometheus was published 200 years ago in 1818. The novel has often been read as a critique of modern science’s hubris and lack of moral restraint in the pursuit of knowledge, especially when linked to creating artificial life or tinkering with natural, biological life.

A slightly different reading is presented by Winner [4] and Latour [6], who point to the lack (or delayed onset) of the realization that a moral obligation accompanies the pursuit of knowledge and technological creation. “Dr. Frankenstein’s crime was not that he invented a creature through some combination of hubris and high technology, but rather that he abandoned the creature to itself” ([6] [italics in original]). If our involvement should be followed by a moral obligation, we need to ask what this entails. What does it mean to take care of the monsters in our midst?

The monster disturbs us. A central thrust in the literature on monsters in the humanities is geared to explore the revelatory potential of the monster figure. Attending to monsters is a way of reading a culture’s fears, desires, anxieties and fantasies as expressed in the monsters it engenders [7]. The monster is a mixed and incongruous entity that generates questions about boundaries between the social and the material: Where are the borders of human society? Does the monster have a place among us?

This ontological liminality can be exploited as an analytic resource: the monsters reveal that which is “othered” and expelled, perhaps that which we neither want to take responsibility for nor to take care of. How do we think about being deeply implicated in the ongoing (re)creation of digital societies? Many of us use social media while criticizing their privacy-invading tendencies; many enjoy the gig economy for its low-cost services while disliking its destabilization of workers’ rights; and many perpetuate the quantification of academic life while criticizing it. Do we as researchers inadvertently contribute to such “eyes wide shut” behavior? How can we conduct our research in ways that are open to the unintended? How can we be self-reflexive and realize our own biases, preferences, interests and (hidden) agendas?

These are some of the reflections we hoped the conference theme would trigger and the questions it would raise. We had hoped for an engaged response from members of the IFIP WG 8.2 community, and we were not disappointed. We received 49 submissions from researchers addressing a wide range of topics within the conference theme. In a double-blind review process, each paper was assigned to one of the conference co-chairs (acting in a senior editor role) and then sent to at least two reviewers. As a sign of the 8.2 community’s critical yet supportive culture and its generosity of spirit, the reviewers offered highly constructive and carefully crafted feedback that was invaluable to both the editors and the authors.

After a careful and highly competitive selection, we accepted 11 contributions for full presentation at the conference. These papers, together with opinion pieces by our two keynote speakers, make up these proceedings.

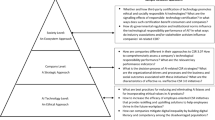

Following this chapter and two short papers summarizing the keynote addresses, we have clustered the 11 papers into three groups, each making up a section in this editorial. The first section, Social Implications of Algorithmic Phenomena, features contributions that deal with questions about objectivity, legitimacy, matters of inclusion, the blackboxing of accountability, and the systemic effects and unintended consequences of algorithmic decision-making. In particular, utopian visions of new forms of rationality are explored alongside dystopian narratives of surveillance capitalism.

Hybrid Agency and the Performativity of Technology, our second cluster of papers, includes papers that explore relational notions of agency, as well as perspectives, concepts and vocabularies that help us understand the performativity of sociomaterial practices and how technologies are implicated in them. These contributions embrace hybridity, liminality, and performativity.

The papers in the third section, Living with Monsters, take up the notion of monsters as their core metaphor. They explore the value and threat of these complex, autonomous creations in our social worlds and develop ideas for how we, as creators, users and investigators of these technologies, might reconsider and renew our relationships to, and intertwining with, these creatures/creations.

2 Contributions from Our Keynote Speakers – Lucy Suchman and Paul N. Edwards

Our two eminent keynote speakers engage with the conference theme in different ways. In “Frankenstein’s Problem,” Lucy Suchman deals with our ambivalent relationship to technological power and control. She seeks to intervene in the increasing automation of military systems, where the identification of targets and initiation of attack via military drones seem on track to becoming fully automated. Drawing on Rhee’s The Robotic Imaginary [8], she describes the dehumanization that is made possible by the narrowness in robotic views of humanness and argues that we need to direct our attention towards the “relation between the figurations of the human and operations of dehumanization.”

Rather than seeking to control our monster, Suchman argues for continued engagement and reminds us that the fact that technological systems are beyond our control does not absolve us of responsibility. While we are obliged to remain in relation, we should also remember that these relations are often about either dominance or instrumentality. Suchman urges us to recognize how “a politics of alterity” operates through the monster figure, and how the monster [9] can challenge and question us. Indeed, this is the promise that the monster imaginary holds.

Paul N. Edwards takes on algorithms in his chapter “We have been assimilated: some principles for thinking about algorithmic systems”. While we often think of an algorithm as a recipe or a list of instructions, Edwards claims that this is “merely the kindergarten version” of what is going on with algorithmic systems. To gain a more realistic appreciation of contemporary algorithms, Edwards develops four principles that capture their nature.

Firstly, there are too many intricate interactions in a complicated algorithmic system (e.g., climate models) for us to grasp an algorithm’s totality. This constitutes the principle of radical complexity. Secondly, the algorithms produced by machine learning systems are not sets of pre-programmed instructions but are themselves “builders” that develop new models. Thus, these algorithms, as well as the high-dimensional feature matrices used to develop and evaluate the models being built, are beyond human comprehension. Edwards captures this aspect of algorithms in the principle of opacity.

Thirdly, despite the frequent invocation of the human brain as a metaphor for artificial intelligence, learning algorithms do not work like human cognition. This is expressed in the principle of radical otherness. Finally, to indicate the need for a comprehensive view that goes beyond a mere focus on the technology, i.e., algorithms and data, Edwards highlights the performative and constitutive nature of algorithms, which he struggles to capture in a succinct principle. He thus offers alternatives like the principle of infrastructuration and the principle of Borgian assimilation.

Edwards concludes with a call for intellectual tools – of which his principles might form an integral part – that might help us engage with this new world. However, he worries that we might already be too assimilated to a world infused with algorithmic systems, and that “there probably is no going back.”

Lucy Suchman and Paul Edwards both stimulate our reflections on living with the technologies we have created, caring for them and contemplating the roles and responsibilities of the IS researcher in studying these imbroglios. In this way, our keynote speakers set the tone we envisaged for this working conference, aptly located in the shadow of Silicon Valley.

3 Social Implications of Algorithmic Phenomena

The proliferation of algorithmic decision-making in work and life raises novel questions about objectivity, legitimacy, matters of inclusion, the blackboxing of accountability, and the systemic effects and unintended consequences of such decisions. Utopian visions of new forms of rationality coexist with dystopian narratives around surveillance capitalism and the remaking of society [10]. Will algorithms and artificial ‘intelligence’ provide solutions to complex problems, from reimagining transport and reducing social inequality to fighting disease and climate change, or are we putting undue faith in the techno-utopian power of algorithmic life [11]? Several of the papers engage with this topic in a range of settings:

In the chapter “Algorithmic Pollution: Understanding and Responding to Negative Consequences of Algorithmic Decision-making,” Olivera Marjanovic, Dubravka Cecez-Kecmanovic, and Richard Vidgen draw inspiration from the notion of environmental pollution to explore algorithmic pollution, i.e., the potentially harmful and unintended consequences of algorithmic decision-making in sociomaterial environments. While the authors recognize the potentially significant benefits of algorithms to society (e.g., in healthcare, driverless vehicles and fraud detection), they offer evidence of the negative effects algorithms can have on services that transform people’s lives, such as (again) healthcare, social support services, education, law and financial services. Using sociomateriality as their theoretical lens, the authors take a first step towards developing an approach that can help us track how this type of pollution is performed by sociomaterial assemblages involving numerous actors. The paper is a timely call to action for IS researchers interested in algorithms and their role in organizations and society.

In “Quantifying Quality: Algorithmic Knowing Under Uncertainty”, Eric Monteiro, Elena Parmiggiani, Thomas Østerlie, and Marius Mikalsen examine the complex processes involved in geological assessments in commercial oil and gas exploration. The authors uncover how the traditionally qualitative sensemaking practices of geological interpretation are challenged by quantification efforts driven by geophysical, sensor-based measurements captured by digital tools. Interestingly, even as more geophysical data become available, they feed into narratives that aim to provide actionable explanations of which geological processes and events gave rise to the current geophysical conditions discernible in the data. Adopting a performative view of scaffolding as underpinning geological (post-humanist) sensemaking, the authors argue that scaffolding is dynamic, provisional, and decentered. They also show that even as practices evolve towards quantification, there remains an irreducible relationship between, or intertwining of, qualitative judgments and data-driven quantitative analysis.

In “Understanding the Impact of Transparency on Algorithmic Decision Making Legitimacy”, David Goad and Uri Gal argue that a lack of transparency in algorithmic decision making (ADM), i.e., the opacity of how the algorithm was developed and how it works, negatively affects its perceived legitimacy. This is likely to reduce the application and adoption of decision support technologies in organizations, especially those leveraging machine learning and artificial intelligence. To unpack the complex relationship between transparency and ADM’s perceived legitimacy, the authors develop a conceptual framework that decomposes transparency into validation, visibility and variability, and that distinguishes among different types of legitimacy, i.e., pragmatic, moral and cognitive. Based on this conceptual scaffold, Goad and Gal develop a set of propositions that model the complex relationship between transparency and ADM’s perceived legitimacy. Since transparency affects the legitimacy of algorithmic decision making positively under some circumstances and negatively under others, this paper offers useful guidance for designing business processes that leverage learning algorithms to deal with the very real organizational challenges of big data and the Internet of Things.

The chapter “Advancing to the Next Level: Caring for Evaluative Metrics Monsters in Academia and Healthcare” is written by Iris Wallenburg, Wolfgang Kaltenbrunner, Björn Hammarfeldt, Sarah de Rijcke, and Roland Bal. The authors use the notions of play [12] and games to analyze performance management practices in professional work, more specifically in law faculties and hospitals. The authors argue that while evaluative metrics are often described as ‘monsters’ impacting professional work, the same metrics can also become part of practices of caring for such work. For instance, these metric monsters can be put to work to change and improve work. At other times, they are playfully resisted when their services are unwarranted. Distinguishing between finite games (games played to win) and infinite games (games played for the purpose of continuing to play), the authors show how evaluative metrics are reflexively enacted in daily professional practice as well as how they can be leveraged in conflictual dynamics. This chapter therefore adds rich nuance to the discussion of the “metric monsters” of performance evaluation.

In “Hotspots and Blind Spots: A Case of Predictive Policing in Practice”, Lauren Waardenburg, Anastasia Sergeeva and Marleen Huysman describe the changes that followed the introduction of predictive analytics in the Dutch police force. They focus on occupational transformations and describe the growing significance of intermediary occupational groups. The previously supportive role of the “information officers” evolved into a more proactive role that involved the exercise of judgment, which shaped the outcome of the analytics. This earned the group, now denoted “intelligence officers”, a more central position. Paradoxically, the work of the intelligence officers was critical to establishing the police officers’ perception that the predictive analytics were trustworthy.

In the chapter entitled “Objects, Metrics and Practices: An Inquiry into the Programmatic Advertising Ecosystem,” Cristina Alaimo and Jannis Kallinikos explore “programmatic advertising,” i.e., the large-scale, real-time bidding process whereby ads are automatically assigned upon a user’s browser request. Within this process of dizzying computational and organizational complexity, the study focuses on the functioning of digital objects, platforms and measures. The chapter shows how the automated exchanges in these massive platform ecosystems shift the way audiences are measured from user behaviour data to contextual data. Adopting Esposito’s notion of conjectural objects [13, 14], the authors describe how ads are assigned not on the basis of what a user did but rather on the computable likelihood of an ad being seen by a user, or what the industry calls an “opportunity to see.” Thus, rather than being simple means to monitor a pre-existing reality (e.g., user behaviour), these metrics and techniques bring forth their own reality by shaping the objects of digital advertising. This intriguing account of programmatic advertising contributes to our understanding of automation in the age of performative algorithmic phenomena.

4 Hybrid Agency and the Performativity of Technology

We are deeply entangled with technologies ranging from avatars through wearables to ERP systems and infrastructure. To explain this entanglement, a notion of agency as a property possessed by actants seems insufficient; we need to explore relational notions of agency, as well as perspectives, concepts and vocabularies that help us understand the performativity of sociomaterial practices and how technologies are implicated in not just organizational but also social life. What are the methodological implications of a performative lens for studying the practices, becoming, and processes associated with AI, big data, digital traces and other emerging phenomena [15, 16]? Several of the papers in this volume reflect on and experiment with research approaches that embrace hybridity, liminality, and performativity:

Mads Bødker, Stefan Olavi Olofsson, and Torkil Clemmensen detail in their chapter “Re-figuring Gilbert the Drone” the process of working with a drone in a maker lab. They approach the drone as a figure that has not been fully fleshed out, with a number of potential figurations lying beyond many of the current assemblages of drones as tools for policing, surveillance and warfare. By merging insights from philosophies of affect [17,18,19] and critical design [20, 21], they develop a re-figuring process to explore this potentially “monstrous” technology. Various figurations of drones, they argue, can be explored critically by paying attention to how material things are entangled in how we feel and what we do. Asking how things enchant us and how we feel about technologies, the authors find, widens the possibilities for re-figuration in a critical design process.

In her chapter “Making a Difference in ICT research: Feminist theorization of sociomateriality and the diffraction methodology”, Amany Elbanna heeds the call to advance sociomateriality research practice. The author builds on Barad’s Agential Realism [22] as one prominent theoretical lens, which she locates and discusses in the wider context of feminist theorizing. In so doing, she critically questions contemporary, scientistic forms of knowledge production in IS, in particular the assumption that the core entities of the field exist unequivocally and ex ante [23], to be located and observed in the field during research. Instead, assuming ontological inseparability [23, 24], the author proposes building on the notion of diffraction [25, 26] and leaving the delineation of meaningful entities to the research process, which she spells out in detail. The paper makes an important contribution to IS research practice at a time when the discipline grapples with novel and indeterminate phenomena, such as the emergence of “platform monsters”.

5 Living with Monsters

Several of the papers take up the monster notion directly, addressing head-on the core metaphor of the conference. They explore the entry of these complex, autonomous creations, or creatures, into our social worlds and examine our own roles as creators. The papers deal with the simultaneous intentions and desires that push us towards creative acts, and they prompt us, as co-inhabitants of emergent technology-infused worlds, to reconsider and renew our relationships to, and intertwining with, our creations.

In the opening chapter of this section, “Thinking with Monsters,” authored by Dirk S. Hovorka and Sandra Peter, we return to some of the themes introduced by Lucy Suchman. The authors call on technology researchers to develop approaches to inquiry that better respond to the needs of our future society. Specifically, they critique the optimistic instrumentalism that dominates most future studies. This approach projects the practices, materialities and conditions of the present into the future, and fails to recognize the ‘train tracks’ of thought that limit the imaginaries thus produced. Drawing on examples such as Isaac Asimov’s “The Laws of Robotics” and Philip K. Dick’s “Minority Report,” Hovorka and Peter advance an alternative method that privileges the human condition and the everyday life that a given technological future entails. They label this approach to future studies ‘thinking with monsters’ and argue that it illustrates what dwelling in a technological future would feel like. If IS researchers are to influence, shape or create a desirable future, gaining deeper insights into the worlds our technologies will create is imperative, and this paper outlines a potentially powerful approach to engaging with the future in ways that paint a more realistic picture.

In “A bestiary of digital monsters”, Rachel Douglas-Jones, Marisa Cohn, Christopher Gad, James Maguire, John Mark Burnett, Jannick Schou, Michael Hockenhull, Bastian Jørgensen, and Brit Ross Winthereik introduce us to an uncommon academic genre, the bestiary: a collection of real and imagined monsters. Drawing on Cohen [7] and Haraway [2], the authors seek to explore novel forms of analysis available for describing “the beasts in our midst”. The chapter recounts encounters with ‘beasts’ that have arisen in attempts to govern organizations, businesses and citizens. None of the beasts in the bestiary is a technology alone; rather, with this form of narration, the authors wish to ensure that the concept of the monster does not ‘other’ the digital as untamed or alien. The bestiary helps with “the work of figuring out what is monstrous, rather than simply identifying monsters…. including something in the bestiary is a move of calling forth, rather than calling out”. Thus, the chapter draws our attention firmly to the devices we use in narrating digital monsters, which defy the border between the real and the imaginary.

In our final chapter, “Frankenstein’s Monster as Mythical Mattering: Rethinking the Creator-Creation Technology Relationship”, Natalie Hardwicke problematizes our relationship with technology and with ourselves. Building on a careful analysis of Shelley’s Frankenstein [27], the author critically questions the human pursuit of knowledge as technological mastery of nature. Drawing on the work of both Heidegger [28] and McLuhan [29], she argues that such pursuit takes us further away from locating our ownmost authentic selves. Our desire to separate ourselves from, and control, the world through the technologies we build ultimately fails to bring us closer to the meaning of our own existence, which is to live an authentic life. Only by accepting our own mortality and understanding ourselves as an inseparable part of the world do we stand a chance to free ourselves, and to assume an organic relationship with technology that brings us closer to the world, others and ourselves rather than alienating us.

In sum, the papers presented in this volume address a broad range of phenomena involving the monsters of our day, that is, the increasingly autonomous algorithmic creations in our midst that carry promises, provoke concerns and have not surrendered their ability to incite fear. The diversity of perspectives taken by the authors – ranging between pragmatic and critical, rational and emotional, and hopeful and (somewhat) despondent – might be seen as a manifestation of the ambiguity, hybridity and liminality of the Fourth Industrial Revolution (4IR) that is developing at warp speed. It is these characteristics of the emergent social worlds that we sought to capture in the question mark at the end of the working conference’s title “Living with Monsters?”

What the papers in this volume are telling us is that a life in which people and technologies are increasingly entangled and intertwined is an ongoing journey that will require continuous, conscious and critical engagement with, and care for, the creatures/monsters we have created. Only in this way can we live up to our responsibilities as participants in, as well as creators and researchers of, the new ecosystems that constitute our contemporary social worlds.

References

1. Wiener, N.: The Human Use of Human Beings: Cybernetics and Society (No. 320). Perseus Books Group, New York (1950/1988)

2. Haraway, D.: Promises of monsters: a regenerative politics for inappropriate/d others. In: Grossberg, L., Nelson, C., Treichler, P.A. (eds.) Cultural Studies, pp. 295–337. Routledge (1992)

3. MacKenzie, D.: Is economics performative? Option theory and the construction of derivatives markets. J. Hist. Econ. Thought 28(1), 29–55 (2006)

4. Winner, L.: Autonomous Technology: Technics-out-of-Control as a Theme in Political Thought. MIT Press, Cambridge (1978)

5. Ciborra, C.U., Hanseth, O.: From tool to gestell: agendas for managing the information infrastructure. Inf. Technol. People 11(4), 305–327 (1998)

6. Latour, B.: Love your monsters. Break. J. 2, 21–28 (2011)

7. Cohen, J.J.: Monster culture (seven theses). In: Cohen, J.J. (ed.) Monster Theory: Reading Culture, pp. 3–25. University of Minnesota Press, Minneapolis (1996)

8. Rhee, J.: The Robotic Imaginary: The Human and the Price of Dehumanized Labour. University of Minnesota Press, Minneapolis and London (2017)

9. Cohen, J.J.: The promise of monsters. In: Mittman, E.S., Dendle, P. (eds.) The Ashgate Research Companion to Monsters and the Monstrous, pp. 449–464, New York (2012)

10. Zuboff, S.: Big other: surveillance capitalism and the prospects of an information civilization. J. Inf. Technol. 30(1), 75–89 (2015)

11. Harari, Y.N.: Homo Deus: A Brief History of Tomorrow. Vintage Publishing, New York (2016)

12. Huizinga, J.: Homo Ludens: A Study of the Play Element in Culture. Beacon Press, Boston (1955)

13. Esposito, E.: Probabilità improbabili. La realtà della finzione nella società moderna. Meltemi Editore (2008)

14. Esposito, E.: The structures of uncertainty: performativity and unpredictability in economic operations. Econ. Soc. 42(1), 102–129 (2013)

15. Ingold, T.: Towards an ecology of materials. Annu. Rev. Anthropol. 41, 427–442 (2012)

16. Nicolini, D.: Practice Theory, Work, & Organization. Oxford University Press, Oxford (2013)

17. Clough, P.: The affective turn: political economy, bio-media and bodies. Theory, Cult. Soc. 25(1), 1–22 (2008)

18. Bennett, J.: The Enchantment of Modern Life: Attachments, Crossings, and Ethics. Princeton University Press, Princeton (2001)

19. Bennett, J.: Vibrant Matter: A Political Ecology of Things. Duke University Press, Durham (2010)

20. Dunne, A., Raby, F.: Design Noir: The Secret Life of Electronic Objects. Birkhäuser, Basel (2001)

21. Dunne, A., Raby, F.: Critical design FAQ (2017). http://www.dunneandraby.co.uk/content/bydandr/13/0. Accessed 21 May 2018

22. Barad, K.: Agential realism: feminist interventions in understanding scientific practices. In: Biagioli, M. (ed.) The Science Studies Reader, pp. 1–11. Routledge, New York/London (1999)

23. Orlikowski, W.J., Scott, S.V.: Sociomateriality: challenging the separation of technology, work and organization. Acad. Manag. Ann. 2(1), 433–474 (2008)

24. Riemer, K., Johnston, R.B.: Clarifying ontological inseparability with Heidegger’s analysis of equipment. MIS Q. 41(4), 1059–1081 (2017)

25. Barad, K.: Diffracting diffraction: cutting together-apart. Parallax 20(3), 168–187 (2014)

26. Haraway, D.J.: Modest_Witness@Second_Millennium.FemaleMan_Meets_OncoMouse: Feminism and Technoscience. Psychology Press, Hove (1997)

27. Shelley, M.: Frankenstein or The Modern Prometheus, London (1818)

28. Heidegger, M.: Being and Time: A Translation of Sein und Zeit. SUNY Press, Albany (1996)

29. McLuhan, M.: Understanding Media: The Extensions of Man. McGraw-Hill, New York (1964)

Copyright information

© 2018 IFIP International Federation for Information Processing

About this paper

Cite this paper

Aanestad, M., Mähring, M., Østerlund, C., Riemer, K., Schultze, U. (2018). Living with Monsters? In: Schultze, U., Aanestad, M., Mähring, M., Østerlund, C., Riemer, K. (eds) Living with Monsters? Social Implications of Algorithmic Phenomena, Hybrid Agency, and the Performativity of Technology. IS&O 2018. IFIP Advances in Information and Communication Technology, vol 543. Springer, Cham. https://doi.org/10.1007/978-3-030-04091-8_1

DOI: https://doi.org/10.1007/978-3-030-04091-8_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-04090-1

Online ISBN: 978-3-030-04091-8

eBook Packages: Computer Science, Computer Science (R0)