Abstract

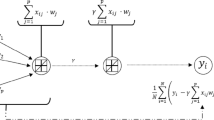

When no large design data set is available for building a multiple classifier system, the same data set is typically used to design both the expert classifiers and the fusion rule. In that case, the experts' outputs form optimistically biased training data for the fusion rule designer. We consider the standard Fisher linear and Euclidean distance classifiers as experts and the single-layer perceptron as the fusion rule. New bias correction terms for the experts' outputs are derived for these two types of expert classifiers under the assumption of high-dimensional Gaussian distributions. In addition, noise injection is presented as a more universal technique. Experiments with specially designed artificial Gaussian data and real-world medical data showed that the theoretical bias correction works well for high-dimensional artificial data, whereas noise injection is preferable in real-world problems.
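The boasting bias can be illustrated with a small simulation. The sketch below is not the authors' derivation or experimental setup. It assumes two spherical Gaussian classes, a hypothetical split of the features between a Fisher expert and a Euclidean distance expert, and one simple form of noise injection: isotropic Gaussian noise (hypothetical parameters `sigma` and `copies`) added to repeated copies of the training vectors. It compares each expert's apparent (training-set) error with its error on a large independent test set and on the noise-injected copies.

```python
import numpy as np


def make_gaussian_data(n_per_class, p, delta, rng):
    """Two classes N(0, I) and N(mu, I); the Mahalanobis distance between them is delta."""
    mu = np.full(p, delta / np.sqrt(p))
    X = np.vstack([rng.standard_normal((n_per_class, p)),
                   rng.standard_normal((n_per_class, p)) + mu])
    y = np.repeat([0, 1], n_per_class)
    return X, y


def fit_fisher(X, y, reg=1e-3):
    """Fisher linear discriminant g(x) = w.x + w0 with w = S^-1 (m1 - m0)."""
    m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centred = np.vstack([X[y == 0] - m0, X[y == 1] - m1])
    S = centred.T @ centred / (len(X) - 2)   # pooled within-class covariance
    S += reg * np.eye(X.shape[1])            # tiny ridge, an assumption for numerical stability
    w = np.linalg.solve(S, m1 - m0)
    return w, -w @ (m0 + m1) / 2


def fit_euclidean(X, y):
    """Euclidean distance classifier: the same rule with S replaced by the identity."""
    m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    w = m1 - m0
    return w, -w @ (m0 + m1) / 2


def expert_outputs(experts, X):
    """Discriminant values of every expert; the columns are the fusion rule's inputs."""
    return np.column_stack([X[:, cols] @ w + w0 for cols, (w, w0) in experts])


def error_rates(outputs, y):
    """Per-expert error rate when each output is thresholded at zero."""
    return ((outputs > 0).astype(int) != y[:, None]).mean(axis=0)


def noise_injected_outputs(experts, X, y, sigma, copies, rng):
    """Expert outputs on noisy copies of the training vectors (isotropic Gaussian noise)."""
    Xn = np.repeat(X, copies, axis=0)
    Xn = Xn + sigma * rng.standard_normal(Xn.shape)
    return expert_outputs(experts, Xn), np.repeat(y, copies)


rng = np.random.default_rng(0)
p = 60
X_train, y_train = make_gaussian_data(25, p, 3.0, rng)
X_test, y_test = make_gaussian_data(5000, p, 3.0, rng)

# Hypothetical split: expert 0 (Fisher) sees the first half of the features,
# expert 1 (Euclidean distance) sees the second half.
half = np.arange(p // 2)
rest = np.arange(p // 2, p)
experts = [(half, fit_fisher(X_train[:, half], y_train)),
           (rest, fit_euclidean(X_train[:, rest], y_train))]

train_err = error_rates(expert_outputs(experts, X_train), y_train)
test_err = error_rates(expert_outputs(experts, X_test), y_test)
out_noisy, y_noisy = noise_injected_outputs(experts, X_train, y_train, 0.7, 10, rng)
noisy_err = error_rates(out_noisy, y_noisy)
print("apparent:", train_err, "test:", test_err, "noise-injected:", noisy_err)
```

With far fewer training vectors than a comfortable margin over the dimensionality, the Fisher expert's apparent error is much lower than its test error, which is the optimism a fusion rule would inherit if trained on `expert_outputs(experts, X_train)`. The matrix `out_noisy` (with labels `y_noisy`) is the kind of input on which a single-layer perceptron fusion rule could be trained instead; the analytical bias correction terms derived in the paper are not reproduced here.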

Copyright information

© 2002 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Janeliūnas, A., Raudys, Š. (2002). Reduction of the Boasting Bias of Linear Experts. In: Roli, F., Kittler, J. (eds) Multiple Classifier Systems. MCS 2002. Lecture Notes in Computer Science, vol 2364. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-45428-4_24

DOI: https://doi.org/10.1007/3-540-45428-4_24

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-43818-2

Online ISBN: 978-3-540-45428-1

eBook Packages: Springer Book Archive