Abstract

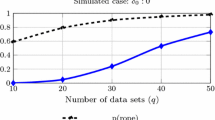

It is shown that computationally tight bounds on the probability of overfitting can be obtained only by simultaneously taking into account two properties of classifier sets: their splitting into error levels and the similarity of their classifiers. For a set consisting of only two classifiers, an exact bound on the probability of overfitting is obtained. This is the simplest learning task that exhibits overfitting together with the effects of splitting and similarity, both of which reduce the probability of overfitting. For a more complex case, a chain of classifiers, an experiment is carried out in which the effects of splitting and similarity are estimated separately. It is shown that reasonably low probabilities of overfitting can be obtained only for sets that possess both properties.
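The abstract does not spell out the formal setting, so the following is only a minimal sketch under stated assumptions, not the paper's own derivation. It assumes a finite sample that is split uniformly at random into a training part and a test part, a learning rule that picks the classifier with the fewest training errors (with ties broken pessimistically), and "overfitting" meaning that the test error rate exceeds the training error rate by at least `eps`. The function name `overfit_probability` and the representation of classifiers as 0/1 error vectors are hypothetical choices made for illustration. For small samples the probability can be computed exactly by enumerating all splits.

```python
from fractions import Fraction
from itertools import combinations


def overfit_probability(error_vectors, train_size, eps):
    """Exact probability of overfitting over all equally likely splits.

    error_vectors: list of 0/1 lists; error_vectors[c][i] == 1 means
        classifier c errs on object i (assumed representation).
    train_size: number of objects in the training part (0 < train_size < n).
    eps: overfitting threshold on (test error rate - train error rate).
    """
    n = len(error_vectors[0])
    test_size = n - train_size
    overfit_splits = 0
    total_splits = 0
    for train in combinations(range(n), train_size):
        best = None
        for vec in error_vectors:
            train_err = sum(vec[i] for i in train)
            test_err = sum(vec) - train_err
            # Assumption: minimize training errors; among ties, take the
            # classifier with the most test errors (pessimistic choice).
            key = (train_err, -test_err)
            if best is None or key < best:
                best = key
        train_err, neg_test_err = best
        deviation = (Fraction(-neg_test_err, test_size)
                     - Fraction(train_err, train_size))
        total_splits += 1
        if deviation >= eps:
            overfit_splits += 1
    return Fraction(overfit_splits, total_splits)


if __name__ == "__main__":
    half = Fraction(1, 2)
    a = [1, 0, 0, 0]
    b = [0, 1, 0, 0]
    print("one classifier:          ", overfit_probability([a], 2, half))
    print("two identical:           ", overfit_probability([a, a], 2, half))
    print("two dissimilar, same level:", overfit_probability([a, b], 2, half))
```

In this toy setting, duplicating a classifier leaves the probability unchanged, while adding a different classifier at the same error level raises it. This is consistent with the abstract's claim that similarity between classifiers limits overfitting, although the example is far smaller than the chain experiment described above.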

Author information

Konstantin V. Vorontsov was born in 1971. He graduated from the Faculty of Applied Mathematics and Control, Moscow Institute of Physics and Technology, in 1994. He received his candidate’s degree in 1999. Currently he is with the Dorodnitcyn Computing Centre, Russian Academy of Sciences. His scientific interests include statistical learning theory, machine learning, data mining, probability theory, and combinatorics. He is the author of 40 papers. Homepage: www.ccas.ru/voron.

About this article

Cite this article

Vorontsov, K.V. Splitting and similarity phenomena in the sets of classifiers and their effect on the probability of overfitting. Pattern Recognit. Image Anal. 19, 412–420 (2009). https://doi.org/10.1134/S1054661809030055
