Abstract

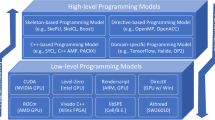

Asynchronous task-based programming models are gaining popularity as a way to address the programmability and performance challenges of contemporary large-scale high-performance computing systems. In this paper we present AceMesh, a task-based, data-driven language extension targeting legacy MPI applications. Its language features include data-centric parallelizing templates and aggregated task dependences for parallel loops. These features not only relieve the programmer of tedious refactoring details but also enable structured execution of complex task graphs, exploitation of data locality through data tile templates, and a reduction in the system complexity incurred by complex array sections. We present the prototype implementation, including task shifting, data management, and communication-related analysis and transformations. The language extension is evaluated on two supercomputing platforms. We compare the performance of AceMesh with existing programming models. The results show that NPB/MG achieves speedups of up to 1.2X and 1.85X on TaihuLight and TH-2, respectively, and that the Tend_lin benchmark attains more than 2X speedup on average, with maximum speedups of 3.0X and 2.2X on the two platforms, respectively.

Acknowledgements

This work was supported by the National Key R&D Program of China (Grant No. 2017YFB02-02002) and the Innovation Research Group of NSFC (Grant No. 61521092).

About this article

Cite this article

Chen, L., Tang, S., Fu, Y. et al. AceMesh: a structured data driven programming language for high performance computing. CCF Trans. HPC 2, 309–322 (2020). https://doi.org/10.1007/s42514-020-00047-4

DOI: https://doi.org/10.1007/s42514-020-00047-4