Abstract

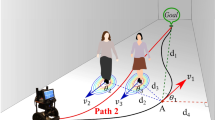

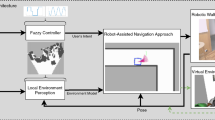

A key skill for mobile robots is the ability to navigate efficiently through their environment. In the case of social or assistive robots, this involves navigating through human crowds. Typical performance criteria, such as reaching the goal using the shortest path, are not appropriate in such environments, where it is more important for the robot to move in a socially adaptive manner, such as respecting the comfort zones of pedestrians. We propose a framework for socially adaptive path planning in dynamic environments that generates human-like trajectories. Our framework consists of three modules: a feature extraction module, an inverse reinforcement learning (IRL) module, and a path planning module. The feature extraction module extracts the features necessary to characterize the state information, such as the density and velocity of surrounding obstacles, from an RGB-depth (RGB-D) sensor. The IRL module uses a set of demonstration trajectories generated by an expert to learn the expert’s behaviour when faced with different state features, and represents it as a cost function that respects social variables. Finally, the planning module integrates a three-layer architecture: a global path is optimized according to a classical shortest-path objective using a global map known a priori, a local path is planned over a shorter distance using the features extracted from the RGB-D sensor and the cost function inferred by the IRL module, and a low-level system handles avoidance of immediate obstacles. We evaluate our approach by deploying it on a real robotic wheelchair platform in various scenarios, and comparing the robot trajectories to human trajectories.
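To make the role of the learned cost function in the local planning layer more concrete, the following is a minimal sketch, not the authors' implementation. It assumes a cost that is linear in the per-cell features (a common choice in IRL, but not stated in the abstract), a small grid discretization, and Dijkstra's algorithm as the local planner. The function name `plan_local_path`, the feature layout (step cost, obstacle density, obstacle speed), and the weight values are all hypothetical and chosen only for illustration.

```python
import heapq
import numpy as np

def plan_local_path(feature_grid, weights, start, goal):
    """Dijkstra on a 4-connected grid (hypothetical local planner).

    feature_grid: array of shape (rows, cols, n_features), non-negative.
    weights: non-negative array of shape (n_features,); in the framework
             these would come from IRL, here they are made-up values.
    Returns the list of (row, col) cells from start to goal.
    """
    rows, cols, _ = feature_grid.shape
    cost = feature_grid @ weights  # assumed linear cost per cell
    dist = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        d, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols:
                nd = d + float(cost[nxt])  # pay the cost of the entered cell
                if nd < dist.get(nxt, float("inf")):
                    dist[nxt] = nd
                    parent[nxt] = cell
                    heapq.heappush(frontier, (nd, nxt))
    # Walk parents back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]

# Toy 3x5 scene: feature 0 is a constant step cost, feature 1 is obstacle
# density, feature 2 is obstacle speed. One "pedestrian" stands at (1, 2).
grid = np.zeros((3, 5, 3))
grid[:, :, 0] = 1.0
grid[1, 2, 1] = 1.0
grid[1, 2, 2] = 0.5

start, goal = (1, 0), (1, 4)
shortest_path = plan_local_path(grid, np.array([1.0, 0.0, 0.0]), start, goal)
social_path = plan_local_path(grid, np.array([1.0, 5.0, 2.0]), start, goal)
```

With the social weights set to zero the planner walks straight through the occupied cell, while the illustrative non-zero weights make it detour around that cell at the price of a longer path. This mirrors the contrast the abstract draws between shortest-path and socially adaptive objectives.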

Acknowledgments

The authors gratefully acknowledge financial and organizational support from the following research organizations: Regroupements stratégiques REPARTI and INTER (funded by the FQRNT), CanWheel (funded by the CIHR), the CRIR Rehabilitation Living Lab (funded by the FRQS), and the NSERC Canadian Network on Field Robotics. Additional funding was provided by NSERC’s Discovery program. We are also very grateful for the support of Andrew Sutcliffe, Anas el Fathi, Hai Nguyen and Richard Gourdeau for the realization of the empirical evaluation.

Cite this article

Kim, B., Pineau, J. Socially Adaptive Path Planning in Human Environments Using Inverse Reinforcement Learning. Int J of Soc Robotics 8, 51–66 (2016). https://doi.org/10.1007/s12369-015-0310-2

DOI: https://doi.org/10.1007/s12369-015-0310-2