Abstract

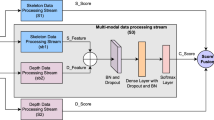

Recognizing human activity is challenging, especially when multiple actions and multiple scenarios are involved. This paper therefore proposes a multi-view, multi-modal approach to human action recognition (HAR). First, motion representations, namely depth motion maps, motion history images, and skeleton images, are created from the depth, RGB, and skeleton data of an RGB-D sensor. Each motion representation is then trained separately using a 5-stack convolutional neural network (5S-CNN). To improve the recognition rate and accuracy, the skeleton representation is trained using a hybrid 5S-CNN and Bi-LSTM classifier. Decision-level fusion is then applied to combine the score values of the three motion representations, and the human activity is identified from the fused value. To evaluate the proposed 5S-CNN with Bi-LSTM method, experiments are conducted on the UTD-MHAD dataset. The results show that the proposed HAR method achieves better performance than other existing approaches.
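The decision-level fusion step can be illustrated with a minimal sketch. The abstract does not state which fusion rule is used, so the weighted average below is an assumption, as are the function names `fuse_scores` and `predict` and the example score values; it only shows how per-class scores from the depth, RGB, and skeleton streams might be combined into a single decision.

```python
from typing import List, Optional, Sequence


def fuse_scores(stream_scores: Sequence[Sequence[float]],
                weights: Optional[Sequence[float]] = None) -> List[float]:
    """Combine per-class scores from several streams by weighted averaging.

    Assumption: the fusion rule is a weighted mean; the paper's actual rule
    is not given in the abstract.
    """
    n_streams = len(stream_scores)
    if n_streams == 0:
        raise ValueError("at least one stream is required")
    n_classes = len(stream_scores[0])
    if any(len(s) != n_classes for s in stream_scores):
        raise ValueError("all streams must score the same number of classes")
    if weights is None:
        # Default: every stream contributes equally.
        weights = [1.0] * n_streams
    if len(weights) != n_streams:
        raise ValueError("one weight per stream is required")
    total = float(sum(weights))
    return [
        sum(w * s[c] for w, s in zip(weights, stream_scores)) / total
        for c in range(n_classes)
    ]


def predict(fused: Sequence[float]) -> int:
    """Return the index of the class with the highest fused score."""
    return max(range(len(fused)), key=lambda c: fused[c])


# Hypothetical softmax outputs for three classes (illustrative values only).
depth_scores = [0.6, 0.3, 0.1]     # stream trained on depth motion maps
rgb_scores = [0.2, 0.5, 0.3]       # stream trained on motion history images
skeleton_scores = [0.1, 0.7, 0.2]  # stream trained on skeleton images

fused = fuse_scores([depth_scores, rgb_scores, skeleton_scores])
label = predict(fused)
```

In this example the depth stream alone would choose class 0, but the fused scores favour class 1 because the other two streams agree on it. Unequal `weights` could be passed to give one stream more influence than the others.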

Data Availability

Data sharing is not applicable to this article because of the proprietary nature of the data.

Code Availability

Code sharing is not applicable to this article because of the proprietary nature of the code.

Funding

The authors declare that they have competing interests and funding.

Author information

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Kumar, R., Kumar, S. Multi-view Multi-modal Approach Based on 5S-CNN and BiLSTM Using Skeleton, Depth and RGB Data for Human Activity Recognition. Wireless Pers Commun 130, 1141–1159 (2023). https://doi.org/10.1007/s11277-023-10324-4

DOI: https://doi.org/10.1007/s11277-023-10324-4