Abstract

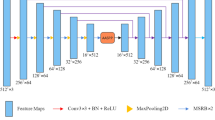

Precise lesion segmentation helps doctors diagnose disease efficiently. Unet is widely used in medical image segmentation because of its strong feature fusion ability. However, deep networks based on Unet extract lesion features poorly and segment with insufficient accuracy, because medical image datasets are generally small, lesion areas are small, and Unet does not weight information by its importance. To overcome these shortcomings, we propose a Unet-based attention network for accurate segmentation of liver CT images. Specifically, we first design an attention module (DASGC) that attends to multi-scale spatial information and inter-channel information simultaneously, so that it gives more weight to important features and screens feature information effectively. Second, building on DASGC's efficient use of limited information, we design an improved Unet (DASGC-Unet) to address the problem that Unet cannot segment accurately when little image information is available. Finally, on the public LiTS2017 dataset, our method achieves the best mIoU, IoU, and Dice coefficient among the advanced attention-based networks compared.
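The three reported metrics can be illustrated with a minimal sketch. It assumes a binary foreground/background mask and takes mIoU as the mean of the foreground and background IoU; the abstract does not state the exact class setup used in the paper, so the function names and this two-class averaging are illustrative assumptions only.

```python
def confusion_counts(pred, target):
    """Count TP, FP, FN, TN over two binary masks given as nested lists of 0/1."""
    tp = fp = fn = tn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def iou(tp, fp, fn):
    """Intersection over union: TP / (TP + FP + FN)."""
    denom = tp + fp + fn
    return tp / denom if denom else 1.0  # both masks empty: treat as perfect


def dice(tp, fp, fn):
    """Dice coefficient: 2*TP / (2*TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def segmentation_metrics(pred, target):
    """Return foreground IoU, two-class mIoU (assumed definition), and Dice."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    fg_iou = iou(tp, fp, fn)
    # For the background class the roles swap: TN is the overlap,
    # and each foreground FN/FP is a background FP/FN.
    bg_iou = iou(tn, fn, fp)
    return {
        "IoU": fg_iou,
        "mIoU": (fg_iou + bg_iou) / 2,
        "Dice": dice(tp, fp, fn),
    }


# Toy 3x3 masks (hypothetical data, not from LiTS2017).
pred = [[1, 1, 0], [0, 1, 0], [0, 0, 0]]
target = [[1, 1, 0], [0, 0, 0], [0, 1, 0]]
metrics = segmentation_metrics(pred, target)
print(metrics)
```

For a single binary mask, Dice and IoU are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank methods identically per image; they can diverge once averaged over images or classes, which is one reason to report both.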

Data Availability

Data will be made available on reasonable request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 62102331, 62176125 and 61772272, the Natural Science Foundation of Sichuan Province under Grant 2022NSFSC0839, and the Doctoral Research Fund Project of Southwest University of Science and Technology (22zx7110).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zhang, X., Chen, Y., Pu, L. et al. DASGC-Unet: An Attention Network for Accurate Segmentation of Liver CT Images. Neural Process Lett 55, 12289–12308 (2023). https://doi.org/10.1007/s11063-023-11421-y

DOI: https://doi.org/10.1007/s11063-023-11421-y