Abstract

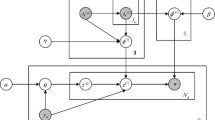

Statistical topic modeling approaches such as Latent Dirichlet allocation (LDA) have recently been widely applied to document classification. However, standard LDA is a completely unsupervised algorithm, so there is growing interest in incorporating prior information into the topic modeling procedure. Several effective approaches have been developed to model different kinds of prior information, for example observed labels, hidden labels, the correlation among labels, and label frequencies; however, these methods often require heavy computation because of their model complexity. In this paper, we propose Twin Labeled LDA (TL-LDA), a new supervised topic model for document classification that consists of two parallel topic modeling processes: one incorporates the prior label information through hierarchical Dirichlet distributions, and the other models the grouping tags, which carry prior knowledge about label correlation. Because the two processes are independent of each other, TL-LDA can be trained efficiently with multi-threaded parallel computing. Quantitative experiments against state-of-the-art approaches show that our model achieves the best scores on both rank-based and binary prediction metrics for single-label classification, and the best scores on three metrics, i.e., One Error, Micro-F1, and Macro-F1, for multi-label classification on both non-power-law and power-law datasets. The results show that, by fully modeling prior knowledge, our model achieves outstanding performance and generalizability in document classification. Further comparisons with recent work also indicate that the proposed model is competitive with state-of-the-art approaches.
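The key engineering point above is that the label process and the grouping-tag process share no state, so they can be trained concurrently. The sketch below illustrates only that structure and is not the TL-LDA algorithm: it swaps in a plain Labeled-LDA-style collapsed Gibbs sampler with symmetric Dirichlet priors `alpha` and `beta` (an assumption; the paper uses hierarchical Dirichlet distributions for the label prior), and the names `labeled_lda_gibbs` and `train_twin` are hypothetical. Each token in document `d` may only take a topic listed in that document's label (or group) set.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def labeled_lda_gibbs(docs, doc_labels, num_topics, vocab_size,
                      alpha=0.1, beta=0.01, iters=50, seed=0):
    """Collapsed Gibbs sampling for a Labeled-LDA-style model (sketch).

    docs[d] is a list of word ids; doc_labels[d] is the list of topic ids
    that tokens in document d may take. Symmetric Dirichlet priors are an
    assumption of this sketch, not the hierarchical prior of TL-LDA.
    Returns estimated topic-word distributions phi[k][w].
    """
    rng = random.Random(seed)
    n_kw = [[0] * vocab_size for _ in range(num_topics)]  # topic-word counts
    n_k = [0] * num_topics                                 # tokens per topic
    n_dk = [dict.fromkeys(labels, 0) for labels in doc_labels]  # doc-topic counts

    def update(d, k, w, delta):
        n_kw[k][w] += delta
        n_k[k] += delta
        n_dk[d][k] += delta

    # Random initialization, restricted to each document's allowed topics.
    z = []
    for d, words in enumerate(docs):
        z_d = [rng.choice(doc_labels[d]) for _ in words]
        for w, k in zip(words, z_d):
            update(d, k, w, +1)
        z.append(z_d)

    for _ in range(iters):
        for d, words in enumerate(docs):
            allowed = doc_labels[d]
            for i, w in enumerate(words):
                update(d, z[d][i], w, -1)  # remove the current assignment
                # Full conditional p(z = t | rest), evaluated only for allowed t.
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][w] + beta)
                           / (n_k[t] + vocab_size * beta) for t in allowed]
                k = rng.choices(allowed, weights=weights)[0]
                z[d][i] = k
                update(d, k, w, +1)

    # Posterior-mean estimate of each topic's word distribution.
    return [[(n_kw[k][w] + beta) / (n_k[k] + vocab_size * beta)
             for w in range(vocab_size)] for k in range(num_topics)]


def train_twin(docs, labels, groups, num_labels, num_groups, vocab_size, **kw):
    """Run the label process and the group-tag process concurrently.

    The two samplers share no count tables, so neither waits on the other.
    """
    with ThreadPoolExecutor(max_workers=2) as pool:
        label_job = pool.submit(labeled_lda_gibbs, docs, labels,
                                num_labels, vocab_size, **kw)
        group_job = pool.submit(labeled_lda_gibbs, docs, groups,
                                num_groups, vocab_size, **kw)
        return label_job.result(), group_job.result()
```

For example, `train_twin([[0, 1, 0, 2], [3, 4, 3]], [[0], [1]], [[0], [0]], 2, 1, 5)` returns one topic-word table per process. Threads are used here only to show the independence of the two processes; in CPython the global interpreter lock means pure-Python samplers will not actually run faster this way, so a real speedup would need processes or native code.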
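The multi-label results above are reported with One Error (a rank-based metric) and Micro-F1 and Macro-F1 (binary prediction metrics). A minimal sketch of their standard definitions follows; the function names are hypothetical, predictions are assumed to already be thresholded into label sets, and a label with no true or predicted occurrences is assumed to score F1 = 0 (conventions differ between toolkits). This is not the paper's evaluation code.

```python
def one_error(scores, true_sets):
    """Fraction of documents whose top-ranked label is not a true label."""
    errors = 0
    for s, y in zip(scores, true_sets):
        top = max(range(len(s)), key=s.__getitem__)  # ties go to the lowest index
        errors += top not in y
    return errors / len(scores)


def f1(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); 0.0 when the denominator is 0 (assumed convention)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(pred_sets, true_sets, num_labels):
    """Micro-F1 pools counts over all labels; Macro-F1 averages per-label F1."""
    tp, fp, fn = [0] * num_labels, [0] * num_labels, [0] * num_labels
    for p, y in zip(pred_sets, true_sets):
        for j in range(num_labels):
            if j in p and j in y:
                tp[j] += 1
            elif j in p:
                fp[j] += 1
            elif j in y:
                fn[j] += 1
    micro = f1(sum(tp), sum(fp), sum(fn))
    macro = sum(f1(tp[j], fp[j], fn[j]) for j in range(num_labels)) / num_labels
    return micro, macro


scores = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.5, 0.4, 0.1]]
true_sets = [{0}, {1, 2}, {1}]
pred_sets = [{0}, {2}, {0, 1}]
print(one_error(scores, true_sets))                        # 0.333...
print(micro_macro_f1(pred_sets, true_sets, num_labels=3))  # (0.75, 0.777...)
```

Micro-F1 is dominated by frequent labels because it pools counts, whereas Macro-F1 weights every label equally, which is why the two can diverge on power-law label distributions.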

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant No. 61772352, and in part by the Science and Technology Planning Project of Sichuan Province under Grant Nos. 2019YFG0400, 2018GZDZX0031, 2018GZDZX0004, 2017GZDZX0003, 2018JY0182, and 19ZDYF1286.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wang, W., Guo, B., Shen, Y. et al. Twin labeled LDA: a supervised topic model for document classification. Appl Intell 50, 4602–4615 (2020). https://doi.org/10.1007/s10489-020-01798-x

DOI: https://doi.org/10.1007/s10489-020-01798-x