Abstract

This paper proposes and justifies two globally convergent Newton-type methods to solve unconstrained and constrained problems of nonsmooth optimization by using tools of variational analysis and generalized differentiation. Both methods are coderivative-based and employ generalized Hessians (coderivatives of subgradient mappings) associated with objective functions, which are either of class \({{\mathcal {C}}}^{1,1}\), or are represented in the form of convex composite optimization, where one of the terms may be extended-real-valued. The proposed globally convergent algorithms are of two types. The first one extends the damped Newton method and requires positive-definiteness of the generalized Hessians for its well-posedness and efficient performance, while the other algorithm is of the regularized Newton type and is well-defined when the generalized Hessians are merely positive-semidefinite. The obtained convergence rates for both methods are at least linear, but become superlinear under the semismooth\(^*\) property of subgradient mappings. Problems of convex composite optimization are investigated with and without the strong convexity assumption on smooth parts of objective functions by implementing the machinery of forward–backward envelopes. Numerical experiments are conducted for Lasso problems and for box-constrained quadratic programs, with performance comparisons between the new algorithms and some other first-order and second-order methods that are highly recognized in nonsmooth optimization.

References

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd edn. Springer, New York (2017)

Beck, A.: Introduction to Nonlinear Optimization: Theory, Algorithms, and Applications with MATLAB. SIAM, Philadelphia, PA (2014)

Beck, A.: First-Order Methods in Optimization. SIAM, Philadelphia, PA (2017)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Becker, S., Fadili, M.J.: A quasi-Newton proximal splitting method. Adv. Neural Inform. Process. Syst. 25, 2618–2626 (2012)

Bertsekas, D.P.: Nonlinear Programming, 3rd edn. Athena Scientific, Belmont, MA (2016)

Bonnans, J.F.: Local analysis of Newton-type methods for variational inequalities and nonlinear programming. Appl. Math. Optim. 29, 161–186 (1994)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learning 3, 1–122 (2010)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Chieu, N.H., Chuong, T.D., Yao, J.-C., Yen, N.D.: Characterizing convexity of a function by its Fréchet and limiting second-order subdifferentials. Set-Valued Var. Anal. 19, 75–96 (2011)

Chieu, N.H., Lee, G.M., Yen, N.D.: Second-order subdifferentials and optimality conditions for \({{\cal{C} }}^1\)-smooth optimization problems. Appl. Anal. Optim. 1, 461–476 (2017)

Chieu, N.H., Hien, L.V., Nghia, T.T.A.: Characterization of tilt stability via subgradient graphical derivative with applications to nonlinear programming. SIAM J. Optim. 28, 2246–2273 (2018)

Colombo, G., Henrion, R., Hoang, N.D., Mordukhovich, B.S.: Optimal control of the sweeping process over polyhedral controlled sets. J. Diff. Eqs. 260, 3397–3447 (2016)

Combettes, P.L., Pesquet, J.-C.: Proximal splitting methods in signal processing. In: Bauschke, H.H., et al. (eds.) Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 185–212. Springer, New York (2011)

Dan, H., Yamashita, N., Fukushima, M.: Convergence properties of the inexact Levenberg–Marquardt method under local error bound conditions. Optim. Meth. Softw. 17, 605–626 (2002)

Dennis, J.E., Moré, J.J.: Quasi-Newton methods, motivation and theory. SIAM Rev. 19, 46–89 (1977)

Ding, C., Sun, D., Ye, J.J.: First-order optimality conditions for mathematical programs with semidefinite cone complementarity constraints. Math. Program. 147, 539–579 (2014)

Dias, S., Smirnov, G.: On the Newton method for set-valued maps. Nonlinear Anal. TMA 75, 1219–1230 (2012)

Dontchev, A.L., Rockafellar, R.T.: Characterizations of strong regularity for variational inequalities over polyhedral convex sets. SIAM J. Optim. 6, 1087–1105 (1996)

Dontchev, A.L., Rockafellar, R.T.: Implicit Functions and Solution Mappings: A View from Variational Analysis, 2nd edn. Springer, New York (2014)

Drusvyatskiy, D., Lewis, A.S.: Tilt stability, uniform quadratic growth, and strong metric regularity of the subdifferential. SIAM J. Optim. 23, 256–267 (2013)

Drusvyatskiy, D., Mordukhovich, B.S., Nghia, T.T.A.: Second-order growth, tilt stability, and metric regularity of the subdifferential. J. Convex Anal. 21, 1165–1192 (2014)

Emich, K., Henrion, R.: A simple formula for the second-order subdifferential of maximum functions. Vietnam J. Math. 42, 467–478 (2014)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Statist. 32, 407–499 (2004)

Facchinei, F.: Minimization of SC1 functions and the Maratos effect. Oper. Res. Lett. 17, 131–137 (1995)

Facchinei, F., Pang, J.-S.: Finite-Dimensional Variational Inequalities and Complementarity Problems, vol. II. Springer, New York (2003)

Friedlander, M.P., Goodwin, A., Hoheisel, T.: From perspective maps to epigraphical projections. Math. Oper. Res. (2022). https://doi.org/10.1287/moor.2022.1317

Gabay, D., Mercier, B.: A dual algorithm for the solution of nonlinear variational problems via finite element approximations. Comput. Math. Appl. 2, 17–40 (1976)

Glowinski, R., Marroco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité, d’une classe de problèmes de Dirichlet non linéaires. Revue Française d’Automatique, Informatique et Recherche Opérationnelle 9, 41–76 (1975)

Gfrerer, H.: On directional metric regularity, subregularity and optimality conditions for nonsmooth mathematical programs. Set-Valued Var. Anal. 21, 151–176 (2013)

Gfrerer, H., Mordukhovich, B.S.: Complete characterization of tilt stability in nonlinear programming under weakest qualification conditions. SIAM J. Optim. 25, 2081–2119 (2015)

Gfrerer, H., Outrata, J.V.: On a semismooth\(^*\) Newton method for solving generalized equations. SIAM J. Optim. 31, 489–517 (2021)

Ginchev, I., Mordukhovich, B.S.: On directionally dependent subdifferentials. C. R. Acad. Bulg. Sci. 64, 497–508 (2011)

Hang, N.T.V., Mordukhovich, B.S., Sarabi, M.E.: Augmented Lagrangian method for second-order conic programs under second-order sufficiency. J. Glob. Optim. 82, 51–81 (2022)

Henrion, R., Mordukhovich, B.S., Nam, N.M.: Second-order analysis of polyhedral systems in finite and infinite dimensions with applications to robust stability of variational inequalities. SIAM J. Optim. 20, 2199–2227 (2010)

Henrion, R., Römisch, W.: On \(M\)-stationary points for a stochastic equilibrium problem under equilibrium constraints in electricity spot market modeling. Appl. Math. 52, 473–494 (2007)

Henrion, R., Outrata, J., Surowiec, T.: On the co-derivative of normal cone mappings to inequality systems. Nonlinear Anal. 71, 1213–1226 (2009)

Hestenes, M.R.: Multiplier and gradient methods. J. Optim. Theory Appl. 4, 303–320 (1969)

Hintermüller, M., Ito, K., Kunisch, K.: The primal-dual active set strategy as a semismooth Newton method. SIAM J. Optim. 13, 865–888 (2002)

Hiriart-Urruty, J.-B., Strodiot, J.-J., Nguyen, V.H.: Generalized Hessian matrix and second-order optimality conditions for problems with \(\cal{C} ^{1,1}\) data. Appl. Math. Optim. 11, 43–56 (1984)

Ho, C.H., Lin, C.J.: Large-scale linear support vector regression. J. Mach. Learn. Res. 13, 3323–3348 (2012)

Hoheisel, T., Kanzow, C., Mordukhovich, B.S., Phan, H.M.: Generalized Newton’s methods for nonsmooth equations based on graphical derivatives. Nonlinear Anal. 75, 1324–1340 (2012); Erratum in Nonlinear Anal. 86, 157–158 (2013)

Hsieh, C.J., Chang, K.W., Lin, C.J.: A dual coordinate descent method for large-scale linear SVM. Proceedings 25th International Conference on Machine Learning, pp. 408–415. Helsinki, Finland (2008)

Izmailov, A.F., Solodov, M.V.: Newton-Type Methods for Optimization and Variational Problems. Springer, New York (2014)

Izmailov, A.F., Solodov, M.V., Uskov, E.T.: Globalizing stabilized sequential quadratic programming method by smooth primal-dual exact penalty function. J. Optim. Theor. Appl. 169, 1–31 (2016)

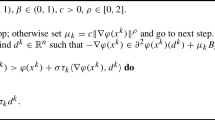

Khanh, P.D., Mordukhovich, B.S., Phat, V.T.: A generalized Newton method for subgradient systems. Math. Oper. Res. (2022). https://doi.org/10.1287/moor.2022.1320

Klatte, D., Kummer, B.: Nonsmooth Equations in Optimization. Regularity, Calculus, Methods and Applications. Kluwer Academic Publishers, Dordrecht (2002)

Kummer, B.: Newton’s method for non-differentiable functions. In: Guddat et al. (eds) Advances in Mathematical Optimization, pp. 114–124. Akademie-Verlag, Berlin (1988)

Lee, J.D., Sun, Y., Saunders, M.A.: Proximal Newton-type methods for minimizing composite functions. SIAM J. Optim. 24, 1420–1443 (2014)

Li, D.H., Fukushima, M., Qi, L., Yamashita, N.: Regularized Newton methods for convex minimization problems with singular solutions. Comput. Optim. Appl. 28, 131–147 (2004)

Li, X., Sun, D., Toh, K.-C.: A highly efficient semismooth Newton augmented Lagrangian method for solving Lasso problems. SIAM J. Optim. 28, 433–458 (2018)

Lions, P.-L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16, 964–979 (1979)

Meng, F., Sun, D., Zhao, Z.: Semismoothness of solutions to generalized equations and the Moreau–Yosida regularization. Math. Program. 104, 561–581 (2005)

Mohammadi, A., Mordukhovich, B.S., Sarabi, M.E.: Variational analysis of composite models with applications to continuous optimization. Math. Oper. Res. 47, 397–426 (2022)

Mohammadi, A., Mordukhovich, B.S., Sarabi, M.E.: Parabolic regularity in geometric variational analysis. Trans. Amer. Math. Soc. 374, 1711–1763 (2021)

Mohammadi, A., Sarabi, M.E.: Twice epi-differentiability of extended-real-valued functions with applications in composite optimization. SIAM J. Optim. 30, 2379–2409 (2020)

Mordukhovich, B.S.: Sensitivity analysis in nonsmooth optimization. In: Field, D.A., Komkov, V. (eds.) Theoretical Aspects of Industrial Design, pp. 32–46. SIAM Proc. Appl. Math. 58. Philadelphia, PA (1992)

Mordukhovich, B.S.: Complete characterizations of openness, metric regularity, and Lipschitzian properties of multifunctions. Trans. Am. Math. Soc. 340, 1–35 (1993)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation, I: Basic Theory, II: Applications. Springer, Berlin (2006)

Mordukhovich, B.S.: Variational Analysis and Applications. Springer, Cham (2018)

Mordukhovich, B.S., Nghia, T.T.A.: Second-order characterizations of tilt stability with applications to nonlinear programming. Math. Program. 149, 83–104 (2015)

Mordukhovich, B.S., Nghia, T.T.A.: Local monotonicity and full stability of parametric variational systems. SIAM J. Optim. 26, 1032–1059 (2016)

Mordukhovich, B.S., Outrata, J.V.: On second-order subdifferentials and their applications. SIAM J. Optim. 12, 139–169 (2001)

Mordukhovich, B.S., Outrata, J.V., Sarabi, M.E.: Full stability of local optimal solutions in second-order cone programming. SIAM J. Optim. 24, 1581–1613 (2014)

Mordukhovich, B.S., Rockafellar, R.T.: Second-order subdifferential calculus with applications to tilt stability in optimization. SIAM J. Optim. 22, 953–986 (2012)

Mordukhovich, B.S., Rockafellar, R.T., Sarabi, M.E.: Characterizations of full stability in constrained optimization. SIAM J. Optim. 23, 1810–1849 (2013)

Mordukhovich, B.S., Sarabi, M.E.: Generalized differentiation of piecewise linear functions in second-order variational analysis. Nonlinear Anal. 132, 240–273 (2016)

Mordukhovich, B.S., Sarabi, M.E.: Generalized Newton algorithms for tilt-stable minimizers in nonsmooth optimization. SIAM J. Optim. 31, 1184–1214 (2021)

Mordukhovich, B.S., Yuan, X., Zheng, S., Zhang, J.: A globally convergent proximal Newton-type method in nonsmooth convex optimization. Math. Program. 198, 899–936 (2023)

Nam, N.M.: Coderivatives of normal cone mappings and Lipschitzian stability of parametric variational inequalities. Nonlinear Anal. 73, 2271–2282 (2010)

Nesterov, Yu.: Lectures on Convex Optimization, 2nd edn. Springer, Cham (2018)

Nocedal, J., Wright, S.: Numerical Optimization. Springer, New York (2006)

Outrata, J.V., Sun, D.: On the coderivative of the projection operator onto the second-order cone. Set-Valued Anal. 16, 999–1014 (2008)

Pang, J.S.: Newton’s method for B-differentiable equations. Math. Oper. Res. 15, 311–341 (1990)

Pang, J.S., Qi, L.: A globally convergent Newton method for convex SC1 minimization problems. J. Optim. Theory Appl. 85, 633–648 (1995)

Patrinos, P., Bemporad, A.: Proximal Newton methods for convex composite optimization. In: IEEE Conference on Decision and Control, pp. 2358–2363 (2013)

Patrinos, P., Stella, L., Bemporad, A.: Forward-backward truncated Newton methods for convex composite optimization. arXiv:1402.6655 (2014)

Pelckmans, K., De Brabanter, J., De Moor, B., Suykens, J.A.K.: Convex clustering shrinkage. In: PASCAL Workshop on Statistics and Optimization of Clustering, pp. 1–6. London (2005)

Poliquin, R.A., Rockafellar, R.T.: Tilt stability of a local minimum. SIAM J. Optim. 8, 287–299 (1998)

Polyak, B.T.: Introduction to Optimization. Optimization Software, New York (1987)

Powell, M.J.D.: A method for nonlinear constraints in minimization problems. In: Fletcher, R. (ed.) Optimization, pp. 283–298. Academic Press, New York (1969)

Qi, L.: Convergence analysis of some algorithms for solving nonsmooth equations. Math. Oper. Res. 18, 227–244 (1993)

Qi, L., Sun, J.: A nonsmooth version of Newton’s method. Math. Program. 58, 353–367 (1993)

Qui, N.T.: Generalized differentiation of a class of normal cone operators. J. Optim. Theory Appl. 161, 398–429 (2014)

Robinson, S.M.: Newton’s method for a class of nonsmooth functions. Set-Valued Anal. 2, 291–305 (1994)

Rockafellar, R.T.: Augmented Lagrangian multiplier functions and duality in nonconvex programming. SIAM J. Control 12, 268–285 (1974)

Rockafellar, R.T.: Augmented Lagrangians and hidden convexity in sufficient conditions for local optimality. Math. Program. 198, 159–194 (2023)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

She, Y.: Sparse regression with exact clustering. Elect. J. Stat. 4, 1055–1096 (2010)

Stella, L., Themelis, A., Patrinos, P.: Forward-backward quasi-Newton methods for nonsmooth optimization problems. Comput. Optim. Appl. 67, 443–487 (2017)

Stella, L., Themelis, A., Patrinos, P.: Forward-backward envelope for the sum of two nonconvex functions: further properties and nonmonotone linesearch algorithms. SIAM J. Optim. 28, 2274–2303 (2018)

Tibshirani, R.: Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. 58, 267–288 (1996)

Ulbrich, M.: Semismooth Newton Methods for Variational Inequalities and Constrained Optimization Problems in Function Spaces. SIAM, Philadelphia, PA (2011)

Yamashita, N., Fukushima, M.: On the rate of convergence of the Levenberg–Marquardt method. In: Alefeld, G., Chen, X. (eds.) Topics in Numerical Analysis, vol. 15, pp. 239–249. Springer, Vienna (2001)

Yao, J.-C., Yen, N.D.: Coderivative calculation related to a parametric affine variational inequality. Part 1: Basic calculation. Acta Math. Vietnam. 34, 157–172 (2009)

Acknowledgements

The authors are very grateful to three anonymous referees for their helpful remarks and suggestions, which allowed us to significantly improve the original presentation.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Pham Duy Khanh: Research of this author is funded by the Ministry of Education and Training Research Funding under grant B2023-SPS-02. Boris S. Mordukhovich: Research of this author was partly supported by the US National Science Foundation under Grants DMS-1808978 and DMS-2204519, by the US Air Force Office of Scientific Research under Grant #15RT0462, and by the Australian Research Council under Discovery Project DP-190100555. Vo Thanh Phat: Research of this author was partly supported by the US National Science Foundation under Grants DMS-1808978 and DMS-2204519, and by the US Air Force Office of Scientific Research under Grant #15RT0462. Dat Ba Tran: Research of this author was partly supported by the US National Science Foundation under Grants DMS-1808978 and DMS-2204519.

Appendix: Some technical lemmas

This section contains four technical lemmas used in the text.

The first lemma is a local version of [44, Lemma 2.20]. The proof of this result is similar to the original one, and thus it is omitted.

Lemma 3

Let \(\Omega \subset \mathrm{I\!R}^n\) be an open set, and let \(\varphi :\Omega \rightarrow \mathrm{I\!R}\) be a continuously differentiable function such that \(\nabla \varphi \) is Lipschitz continuous with modulus \(L >0\). Then for any \(\sigma >0\), \(x \in \Omega \), and \(d \in \mathrm{I\!R}^n\) satisfying \(\langle \nabla \varphi (x),d\rangle <0\), the following inequality

holds whenever \(\tau \in (0,\overline{\tau }]\) and \(x+\tau d \in \Omega \), where
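For orientation, a minimal numerical sketch of the kind of estimate this lemma concerns. It assumes the standard Armijo form \(\varphi (x+\tau d)\le \varphi (x)+\sigma \tau \langle \nabla \varphi (x),d\rangle \) with threshold \(\overline{\tau }=2(\sigma -1)\langle \nabla \varphi (x),d\rangle /(L\Vert d\Vert ^2)\) and \(\sigma \in (0,1)\), as in the classical descent-lemma argument; this form is an assumption and is not quoted from the text. The test function, the point, and the names `armijo_threshold` and `check_armijo` are hypothetical.

```python
def armijo_threshold(grad_dot_d, d_norm_sq, L, sigma):
    """Assumed threshold tau_bar = 2 (sigma - 1) <grad, d> / (L ||d||^2)."""
    return 2.0 * (sigma - 1.0) * grad_dot_d / (L * d_norm_sq)


def check_armijo(num_steps=50):
    # Hypothetical test function phi(x) = 0.5 * (x1^2 + 4 * x2^2);
    # its gradient is Lipschitz continuous with modulus L = 4.
    h = (1.0, 4.0)
    L, sigma = 4.0, 0.3

    def phi(x):
        return 0.5 * sum(hi * xi * xi for hi, xi in zip(h, x))

    x = (1.0, -2.0)
    grad = tuple(hi * xi for hi, xi in zip(h, x))
    d = tuple(-g for g in grad)                   # steepest descent direction
    gd = sum(g * di for g, di in zip(grad, d))    # <grad phi(x), d> < 0
    tau_bar = armijo_threshold(gd, sum(di * di for di in d), L, sigma)

    ok = True
    for i in range(1, num_steps + 1):
        tau = tau_bar * i / num_steps             # sample tau in (0, tau_bar]
        x_new = tuple(xi + tau * di for xi, di in zip(x, d))
        # Assumed Armijo inequality, with a small floating-point tolerance.
        ok = ok and phi(x_new) <= phi(x) + sigma * tau * gd + 1e-12
    return tau_bar, ok
```

Calling `check_armijo()` samples stepsizes up to the assumed threshold and reports whether the sufficient-decrease inequality held at each of them for this toy quadratic.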

The next lemma provides conditions for the R-linear and Q-linear convergence of sequences.

Lemma 4

(estimates for convergence rates) Let \(\{\alpha _k\}, \{\beta _k \}\), and \(\{\gamma _k \}\) be sequences of positive numbers. Assume that there exist numbers \(c_i>0\), \(i=1,2,3\), and \(k_0\in \mathrm{I\!N}\) such that for all \(k \ge k_0\) we have the estimates:

- (i) \(\alpha _k - \alpha _{k+1} \ge c_1 \beta _k^2\).

- (ii) \(\beta _k \ge c_2 \gamma _k\).

- (iii) \(c_3 \gamma _k^2 \ge \alpha _k\).

Then the sequence \(\{\alpha _k \}\) Q-linearly converges to zero, and the sequences \(\{\beta _k \}\) and \(\{\gamma _k \}\) R-linearly converge to zero as \(k\rightarrow \infty \).

Proof

Combining (i), (ii), and (iii) yields the inequalities

\[\alpha _k-\alpha _{k+1}\ge c_1\beta _k^2\ge c_1c_2^2\gamma _k^2\ge \frac{c_1c_2^2}{c_3}\,\alpha _k\quad \text{for all }\;k\ge k_0,\]

which imply that \(\alpha _{k+1}\le q \alpha _k\), where \(q:= 1- (c_1c_2^2)/c_3\in (0,1)\). This verifies that the sequence \(\{\alpha _{k}\}\) Q-linearly converges to zero. Using the latter and the assumed condition (i) ensures, with \(k_0=0\) taken without loss of generality, that

\[\beta _k^2\le \frac{1}{c_1}\big (\alpha _k-\alpha _{k+1}\big )\le \frac{\alpha _k}{c_1}\le \frac{\alpha _0}{c_1}\,q^k,\]

which tells us that \(\beta _k \le c\mu ^k\), where \(c:= \sqrt{\alpha _0/c_1}\) and \(\mu :=\sqrt{q}\). This justifies the R-linear convergence of the sequence \(\{\beta _k \}\) to zero. Furthermore, it easily follows from (ii) that the sequence \(\{\gamma _k \}\) R-linearly converges to zero, and thus we are done with the proof. \(\square \)
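The following Python sketch illustrates Lemma 4 on a toy instance that is not taken from the paper. It runs gradient descent with stepsize \(1/L\) on the hypothetical quadratic \(f(x)=\tfrac{1}{2}(x_1^2+10x_2^2)\) and sets \(\alpha _k:=f(x_k)-\min f\), \(\beta _k:=\Vert \nabla f(x_k)\Vert \), \(\gamma _k:=\Vert x_k-x^*\Vert \). For this example, conditions (i)–(iii) hold with the assumed constants \(c_1=1/(2L)\), \(c_2=m\), \(c_3=L/2\), where \(m\) and \(L\) are the smallest and largest eigenvalues of the Hessian.

```python
import math


def lemma4_demo(num_iters=30):
    # Hypothetical problem: f(x) = 0.5 * (h1 * x1^2 + h2 * x2^2), minimizer x* = 0.
    h = (1.0, 10.0)
    m, L = min(h), max(h)                      # extreme eigenvalues of the Hessian
    c1, c2, c3 = 1.0 / (2.0 * L), m, L / 2.0   # assumed constants for (i)-(iii)
    q = 1.0 - c1 * c2 ** 2 / c3                # contraction factor from the proof

    x = [1.0, 1.0]
    alphas, betas, gammas = [], [], []
    for _ in range(num_iters):
        grad = [hi * xi for hi, xi in zip(h, x)]
        alphas.append(0.5 * sum(hi * xi * xi for hi, xi in zip(h, x)))  # alpha_k
        betas.append(math.hypot(*grad))        # beta_k  = ||grad f(x_k)||
        gammas.append(math.hypot(*x))          # gamma_k = ||x_k - x*||
        x = [xi - gi / L for xi, gi in zip(x, grad)]  # gradient step of size 1/L
    return alphas, betas, gammas, (c1, c2, c3, q)
```

The returned lists can be checked directly against (i)–(iii), against the Q-linear estimate \(\alpha _{k+1}\le q\alpha _k\), and against the R-linear bound \(\beta _k\le \sqrt{\alpha _0/c_1}\,q^{k/2}\).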

Now we obtain a useful result on the semismooth\(^*\) property of compositions.

Lemma 5

(semismooth\(^*\) property of composition mappings) Let \(A \in \mathrm{I\!R}^{n\times n}\) be a symmetric nonsingular matrix, \(b \in \mathrm{I\!R}^n\), \({\bar{x}}\in \mathrm{I\!R}^n\), and \(f:\mathrm{I\!R}^n \rightarrow \mathrm{I\!R}^n\) be continuous and semismooth\(^*\) at \({\bar{y}}:=A{\bar{x}}+b\). Then the mapping \(g: \mathrm{I\!R}^n \rightarrow \mathrm{I\!R}^n\) defined by \(g(x):= f(Ax+b)\) is semismooth\(^*\) at \({\bar{x}}\).

Proof

Using the coderivative chain rule from [59, Theorem 1.66], we get

Denote \(\mu := \sqrt{\textrm{max}\{1,\Vert A\Vert ^2 \}\cdot \textrm{max}\{1,\Vert A^{-1}\Vert ^2\}}>0\). Picking any \(\varepsilon >0\) and employing the semismooth\(^*\) property of f at \({\bar{y}}\), we find \(\delta >0\) such that

for all \(y \in {\mathbb {B}}_\delta ({\bar{y}})\) and all \((x^*,y^*) \in \textrm{gph}\,D^*f(y)\). Denoting \(r:= \delta / \Vert A\Vert >0\) gives us \(y:=Ax+b \in {\mathbb {B}}_\delta ({\bar{y}})\) whenever \(x \in {\mathbb {B}}_r({\bar{x}})\). Picking now \(x \in {\mathbb {B}}_r({\bar{x}})\) and \((z^*,w^*) \in \textrm{gph}\,D^*g(x)\), we get \((A^{-1}z^*,w^*)\in \textrm{gph}\,D^*f(Ax+b)\) due to (7.2). It follows from (7.3) that

which verifies the semismooth\(^*\) property of g at \({\bar{x}}\). \(\square \)
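The displays referred to as (7.2) and (7.3) in this proof can be sketched as follows. This is a reconstruction under stated assumptions, not a quotation: it assumes the convention that \((x^*,y^*)\in \textrm{gph}\,D^*f(y)\) means \(x^*\in D^*f(y)(y^*)\), and that the semismooth\(^*\) property is expressed by the usual estimate with the product of norms on the right-hand side.

```latex
% (7.2): chain rule for g(x) = f(Ax + b) with A symmetric and nonsingular (assumed form)
\[
D^*g(x)(w^*) = A^{\top} D^*f(Ax+b)(w^*) = A\,D^*f(Ax+b)(w^*), \qquad w^*\in\mathbb{R}^n .
\]
% (7.3): semismooth^* estimate for f at \bar y with tolerance \varepsilon/\mu (assumed form)
\[
\big|\langle x^*, y-\bar y\rangle - \langle y^*, f(y)-f(\bar y)\rangle\big|
\le \frac{\varepsilon}{\mu}\,\big\|(y-\bar y,\, f(y)-f(\bar y))\big\|\cdot\big\|(x^*,y^*)\big\| .
\]
% Resulting estimate for g, with y = Ax + b and
% \langle A^{-1}z^*, A(x-\bar x)\rangle = \langle z^*, x-\bar x\rangle by symmetry of A
\begin{align*}
\big|\langle z^*, x-\bar x\rangle - \langle w^*, g(x)-g(\bar x)\rangle\big|
&= \big|\langle A^{-1}z^*, y-\bar y\rangle - \langle w^*, f(y)-f(\bar y)\rangle\big| \\
&\le \frac{\varepsilon}{\mu}\,\big\|(A(x-\bar x),\, g(x)-g(\bar x))\big\|\cdot\big\|(A^{-1}z^*, w^*)\big\| \\
&\le \varepsilon\,\big\|(x-\bar x,\, g(x)-g(\bar x))\big\|\cdot\big\|(z^*,w^*)\big\| .
\end{align*}
```

The last step uses \(\Vert (Au,v)\Vert \le \sqrt{\textrm{max}\{1,\Vert A\Vert ^2\}}\,\Vert (u,v)\Vert \) together with the analogous bound for \(A^{-1}\), which is where the choice of \(\mu \) in the proof comes from.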

The final lemma establishes tilt stability of strongly convex functions at stationary points.

Lemma 6

(strong convexity and tilt-stability) Let \(\varphi :\mathrm{I\!R}^n\rightarrow \overline{\mathrm{I\!R}}\) be an l.s.c. and strongly convex function with modulus \(\kappa >0\), and let \({\bar{x}}\in \textrm{dom}\,\varphi \) be such that \(0\in \partial \varphi ({\bar{x}})\). Then \({\bar{x}}\) is a tilt-stable local minimizer of \(\varphi \) with modulus \(\kappa ^{-1}\).

Proof

By the second-order characterization of strongly convex functions [10, Theorem 5.1], we have

This implies in turn that \({\bar{x}}\) is a tilt-stable local minimizer with modulus \(\kappa ^{-1}\) by the second-order characterization of tilt stability taken from [61, Theorem 3.5]. \(\square \)
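A sketch of the second-order condition this proof relies on, under the assumption that \(\partial ^2\varphi (x,y):=D^*(\partial \varphi )(x,y)\) denotes the generalized Hessian (the coderivative of the subgradient mapping) and that strong convexity with modulus \(\kappa \) is characterized in the usual way. The exact wording of [10, Theorem 5.1] is not reproduced here.

```latex
% Assumed form of the second-order characterization of strong convexity with modulus \kappa
\[
\langle z, w\rangle \ge \kappa\,\|w\|^2
\quad\text{for all } z\in\partial^2\varphi(x,y)(w),\ (x,y)\in\mathrm{gph}\,\partial\varphi,\ w\in\mathbb{R}^n .
\]
```

Under this reading, the uniform lower bound \(\kappa \) on the generalized Hessian corresponds to the tilt-stability modulus \(\kappa ^{-1}\) stated in the lemma.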

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Khanh, P.D., Mordukhovich, B.S., Phat, V.T. et al. Globally convergent coderivative-based generalized Newton methods in nonsmooth optimization. Math. Program. 205, 373–429 (2024). https://doi.org/10.1007/s10107-023-01980-2

DOI: https://doi.org/10.1007/s10107-023-01980-2

Keywords

- Nonsmooth optimization

- Variational analysis

- Generalized Newton methods

- Global convergence

- Linear and superlinear convergence rates

- Convex composite optimization

- Lasso problems