Abstract

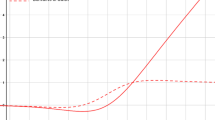

Activation functions in deep learning architectures play a significant role in transforming the data entering the network so that it produces the most appropriate output. Activation functions (AFs) are designed with goals such as avoiding local minima and improving training efficiency in mind. The AFs proposed in the literature frequently address negative weights and vanishing gradients. Recently, non-monotonic AFs have increasingly replaced earlier functions as a means of improving convolutional neural network (CNN) performance. In this study, two novel non-linear, non-monotonic activation functions, αSechSig and αTanhSig, are proposed to overcome these problems. The negative parts of αSechSig and αTanhSig are non-monotonic and approach zero as the negative input decreases, so the negative part retains its sparsity while still producing negative activation values and non-zero derivatives. In the experimental evaluation, αSechSig and αTanhSig were tested on the MNIST, KMNIST, Svhn_Cropped, STL-10, and CIFAR-10 datasets, where they achieved better results than the non-monotonic Swish, Logish, Mish, and Smish AFs and the monotonic ReLU, SinLU, and LReLU AFs known in the literature. The best accuracy scores for αSechSig and αTanhSig, 0.9959 and 0.9956 respectively, were obtained on MNIST.
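The abstract does not state the αSechSig and αTanhSig formulas, so the Python sketch below does not implement them. It instead illustrates the negative-part property described above using four of the non-monotonic baselines, in their commonly cited forms with default parameters (an assumption of this sketch): Swish x·σ(x), Mish x·tanh(softplus(x)), Logish x·ln(1 + σ(x)), and Smish x·tanh(ln(1 + σ(x))). For each function it locates the minimum on the negative axis, checks that the output at x = -20 is still negative but close to zero, and contrasts a central-difference derivative at x = -3 with ReLU's zero gradient there. The helpers `negative_part_profile` and `numerical_derivative` are hypothetical names introduced only for illustration.

```python
import numpy as np


def sigmoid(x):
    # Numerically stable logistic function: exp(-log(1 + exp(-x))) = 1 / (1 + exp(-x)).
    return np.exp(-np.logaddexp(0.0, -x))


def relu(x):
    return np.maximum(0.0, x)


def swish(x, beta=1.0):
    # Swish with beta = 1 (also known as SiLU); beta = 1 is an assumed default.
    return x * sigmoid(beta * x)


def mish(x):
    # softplus(x) = log(1 + exp(x)), computed stably with logaddexp.
    return x * np.tanh(np.logaddexp(0.0, x))


def logish(x):
    return x * np.log1p(sigmoid(x))


def smish(x):
    # Smish with its scale parameters set to 1 (assumed defaults).
    return x * np.tanh(np.log1p(sigmoid(x)))


def numerical_derivative(f, x, h=1e-5):
    # Hypothetical helper: central finite-difference estimate of f'(x).
    return (f(x + h) - f(x - h)) / (2.0 * h)


def negative_part_profile(f, lo=-20.0, n=20001):
    # Hypothetical helper: sample f on [lo, 0] and return
    # (location of the minimum, minimum value, value at lo).
    xs = np.linspace(lo, 0.0, n)
    ys = f(xs)
    i = int(np.argmin(ys))
    return float(xs[i]), float(ys[i]), float(ys[0])


if __name__ == "__main__":
    for name, f in [("Swish", swish), ("Mish", mish), ("Logish", logish), ("Smish", smish)]:
        x_min, y_min, y_far = negative_part_profile(f)
        grad = float(numerical_derivative(f, -3.0))
        print(f"{name:7s} min {y_min:+.4f} at x={x_min:+.3f}; f(-20)={y_far:+.2e}; f'(-3)={grad:+.4f}")
    print(f"ReLU    f'(-3)={float(numerical_derivative(relu, -3.0)):+.4f}")
```

Each baseline dips to a small negative minimum between x = -2 and x = -0.5 and then decays toward zero, while keeping a non-zero slope; ReLU's derivative at x = -3 is exactly zero. The same profile function could be pointed at any other candidate activation to compare its negative-part behaviour.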

Data availability

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial or non-financial interest in the subject matter or materials discussed in this manuscript. The datasets generated and/or analyzed during the current study are available in the TensorFlow Datasets catalog: https://www.tensorflow.org/datasets/catalog/overview.

References

Adem K, Közkurt C (2019) Defect detection of seals in multilayer aseptic packages using deep learning. Turk J Electr Eng Comput Sci 27(6):4220–4230. https://doi.org/10.3906/elk-1903-112

Adem K, Kiliçarslan S, Cömert O (2019) Classification and diagnosis of cervical cancer with stacked autoencoder and softmax classification. Expert Syst Appl 115:557–564

Adem K, Kiliçarslan S (2021) COVID-19 diagnosis prediction in emergency care patients using convolutional neural network. Afyon Kocatepe Üniversitesi Fen Ve Mühendis Bilim Derg 21(2). https://doi.org/10.35414/akufemubid.788898

Apicella A, Donnarumma F, Isgrò F, Prevete R (2021) A survey on modern trainable activation functions. Neural Netw 138:14–32. https://doi.org/10.1016/j.neunet.2021.01.026

Baş S (2018) A new version of spherical magnetic curves in the de-Sitter space S₁². Symmetry 10(11):606

Bawa VS, Kumar V (2019) Linearized sigmoidal activation: a novel activation function with tractable non-linear characteristics to boost representation capability. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2018.11.042

Clanuwat T, Bober-Irizar M, Kitamoto A, Lamb A, Yamamoto K, Ha D (2018) Deep learning for classical Japanese literature. arXiv:1812.01718. https://doi.org/10.20676/00000341

Clevert D-A, Unterthiner T, Hochreiter S (2016) Fast and accurate deep network learning by exponential linear units (ELUs). arXiv:1511.07289. http://arxiv.org/abs/1511.07289

Coates A, Ng A, Lee H (2011) An analysis of single-layer networks in unsupervised feature learning. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics, pp 215–223

Elen A (2022) Covid-19 detection from radiographs by feature-reinforced ensemble learning. Concurrency Computat Pract Exper 34(23):e7179. https://doi.org/10.1002/cpe.7179

Gironés RG, Palero RC, Boluda JC, Cortés AS (2005) FPGA implementation of a pipelined on-line backpropagation. J VLSI Signal Process Syst Signal Image Video Technol 40:189–213

Gorur K, Kaya Ozer C, Ozer I, Can Karaca A, Cetin O, Kocak I (2022) Species-level microfossil prediction for Globotruncana genus using machine learning models. Arab J Sci Eng, pp 1–18

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp 1026–1034. https://openaccess.thecvf.com/content_iccv_2015/html/He_Delving_Deep_into_ICCV_2015_paper.html

Hendrycks D, Gimpel K (2020) Gaussian error linear units (GELUs). arXiv:1606.08415. http://arxiv.org/abs/1606.08415

Kiliçarslan S, Celik M (2021) RSigELU: a nonlinear activation function for deep neural networks. Expert Syst Appl 174:114805. https://doi.org/10.1016/j.eswa.2021.114805

Kiliçarslan S, Celik M (2022) KAF + RSigELU: a nonlinear and kernel-based activation function for deep neural networks. Neural Comput Appl. https://doi.org/10.1007/s00521-022-07211-7

Kiliçarslan S, Közkurt C, Baş S, Elen A (2023) Detection and classification of pneumonia using novel Superior Exponential (SupEx) activation function in convolutional neural networks. Expert Syst Appl 217:119503

Kiliçarslan S, Adem K, Çelik M (2021) An overview of the activation functions used in deep learning algorithms. J New Results Sci 10(3). https://doi.org/10.54187/jnrs.1011739

Klambauer G, Unterthiner T, Mayr A, Hochreiter S (2017) Self-normalizing neural networks. arXiv:1706.02515. http://arxiv.org/abs/1706.02515

Korpinar T, Baş S (2019) A new approach for inextensible flows of binormal spherical indicatrices of magnetic curves. Int J Geom Methods Mod Phys 16(02):1950020

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Advances in neural information processing systems, vol 25. https://proceedings.neurips.cc/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324. https://doi.org/10.1109/5.726791

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Maas AL, Hannun AY, Ng AY (2013) Rectifier nonlinearities improve neural network acoustic models

Misra D (2020) Mish: a self regularized non-monotonic activation function. arXiv:1908.08681. http://arxiv.org/abs/1908.08681

Nair V, Hinton GE (2010) Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the international conference on machine learning (ICML). https://openreview.net/forum?id=rkb15iZdZB

Netzer Y, Wang T, Coates A, Bissacco A, Wu B, Ng AY (2011) Reading digits in natural images with unsupervised feature learning

Pacal I, Karaboga D (2021) A robust real-time deep learning based automatic polyp detection system. Comput Biol Med 134:104519

Paul A, Bandyopadhyay R, Yoon JH, Geem ZW, Sarkar R (2022) SinLU: sinu-sigmoidal linear unit. Mathematics 10(3):337. https://doi.org/10.3390/math10030337

Ramachandran P, Zoph B, Le QV (2017) Searching for activation functions. arXiv:1710.05941. http://arxiv.org/abs/1710.05941

Scardapane S, Van Vaerenbergh S, Totaro S, Uncini A (2019) Kafnets: kernel-based non-parametric activation functions for neural networks. Neural Netw 110:19–32. https://doi.org/10.1016/j.neunet.2018.11.002

Trottier L, Giguère P, Chaib-draa B (2018) Parametric exponential linear unit for deep convolutional neural networks. arXiv:1605.09332. http://arxiv.org/abs/1605.09332

Wang X, Ren H, Wang A (2022) Smish: a novel activation function for deep learning methods. Electronics 11(4):540. https://doi.org/10.3390/electronics11040540

Ying Y, Su J, Shan P, Miao L, Wang X, Peng S (2019) Rectified exponential units for convolutional neural networks. IEEE Access 7:101633–101640. https://doi.org/10.1109/ACCESS.2019.2928442

Zhou Y, Li D, Huo S, Kung S-Y (2021) Shape autotuning activation function. Expert Syst Appl 171:114534. https://doi.org/10.1016/j.eswa.2020.114534

Zhu H, Zeng H, Liu J, Zhang X (2021) Logish: a new nonlinear nonmonotonic activation function for convolutional neural network. Neurocomputing 458:490–499. https://doi.org/10.1016/j.neucom.2021.06.067

Funding

No funding was received to assist with the preparation of this manuscript.

Author information

Contributions

All authors have contributed equally.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical approval

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Közkurt, C., Kiliçarslan, S., Baş, S. et al. αSechSig and αTanhSig: two novel non-monotonic activation functions. Soft Comput 27, 18451–18467 (2023). https://doi.org/10.1007/s00500-023-09279-2

DOI: https://doi.org/10.1007/s00500-023-09279-2