Abstract

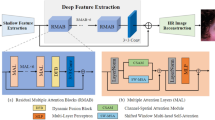

Single-image super-resolution (SISR) based on convolutional neural networks (CNNs) still faces several challenges: large parameter counts, limited receptive fields, and an inability to capture global context information. To address these issues, we propose an image super-resolution method based on attention aggregation hierarchy feature (AHSR), which improves the performance of the super-resolution (SR) network by optimizing the convolutional operations and integrating effective attention modules. AHSR first uses a high-frequency filter to bypass the abundant low-frequency information, so that the main network can focus on learning high-frequency information. To aggregate spatial information within the image, expand the receptive field, and extract local structural features more effectively, we replace spatial convolution with the shift operation, which has zero parameters and zero FLOPs. We also introduce a multi-Dconv head transposed attention module to improve the aggregation of cross-hierarchical feature information, yielding enhanced features that incorporate contextual information. Extensive experiments show that, compared with other advanced SR models, AHSR recovers image details better with fewer model parameters and lower computational complexity.
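To make the shift operation mentioned in the abstract concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes the common formulation in which each channel is displaced by a fixed offset with zero filling and a pointwise (1x1) convolution then mixes the channels; the function names `shift_channels` and `pointwise_mix`, the five offsets, and the all-ones weights are hypothetical choices for illustration only.

```python
def shift_channels(x, offsets):
    """Shift each channel of x (a C x H x W nested list) by its (dy, dx) offset.

    Positions that would read from outside the image are filled with zeros.
    The shift only moves values, so it needs no weights and no arithmetic.
    """
    shifted = []
    for channel, (dy, dx) in zip(x, offsets):
        h, w = len(channel), len(channel[0])
        out = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                si, sj = i - dy, j - dx  # source pixel for output (i, j)
                if 0 <= si < h and 0 <= sj < w:
                    out[i][j] = channel[si][sj]
        shifted.append(out)
    return shifted


def pointwise_mix(x, weights):
    """1x1 convolution: each output channel is a weighted sum of input channels."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wk * x[k][i][j] for k, wk in enumerate(row)) for j in range(w)]
         for i in range(h)]
        for row in weights
    ]


# A single-pixel impulse replicated over 5 channels (assumed toy input).
impulse = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
features = [[row[:] for row in impulse] for _ in range(5)]

# Hypothetical offsets: identity, down, up, right, left.
offsets = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]

shifted = shift_channels(features, offsets)
mixed = pointwise_mix(shifted, [[1.0, 1.0, 1.0, 1.0, 1.0]])

for row in mixed[0]:
    print(row)
# [0.0, 1.0, 0.0]
# [1.0, 1.0, 1.0]
# [0.0, 1.0, 0.0]
```

In this toy case the mixed output at each pixel depends on its four neighbours as well as itself, although the only learnable weights sit in the 1x1 mixing step. This is the sense in which shifting can stand in for a spatial convolution while adding no parameters of its own.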

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

Acknowledgements

This work was supported by the Scientific Research Fund of Hunan Provincial Education Department (Grant Nos. 22C0171 and 21B0329), the Traffic Science and Technology Project of Hunan Province (Grant No. 202042), and in part by the Changsha Municipal Natural Science Foundation (Grant No. kq2208236).

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Human or animal rights

This paper does not contain any studies with human or animal subjects.

About this article

Cite this article

Wang, J., Zou, Y. & Wu, H. Image super-resolution method based on attention aggregation hierarchy feature. Vis Comput 40, 2655–2666 (2024). https://doi.org/10.1007/s00371-023-02968-x
