Abstract

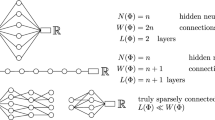

A key issue in the analysis of machine learning models is identifying the appropriate function space and norm for each model: that is, a set of functions endowed with a quantity that controls the approximation and estimation errors of that particular model. In this paper, we address this issue for two representative neural network models: two-layer networks and residual neural networks. We define the Barron space and show that it is the right space for two-layer neural network models, in the sense that optimal direct and inverse approximation theorems hold for functions in the Barron space. For residual neural network models, we construct the so-called flow-induced function space and prove direct and inverse approximation theorems for this space. In addition, we show that the Rademacher complexity of bounded sets under these norms admits optimal upper bounds.
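To make the idea of a norm that controls a two-layer network concrete, here is a minimal sketch, not taken from the abstract itself. It assumes a ReLU network scaled as f_m(x) = (1/m) Σ_k a_k σ(b_k·x + c_k) and a path-norm-style quantity (1/m) Σ_k |a_k| (‖b_k‖_1 + |c_k|), which is commonly used as the finite-width analogue of a Barron-type norm. The function names `two_layer_net` and `path_norm` and the sample weights are hypothetical.

```python
def relu(z):
    """ReLU activation: max(z, 0)."""
    return max(z, 0.0)


def two_layer_net(x, a, B, c):
    """Evaluate f_m(x) = (1/m) * sum_k a[k] * relu(<B[k], x> + c[k]).

    Assumed parameterization (illustrative only):
    a: outer weights, B: list of inner weight vectors, c: biases.
    """
    m = len(a)
    total = 0.0
    for a_k, b_k, c_k in zip(a, B, c):
        pre_activation = sum(w * x_i for w, x_i in zip(b_k, x)) + c_k
        total += a_k * relu(pre_activation)
    return total / m


def path_norm(a, B, c):
    """Path-norm-style quantity: (1/m) * sum_k |a_k| * (||b_k||_1 + |c_k|)."""
    m = len(a)
    return sum(
        abs(a_k) * (sum(abs(w) for w in b_k) + abs(c_k))
        for a_k, b_k, c_k in zip(a, B, c)
    ) / m


# Hypothetical weights for a width-2 network on 2-dimensional inputs.
a = [1.0, -2.0]
B = [[1.0, 0.0], [0.5, -0.5]]
c = [0.0, 1.0]
x = [1.0, 1.0]  # a point in the unit cube, so ||x||_inf <= 1

value = two_layer_net(x, a, B, c)
norm = path_norm(a, B, c)
```

For inputs with ‖x‖_∞ ≤ 1, each unit satisfies |a_k σ(b_k·x + c_k)| ≤ |a_k| (‖b_k‖_1 + |c_k|), so |f_m(x)| is bounded by the path norm. This is a simple instance of a norm controlling the size of the functions the model represents.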

Acknowledgements

The work presented here is supported in part by a gift to Princeton University from iFlytek and the ONR Grant N00014-13-1-0338.

Additional information

Communicated by Wolfgang Dahmen, Ronald A. DeVore, and Philipp Grohs.

About this article

Cite this article

E, W., Ma, C. & Wu, L. The Barron Space and the Flow-Induced Function Spaces for Neural Network Models. Constr Approx 55, 369–406 (2022). https://doi.org/10.1007/s00365-021-09549-y

DOI: https://doi.org/10.1007/s00365-021-09549-y