Abstract

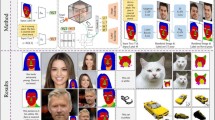

Unconditional human image generation is an important task in vision and graphics, enabling various applications in the creative industry. Existing studies in this field mainly focus on “network engineering” such as designing new components and objective functions. This work takes a data-centric perspective and investigates multiple critical aspects in “data engineering”, which we believe would complement the current practice. To facilitate a comprehensive study, we collect and annotate a large-scale human image dataset with over 230K samples capturing diverse poses and textures. Equipped with this large dataset, we rigorously investigate three essential factors in data engineering for StyleGAN-based human generation, namely data size, data distribution, and data alignment. Extensive experiments reveal several valuable observations regarding these aspects: 1) Large-scale data, more than 40K images, are needed to train a high-fidelity unconditional human generation model with a vanilla StyleGAN. 2) A balanced training set helps improve the generation quality for rare face poses compared to the long-tailed counterpart, whereas simply balancing the clothing texture distribution does not effectively bring an improvement. 3) Human GAN models that employ body centers for alignment outperform models trained using face centers or pelvis points as alignment anchors. In addition, a model zoo and human editing applications are demonstrated to facilitate future research in the community. Code and models are publicly available at https://github.com/stylegan-human/StyleGAN-Human (project page: https://stylegan-human.github.io/).
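
Observation 2 concerns rebalancing a long-tailed training set. As a minimal sketch of what such rebalancing could look like, assume each image has already been assigned to a discrete attribute bin (for example, a quantized face pose). The snippet below then draws the same number of samples from every bin. The helper name `balance_by_bin`, the bin labels, and the per-bin quota are illustrative assumptions and not the authors' procedure.

```python
import random
from collections import defaultdict


def balance_by_bin(items, bin_of, per_bin, seed=0):
    """Return a list with exactly `per_bin` items from every bin.

    Hypothetical helper: bins with fewer than `per_bin` items are
    oversampled with replacement; larger bins are subsampled without
    replacement.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for item in items:
        groups[bin_of(item)].append(item)

    balanced = []
    for key in sorted(groups):
        members = groups[key]
        if len(members) >= per_bin:
            balanced.extend(rng.sample(members, per_bin))
        else:
            balanced.extend(rng.choices(members, k=per_bin))
    return balanced


# Toy long-tailed set of (image_id, pose_bin) pairs, mostly frontal.
dataset = [(i, "frontal") for i in range(90)] + [(i, "profile") for i in range(90, 100)]
balanced = balance_by_bin(dataset, bin_of=lambda x: x[1], per_bin=20)
counts = {b: sum(1 for _, p in balanced if p == b) for b in ("frontal", "profile")}
```

In this toy setting the rare "profile" bin is oversampled with replacement, so some images repeat. Whether to oversample, subsample, or collect more data for rare bins is a design choice that this sketch does not settle.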

J. Fu and S. Li—Equal contribution.

Acknowledgements

This work is supported by NTU NAP, MOE AcRF Tier 1 (2021-T1-001-088), and under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Fu, J. et al. (2022). StyleGAN-Human: A Data-Centric Odyssey of Human Generation. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13676. Springer, Cham. https://doi.org/10.1007/978-3-031-19787-1_1

DOI: https://doi.org/10.1007/978-3-031-19787-1_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19786-4

Online ISBN: 978-3-031-19787-1

eBook Packages: Computer Science (R0)