Abstract

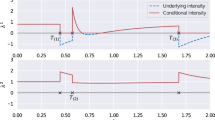

Event-scheduling algorithms can compute in continuous time the next occurrence of points (as events) of a counting process based on their current conditional intensity. In particular, event-scheduling algorithms can be adapted to simulate the activity of finite neuronal networks. These algorithms are based on Ogata’s thinning strategy (Ogata in IEEE Trans Inf Theory 27:23–31, 1981), which always needs to simulate the whole network to access the behavior of one particular neuron of the network. On the other hand, for discrete-time models, theoretical algorithms based on Kalikow decomposition can pick influencing neurons at random and perform a perfect simulation (meaning without approximations) of the behavior of one given neuron embedded in an infinite network, at every time step. These algorithms are currently not computationally tractable in continuous time. To solve this problem, an event-scheduling algorithm with Kalikow decomposition is proposed here for the sequential simulation of point-process neuronal models satisfying this decomposition. This new algorithm is applied to infinite neuronal networks whose finite-time simulation is a prerequisite to realistic brain modeling.


Change history

28 September 2023

A Correction to this paper has been published: https://doi.org/10.1007/s42979-023-02168-3

References

Andersen PK, Borgan O, Gill R, Keiding N. Statistical models based on counting processes. Berlin: Springer; 1996.

Brémaud P. Point processes and queues: martingale dynamics. Berlin: Springer; 1981.

Brémaud P, Massoulié L. Stability of nonlinear Hawkes processes. Ann Probab. 1996;24:1563–88.

Comets F, Fernandez R, Ferrari PA. Processes with long memory: regenerative construction and perfect simulation. Ann Appl Probab. 2002;3:921–43.

Muzy A. Exploiting activity for the modeling and simulation of dynamics and learning processes in hierarchical (neurocognitive) systems. Mag Comput Sci Eng. 2019;21:83–93.

Dassios A, Zhao H. Exact simulation of Hawkes process with exponentially decaying intensity. Electron Commun Probab. 2013;18(62):1–13.

Didelez V. Graphical models for marked point processes based on local independence. J R Stat Soc B. 2008;70(1):245–64.

Fernández R, Ferrari P, Galves A. Coupling, renewal and perfect simulation of chains of infinite order. In: Notes of a course in the V Brazilian School of Probability, Ubatuba, July 30–August 4, 2001. http://www.staff.science.uu.nl/~ferna107/.

Galves A, Löcherbach E. Infinite systems of interacting chains with memory of variable length—a stochastic model for biological neural nets. J Stat Phys. 2013;151(5):896–921.

Galves A, Löcherbach E. Modeling networks of spiking neurons as interacting processes with memory of variable length. Journal de la Société Française de Statistiques. 2016;157:17–32.

Hodara P, Löcherbach E. Hawkes processes with variable length memory and an infinite number of components. Adv Appl Probab. 2017;49:84–107.

Kalikow S. Random Markov processes and uniform martingales. Isr J Math. 1990;71(1):33–54.

Lewis PAW, Shedler GS. Simulation of nonhomogeneous Poisson processes. Monterey, California: Naval Postgraduate School; 1978.

Mascart C, Muzy A, Reynaud-Bouret P. Centralized and distributed simulations of point processes using local independence graphs: a computational complexity analysis. Under finalization for arXiv deposit; 2019.

Méléard S. Aléatoire: introduction à la théorie et au calcul des probabilités. Editions de l’école polytechnique; 2010. p. 185–194.

Møller J, Rasmussen JG. Perfect simulation of Hawkes processes. Adv Appl Probab. 2005;37:629–46.

Ogata Y. On Lewis’ simulation method for point processes. IEEE Trans Inf Theory. 1981;27:23–31.

Ost G, Reynaud-Bouret P. Sparse space-time models: concentration inequalities and Lasso. Under revision. https://arxiv.org/abs/1807.07615.

Peters EAJF, de With G. Rejection-free Monte Carlo sampling for general potentials. Phys Rev E. 2012;85:026703.

Reynaud-Bouret P, Schbath S. Adaptive estimation for Hawkes processes; application to genome analysis. Ann Stat. 2010;38(5):2781–822.

Tocher KD. PLUS/GPS III Specification. United Steel Companies Ltd, Department of Operational Research; 1967.

Vere-Jones D, Ozaki T. Some examples of statistical estimation applied to earthquake data. Ann Inst Stat Math. 1982;34(B):189–207.

Zeigler BP. Theory of modelling and simulation. New York: Wiley-Interscience Publication; 1976.

Zeigler BP, Muzy A, Kofman E. Theory of modeling and simulation: discrete event & iterative system computational foundations. New York: Academic Press; 2018.

Acknowledgements

This work was supported by the French government, through the UCA\(^{Jedi}\) Investissements d’Avenir managed by the National Research Agency (ANR-15-IDEX-01) and by the interdisciplinary Institute for Modeling in Neuroscience and Cognition (NeuroMod) of the Université Côte d’Azur. The authors would like to thank Professor E. Löcherbach from Paris 1 for great discussions about the Kalikow decomposition and the forward-backward algorithm.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Modelling methods in Computer Systems, Networks and Bioinformatics” guest edited by Erol Gelenbe.

Appendices

A Link Between Algorithm 2 and the Kalikow Decomposition

To prove that Algorithm 2 returns the desired processes, let us use some additional and more mathematical notation. Note that all the points simulated on neuron i, before being accepted or not, can be seen as coming from a common Poisson process of intensity M, denoted \(\varPi ^i\). For any \(i \in {\mathbf {I}}\), we denote the arrival times of \(\varPi ^i\) by \((T^i_n)_{n \in \mathbb {Z}}\), with \(T^i_1\) being the first positive time.

As in Step 6 of Algorithm 2, we attach to each point of \(\varPi ^i\) a stochastic mark X given by

Let us also define \(V^i_n\) as the neighborhood choice of \(T^i_n\), picked at random and independently of anything else according to \(\lambda _i\) and shifted at time \(T^i_n\).

In addition, for any \(i \in {\mathbf {I}}\), define \(N^i= (T^i_n,X^i_n)_{n \in \mathbb {Z}}\) as an E-marked point process with \(E=\{0,1\}\). In particular, following the notation in Chapter VIII of [2], for any \(i \in {\mathbf {I}}\), let

Moreover, note that \((N_t^i(1))_{t\in \mathbb {R}}\) is the counting process associated with the point process \(\mathbf{P}\) simulated by Algorithm 2. Let us denote by \(\varphi _i(t)\) the formula given by (2) and shifted at time t. Note that since the \(\phi _i^v\)’s are \({\mathcal {F}}_{0-}^{int}={\mathcal {F}}_{0-}^{N(1)}\) measurable, \(\varphi _i(t)\) is \({\mathcal {F}}^{N(1)}_{t^{-}}\) measurable. We also denote by \(\varphi _i^v(t)\) the formula of \(\phi _i^v\) shifted at time t.
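The marked construction above can be illustrated with a minimal Python sketch. It is not Algorithm 2 itself: it assumes a single neuron with an empty past before time 0, neighborhoods that are only labels for functions of that neuron's own accepted points, and an acceptance probability equal to the chosen \(\varphi ^v\) divided by M. The names `simulate_marked_points`, `weights` and `phis` are hypothetical.

```python
import random


def simulate_marked_points(M, weights, phis, t_end, seed=0):
    """Toy thinning with a random neighborhood choice (illustrative only).

    M       : rate of the dominating Poisson process of candidate points.
    weights : probabilities lambda(v) of each neighborhood (assumed to sum to 1).
    phis    : one function per neighborhood, phis[v](t, accepted) -> value in [0, M],
              where `accepted` is the list of previously accepted times.
    Returns a list of triples (T_n, V_n, X_n) with X_n in {0, 1}.
    """
    rng = random.Random(seed)
    t = 0.0
    accepted = []  # times carrying mark 1, i.e. the simulated process N(1)
    marked = []    # all candidate points with their neighborhood and mark
    while True:
        # Next candidate point of the Poisson process of intensity M.
        t += rng.expovariate(M)
        if t > t_end:
            break
        # Neighborhood picked independently of everything else.
        v = rng.choices(range(len(weights)), weights=weights)[0]
        # Accept with probability phis[v](t, past) / M (assumed acceptance rule).
        rate = phis[v](t, accepted)
        x = 1 if rng.random() * M < rate else 0
        if x == 1:
            accepted.append(t)
        marked.append((t, v, x))
    return marked


if __name__ == "__main__":
    M = 5.0
    phis = [
        lambda t, past: 1.0,  # empty neighborhood: constant base rate
        lambda t, past: min(M, 2.0 * sum(1 for s in past if t - 1.0 <= s < t)),
    ]
    points = simulate_marked_points(M, [0.5, 0.5], phis, t_end=10.0)
    print(sum(x for _, _, x in points), "accepted out of", len(points))
```

In this toy setting, the accepted points play the role of \(N^i(1)\), and averaging the chosen rate over the neighborhood choice recovers the weighted sum of the `phis`.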

With this notation, we can prove the following.

Proposition 2

The process \((N^i_t(1))_{t\in \mathbb {R}}\) admits \(\varphi _i(t)\) as \({\mathcal {F}}^{N(1)}_{t^{-}}\)-predictable intensity.

Proof

Following the technique in Chapter 2 of [2], let us take \(C_t\), a non-negative predictable function with respect to (w.r.t.) \({\mathcal {F}}^{N^i(1)}_{t}\), which is \({\mathcal {F}}^{N(1)}_{t-}\) measurable and therefore \({\mathcal {F}}^{N}_{t-}\) measurable. We have, for any \(i \in {\mathbf {I}}\),

Note that by Theorem T35 in Appendix A1 of [2], any point T should be understood as a stopping time and that by Theorem T30 in Appendix A2 of [2],

So

Let us now integrate with respect to the choice \(V^i_n\), which is independent of anything else.

Since \(\varPi ^i\) is a Poisson process with respect to \(({\mathcal {F}}_t^N)_t\) with intensity M, and since \(C_t \frac{\varphi _i(t)}{M}\) is \({\mathcal {F}}_{t-}^N\) measurable, we finally have that

which ends the proof.\(\square\)
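The steps of this proof can be summarized by the following chain of equalities. This is a sketch under stated assumptions, not the authors' exact displays: it assumes that integrals are taken over positive times, that formula (2) is the Kalikow decomposition \(\varphi _i(t)=\sum _v \lambda _i(v)\varphi _i^v(t)\) with v ranging over all neighborhoods (the empty one included), and that, given the past and the choice \(V^i_n\), the point \(T^i_n\) receives the mark 1 with probability \(\varphi _i^{V^i_n}(T^i_n)/M\).

```latex
\begin{align*}
\mathbb{E}\Big[\int_0^{\infty} C_t \, dN^i_t(1)\Big]
  &= \sum_{n \ge 1} \mathbb{E}\big[ C_{T^i_n} \mathbf{1}_{\{X^i_n = 1\}} \big] \\
  % condition on the past and on the neighborhood choice V^i_n
  &= \sum_{n \ge 1} \mathbb{E}\Big[ C_{T^i_n} \frac{\varphi_i^{V^i_n}(T^i_n)}{M} \Big] \\
  % integrate over V^i_n, which is independent of anything else
  &= \sum_{n \ge 1} \mathbb{E}\Big[ C_{T^i_n} \sum_{v} \lambda_i(v) \frac{\varphi_i^{v}(T^i_n)}{M} \Big]
   = \sum_{n \ge 1} \mathbb{E}\Big[ C_{T^i_n} \frac{\varphi_i(T^i_n)}{M} \Big] \\
  % \varPi^i is a Poisson process of intensity M w.r.t. (\mathcal{F}^N_t)_t
  &= \mathbb{E}\Big[\int_0^{\infty} C_t \frac{\varphi_i(t)}{M} \, d\varPi^i_t\Big]
   = \mathbb{E}\Big[\int_0^{\infty} C_t \, \varphi_i(t) \, dt\Big].
\end{align*}
```

The equality between the first and last terms, for every non-negative predictable \(C_t\), is the characterization of \(\varphi _i(t)\) as the predictable intensity of \(N^i(1)\).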

B Proof of Proposition 1

Proof

We do the proof for the backward part, starting with \(T=T_{next}\) as the next point after \(t_0\) (Step 4 of Algorithm 3), the proof being similar for the other \(T_{next}\) generated at Step 23. We construct a tree with root (i, T). For each point \((j_{T'},T')\) in the tree, the points which are simulated in \(V_{T'}\) (Step 12 of Algorithm 3) define the children of \((j_{T'}, T')\) in the tree. This forms the tree \(\tilde{{\mathcal {T}}}\).

Let us now build a tree \(\tilde{{\mathcal {C}}}\) with root (i, T) (that includes the previous tree) by mimicking the previous procedure in the backward part, except that we simulate on the whole neighborhood even if it has a part that intersects with previous neighborhoods (if they exist) (Steps 11–12 of Algorithm 3). By doing so, we make the number of children at each node independent of anything else.

If the tree \(\tilde{{\mathcal {C}}}\) goes extinct, then so does the tree \(\tilde{{\mathcal {T}}}\), and the backward part of the algorithm terminates.

But if one only counts the number of children in the tree \(\tilde{{\mathcal {C}}}\), we have a marked branching process whose reproduction distribution for the mark i is given by

- no children with probability \(\lambda _i(\emptyset )\)

- a Poissonian number of children with parameter \(l(v)M\) if v is the chosen neighborhood, with probability \(\lambda _i(v)\)

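This gives that the average number of children issued from a node with the mark i is

\[ M \sum _{v \ne \emptyset } \lambda _i(v)\, l(v), \]

since the empty neighborhood contributes no children and a Poisson variable with parameter \(l(v)M\) has mean \(l(v)M\).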

If we denote by \(\tilde{{\mathcal {C}}}^k\) the collection of points in the tree \(\tilde{{\mathcal {C}}}\) at generation k, and by \(K_{T'}\) the set of points generated independently as a Poisson process of rate M inside \(V_{T'}\), we see recursively that

But

Therefore, if we denote the total number of sites in \(\tilde{{\mathcal {C}}}^k\) by \(Z^{(k)}\), we have

One can then conclude by recursion that

The last inequality uses the sparsity neighborhood assumption. Then, we deduce that the mean number of children in each generation goes to 0 as k tends to infinity. So using classical branching techniques in [15], we conclude that the tree \(\tilde{{\mathcal {C}}}\) will go extinct almost surely. This also implies that the backward steps end a.s. \(\square\)
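The extinction argument can be checked numerically with a short Python sketch of the branching process that counts children in \(\tilde{{\mathcal {C}}}\). It is a simplification, not the backward part of Algorithm 3: it assumes that every mark shares the same neighborhood distribution, that each neighborhood is summarized by its length \(l(v)\), and that the mean number of children is below 1. The function names are hypothetical.

```python
import random


def poisson(rng, mu):
    """Sample Poisson(mu) by counting rate-1 exponential arrivals before mu."""
    count, arrival = 0, rng.expovariate(1.0)
    while arrival < mu:
        count += 1
        arrival += rng.expovariate(1.0)
    return count


def mean_children(weights, lengths, M):
    """Mean offspring number: M * sum_v lambda(v) * l(v)."""
    return M * sum(w * l for w, l in zip(weights, lengths))


def tree_size(weights, lengths, M, rng, max_nodes=1_000_000):
    """Total number of nodes of one tree, root included.

    weights : probabilities lambda(v) of each neighborhood (assumed to sum to 1).
    lengths : l(v) for each neighborhood; 0.0 encodes the empty neighborhood.
    """
    pending, total = 1, 1  # nodes still to expand, nodes created so far
    while pending:
        pending -= 1
        # Each node picks its neighborhood independently of anything else.
        v = rng.choices(range(len(weights)), weights=weights)[0]
        # Children are the points of a rate-M Poisson process inside v.
        children = poisson(rng, lengths[v] * M)
        pending += children
        total += children
        if total > max_nodes:
            raise RuntimeError("tree did not go extinct within max_nodes")
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    weights, lengths, M = [0.5, 0.3, 0.2], [0.0, 1.0, 2.0], 0.5
    m = mean_children(weights, lengths, M)
    sizes = [tree_size(weights, lengths, M, rng) for _ in range(10_000)]
    print("mean children:", m, "average tree size:", sum(sizes) / len(sizes))
```

When the mean number of children m is below 1, every simulated tree should terminate, and the average tree size should be close to the classical value \(1/(1-m)\) for a subcritical branching process.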


Cite this article

Phi, T.C., Muzy, A. & Reynaud-Bouret, P. Event-Scheduling Algorithms with Kalikow Decomposition for Simulating Potentially Infinite Neuronal Networks. SN COMPUT. SCI. 1, 35 (2020). https://doi.org/10.1007/s42979-019-0039-3


DOI: https://doi.org/10.1007/s42979-019-0039-3