Abstract

The law guarantees the regular functioning of the nation and society. In recent years, legal artificial intelligence (legal AI), which aims to apply artificial intelligence techniques to perform legal tasks, has received significant attention. Legal AI can provide a handy reference and convenient legal services for legal professionals and non-specialists, thus benefiting real-world legal practice. Different from general open-domain tasks, legal tasks have a high demand for understanding and applying expert knowledge. Therefore, enhancing models with various legal knowledge is a key issue of legal AI. In this chapter, we summarize the existing knowledge-intensive legal AI approaches regarding knowledge representation, acquisition, and application. Besides, future directions and ethical considerations are also discussed to promote the development of legal AI.

11.1 Introduction

The law is the cornerstone of human civilization, and it guarantees the regular functioning of our state and society. The development of law has always been an important symbol of the development of human civilization. The practice of law can be traced back to 3000 BC. Ancient Egyptian law regulated social norms and encouraged people to be at peace with each other [97]. Over the millennia of development and progress, the law can reach every aspect of human activities nowadays. It regulates and mediates the relations and interactions between people, institutions, and state authorities. For example, international law concerns relations between sovereign nations; administrative law regulates the behavior of state authorities; criminal and civil law guarantees the fundamental rights of citizens.

The legal domain is knowledge-intensive and demands a high level of expertise from its practitioners. Legal practitioners, including judges and lawyers, need years of study and work experience to qualify for their jobs, which makes them scarce and hard to replace. Besides, as society develops and technology advances, public communication and interactions become more frequent, leading to more legal disputes and increasing demand for legal services. Two urgent challenges in real-world judicial practice are therefore increasingly prominent: (1) High caseload for public authorities. Take China, the world’s most populous country, as an example: according to the statistics, the grassroots courts in China need to hear more than 30 million cases per year, with each judge hearing an average of 238 cases a year [1]. Each judge therefore works under heavy pressure. The same situation also exists in other countries, such as the United States [30]. (2) Scarcity of legal services for the public. The scarcity of lawyers leads to the high cost of legal services; in the United States, roughly 86% of low-income people who encounter legal problems cannot obtain adequate and timely legal assistance [23].

With the development of artificial intelligence, the interdisciplinary field of legal artificial intelligence (legal AI) has received increasing attention in recent years [3, 4, 5, 10, 32, 35, 122]. Legal AI aims to empower legal tasks with AI techniques and help legal professionals deal with repetitive and cumbersome mental work, thereby improving their work efficiency. Besides, legal AI can also provide convenient legal services to people unfamiliar with legal knowledge. Notably, as most legal data are presented in textual form, many legal AI methods focus on applying natural language processing (NLP) techniques, i.e., legal NLP, which is the focus of this chapter.

The core of legal NLP tasks lies in automatic case analysis and case understanding, which requires the models to understand the legal facts and the corresponding knowledge. Due to the knowledge-intensive feature of case analysis, straightforwardly applying general methods is suboptimal. Enhancing legal NLP systems with legal knowledge is crucial for effective case analysis. Figure 11.1 presents a real-world case document consisting of several parts, including the case fact description, the court’s views, and the judgment results. In this case, the judges are required to apply the corresponding law to the specific circumstances of the defendant’s case. After the judge determines the applicable law based on the attributes of the “domain name” and the purpose of the defendant’s behavior, the judge then makes the final decision on the crime and the prison term. This example shows that the flexible application of legal knowledge is crucial in case analysis.

Unlike the widely used relational triple knowledge in the open domain, the structure of legal knowledge is complex and diverse. In daily work and communication, legal knowledge is mainly presented in textual form. Take the two most representative legal systems as examples. In civil law systems, legal knowledge is mainly contained in laws and regulations, and judges need to analyze and decide cases according to the principles in the laws and regulations. In common law systems, legal knowledge is mainly contained in opinions and decisions in previous cases, and judges need to summarize the principles from the past decisions of relevant courts to make judgments for current cases. The textual laws and case documents together form essential legal knowledge sources. Furthermore, many researchers formalize textual legal knowledge into various kinds of structured knowledge, such as legal elements, legal events, and legal logical rules, to improve the efficiency and fairness of real-world legal case analysis. Structured legal knowledge can decompose a case analysis task into several simplified subtasks and thus reduce the analysis complexity. Both textual and structured knowledge are essential and beneficial for legal NLP systems.

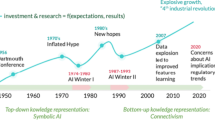

The structural complexity of legal knowledge poses challenges to legal NLP models. Early legal NLP systems mainly utilize legal knowledge under a paradigm that mixes symbolism and rationalism [83, 95]. These works usually suffer from poor transferability and can only utilize a single type of knowledge. Inspired by connectionist and empiricist approaches, many efforts have been devoted to designing neural models that integrate knowledge for legal tasks [15, 31, 41, 59, 66, 105, 110, 120]. We refer to these methods as legal knowledge representation learning, which attempts to encode legal knowledge with different structures into a unified distributed representation space. Legal representation learning can help transform human-friendly textual and structured knowledge into machine-friendly model knowledge (modeledge) and has gradually become a common paradigm for legal NLP.

In this chapter, we summarize the state of the art of legal NLP from the perspective of the definition, acquisition, and application of legal knowledge. Notably, the Supreme People’s Court of the People’s Republic of China has published more than 100 million case documents, greatly contributing to the development of legal AI. According to the statistics, China has the highest number of research papers on legal AI in recent years [80]. Therefore, in this chapter, the examples and models mainly come from legal AI research for Chinese cases.

In the following sections, we first present the typical tasks and real-world applications in legal NLP in Sect. 11.2, where all these tasks are knowledge-intensive and require multiple types of knowledge for reasoning. Then, we introduce several widely used types of legal knowledge, including textual and structured legal knowledge, in Sect. 11.3. Moreover, in Sect. 11.4, we focus on knowledge-guided legal NLP methods, which attempt to learn machine-friendly model knowledge from human-friendly legal knowledge. Based on the discussion in Chap. 9, we divide the existing knowledge-guided methods into four groups according to which model components are fused with knowledge. To promote future research, we discuss some directions in Sect. 11.5 and potential ethical risks of existing legal NLP methods in Sect. 11.6.

11.2 Typical Tasks and Real-World Applications

Recently, many legal tasks have been formally defined from the computational perspective to promote the application of AI techniques in the legal domain. To facilitate the introduction of subsequent sections, we will briefly describe the definition and challenges of several typical legal tasks, including legal judgment prediction, legal information retrieval, and legal question answering. Though many tasks have been intensively studied in recent years, not all of them have been widely used in real-world systems due to unsatisfactory performance and ethical considerations. In this section, we also briefly introduce some real-world applications of existing legal NLP methods.

Legal Judgment Prediction (LJP)

LJP aims to predict the judgment results given the fact description and claims. LJP is one of the most practical tasks in legal NLP. Take widely studied Chinese cases as an example; the cases can be classified into three categories: administrative cases, civil cases, and criminal cases. The claims in administrative cases and civil cases are usually diverse, which introduces challenges for task formalization and evaluation. For example, in divorce dispute cases, the claims often include the distribution of property, child custody issues, etc. In contrast, the claims in criminal cases are usually homogeneous and request that courts impose a certain punishment on the defendants, such as a fine, a prison term, or the death penalty. The homogeneity of claims in criminal cases makes LJP easier to formalize and evaluate. Therefore, existing LJP research mainly focuses on criminal cases, and only limited research has been conducted on civil cases and administrative cases [33, 64]. In this subsection, we will mainly introduce the LJP task for criminal cases. As shown in Fig. 11.2, given the textual fact description, the model is required to predict the judgment results, including the related law articles, charges, and the prison term, in turn.

Fig. 11.2 An example of legal judgment prediction. Given the fact description, the model is required to predict the judgment results, including the relevant law articles, the charges, and the prison term. The case document is translated from the cases published by the Supreme People’s Court of the People’s Republic of China

With the rapid progress of end-to-end distributed representation learning, judgment prediction tasks are formalized as text classification or regression tasks. LJP mainly faces the following challenges: (1) Long-tail problem. The number of law articles and charges is large, and the number of cases per category is imbalanced, so existing data-hungry methods perform poorly on low-frequency categories. (2) Interpretability. Real-world applications are required to provide not only accurate predictions but also meaningful explanations for the results.
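To make the classification and regression framing concrete, the following minimal sketch shows how the three prediction targets of a criminal case might be encoded. It is an illustration under our own assumptions, not the format of any dataset cited here: the class `JudgmentExample`, the helper `multi_hot`, the label vocabularies, and the example case are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JudgmentExample:
    """One criminal case: input fact text plus the three prediction targets."""
    fact: str
    articles: List[str]   # relevant law articles (multi-label classification)
    charges: List[str]    # charges (multi-label classification)
    term_months: float    # prison term, treated here as a regression target


def multi_hot(labels: List[str], vocab: List[str]) -> List[int]:
    """Encode a label set as a 0/1 vector over a fixed label vocabulary."""
    present = set(labels)
    return [1 if name in present else 0 for name in vocab]


# Hypothetical label vocabularies; real ones contain far more entries,
# which is where the long-tail problem comes from.
ARTICLE_VOCAB = ["article_a", "article_b", "article_c"]
CHARGE_VOCAB = ["theft", "robbery", "drug_trafficking"]

# An invented case used only for illustration.
example = JudgmentExample(
    fact="The defendant secretly took a mobile phone from the victim's bag.",
    articles=["article_a"],
    charges=["theft"],
    term_months=6.0,
)

article_target = multi_hot(example.articles, ARTICLE_VOCAB)  # classification target
charge_target = multi_hot(example.charges, CHARGE_VOCAB)     # classification target
term_target = example.term_months                            # regression target
```

A model would then map `example.fact` to these three targets. Some works instead discretize the prison term into intervals and treat it as classification as well; the sketch above does not take a position on that choice.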

LJP has been studied since the 1950s and has been of interest to researchers from various countries and regions, including China [66, 120], the United States [47, 52], Europe [13], and Korea [44]. Early works explore predicting the court decision with mathematical and statistical approaches from hand-crafted features [49, 52, 74]. Recent years have witnessed the progress of neural judgment prediction models [13, 18, 78, 122]. To alleviate the long-tail problem and improve the interpretability of the LJP models, structured legal knowledge is often utilized to guide the model learning [41, 120, 121], which we will discuss in the following sections. LJP can provide potential judgment suggestions for judges and thus reduce their work stress. Automatic LJP models can also offer basic consulting advice to the public. However, due to poor interpretability and unsatisfactory performance, LJP models usually face potential ethical risks and cannot be directly applied to real-world legal systems. The details of ethical issues are discussed in Sect. 11.6.

Legal Information Retrieval (Legal IR)

Legal IR aims to retrieve similar cases, laws, regulations, and other information to support legal case analysis. Legal IR is essential for both civil and common law systems, where judges first need to retrieve relevant knowledge from a large volume of laws and cases and then make decisions based on that knowledge. Manual retrieval from large-scale knowledge sources is very time-consuming and labor-intensive. Therefore, automatic legal IR based on factual descriptions is an essential task. As shown in Fig. 11.3, given a query case and a candidate set with several cases or law articles, legal IR models are required to calculate relevance scores between the query and the candidates and then rank the candidates according to these scores to produce the final retrieval outputs.
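The score-then-rank procedure described above can be sketched with a purely lexical baseline. The snippet below is a toy illustration under our own assumptions: it uses whitespace tokenization and TF-IDF cosine similarity as the relevance score, and the function names and example texts are hypothetical. Neural legal IR models replace these sparse vectors with learned representations but keep the same ranking step.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokenization (a deliberate simplification)."""
    return text.lower().split()


def tfidf_vectors(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Build sparse TF-IDF vectors with smoothed IDF over the given documents."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    return [{t: tf * idf[t] for t, tf in Counter(doc).items()} for doc in docs]


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def rank_candidates(query: str, candidates: List[str]) -> List[Tuple[int, float]]:
    """Return (candidate index, relevance score) pairs, highest score first."""
    docs = [tokenize(query)] + [tokenize(c) for c in candidates]
    vecs = tfidf_vectors(docs)
    scores = [(i, cosine(vecs[0], v)) for i, v in enumerate(vecs[1:])]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Invented query and candidates, used only for illustration.
query = "defendant stole a phone from a shop"
candidates = [
    "defendant sold drugs to a buyer",
    "defendant stole a laptop from a shop at night",
    "contract dispute over unpaid rent",
]
ranking = rank_candidates(query, candidates)
```

Here the second candidate ranks first because it shares the most terms with the query, while the third shares none and receives a score of zero. A score of this kind only captures lexical overlap, so two cases that describe the same behavior in different words would be scored as dissimilar.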

Legal IR faces the following challenges: (1) Long text matching. Because they involve complex facts, case documents usually contain thousands of tokens. The models are supposed to locate the key information in the long text and generate expressive case representations in the semantic space [89, 105]. (2) Diverse definitions of similarity. In open-domain IR, similarity mainly refers to topical similarity. Legal IR, however, aims to find supporting evidence for case analysis, and the definition of similarity varies with the requirements: cases may be similar in terms of related laws, occurring events, or focuses of dispute [90, 91]. For example, the cases shown in Fig. 11.3 are similar in terms of occurring events, but the related laws of the two cases are different, as the value of the stolen property in the query case is much higher than that of the candidate cases.

Conventional statistical legal IR relies heavily on laborious hand-crafted rules and expert knowledge [4], for example through legal issue decomposition [115] or ontological framework enhancement [87]. Statistical methods mainly focus on lexical similarity and suffer from the token mismatch problem. Recent neural methods have proven effective in capturing semantic similarity between cases [68, 79]. According to the data structures used, we can divide neural legal IR models into text-based and network-based methods. Text-based models compute the relevance scores based on the textual content of cases [56, 57, 67, 113]. Network-based models utilize the citation network of cases to learn representations and then recommend relevant cases and laws for queries [8, 42, 50, 109]. These works achieve good performance and make legal IR a widely used technique for various real-world applications.

Legal Question Answering (Legal QA)

Legal QA aims to answer questions in the legal domain automatically. Figure 11.4 shows an example of legal QA, which needs to find the relevant legal knowledge given the question and then perform reasoning to finally get the answer to the question. An important task of lawyers and legal professionals is to provide legal advice, i.e., answering questions from people unfamiliar with legal knowledge. As mentioned before, the scarcity of legal professionals and the high cost of legal services prevent most low-income people from receiving timely and effective legal assistance. In such a situation, legal QA can be an effective way to achieve convenient and inexpensive legal consultation services.

Fig. 11.4 An example of legal question answering. (The figure is re-drawn according to Fig. 1 in [123])

Legal QA involves complex reasoning steps. As shown in Fig. 11.4, the model needs to perform multiple steps of reasoning based on the retrieved legal knowledge. According to the observation and statistics from a real-world legal question dataset [123], there are five challenging reasoning types for legal QA. (1) Lexical matching. It is a basic reasoning type for legal QA, which requires locating the relevant information and answers based on lexical matching. (2) Concept understanding. Legal questions usually involve abstract legal concepts. As shown in Fig. 11.4, after finding the relevant laws for facts, we can find that Alice commits two crimes with only one behavior. Then the models are supposed to associate the fact with the abstract concept of “Motivational concurrence” for the final decision. (3) Numerical analysis. Case analysis sometimes requires performing numerical calculations. For example, the question in Fig. 11.4 requires comparing two prison terms to select the more serious charges. (4) Multi-paragraph reading. To answer the questions, the models must read and synthesize information from multiple paragraphs. (5) Multi-hop reasoning. It means that we need to conduct multiple steps of logical reasoning. These reasoning requirements make legal QA a challenging task.

Some researchers construct large-scale legal QA datasets and evaluate existing open-domain QA methods on them [29, 123]. They find that existing methods are suboptimal for legal QA, as legal QA usually involves complicated fact and knowledge reasoning. Recently, owing to powerful large-scale pre-trained models (PTMs), many researchers have begun to explore QA with complex reasoning using chain-of-thought prompting [102, 103] and behavior learning [75, 77]. Besides, to build knowledge-intensive models, some researchers enhance PTMs with knowledge graphs [98, 117] and knowledge retrieval [38, 54]. We argue that powerful PTMs also bring great potential for improving the performance of legal QA systems.

Real-World Applications

In the previous paragraphs, we introduced the legal AI tasks that have received much attention in academic research. Due to unsatisfactory model performance, not all of these tasks have been applied in real-world systems. To provide an overview of the current situation of legal AI applications, we focus in this subsection on legal technology systems that are widely used in real-world scenarios.

With the development of the Internet, human activities and interactions have become more frequent. Meanwhile, people have become more aware of their rights. This has led to an increasing demand for legal services in recent years. According to legal industry reports, annual revenues for legal services have exceeded 150 billion yuan in China and 300 billion dollars in the United States [70]. The huge market size has given rise to many real-world legal application systems designed to provide convenient legal services. These applications can be divided into two stages: legal information applications and legal intelligent applications.

Legal Information Applications

Legal information applications aim to electronically manage and coordinate information and personnel in complex legal services. For example, in the United States, the Federal Judicial Center launched the COURTRAN project for electronic court records in 1975, and the subsequent PACER system has provided more convenient tools for electronic judicial litigation [71]. In China, the Supreme People’s Court reported that China has successfully built legal information systems, in which the digitization of case documents and online filing have been realized [19]. Among commercial companies, software for case file management and websites for online legal consulting services have also received great attention [45]. In summary, legal information applications can help store and transfer information efficiently and reduce the communication cost between legal practitioners and the public.

Legal Intelligent Applications

The development of legal informatization has provided basic data support and applicable scenario support for legal intelligent applications. Different from legal information applications, legal intelligent applications focus on the understanding, reasoning, and prediction of legal data to help achieve efficient knowledge acquisition and data analysis [112]. For example, to help judges and lawyers quickly find similar past cases, case retrieval and recommendation technology is now widely used, and systems providing case retrieval services have appeared in various countries around the world, such as WestLaw and LexisNexis in the United States and Faxin in China. Besides, automatic contract review, which aims to check the legality of contract terms, is gradually becoming a focus of legal commercial applications. Automatic contract review can provide risk warnings and assessments for public business activities, thus reducing the occurrence of contract disputes. Many startups have been established for this application, such as PowerLaw and LegalSifter.

It is worth mentioning that though many legal AI tasks have been widely studied in academic research, the application of these tasks in real-world systems is still limited. There are two main reasons. First, the performance of existing models is not satisfactory, as legal case analysis has a high demand for knowledge understanding and complex reasoning. Second, legal AI tasks, such as LJP, involve the basic rights and interests of citizens, but the potential ethical risks caused by the lack of interpretability of legal models are not well studied yet. Therefore, some legal AI tasks remain at the research stage and have not yet been deployed. Researchers and developers around the world still need to work together to develop safe, reliable, and accurate legal AI applications.

11.3 Legal Knowledge Representation and Acquisition

Legal tasks rely heavily on expert knowledge and require the model to associate relevant legal knowledge with its understanding of the facts in order to conduct complex analysis. In this section, we will introduce two important types of legal knowledge with different forms: textual and structured knowledge. Legal knowledge is naturally presented in textual form for daily work and communication. To promote work efficiency, some researchers formalize textual knowledge into structured knowledge. Both types of knowledge play a crucial role in case analysis.

11.3.1 Legal Textual Knowledge

The majority of resources in the legal domain are presented in text forms, such as laws and regulations, legal cases, etc. These data can provide a rich reference basis for legal case analysis and enable models to effectively mine legal judgment patterns from them. In this subsection, we will introduce in detail the widely used legal textual knowledge.

Laws and Regulations

Laws and regulations are a set of rules created by governmental institutions to regulate human and institutional behaviors. They are the basis of real-world legal systems and are the origin of other legal knowledge. Especially in the civil law system, all cases should be judged based on the related law articles, which provide comprehensive and valuable knowledge for case analysis. Notably, laws and regulations usually involve many abstract concepts, which are challenging for models to understand.

Legal Cases

Legal cases not only provide large numbers of training instances for models but also serve as a helpful knowledge base for case analysis. Different from laws and regulations, which contain many abstract concepts, legal cases contain records of real-world facts and court views. Especially in common law systems, judges are required to make a judgment based on the decisions of relevant past cases and to synthesize the rules of past cases as applicable to the current facts. Even in civil law systems, past cases can provide a valuable reference for judges in some countries. Therefore, legal cases are an important supplement to the knowledge of laws and regulations.

Figure 11.5 presents an example of the fact description in a case and the corresponding law article. From the example, we can observe that the case document records the concrete real-world scenario, while the law article gives an abstract definition of the crime of position encroachment. The law articles are the basis for the judgment of legal cases, and the legal cases are the concrete manifestation of the law in the real world. Both laws and cases are important legal knowledge sources, and the legal AI models are required to associate the abstract concepts in laws and specific scenarios in facts for effective case analysis during knowledge applications.

Legal Textual Knowledge Representation Learning

Legal textual knowledge is a very important knowledge source for legal AI, and learning informative representations for laws and cases lies at the core of knowledge-intensive legal AI tasks. In this subsection, we will introduce how to represent textual knowledge for downstream tasks. We refer to laws and cases collectively as legal documents. Similar to the development of NLP in other domains, early research mainly represents legal documents with symbolic representations [2, 47, 72]. Inspired by the progress of distributed representation learning [48, 73, 81, 82], pre-trained embeddings and neural networks have been widely applied to legal case analysis [41, 66, 120, 122] in recent years.

Nowadays, large-scale PTMs have been proven effective in capturing knowledge from the unlabeled corpus. In legal NLP, OpenCLaP [124] and Legal-BERT [15] are the earliest PTMs, which show that domain-specific pre-training can lead to performance improvement [36, 118]. Then Lawformer [105] is proposed to reduce the computational complexity of PTMs for long legal documents with a sparse attention mechanism. In addition, there are strict rules and regulations regarding the writing of legal documents. As a result, legal documents are written with extra attention to protecting privacy and avoiding offensive content. Such high-quality text data can also help mitigate the ethical risks of open-domain PTMs. Henderson et al. [39] show that models pre-trained on legal documents can perform well in learning contextual privacy rules and toxicity norms. Though these works can achieve promising results, the applied approaches ignore the specific characteristics of legal documents. For example, cases usually involve multiple semantic labels, such as the causes of action and the relevant laws. Designing legal-specific models that can effectively capture the semantic information of legal documents is still a challenging task.

11.3.2 Legal Structured Knowledge

In recent years, many researchers have attempted to convert legal textual knowledge into structured knowledge. On the one hand, legal textual knowledge has its intrinsic structures. For example, laws and regulations can usually be translated into the structure of “if...then...,” which can be further converted into logical rules; the facts of a legal case usually consist of several key events and can thus be represented as a structured event timeline. On the other hand, structured knowledge is conducive to knowledge understanding and retention. Therefore, various types of legal structured knowledge have been widely used in legal AI tasks. In this subsection, we will introduce the definition and acquisition of several types of legal structured knowledge.

Legal Relation Knowledge

Relational factual knowledge organizes the knowledge in a triplet format and is widely used in knowledge graphs. Similar to relational knowledge in the open domain, legal triples can emphasize the important information in the legal domain. As shown in Fig. 11.6, the triple (Alice, sell_drug_to, Bob) can be regarded as the summarization of the given case, which will help models to capture the key information and benefit the downstream tasks. The legal relation extraction methods are similar to methods in the open domain. Please refer to Chap. 9 for details about relation extraction methods.
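As a small illustration of the triplet format, the sketch below stores the triple from the example above in a minimal in-memory index. The names `Triple` and `TripleStore` are our own and do not correspond to any particular knowledge graph library.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Triple(NamedTuple):
    """A relational fact in (head, relation, tail) form."""
    head: str
    relation: str
    tail: str


class TripleStore:
    """A tiny in-memory index of triples, keyed by head entity."""

    def __init__(self) -> None:
        self._by_head: Dict[str, List[Triple]] = defaultdict(list)

    def add(self, head: str, relation: str, tail: str) -> None:
        self._by_head[head].append(Triple(head, relation, tail))

    def about(self, head: str) -> List[Triple]:
        """Return every stored triple whose head is the given entity."""
        return list(self._by_head.get(head, []))


store = TripleStore()
store.add("Alice", "sell_drug_to", "Bob")
facts_about_alice = store.about("Alice")
```

Looking up `"Alice"` returns the single stored triple, which is the kind of compact case summary a downstream model could consume alongside the raw text.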

Legal Event Knowledge

Recognizing the events and the corresponding causal relations between these events is the basis of legal case analysis. Following the definition of events in the open domain [27, 99], legal events refer to crucial information from cases about what happened and what is involved. Figure 11.7 presents a legal case with the annotated event information and the corresponding judgment results. An event consists of one event trigger and several event arguments, such as time, place, and involved participants. Here, crashed into triggers the Bodily_harm event, in which Alice, Bob, and Green Avenue are the event actor, patient, and place, respectively. Enhancing case representation with legal event information can benefit various downstream case analysis tasks, such as LJP and legal IR. In this example, Alice causes a traffic accident, and the following Desertion and Escaping events lead to the Death event of Bob. Based on these events, we can easily find the relevant law articles and give the final judgment results.

Fig. 11.7 An example of legal events. We can summarize the fact description as the legal event timeline, which can help in making judgments. (The figure is re-drawn according to Fig. 1 in [110])

Legal events can be regarded as a summarization of legal cases and help models perform accurate case analysis. Therefore, many efforts have been devoted to legal event extraction (LEE). Early works mainly focus on utilizing hand-crafted symbolic features to extract legal events [6, 51]. Inspired by the success of neural networks, Li et al. [55] formalize LEE as a sequence labeling task and employ Bi-LSTM with CRF as the output layer to compute role labels for each token. Furthermore, Shen et al. [92] introduce a hierarchical event schema for LEE, which can capture the connections between different arguments and events. However, these works only attempt to extract events of limited event types and cannot be widely adopted in real-world applications. To this end, a large-scale legal event detection dataset, LEVEN [110], is proposed. LEVEN contains 8,116 legal documents and 150,977 annotated event mentions in 108 event types, which can serve as a reliable evaluation benchmark for LEE. It has been verified that many open-domain event detection methods can achieve good results on LEVEN. Nevertheless, we look forward to future works that achieve comprehensive legal event extraction with high coverage of event types and event arguments.
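To illustrate what a sequence labeling formulation of LEE produces, the following sketch decodes per-token role labels into a trigger and its arguments. It is a toy example under our own assumptions: the BIO tagging scheme, the role names, the example sentence, and the hard-coded event type are hypothetical and are not taken from the cited works, and the tagger that would predict the labels is omitted.

```python
from typing import Dict, List, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO tags into (role, text) spans.

    A span starts at a B- tag and is extended by I- tags of the same role.
    An O tag, or an I- tag that does not continue the open span, closes
    the open span and the token itself is skipped.
    """
    spans: List[Tuple[str, str]] = []
    role, words = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if role is not None:
                spans.append((role, " ".join(words)))
            role, words = tag[2:], [token]
        elif tag.startswith("I-") and role == tag[2:]:
            words.append(token)
        else:
            if role is not None:
                spans.append((role, " ".join(words)))
            role, words = None, []
    if role is not None:
        spans.append((role, " ".join(words)))
    return spans


# Invented sentence and gold-style labels paraphrasing the example above.
tokens = ["Alice", "crashed", "into", "Bob", "on", "Green", "Avenue"]
tags = ["B-Actor", "B-Trigger", "I-Trigger", "B-Patient", "O", "B-Place", "I-Place"]

spans = decode_bio(tokens, tags)
event: Dict[str, object] = {
    "type": "Bodily_harm",  # assumed to come from a separate event-type classifier
    "trigger": next(text for r, text in spans if r == "Trigger"),
    "arguments": {r: text for r, text in spans if r != "Trigger"},
}
```

The resulting `event` records the trigger "crashed into" together with its actor, patient, and place. A sequence of such records, ordered by occurrence, gives the event timeline discussed above.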

Legal Element Knowledge

Legal elements, also known as legal attributes, refer to properties of cases based on which we can directly make judgment decisions. Figure 11.8 presents an example of attribute-based charge prediction. Here Theft and Robbery are two easily confused charges, whose definitions differ only in a specific action. From the legal attributes, we can observe that, because Bob committed violence, he should be convicted of Robbery rather than Theft. Whether violence is involved is the key element that distinguishes these two charges. The intellectual origin of legal attributes is the elemental trial [22, 84, 96], an important legal principle that requires judges to conduct a trial solely based on crucial legal elements. The elemental trial can help judges clarify trial procedures and avoid ethical issues.

Fig. 11.8 An example of legal attributes. (The figure is re-drawn according to Fig. 1 in [41])

Legal element extraction is usually formalized as a multi-label text classification task for legal cases, where each element is a binary value with yes/no as the answer. Zhong et al. [122] evaluate typical text representation models for element classification and find that existing methods can achieve good results on legal element classification. Thus, element extraction is usually treated as an intermediate auxiliary task to promote the performance of downstream tasks, such as confusing charges discrimination [41] and interpretable judgment prediction [121]. Though legal elements are important for case analysis, existing methods only perform extraction on limited types of elements and have low coverage of charges. There is still a lack of benchmarks for comprehensive training and evaluation of element extraction.
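The role of elements as an intermediate step can be sketched as follows. The snippet is a deliberately simplified illustration under our own assumptions: the element names are hypothetical, the yes/no values would in practice be predicted by an element classifier, and the hand-written decision rule stands in for the learned downstream model used in the cited works.

```python
from typing import Dict

# Hypothetical element inventory; each element is a yes/no question about the case.
ELEMENTS = ["takes_property", "uses_violence"]


def discriminate_theft_robbery(elements: Dict[str, bool]) -> str:
    """Separate two easily confused charges using the violence element.

    Missing elements are treated as "no". This toy rule covers only the
    distinction discussed in the text, not the full legal definitions.
    """
    if not elements.get("takes_property", False):
        return "neither"
    return "robbery" if elements.get("uses_violence", False) else "theft"


# Element values as an element classifier might output them for two cases.
case_with_violence = {"takes_property": True, "uses_violence": True}
case_without_violence = {"takes_property": True, "uses_violence": False}

charge_a = discriminate_theft_robbery(case_with_violence)
charge_b = discriminate_theft_robbery(case_without_violence)
```

Because the final decision is expressed in terms of named elements, the prediction can be explained by pointing at the element that made the difference, which is the interpretability benefit described above.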

Besides, legal element extraction is also a popular topic for contract analysis, where each element is a basic component of contracts, such as the parties and the beginning and ending dates [12, 14, 40, 101, 116]. This task can improve the speed of contract reading and facilitate subsequent contract review.

Legal Logical Knowledge

Laws and regulations are by nature logical statements, and legal case analysis is a process of determining whether defendants violate the logical propositions contained in the law. Therefore, many researchers explore representing legal knowledge in a logical form and enhancing case analysis with logical reasoning.

Legal logical knowledge can be divided into two categories: (1) Coarse-grained heuristic logic. Inspired by various legal principles, coarse-grained heuristic logic mainly involves task-level or charge-level logical rules. For example, task-level logical dependencies [111, 120] require the models to perform multi-task prediction following a specific human-inspired logical order. The elemental trial process [121] designs charge-level key elements for each charge and requires the models to analyze the cases by answering element-oriented questions. (2) Fine-grained first-order logic. As mentioned before, laws and regulations are a set of rules expressed in natural language. To better integrate these rules, some researchers attempt to represent laws as a set of fine-grained first-order logic rules [33]. For example, the law article in Fig. 11.5 can be represented as a logic rule:

$$X_{\text{staff}} \land X_{\text{property}} \Rightarrow Y_{\text{crime}}$$

where $X_{\text{staff}}$ indicates whether the defendant is a staff member of the unit, $X_{\text{property}}$ indicates whether the defendant illegally takes the unit's property, and $Y_{\text{crime}}$ indicates whether the defendant should be convicted of the crime of position encroachment. With the first-order logic rule, the judgment for the crime of position encroachment can be decomposed into two subtasks (i.e., $X_{\text{staff}}$ and $X_{\text{property}}$).
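The decomposition into two subtasks can be sketched in code. The sketch reads the rule as a conjunction of the two conditions, which is an assumption of this example. The soft variant multiplies the two subtask probabilities (a product t-norm), a common relaxation chosen here for illustration and not necessarily the one used in [33].

```python
def position_encroachment(x_staff: bool, x_property: bool) -> bool:
    """Hard rule: the crime holds only if both conditions hold."""
    return x_staff and x_property


def soft_position_encroachment(p_staff: float, p_property: float) -> float:
    """Soft rule: product relaxation of the conjunction over probabilities."""
    return p_staff * p_property


# The two subtasks are answered separately, then combined by the rule.
hard_result = position_encroachment(x_staff=True, x_property=True)
soft_result = soft_position_encroachment(p_staff=0.5, p_property=0.5)
```

Because each condition is evaluated on its own, the intermediate answers can be inspected when the final judgment is questioned.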

Legal logical knowledge can help break down the case analysis into several logical substeps. Thus it can help improve the interpretability and reasoning ability of the models.

11.3.3 Discussion

Legal textual and structured knowledge play a significant role in legal applications, and different types of knowledge possess different characteristics and play different roles. In this subsection, we will discuss the advantages of legal textual and structured knowledge.

Textual Knowledge

Textual knowledge possesses the following characteristics: (1) High coverage. Legal textual knowledge, including both laws and cases, is the origin of other forms of legal knowledge. Almost all scenarios can find their counterparts in the textual knowledge. (2) Updating over time. With the continuous refinement of laws and the increasing number of cases, legal textual knowledge is growing over time. Take the statistics from China as an example; there are more than a thousand national laws and more than 100 million legal cases nowadays, and this legal knowledge is updating and growing rapidly every year. Therefore, the two valuable characteristics make textual knowledge indispensable for existing legal NLP models. However, the textual knowledge is diverse in expression, which also makes it hard to retrieve and integrate legal textual knowledge for downstream tasks.

Structured Knowledge

Structured knowledge possesses the following characteristics: (1) Concise and condensed. Structured knowledge often contains vital information that allows for a quick grasp of the case's specifics. Hence structured knowledge can help downstream models capture key information from complicated cases. (2) Interpretable. The symbolic case representation derived from structured knowledge can provide intermediate interpretations for the prediction results. For example, in Fig. 11.8, the element results can provide explanations for distinguishing confusing charges. However, structured knowledge requires labor-intensive and time-consuming manual annotation. Therefore, automatic structured knowledge acquisition needs further exploration.

Towards Model Knowledge

In the era of deep learning, there is also an important type of knowledge, named model knowledge or modeledge. Modeledge refers to knowledge implicitly contained in models. Different from textual and structured knowledge which are explicit human-friendly knowledge, implicit modeledge is machine-friendly and can be easily utilized by AI systems. How to transform textual and structured legal knowledge into model knowledge is a popular research topic and will be discussed in the following section.

11.4 Knowledge-Guided Legal NLP

Legal knowledge, including textual and structured knowledge, is essential for case analysis. However, both types of knowledge are presented in a human-friendly form, and it is not straightforward to integrate legal knowledge into legal NLP models. To this end, many efforts have been devoted to knowledge-guided legal NLP methods, which aim to embed explicit textual and structured knowledge into implicit model knowledge. Following the knowledgeable framework introduced in Chap. 9, in this section, we introduce knowledge-guided legal NLP methods from the perspective of which component is enhanced with legal knowledge.

11.4.1 Input Augmentation

Input augmentation methods integrate knowledge into the inputs of the models. Regarding the integration methods, the approaches can be mainly divided into two categories: text concatenation and embedding augmentation. Figure 11.9 illustrates the two categories of input augmentation methods.

Text Concatenation

Text concatenation concatenates the knowledge text with the original text and feeds the result directly into the model without modifying the architecture. For example, Zhong et al. [123] concatenate relevant knowledge for legal question answering. Given a question, the authors retrieve relevant regulations from the knowledge base and concatenate the retrieved knowledge with the question to predict the final answer. Integrating knowledge via direct text concatenation can successfully inject knowledge into models but may also introduce noise if the textual knowledge is irrelevant to the given inputs.
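A minimal sketch of the retrieve-then-concatenate pattern is given below. The word-overlap retriever, the `[SEP]` separator, and the two knowledge base entries are all assumptions made for this example; they stand in for whatever retriever and input format a real system uses and do not reproduce the method of [123].

```python
from typing import List


def overlap_score(query: str, passage: str) -> int:
    """Count shared lowercase whitespace tokens (a stand-in for a real retriever)."""
    return len(set(query.lower().split()) & set(passage.lower().split()))


def build_input(question: str, knowledge_base: List[str],
                top_k: int = 1, sep: str = " [SEP] ") -> str:
    """Retrieve the top_k passages and append them to the question."""
    ranked = sorted(knowledge_base,
                    key=lambda passage: overlap_score(question, passage),
                    reverse=True)
    return sep.join([question] + ranked[:top_k])


# Hypothetical knowledge base entries, written for illustration only.
knowledge_base = [
    "Whoever takes public or private property by violence commits robbery.",
    "A contract is formed when the acceptance becomes effective.",
]
question = "Is taking property by violence robbery?"
model_input = build_input(question, knowledge_base)
```

Raising `top_k` adds more context but also raises the chance of including irrelevant passages, which is the noise issue noted above.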

Embedding Augmentation

Embedding augmentation integrates knowledge by fusing knowledge embeddings with the original text embeddings. For example, Yao et al. [110] enhance PTMs with legal event knowledge by fusing event embeddings in the input layer. They first extract legal events from the fact description and then add event type embeddings to the original token embeddings to form the inputs of PTMs for further applications.
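The input-layer fusion can be illustrated with a toy example in which each token embedding is summed element-wise with the embedding of its event type. The two-dimensional vectors, the vocabulary, and the "None" type for tokens outside any event are hypothetical; in a real PTM both tables are learned parameters.

```python
from typing import Dict, List

Vector = List[float]


def add_vectors(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two vectors of equal length."""
    return [x + y for x, y in zip(a, b)]


def augment_embeddings(tokens: List[str], event_types: List[str],
                       token_emb: Dict[str, Vector],
                       event_emb: Dict[str, Vector]) -> List[Vector]:
    """Add the event type embedding to each token embedding."""
    return [add_vectors(token_emb[t], event_emb[e])
            for t, e in zip(tokens, event_types)]


# Toy embedding tables (assumed values, 2 dimensions for readability).
token_emb = {"Bob": [0.5, 0.0], "stole": [0.5, -0.25], "phone": [0.0, 1.0]}
event_emb = {"None": [0.0, 0.0], "Theft": [1.0, 0.5]}

tokens = ["Bob", "stole", "phone"]
event_types = ["None", "Theft", "None"]  # only the trigger token carries an event type
inputs = augment_embeddings(tokens, event_types, token_emb, event_emb)
```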

11.4.2 Architecture Reformulation

Architecture reformulation refers to methods that design model architectures according to heuristic rules in the legal domain. From the perspective of the inspiration source, the works for architecture reformulation can be divided into two categories. One is inspired by the human thought process and the other is inspired by the knowledge structure.

Inspiration from Human Thought Process

Human judges follow recognizable reasoning patterns in legal case analysis, and models can be designed in line with this judicial logic. For example, criminal judgment consists of multiple subtasks, including relevant law prediction, charge prediction, and prison term prediction. Judges usually make decisions step by step, and the steps are closely related to each other. For example, the results of charges rely on the relevant laws, and the results of prison terms rely on the charges and relevant laws. Inspired by this observation, TopJudge [120] proposes to capture the logical dependency between different subtasks of LJP via a topological graph, where each node represents a subtask, and each edge represents the information dependency between subtasks. Figure 11.10 presents the model architecture for TopJudge, where the logical dependency is captured via RNN cells. As the prediction of prison terms relies on the results of laws and charges, the cell for prison term takes fact representation as inputs and the hidden vectors from laws and charges as cell states. Furthermore, inspired by the elemental trial principle, which requires judges to analyze cases from the perspective of legal elements, Zhong et al. [121] propose an iterative model to predict the charges by judging the key elements step by step. In this way, the thought process of the model is open and transparent, which can bring interpretability to LJP.

Model architecture for TopJudge. (The figure is re-drawn according to Fig. 2 and Fig. 3 from [120])
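The ordering idea behind a topological dependency graph can be sketched with the standard library. The sketch below only shows how subtasks are run in dependency order, with each one receiving the outputs of the subtasks it depends on; it does not reproduce the RNN cells of TopJudge. The three rule-based predictors and their labels ("law_A", "short") are hypothetical placeholders for learned components.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Each subtask maps to the set of subtasks it depends on.
dependencies = {"law": set(), "charge": {"law"}, "term": {"law", "charge"}}
order = list(TopologicalSorter(dependencies).static_order())


def predict_law(fact, upstream):
    return "law_A" if "stole" in fact else "unknown"


def predict_charge(fact, upstream):
    return "Theft" if upstream["law"] == "law_A" else "unknown"


def predict_term(fact, upstream):
    known = upstream["law"] == "law_A" and upstream["charge"] == "Theft"
    return "short" if known else "unknown"


predictors = {"law": predict_law, "charge": predict_charge, "term": predict_term}


def predict_all(fact):
    """Run every subtask after the subtasks it depends on."""
    results = {}
    for task in order:
        upstream = {dep: results[dep] for dep in dependencies[task]}
        results[task] = predictors[task](fact, upstream)
    return results


results = predict_all("Bob stole a phone")
```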

Inspiration from Knowledge Structure

There are many different types of legal knowledge, and different types of knowledge can be utilized with different modules. The attention modules mentioned in the input augmentation section can also be regarded as a specific input architecture for knowledge fusion. In addition, structured knowledge also plays a very important role in the design of network architecture. Some researchers build legal-specific output layers. For instance, as the definition of criminal charges follows a hierarchical structure, where each specific charge is the leaf node and similar charges are in the same subtree, Liu et al. [62] design a hierarchical classifier layer, where the model predicts the final charges following the path from the root node to the leaf charge node.
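A hierarchical output layer of this kind can be illustrated by a greedy walk from the root to a leaf, choosing the best-scoring child at each level. The charge tree, the category names, and the scores below are assumptions for this sketch; they do not reflect any particular criminal code or the exact classifier of [62].

```python
from typing import Dict, List

# Toy charge hierarchy: internal nodes map to their children, leaves are charges.
tree: Dict[str, List[str]] = {
    "root": ["property_crimes", "personal_rights_crimes"],
    "property_crimes": ["Theft", "Fraud"],
    "personal_rights_crimes": ["Intentional Injury", "Kidnapping"],
}


def predict_path(tree: Dict[str, List[str]], scores: Dict[str, float],
                 root: str = "root") -> List[str]:
    """Follow the highest-scoring child from the root down to a leaf."""
    path, node = [], root
    while node in tree:  # only internal nodes have an entry in the tree
        node = max(tree[node], key=lambda child: scores[child])
        path.append(node)
    return path


# Hypothetical per-node scores produced by a model for one case.
scores = {
    "property_crimes": 0.7, "personal_rights_crimes": 0.3,
    "Theft": 0.6, "Fraud": 0.4,
    "Intentional Injury": 0.9, "Kidnapping": 0.1,
}
path = predict_path(tree, scores)
```

Note that the high score of "Intentional Injury" is never considered, because its parent category loses at the first level; this is the intended effect of committing to a subtree before picking a leaf.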

11.4.3 Objective Regularization

Objective regularization methods integrate legal knowledge into the objective functions. By introducing additional expert prior into the objective functions, the model can better capture key information from the text and improve downstream task performance. There are two mainstream approaches for objective regularization: regularization on new targets and regularization on existing targets. The former aims to design new training tasks, while the latter aims to build new constraints to the existing targets.

Regularization on New Targets

Constructing additional supervision signals is a widely used strategy for legal case analysis. Xu et al. [108] improve prison term prediction by requiring the model to predict the seriousness of charges. Feng et al. [31] introduce the event extraction task for judgment prediction. Hu et al. [41] utilize the legal element knowledge via a multi-task framework. As shown in Fig. 11.11, the model is required to predict both the charges and element values. Then the model can generate an element-aware case representation and better distinguish the confusing charges.
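The multi-task objective can be sketched as a weighted sum of the main charge loss and an auxiliary element loss. The weight `alpha`, the probabilities, and the element names are assumptions for illustration; the exact losses and weighting used in [41] may differ.

```python
import math
from typing import Dict


def cross_entropy(probs: Dict[str, float], gold: str) -> float:
    """Negative log-likelihood of the gold charge."""
    return -math.log(probs[gold])


def binary_cross_entropy(p: float, y: int) -> float:
    """Loss for one yes/no element with predicted probability p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def multitask_loss(charge_probs: Dict[str, float], gold_charge: str,
                   element_probs: Dict[str, float], gold_elements: Dict[str, int],
                   alpha: float = 1.0) -> float:
    """Charge loss plus alpha times the mean element loss."""
    charge_loss = cross_entropy(charge_probs, gold_charge)
    element_loss = sum(
        binary_cross_entropy(element_probs[name], gold_elements[name])
        for name in gold_elements
    ) / len(gold_elements)
    return charge_loss + alpha * element_loss


# Hypothetical model outputs and gold labels for one case.
charge_probs = {"Theft": 0.2, "Robbery": 0.8}
element_probs = {"violence": 0.9, "takes_property": 0.95}
gold_elements = {"violence": 1, "takes_property": 1}
loss = multitask_loss(charge_probs, "Robbery", element_probs, gold_elements)
```

Setting `alpha` to zero recovers the single-task charge loss, so the weight controls how strongly the element supervision shapes the case representation.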

Regularization on Existing Targets

Legal case analysis usually consists of multiple subtasks, and there is a logical association between different subtasks. To this end, many researchers attempt to construct extra constraints between different subtasks to improve the consistency across different tasks. For example, Feng et al. [31] add a penalty for legal event-based consistency, which requires that if an event trigger is detected, then all and only its corresponding argument types can be extracted. Chen et al. [20] add regularization for three legal judgment prediction subtasks from the perspective of causal inference. Regularization for multi-task learning can help the model produce consistent case analysis results and improve the reliability of legal AI.
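The "all and only" constraint on event arguments can be sketched as a simple violation count. This is a discrete illustration with a hypothetical event schema; a trainable penalty such as the one in [31] would be formulated over model probabilities so that it can be optimized together with the task loss.

```python
from typing import Dict, Optional, Set

# Hypothetical schema: the argument roles each event type must have.
schema: Dict[str, Set[str]] = {
    "Theft": {"Perpetrator", "Victim", "Property"},
}


def consistency_penalty(trigger_type: Optional[str], predicted_roles: Set[str],
                        schema: Dict[str, Set[str]]) -> int:
    """Count missing required roles plus roles that are not allowed."""
    allowed = schema.get(trigger_type, set()) if trigger_type else set()
    missing = allowed - predicted_roles
    extra = predicted_roles - allowed
    return len(missing) + len(extra)


consistent = consistency_penalty("Theft", {"Perpetrator", "Victim", "Property"}, schema)
inconsistent = consistency_penalty("Theft", {"Perpetrator", "Weapon"}, schema)
no_trigger = consistency_penalty(None, {"Victim"}, schema)
```

In the second call two required roles are missing and one role is not allowed, so three violations are counted; in the third call an argument is predicted although no trigger was detected.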

11.4.4 Parameter Transfer

Parameter transfer refers to methods that train models on source tasks and then transfer the parameters to the target tasks to achieve knowledge transfer. As for the source task, existing approaches can be divided into two categories: transferring from self-supervised pre-trained models or transferring from other supervised tasks.

Pre-trained Models

A typical paradigm of parameter transfer is pre-trained language models, which transfer parameters trained with self-supervised tasks to downstream applications. Early works only transfer the word embeddings to the target domain [16, 26]. Further, the pre-training-fine-tuning paradigm is widely used to transfer the knowledge learned from large-scale unsupervised data to downstream tasks. Legal PTMs have been proposed for various languages, such as Chinese [105, 124], English [15, 39], and French [28]. Furthermore, as most legal documents consist of thousands of tokens, a sparse attention-based model, Lawformer [105], is proposed for the legal domain. As shown in Fig. 11.12, instead of applying a fully connected self-attention mechanism, Lawformer utilizes the sparse attention mechanism, where the local attention requires each token to only attend its neighbor tokens, and the global attention only requires limited tokens to attend the whole sequence. Hence, the sparse attention mechanism decreases the computational complexity to linear complexity. These methods mainly adopt existing open-domain methods to the legal domain and do not design legal-specific pre-training tasks and model architectures, which is also important for future research.

The sparse attention mechanism applied in Lawformer. (The figure is re-drawn according to Fig. 2 from [105])
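The linear growth of sparse attention can be checked with a small mask-building sketch. It assumes a symmetric window of `window` neighbors on each side and treats global attention as symmetric (global tokens attend to every position and every position attends to them), following the common Longformer-style convention; the sizes are toy values and this is not Lawformer's implementation.

```python
from typing import Iterable, List


def sparse_attention_mask(n: int, window: int,
                          global_positions: Iterable[int]) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j."""
    global_set = set(global_positions)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            is_local = abs(i - j) <= window
            is_global = i in global_set or j in global_set
            mask[i][j] = is_local or is_global
    return mask


def count_pairs(mask: List[List[bool]]) -> int:
    """Number of attended (query, key) pairs."""
    return sum(sum(row) for row in mask)


# One global token at position 0 and a window of one neighbor per side.
pairs_8 = count_pairs(sparse_attention_mask(8, 1, [0]))
pairs_16 = count_pairs(sparse_attention_mask(16, 1, [0]))
pairs_32 = count_pairs(sparse_attention_mask(32, 1, [0]))
```

With these settings, doubling the sequence length adds a number of attended pairs proportional to the added tokens, whereas full self-attention would grow from 64 to 256 to 1024 pairs.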

Cross-task Transfer

In addition to transferring parameters via self-supervised pre-training, some researchers attempt to train models on supervised source tasks and then transfer the model to target tasks. For example, Shao et al. [89] conduct transfer learning from legal entailment to legal case retrieval. Gupta et al. [37] first train the model with open-domain datasets and then conduct further tuning for legal coreference resolution. Cross-task transfer requires source tasks to be similar to the target tasks so that the task knowledge can be successfully transferred across tasks.

11.5 Outlook

Although legal NLP is currently well developed and makes good progress on many tasks, there is still a long way to go for the real-world applications of legal NLP methods. In this section, we list four directions for future research.

More Data

As a family of neural networks, existing legal NLP models are data-hungry and require large amounts of high-quality labeled data. However, legal tasks often require complex reasoning about the case facts, which has a high requirement of expertise for annotators. As a result, the annotation of legal datasets is usually time-consuming and costly. For example, the annotation of CUAD, a legal contract review dataset, took dozens of law students a year and over 2 million dollars [40].

PTMs have shown their effectiveness in capturing knowledge from large-scale unlabeled data [25, 85]. Especially, the self-supervised pre-training can effectively improve the ability of few-shot learning [11, 34], which can help alleviate the data-scarce problem. In addition, with the continuous disclosure of legal documents and the accumulation of various legal data on the Internet, we can easily access publicly available legal data, which provides a substantial data basis for legal PTMs.

Some works attempt to train legal PTMs [15, 28, 39, 105]. However, the legal data used in these models are still limited, containing only legal cases, contracts, etc. Most of the pre-training tasks simply follow the tasks in the open domain, and the model size is also still limited. Therefore, we argue that it is very important to use more data and design legal-specific pre-training tasks to train larger legal PTMs with more capabilities.

More Knowledge

Legal tasks place high demands on the understanding and application of legal knowledge. As mentioned in previous sections, many knowledge-guided legal NLP approaches have achieved significant progress in recent years [41, 66, 120, 121]. However, more knowledge is still desired for legal NLP methods.

As for textual knowledge, existing applications are limited by the ability of knowledge retrieval due to the gap between abstract knowledge and concrete facts. As for structured knowledge, existing applications focus on a limited number of case types, resulting in low coverage. Therefore, improving the ability to utilize textual knowledge and increasing the coverage of structured knowledge are important issues to be addressed. Moreover, as for the combination of multiple types of knowledge, using more types and larger amounts of knowledge in legal NLP models is also a very important research direction.

More Interpretability

While existing neural network-based approaches have achieved high accuracy, the black-box characteristics of neural models pose a great ethical risk to real-world applications. For example, if gender bias is introduced in case analysis models, the uninterpretability will make it challenging to detect such bias and harm legal fairness. Moreover, the main goal of legal AI is to use technology to assist in legal tasks, which requires the models to cooperate with human experts for decision making. If legal NLP models can only give results without explanations, it will significantly increase the time cost for human experts to understand the model’s results and reduce the credibility of the models.

Thus, while improving the accuracy of legal models, we also need to pay extra attention to the interpretability of legal models. Existing efforts explore improving the interpretability via outputting the intermediate states and results [33, 121], extracting the prediction evidence [59, 113], and generating the corresponding explanations [58, 111]. These methods mainly focus on specific tasks and usually need additional annotation, and it is still challenging to design a general and efficient framework for explainable legal AI.

More Intelligence

Legal case analysis often involves complex legal reasoning, including abstract concept understanding, numerical analysis, multi-hop reasoning, and multi-passage information synthesis, which are still open problems in NLP. Therefore, complex case analysis reasoning requires models with more cognitive intelligence capabilities. In the open domain, many studies have demonstrated that large-scale PTMs can manipulate tools to complete complex tasks. For example, WebGPT learns to use search engines to answer complex questions [75], and CC-Net can manipulate computers to finish some human instructions [43]. This gives the possibility to implement legal models with more intelligence, which is desired for complex case analysis. Enabling models to manipulate legal search engines, numerical calculators, etc. to complete complex case reasoning is also a very important future research direction for legal AI.

11.6 Ethical Consideration

Existing research has shown that legal AI systems can help improve work efficiency and alleviate a considerable workload for legal practitioners. However, legal work involves the essential rights and interests of individuals, and while emphasizing efficiency, we should also pay attention to the fairness and justice of the legal system. At present, legal AI systems are still in the stage of rapid development, and the ethical risks of legal AI systems have not been fully explored. In this section, we discuss the ethical risks of legal AI systems and what principles should be followed in the application of legal AI systems.

Ethical Risks

In the application of legal AI systems, both the inevitable model bias and careless use may cause ethical risks.

Model Bias

While the neural architecture brings significant performance improvement to legal NLP tasks, its black-box characteristics make it difficult to discover and detect the potential bias of the models. Many existing studies show that models may learn bias from the training data, such as gender bias [94] and racial bias [76]. Besides, recent popular PTMs are usually trained on large-scale open-domain data, which may contain various types of bias [88, 104]. These potential model biases may result in severe unfair treatment of individuals, which is a serious ethical risk. Therefore, it is very important to detect and eliminate the bias of legal AI systems.

Some works attempt to explore fairness evaluation for legal models, especially LJP models. For example, FairLex [17] collects a fairness evaluation benchmark across five attributes (gender, age, region, language, and area) for four jurisdictions (European Council, USA, Switzerland, and China). CaLF [100] is a metric for real-world legal fairness and explores bias elimination with adversarial training. However, these works are still limited to specific tasks and attributes. Further efforts are still needed to explore general fairness evaluation across multiple tasks and attributes.

Misuse

The goal of legal AI systems is to assist legal practitioners in their work, and legal AI systems must be used with the guidance of professionals. Due to the inevitable errors of legal models, it is very important to ensure that legal AI systems are used correctly. Specifically, legal AI systems should not be used to make final decisions that may affect the rights and interests of individuals, and legal practitioners should still be responsible for the final decision. It is desired to clarify the boundaries of the applications of legal AI systems and to ensure that the legal AI systems are used correctly.

Besides, legal AI models are supposed to be well evaluated and used in the appropriate scenario. Each legal AI model is usually trained with specific datasets for a specific scenario, and using it in an inappropriate scenario can increase its error rate. For example, existing legal case retrieval models are trained with long cases as inputs, while for real-world applications, we may need to retrieve relevant cases with keywords or short sentences as inputs. Such a gap between model training and application may lead to the misuse of legal AI systems and to suboptimal performance.

Application Principles

The potential risks of legal AI may cause serious consequences, and it is very important to ensure that legal AI systems are used correctly and ethically. In this section, we discuss the application principles of legal AI systems from the perspective of purpose, methodology, and monitoring [60, 119].

People-Oriented

The ultimate goal of legal AI systems is to provide assistance and support for legal practitioners. The design of legal AI methods should be people-oriented, which means legal AI systems are designed to provide explainable references, but not the final decision, for legal practitioners, and the legal practitioners should be responsible for the final results.

Human-in-the-Loop

In real-world legal AI applications, humans and AI models should cooperate to form a human-in-the-loop paradigm. Specifically, complex legal tasks should be divided into several subtasks, and the legal AI systems carry out the subtasks suitable for automation, including information storage, information extraction, knowledge retrieval, etc., while the legal practitioners carry out the subtasks that involve important decisions. In this way, the legal AI systems can provide assistance and support for legal practitioners, and the legal practitioners can provide the necessary control and training signals for the legal AI systems. Thus, the human-in-the-loop principle can improve reliability and avoid the misuse of legal AI systems.

Transparency

As mentioned in the previous section, the black-box characteristics of legal AI systems make model biases inevitable. Therefore, it is very important to ensure the transparency of algorithms and details of legal AI systems. It means the public and legal practitioners should be able to understand the model’s reasoning process, algorithm principles, and prediction results. In this way, transparency can help improve the credibility of legal AI systems and prevent the misuse of legal AI systems.

11.7 Open Competitions and Benchmarks

The formalization and definition of legal tasks are the basis of legal AI and require the efforts of both AI researchers and legal researchers. Besides, the evaluation of legal tasks requires large-scale human-annotated data. To this end, some organizations have formalized many legal tasks and collected large-scale legal datasets for the research community. There are three popular open challenges, including COLIEE, AILA, and CAIL, which provide a large number of legal datasets for the research community.

The Competition on Legal Information Extraction/Entailment (COLIEE) has been held since 2014 and encourages competitors to perform automatic legal retrieval and entailment. The data in COLIEE is collected from Japanese and Canadian case documents.

Artificial Intelligence for Legal Assistance (AILA) has been held since 2019. AILA focused on legal retrieval in 2019 and then extended the tasks to rhetorical labeling and legal summarization in 2020 and 2021. The data of AILA is collected from cases published by the Indian Supreme Court.

The Challenge of AI in Law (CAIL) has been held since 2018. CAIL publishes datasets for various legal NLP tasks. The data of CAIL is collected from cases published by the Supreme People's Court of the People's Republic of China.

We summarize several representative large-scale datasets for legal NLP in Table 11.1. These datasets provide valuable training and evaluation resources for the development of legal AI. We hope more researchers and organizations can collect and release more large-scale legal datasets for the research community to promote the development of legal AI systems.

11.8 Summary and Further Readings

In this chapter, we first introduce three typical legal knowledge-intensive tasks and their challenges, including legal judgment prediction, legal information retrieval, and legal question answering. All three tasks can provide a handy reference for legal services. Next, we describe the textual and structured legal knowledge, which is summarized by legal experts to facilitate case analysis. Further, we introduce knowledge-guided legal NLP methods from the perspective of how to integrate legal knowledge into neural models. We also discuss some advanced topics that aim to further promote the development of legal NLP approaches.

As for the introduction to legal tasks, Cui et al. [24] give a comprehensive overview of the datasets, subtasks, and methods of legal judgment prediction. Sansone et al. [86] and Locke et al. [63] review recent progress on legal information retrieval systems.

As for the survey of legal AI, Zhong et al. [122] provide insightful discussion and experiments on how existing deep learning methods perform on legal tasks. Bommasani et al. [10] discuss the opportunities and risks of applying large-scale PTMs in the legal domain.

References

Work report of the Supreme People’s Court of the People’s Republic of China (in Chinese). 2022.

Nikolaos Aletras, Dimitrios Tsarapatsanis, Daniel Preoţiuc-Pietro, and Vasileios Lampos. Predicting judicial decisions of the European Court of Human Rights: A natural language processing perspective. PeerJ Computer Science, 2:e93, 2016.

Zhenwei An, Yuxuan Lai, and Yansong Feng. Natural language understanding for legal documents (in Chinese). Journal of Chinese Information Processing, 36(8):1–11, 2022.

Trevor Bench-Capon, Michał Araszkiewicz, Kevin Ashley, Katie Atkinson, Floris Bex, Filipe Borges, Daniele Bourcier, Paul Bourgine, Jack G Conrad, Enrico Francesconi, et al. A history of ai and law in 50 papers: 25 years of the international conference on ai and law. Artificial Intelligence and Law, 20(3):215–319, 2012.

Trevor Bench-Capon and Giovanni Sartor. A model of legal reasoning with cases incorporating theories and values. Artificial Intelligence, 150(1-2):97–143, 2003.

Anderson Bertoldi, Rove Chishman, Sandro José Rigo, and Thaís Domênica Minghelli. Cognitive linguistic representation of legal events. In Proceedings of COGNITIVE, 2014.

Paheli Bhattacharya, Kripabandhu Ghosh, Saptarshi Ghosh, Arindam Pal, Parth Mehta, Arnab Bhattacharya, and Prasenjit Majumder. Fire 2019 aila track: Artificial intelligence for legal assistance. In Proceedings of FIRE, 2019.

Paheli Bhattacharya, Kripabandhu Ghosh, Arindam Pal, and Saptarshi Ghosh. Hier-spcnet: a legal statute hierarchy-based heterogeneous network for computing legal case document similarity. In Proceedings of SIGIR, 2020.

Paheli Bhattacharya, Parth Mehta, Kripabandhu Ghosh, Saptarshi Ghosh, Arindam Pal, Arnab Bhattacharya, and Prasenjit Majumder. Overview of the fire 2020 aila track: Artificial intelligence for legal assistance. In Proceedings of FIRE, 2020.

Rishi Bommasani, Drew A Hudson, Ehsan Adeli, Russ Altman, Simran Arora, Sydney von Arx, Michael S Bernstein, Jeannette Bohg, Antoine Bosselut, Emma Brunskill, et al. On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258, 2021.

Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. In Proceedings of NeurIPS, 2020.

Ilias Chalkidis and Ion Androutsopoulos. A deep learning approach to contract element extraction. In JURIX, 2017.

Ilias Chalkidis, Ion Androutsopoulos, and Nikolaos Aletras. Neural legal judgment prediction in English. In Proceedings of ACL, 2019.

Ilias Chalkidis, Ion Androutsopoulos, and Achilleas Michos. Extracting contract elements. In Proceedings of ICAIL, 2017.

Ilias Chalkidis, Manos Fergadiotis, Prodromos Malakasiotis, Nikolaos Aletras, and Ion Androutsopoulos. Legal-BERT: The muppets straight out of law school. In Proceedings of EMNLP Findings, 2020.

Ilias Chalkidis and Dimitrios Kampas. Deep learning in law: early adaptation and legal word embeddings trained on large corpora. Artificial Intelligence and Law, 27(2):171–198, 2019.

Ilias Chalkidis, Tommaso Pasini, Sheng Zhang, Letizia Tomada, Sebastian Schwemer, and Anders Søgaard. Fairlex: A multilingual benchmark for evaluating fairness in legal text processing. In Proceedings of ACL, 2022.

Huajie Chen, Deng Cai, Wei Dai, Zehui Dai, and Yadong Ding. Charge-based prison term prediction with deep gating network. In Proceedings of EMNLP-IJCNLP, 2019.

Su Chen, He Tian, Yanbin Lyu, and Hu Changming. Annual report on informatization of Chinese courts (in Chinese). Technical report, 2022.

Wenqing Chen, Jidong Tian, Liqiang Xiao, Hao He, and Yaohui Jin. Exploring logically dependent multi-task learning with causal inference. In Proceedings of EMNLP, 2020.

Yanguang Chen, Yuanyuan Sun, Zhihao Yang, and Hongfei Lin. Joint entity and relation extraction for legal documents with legal feature enhancement. In Proceedings of COLING, 2020.

Jerome Alan Cohen. The criminal procedure law of the People’s Republic of China. The Journal of Criminal Law and Criminology, 1982.

Legal Services Corporation. The justice gap: Measuring the unmet civil legal needs of low-income americans, 2017.

Junyun Cui, Xiaoyu Shen, Feiping Nie, Zheng Wang, Jinglong Wang, and Yulong Chen. A survey on legal judgment prediction: Datasets, metrics, models and challenges. arXiv preprint arXiv:2204.04859, 2022.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: pre-training of deep bidirectional transformers for language understanding. In Proceedings of NAACL-HLT, 2019.

Jenish Dhanani, Rupa Mehta, and Dipti Rana. Effective and scalable legal judgment recommendation using pre-learned word embedding. Complex & Intelligent Systems, pages 1–15, 2022.

George R Doddington, Alexis Mitchell, Mark A Przybocki, Lance A Ramshaw, Stephanie M Strassel, and Ralph M Weischedel. The automatic content extraction (ace) program-tasks, data, and evaluation. In Proceedings of LREC, 2004.

Stella Douka, Hadi Abdine, Michalis Vazirgiannis, Rajaa El Hamdani, and David Restrepo Amariles. JuriBERT: A masked-language model adaptation for French legal text. In Proceedings of the Natural Legal Language Processing Workshop 2021, 2021.

Xingyi Duan, Baoxin Wang, Ziyue Wang, Wentao Ma, Yiming Cui, Dayong Wu, Shijin Wang, Ting Liu, Tianxiang Huo, Zhen Hu, et al. CJRC: A reliable human-annotated benchmark dataset for Chinese judicial reading comprehension. In Proceedings of CCL, 2019.

Donald J Farole and Lynn Langton. County-based and local public defender offices, 2007. US Department of Justice, Office of Justice Programs, Bureau of Justice …, 2010.

Yi Feng, Chuanyi Li, and Vincent Ng. Legal judgment prediction via event extraction with constraints. In Proceedings of ACL, 2022.

Jens Frankenreiter and Michael A Livermore. Computational methods in legal analysis. Annual Review of Law and Social Science, 16:39–57, 2020.

Leilei Gan, Kun Kuang, Yi Yang, and Fei Wu. Judgment prediction via injecting legal knowledge into neural networks. In Proceedings of AAAI, 2021.

Tianyu Gao, Adam Fisch, and Danqi Chen. Making pre-trained language models better few-shot learners. In Proceedings of ACL, 2021.

Anne von der Lieth Gardner. An artificial intelligence approach to legal reasoning. MIT Press, 1987.

Nicolas Garneau, Eve Gaumond, Luc Lamontagne, and Pierre-Luc Déziel. CriminelBART: A French Canadian legal language model specialized in criminal law. In Proceedings of ICAIL, 2021.

Ajay Gupta, Devendra Verma, Sachin Pawar, Sangameshwar Patil, Swapnil Hingmire, Girish K Palshikar, and Pushpak Bhattacharyya. Identifying participant mentions and resolving their coreferences in legal court judgements. In Proceedings of TSD, 2018.

Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, and Mingwei Chang. Retrieval augmented language model pre-training. In Proceedings of ICML, 2020.

Peter Henderson, Mark S Krass, Lucia Zheng, Neel Guha, Christopher D Manning, Dan Jurafsky, and Daniel E Ho. Pile of Law: Learning responsible data filtering from the law and a 256GB open-source legal dataset. arXiv preprint arXiv:2207.00220, 2022.

Dan Hendrycks, Collin Burns, Anya Chen, and Spencer Ball. CUAD: An expert-annotated NLP dataset for legal contract review. arXiv preprint arXiv:2103.06268, 2021.

Zikun Hu, Xiang Li, Cunchao Tu, Zhiyuan Liu, and Maosong Sun. Few-shot charge prediction with discriminative legal attributes. In Proceedings of COLING, 2018.

Zihan Huang, Charles Low, Mengqiu Teng, Hongyi Zhang, Daniel E Ho, Mark S Krass, and Matthias Grabmair. Context-aware legal citation recommendation using deep learning. In Proceedings of ICAIL, 2021.

Peter C Humphreys, David Raposo, Tobias Pohlen, Gregory Thornton, Rachita Chhaparia, Alistair Muldal, Josh Abramson, Petko Georgiev, Adam Santoro, and Timothy Lillicrap. A data-driven approach for learning to control computers. In Proceedings of ICML, 2022.

Wonseok Hwang, Dongjun Lee, Kyoungyeon Cho, Hanuhl Lee, and Minjoon Seo. A multi-task benchmark for Korean legal language understanding and judgement prediction. arXiv preprint arXiv:2206.05224, 2022.

Johnathan Jenkins. What can information technology do for law. Harv. JL & Tech., 21:589, 2007.

Arnav Kapoor, Mudit Dhawan, Anmol Goel, TH Arjun, Akshala Bhatnagar, Vibhu Agrawal, Amul Agrawal, Arnab Bhattacharya, Ponnurangam Kumaraguru, and Ashutosh Modi. HLDC: Hindi legal documents corpus. arXiv preprint arXiv:2204.00806, 2022.

Daniel Martin Katz, Michael J Bommarito, and Josh Blackman. A general approach for predicting the behavior of the Supreme Court of the United States. PLoS ONE, 12(4):e0174698, 2017.

Yoon Kim. Convolutional neural networks for sentence classification. In Proceedings of EMNLP, 2014.

Fred Kort. Predicting Supreme Court decisions mathematically: A quantitative analysis of the “right to counsel” cases. American Political Science Review, 51(1):1–12, 1957.

Sushanta Kumar, P Krishna Reddy, V Balakista Reddy, and Aditya Singh. Similarity analysis of legal judgments. In Proceedings of COMPUTE, 2011.

Nikolaos Lagos, Frederique Segond, Stefania Castellani, and Jacki O’Neill. Event extraction for legal case building and reasoning. In Proceedings of IIP, 2010.

Benjamin E Lauderdale and Tom S Clark. The Supreme Court’s many median justices. American Political Science Review, 106(4):847–866, 2012.

Spyretta Leivaditi, Julien Rossi, and Evangelos Kanoulas. A benchmark for lease contract review. arXiv preprint arXiv:2010.10386, 2020.

Patrick Lewis, Ethan Perez, Aleksandra Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Heinrich Küttler, Mike Lewis, Wen-tau Yih, Tim Rocktäschel, et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. In Proceedings of NeurIPS, 2020.

Chuanyi Li, Yu Sheng, Jidong Ge, and Bin Luo. Apply event extraction techniques to the judicial field. In Proceedings of UbiComp-ISWC, 2019.

Bulou Liu, Yueyue Wu, Yiqun Liu, Fan Zhang, Yunqiu Shao, Chenliang Li, Min Zhang, and Shaoping Ma. Conversational vs traditional: Comparing search behavior and outcome in legal case retrieval. In Proceedings of SIGIR, 2021.

Bulou Liu, Yueyue Wu, Fan Zhang, Yiqun Liu, Zhihong Wang, Chenliang Li, Min Zhang, and Shaoping Ma. Query generation and buffer mechanism: Towards a better conversational agent for legal case retrieval. Information Processing & Management, 59(5):103051, 2022.

Liting Liu, Wenzheng Zhang, Jie Liu, Wenxuan Shi, and Yalou Huang. Interpretable charge prediction for legal cases based on interdependent legal information. In Proceedings of IJCNN, 2021.

Xiao Liu, Da Yin, Yansong Feng, Yuting Wu, and Dongyan Zhao. Everything has a cause: Leveraging causal inference in legal text analysis. In Proceedings of NAACL, 2021.

Yiqun Liu. Establishing a robust system of rules for the application of legal artificial intelligence to achieve a higher level of digital justice (in Chinese). China Internet Civilization Conference, 2022.

Zhiyuan Liu, Yankai Lin, and Maosong Sun. Representation Learning for Natural Language Processing. Springer, 2020.

Zhiyuan Liu, Cunchao Tu, and Maosong Sun. Legal cause prediction with inner descriptions and outer hierarchies. In Proceedings of CCL, 2019.

Daniel Locke and Guido Zuccon. Case law retrieval: problems, methods, challenges and evaluations in the last 20 years. arXiv preprint arXiv:2202.07209, 2022.

Shangbang Long, Cunchao Tu, Zhiyuan Liu, and Maosong Sun. Automatic judgment prediction via legal reading comprehension. In Proceedings of CCL, 2019.

Antoine Louis and Gerasimos Spanakis. A statutory article retrieval dataset in French. In Proceedings of ACL, 2022.

Bingfeng Luo, Yansong Feng, Jianbo Xu, Xiang Zhang, and Dongyan Zhao. Learning to predict charges for criminal cases with legal basis. In Proceedings of EMNLP, 2017.

Yixiao Ma, Qingyao Ai, Yueyue Wu, Yunqiu Shao, Yiqun Liu, Min Zhang, and Shaoping Ma. Incorporating retrieval information into the truncation of ranking lists for better legal search. In Proceedings of SIGIR, 2022.

Yixiao Ma, Yunqiu Shao, Yueyue Wu, Yiqun Liu, Ruizhe Zhang, Min Zhang, and Shaoping Ma. LeCaRD: a legal case retrieval dataset for Chinese law system. In Proceedings of SIGIR, 2021.

Vijit Malik, Rishabh Sanjay, Shubham Kumar Nigam, Kripabandhu Ghosh, Shouvik Kumar Guha, Arnab Bhattacharya, and Ashutosh Modi. ILDC for CJPE: Indian legal documents corpus for court judgment prediction and explanation. In Proceedings of ACL-IJCNLP, 2021.

MarketLine. Legal services in the United States. Technical report, 2021.

Peter W Martin. Online access to court records-from documents to data, particulars to patterns. Vill. L. Rev., 53:855, 2008.

Masha Medvedeva, Michel Vols, and Martijn Wieling. Using machine learning to predict decisions of the European Court of Human Rights. Artificial Intelligence and Law, 28(2):237–266, 2020.

T Mikolov and J Dean. Distributed representations of words and phrases and their compositionality. In Proceedings of NeurIPS, 2013.

Stuart S Nagel. Applying correlation analysis to case prediction. Tex. L. Rev., 42:1006, 1963.

Reiichiro Nakano, Jacob Hilton, Suchir Balaji, Jeff Wu, Long Ouyang, Christina Kim, Christopher Hesse, Shantanu Jain, Vineet Kosaraju, William Saunders, et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv preprint arXiv:2112.09332, 2021.

Ziad Obermeyer, Brian Powers, Christine Vogeli, and Sendhil Mullainathan. Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464):447–453, 2019.

Long Ouyang, Jeff Wu, Xu Jiang, Diogo Almeida, Carroll L Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, et al. Training language models to follow instructions with human feedback. arXiv preprint arXiv:2203.02155, 2022.

Sicheng Pan, Tun Lu, Ning Gu, Huajuan Zhang, and Chunlin Xu. Charge prediction for multi-defendant cases with multi-scale attention. In Proceedings of ChineseCSWC, 2019.

Vedant Parikh, Upal Bhattacharya, Parth Mehta, Ayan Bandyopadhyay, Paheli Bhattacharya, Kripa Ghosh, Saptarshi Ghosh, Arindam Pal, Arnab Bhattacharya, and Prasenjit Majumder. AILA 2021: Shared task on artificial intelligence for legal assistance. In Proceedings of FIRE, 2021.

So-Hui Park, Dong-Gu Lee, Jin-Sung Park, and Jun-Woo Kim. A survey of research on data analytics-based legal tech. Sustainability, 13(14):8085, 2021.

Jeffrey Pennington, Richard Socher, and Christopher Manning. GloVe: Global vectors for word representation. In Proceedings of EMNLP, 2014.

Matthew Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke Zettlemoyer. Deep contextualized word representations. In Proceedings of NAACL-HLT, 2018.

James Popple. A pragmatic legal expert system. Dartmouth (Ashgate), 1996.

François Quintard-Morénas. The presumption of innocence in the French and Anglo-American legal traditions. The American Journal of Comparative Law, 58(1):107–149, 2010.

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21:1–67, 2020.

Carlo Sansone and Giancarlo Sperlí. Legal information retrieval systems: State-of-the-art and open issues. Information Systems, 106:101967, 2022.

Manavalan Saravanan, Balaraman Ravindran, and Shivani Raman. Improving legal information retrieval using an ontological framework. Artificial Intelligence and Law, 17(2):101–124, 2009.

Patrick Schramowski, Cigdem Turan, Nico Andersen, Constantin A Rothkopf, and Kristian Kersting. Large pre-trained language models contain human-like biases of what is right and wrong to do. Nature Machine Intelligence, 4(3):258–268, 2022.

Yunqiu Shao, Jiaxin Mao, Yiqun Liu, Weizhi Ma, Ken Satoh, Min Zhang, and Shaoping Ma. BERT-PLI: Modeling paragraph-level interactions for legal case retrieval. In Proceedings of IJCAI, 2020.

Yunqiu Shao, Yueyue Wu, Yiqun Liu, Jiaxin Mao, and Shaoping Ma. Understanding relevance judgments in legal case retrieval. ACM Transactions on Information Systems, 2022.

Yunqiu Shao, Yueyue Wu, Yiqun Liu, Jiaxin Mao, Min Zhang, and Shaoping Ma. Investigating user behavior in legal case retrieval. In Proceedings of SIGIR, 2021.

Shirong Shen, Guilin Qi, Zhen Li, Sheng Bi, and Lusheng Wang. Hierarchical Chinese legal event extraction via pedal attention mechanism. In Proceedings of COLING, 2020.

Yi Shu, Yao Zhao, Xianghui Zeng, and Qingli Ma. CAIL2019-FE. Technical report, 2019.

Tony Sun, Andrew Gaut, Shirlyn Tang, Yuxin Huang, Mai ElSherief, Jieyu Zhao, Diba Mirza, Elizabeth Belding, Kai-Wei Chang, and William Yang Wang. Mitigating gender bias in natural language processing: Literature review. In Proceedings of ACL, 2019.

Richard E Susskind. The latent damage system: A jurisprudential analysis. In Proceedings of ICAIL, 1989.

Victor Tadros and Stephen Tierney. The presumption of innocence and the Human Rights Act. The Modern Law Review, 67(3):402–434, 2004.

Russ VerSteeg. Law in ancient Egypt. Carolina Academic Press, 2002.

Xiaozhi Wang, Tianyu Gao, Zhaocheng Zhu, Zhengyan Zhang, Zhiyuan Liu, Juanzi Li, and Jian Tang. KEPLER: A unified model for knowledge embedding and pre-trained language representation. Transactions of the Association for Computational Linguistics, 9:176–194, 2021.

Xiaozhi Wang, Ziqi Wang, Xu Han, Wangyi Jiang, Rong Han, Zhiyuan Liu, Juanzi Li, Peng Li, Yankai Lin, and Jie Zhou. MAVEN: A massive general domain event detection dataset. In Proceedings of EMNLP, 2020.

Yuzhong Wang, Chaojun Xiao, Shirong Ma, Haoxi Zhong, Cunchao Tu, Tianyang Zhang, Zhiyuan Liu, and Maosong Sun. Equality before the law: legal judgment consistency analysis for fairness. arXiv preprint arXiv:2103.13868, 2021.

Zihan Wang, Hongye Song, Zhaochun Ren, Pengjie Ren, Zhumin Chen, Xiaozhong Liu, Hongsong Li, and Maarten de Rijke. Cross-domain contract element extraction with a bi-directional feedback clause-element relation network. In Proceedings of SIGIR, 2021.

Jason Wei, Maarten Bosma, Vincent Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M Dai, and Quoc V Le. Finetuned language models are zero-shot learners. In Proceedings of ICLR, 2021.

Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Ed Chi, Quoc Le, and Denny Zhou. Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903, 2022.

Laura Weidinger, John Mellor, Maribeth Rauh, Conor Griffin, Jonathan Uesato, Po-Sen Huang, Myra Cheng, Mia Glaese, Borja Balle, Atoosa Kasirzadeh, et al. Ethical and social risks of harm from language models. arXiv preprint arXiv:2112.04359, 2021.

Chaojun Xiao, Xueyu Hu, Zhiyuan Liu, Cunchao Tu, and Maosong Sun. Lawformer: A pre-trained language model for Chinese legal long documents. AI Open, 2:79–84, 2021.

Chaojun Xiao, Haoxi Zhong, Zhipeng Guo, Cunchao Tu, Zhiyuan Liu, Maosong Sun, Yansong Feng, Xianpei Han, Zhen Hu, Heng Wang, et al. CAIL2018: A large-scale legal dataset for judgment prediction. arXiv preprint arXiv:1807.02478, 2018.

Chaojun Xiao, Haoxi Zhong, Zhipeng Guo, Cunchao Tu, Zhiyuan Liu, Maosong Sun, Tianyang Zhang, Xianpei Han, Zhen Hu, Heng Wang, et al. CAIL2019-SCM: A dataset of similar case matching in legal domain. arXiv preprint arXiv:1911.08962, 2019.

Zhuopeng Xu, Xia Li, Yinlin Li, Zihan Wang, Yujie Fanxu, and Xiaoyan Lai. Multi-task legal judgement prediction combining a subtask of the seriousness of charges. In Proceedings of CCL, 2020.

Jun Yang, Weizhi Ma, Min Zhang, Xin Zhou, Yiqun Liu, and Shaoping Ma. LegalGNN: Legal information enhanced graph neural network for recommendation. ACM Transactions on Information Systems (TOIS), 40(2):1–29, 2021.

Feng Yao, Chaojun Xiao, Xiaozhi Wang, Zhiyuan Liu, Lei Hou, Cunchao Tu, Juanzi Li, Yun Liu, Weixing Shen, and Maosong Sun. LEVEN: A large-scale Chinese legal event detection dataset. In Findings of ACL, 2022.