Abstract

Because COVID-19 is a disease whose spread is correlated with the mobility patterns of susceptible individuals, understanding how it affects a population is by no means a univariate problem. As with other communicable diseases caused by viruses such as HIV, SARS, MERS, and Ebola, the nuances of the socioeconomic strata of the vulnerable population are important predictors and precursors of how certain components of society will be differentially affected by the spread of the disease. In this work, we delineate the use of multivariate analyses in the form of interpretable machine learning to understand the causal connection between socioeconomic disparities and the initial spread of COVID-19. We show why this remains a concern even in a developed nation like the USA, which has a world-leading healthcare system. We then emphasize why data quality is important for such methodologies and what a developing nation like India can do to build a framework for data-driven policy building in the event of a natural crisis like the ongoing pandemic. We hope that realistic implementations of this work can lead to more insightful policies and directives based on real-world statistics rather than on subjective modeling of disease spread.

Introduction

Better data, better lives—United Nations.

The spread of SARS-CoV-2 amongst the human population since the end of 2019 was rapid and has been relentless. As we write this chapter, well over five million lives have been lost to COVID-19, several hundreds of millions have been directly affected, and the entire population of the world is indirectly affected by the pandemic. A natural crisis of this scale has not occurred in the recent history of mankind, and we have scrambled to contain outbreaks all over the world. Needless to say, like several other diseases, COVID-19 has disproportionately affected the most vulnerable in society [1,2,3,4,5,6,7]. These vulnerabilities were both medical and socioeconomic in nature.

Tracking medical vulnerabilities to a disease is a well-defined task that progresses through clinical observations and data collection, in addition to scientific studies of varying complexity that span several branches of the life and medical sciences. However, tracking socioeconomic vulnerabilities to a novel disease is, to say the least, an ill-defined task. This is aggravated by numerous and severe variations in socioeconomic demographics that are often deeply rooted in unquantifiable cultural, economic, geographic, and geopolitical sources, each bringing its own set of vulnerabilities to the population of concern. Moreover, performing controlled experiments to collect data from any subsystem of society without perturbing the subsystem itself is almost impossible, regardless of which part of the socioeconomic demographics one wishes to study [8].

Thus, the urgency to understand how socioeconomic disparities amplify the spread of a novel disease, which in turn amplifies the disparities themselves, translates into a requirement to develop mathematically novel and robust methods to glean patterns from available data. This urgency also requires rethinking the means and methods by which data is collected and made available. A greater participation of the scientific community is essential given the complexity of the problem at hand and its inherently interdisciplinary nature: the subtleties of the social sciences combine with the rigor of the mathematical sciences, and an understanding of the medical and life sciences is also required to capture the nuances of this predator-prey game being played between two species. The primary goal of this work is to present an introduction to data-driven methods focused on analyzing the effects of disparities on disease spread and vice versa. Computational socioeconomics is an emerging field in which large datasets and advanced multivariate methods help us understand socioeconomic structures and their interactions in far more mathematical detail than was previously possible [9]. However, these methods also bring the burden of increased analytical complexity, often resulting in lower interpretability, which makes them less appealing to those who seek an understanding of the underlying social dynamics rather than just data-driven predictions. To this effect, we would like to point out three necessary conditions for building a credible data-driven framework for disease mitigation. While these are necessary for any data analysis, they become particularly important when addressing problems that affect human lives. These are:

-

Representative data: In the normal course of science, data collection is often designed to answer a concrete scientific question about the real world. In such experiments it is sometimes possible to obtain a dataset that is quite representative of the system itself. However, this is not the case when data needs to be sourced to solve problems for which experiments with interventions cannot be designed. This is true for an unexpected natural crisis like the ongoing pandemic. There is simply no time or means to design experiments to collect data for most socioeconomic studies. In such cases, one has to rely on established sources of data and make accommodations for data that is not present or cannot be collected. So, the question of how representative the data is, or can be made, needs to be addressed at the beginning of any such study.

-

Reproducible analyses: If an analysis is to stand the test of time and prove its scientific rigor, it must be reproducible. For data-driven analyses, this requires that all data and code related to the analysis be made public and accessible. This is often overlooked by authors who publish their work in the scientific literature but choose to keep their code and data proprietary. This is to the detriment of future work and often gets in the way of ironing out errors that might have crept into the analysis.

-

Robust conclusions: Data-driven methods often lead to conclusions that hold only within certain bounds or are subject to assumptions made about the system under study. These bounds might arise from limited coverage of the data or from assumptions made during the analysis. This is especially true when socioeconomic data is used, since such data is extremely contextual and cultural. Hence, robust conclusions require that the domain of applicability of the analysis be part of the statement of the conclusions: the boundaries of this domain should be clearly delineated, and the regimes in which the conclusions do not hold should be explicitly stated.
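When the population shares of some grouping are known independently (for example, urban versus rural residents from census tables), one common way to make a non-representative sample more representative is post-stratification weighting: each record is weighted by its group's population share divided by that group's share in the sample. The sketch below is an illustration under our own assumptions; the groups, shares, and counts are hypothetical, and the correction only addresses imbalance in the grouping variable it is given.

```python
from collections import Counter

def poststratification_weights(sample_groups, population_shares):
    """Return one weight per sampled record so that weighted group shares
    match the known population shares. Every group in the sample must have
    an entry in `population_shares` (a KeyError is raised otherwise)."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    weights = []
    for group in sample_groups:
        sample_share = counts[group] / n
        weights.append(population_shares[group] / sample_share)
    return weights

# Hypothetical survey that over-represents urban respondents (80% vs 60%).
sample = ["urban"] * 80 + ["rural"] * 20
population = {"urban": 0.6, "rural": 0.4}
w = poststratification_weights(sample, population)
print(round(w[0], 3), round(w[-1], 3))  # 0.75 2.0
```

Urban records are down-weighted and rural records up-weighted so that the weighted sample matches the assumed 60/40 split. Groups that are entirely missing from the sample cannot be recovered this way, which is one reason representativeness has to be assessed before the analysis begins.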

From the perspective of a scientist versed in mathematics and algorithms, it is quite easy to meander through the theoretical possibilities of crafting various analyses, assuming that sufficient data exists or can be collected. This, however, stands in the way of democratizing data-driven methods, since the cost of performing such analyses might be too great for a group of concerned individuals or an institution to bear, regardless of the perceived benefits. Hence, it is important not only to lower the costs of data-driven strategies by making them accessible but also, as a long-term goal, to gradually build the expertise and resources that can support such strategies. The discussion in this work should be taken not only as an exposition of possibilities but also as a call to start building infrastructure, where possible, so that we can envisage a future in which data-driven methods are democratized and made available even to those parts of the world that are economically marginalized.

Where Can Data be Found?

Data-driven methods for any analysis of the perturbations caused in the socioeconomic strata by the onset of a disease face great challenges, even when the problem one wants to address is not especially difficult in itself. The onset of a novel disease, of course, makes matters much worse. As expounding on these challenges in the general context of disease mitigation is too broad a focus for this chapter, we concentrate on the challenges that arise specifically for COVID-19 because of the details of its transmission dynamics. Many of these challenges are relevant to other diseases as well, especially communicable diseases. The data necessary for an analysis depends on the details of the analysis being performed, but it can be broadly classified into aggregated data and individual-level data, the former being a statistical composition of the latter. We discuss the ethical considerations of collecting human data later in the chapter. A comprehensive overview of data and methods for computational socioeconomics can be found in Refs. [8, 9]. For now, let us describe the different types of data needed for building socioeconomic analyses related to the spread of COVID-19.

Disease Prevalence Data

At the beginning of the pandemic, several institutions, both private and public, started collecting data on COVID-19 prevalence, deaths, hospitalizations, and admissions to intensive care units (ICUs). One of the first institutions to collect such data worldwide was Johns Hopkins University [10]. Initially, data was available only for entire countries, but it was later made available at the county level for the USA and with some geographic granularity for some other countries. Following suit, several nations started maintaining publicly available data repositories that provided COVID-19 prevalence data at high geographic granularity. The amount of data made available on COVID-19 prevalence is unprecedented and highly decentralized. This decentralized model of maintaining data on disease prevalence works well and should be adopted for several other diseases. While a centralized database has the advantage of a fixed schema and easier access, it places the entire burden of data collection on a single agency. The optimal model would therefore be decentralized data collection adhering to a single schema.

A question naturally arises as to how detailed this data needs to be and what should be made available. Certainly, the data sources for different countries neither follow the same protocols nor present data in the same schema. There are variations in what is reported as the date of occurrence: some sources use the test date, others the test report date, and some provide both. This variation does not significantly affect socioeconomic analyses, since the differences amount to a few days at most. More seriously, there are large variations in the protocols used for declaring a positive infection and in testing strategies, which can seriously misalign the data when different nations are compared.
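Decentralized collection adhering to a single schema could look roughly like the sketch below: each source keeps its own format but ships an adapter that maps its rows onto one shared record type. Everything here is hypothetical and for illustration only: the `CaseRecord` type, the two source formats, their field names, and the rule of preferring the test date over the report date are our assumptions, not an existing standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class CaseRecord:
    """Hypothetical shared schema; both date fields are optional because
    sources differ in which date they report."""
    region_id: str                      # e.g. a county FIPS code (illustrative)
    new_cases: int
    test_date: Optional[date] = None
    report_date: Optional[date] = None

    def reference_date(self) -> date:
        """Assumed policy: prefer the test date, fall back to the report date."""
        if self.test_date is not None:
            return self.test_date
        if self.report_date is not None:
            return self.report_date
        raise ValueError("record has neither a test date nor a report date")

def from_source_a(row: dict) -> CaseRecord:
    # Hypothetical source that reports only the date the test was taken.
    return CaseRecord(row["fips"], int(row["cases"]),
                      test_date=date.fromisoformat(row["tested"]))

def from_source_b(row: dict) -> CaseRecord:
    # Hypothetical source that reports only the date the result was released.
    return CaseRecord(row["region"], int(row["positives"]),
                      report_date=date.fromisoformat(row["reported"]))

records = [
    from_source_a({"fips": "36061", "cases": "120", "tested": "2020-04-01"}),
    from_source_b({"region": "17031", "positives": "95", "reported": "2020-04-03"}),
]
for r in records:
    print(r.region_id, r.reference_date().isoformat(), r.new_cases)
```

Keeping both dates in the schema, rather than forcing every source to choose one, preserves the information needed to measure the few-day offset between the two conventions later.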

At the initial stage of the COVID-19 pandemic, all nations were scrambling to implement a coherent testing strategy and to prepare protocols for identifying COVID-19 as the cause of death of a decedent. These factors severely affect the recording of the progression of the disease in the population. Ironically, it is at the advent of a new health crisis that the most vulnerable are differentially affected. This often includes members of racial and religious minorities and the poorer fraction of society, who might have less access to healthcare and education and might live in regions with higher pollution. More often than not, the infrastructure in regions inhabited by a larger fraction of minorities and people living in poverty is subpar. These factors make it increasingly difficult to mitigate the spread of the disease in these regions or to deploy enough resources to trace it, which also degrades the quality of the data collected there. This, in turn, has a cascading effect on how the disease differentially affects these fractions of the population, as was evident from the beginning of the COVID-19 pandemic. In low- and middle-income countries, these problems can be nationwide. We discuss this separately later in the chapter.

The best way to address these challenges to data collection is to set up a standardized method for tracking diseases, old and new, with basic tenets established for creating new resources in the event of a new epidemic in the future. Certainly, some protocols need to be left open to accommodate the differences between diseases and their scale of spread. However, having the basic protocols established will go a long way toward avoiding confusion and the resulting loss of data at the critical point when a novel disease is spreading. Of course, the gold standard would be an international protocol for sending data on all diseases of concern to a centralized database maintained by a global collaboration such as the United Nations or by an institution dedicated to such data maintenance.

Health Data

There are two distinct kinds of health data that can be relevant for socioeconomic analyses designed to understand the spread of a disease. The first stems from the medical literature. Any disease differentially affects people with certain medical conditions, referred to as comorbidities. These comorbidities are often correlated with the socioeconomic background of individuals or collectives. For instance, individuals affected by obesity are at higher risk of hospitalization and death due to COVID-19 [11, 12]. Obesity also correlates with socioeconomic disparities and mental ill health [13]. Thus, the interdependence between physical or mental health and the risk from COVID-19 is inherently tied to socioeconomic disparities.

To understand how a disease differentially affects a certain fraction of society, one must understand the distinct signatures of each disease that correlate with and aggravate the disparities already present in society. As another example, it is a medical fact that COVID-19 spreads through contact, which implies that it spreads much faster in crowded regions. In the USA, counties with higher poverty rates are likely to have fewer single-family homes and more areas zoned for multi-family housing, or are likely to contain inner cities, and thus have a greater population density [14, 15]. Such dense areas are likely to have shorter commutes and larger transit networks because they are more populous [16, 17]. This implies that COVID-19 will spread more in these areas not only through household contact, a major mode of transmission, but also because a larger fraction of the population uses transit systems to commute [18,19,20].

Given these intricate correlations, the first kind of health data to be collected should come from an extensive study of the medical literature to understand quantitatively both the medical conditions that aggravate the risk from a disease and the transmission dynamics of the disease. For a novel disease, both form part of the emerging knowledge at the beginning of its spread. Therefore, it is difficult to root out differential spreading of the disease due to socioeconomic disparities from the outset, although this should by no means discourage attempts to do so based on a minimal set of metrics available in real time.

The second kind of health data necessary to set up most analyses is data on medical conditions, both for individuals and as distributions in the population. Individual data is necessary to perform controlled tests that give insight into differential spread in a particular segment of the population. The racial and ethnic disparities in COVID-19 deaths in the USA can be established by collecting individual data on the race and ethnicity of decedents [21]. However, this does not reveal whether the disparity stems from a physiological predisposition to severe infection or from disparities that exist within the socioeconomic strata. To understand causality, one needs population-wide distributions of the factors that can contribute to these differences in death rates, including population-wide comorbidity data [22, 23].

The collection of health data is possibly the most complex part of the data collection process. It is clear that the number of variables can become quite large and difficult to glean. Moreover, the need to understand correlations between these variables necessitates that a large amount of data be collected which can get quite challenging either because the data itself might not be available or the infrastructure necessary for its collection might be absent. The latter is particularly challenging for developing nations.

Mobility Data

While the other data sources are, in general, relevant to computational socioeconomics analyses of any disease, the spread of COVID-19 in particular requires a mapping of human mobility within the population because of the dynamics of its spread. Mobility data can play a role in analyzing the spread of any communicable disease. However, it is particularly important for COVID-19 for two reasons:

-

SARS-CoV-2 transmission, like that of influenza viruses, is driven by pre-symptomatic spreading [24,25,26]. This is not necessarily true for other communicable viral diseases, such as those caused by Variola, SARS-CoV, MERS-CoV, and Ebola, which spread primarily in the symptomatic phase [27,28,29,30].

-

The pathogen can be transmitted through the air in highly contaminated regions and via contaminated dry surfaces, on which it can remain for several days, leading to high transmission rates [24, 31, 32]. This brings additional challenges when the disease cannot be contained within the isolated envelope of a healthcare system. While a similar spreading pattern is seen in SARS-CoV and MERS-CoV, this makes SARS-CoV-2 more easily transmissible than some other diseases such as Ebola.

The first reason makes tracking COVID-19 transmission extremely difficult, even with automated or manual contact tracing [33, 34]. The second reason highlights the importance of information on how people interact with each other at local scales. Hence, to make coherent predictions about the spread of the disease or to understand causalities, mobility data is of utmost importance.

Individual mobility data is used in the construction of Individual-Based Models of disease transmission, which are specialized agent-based models for predicting disease transmission that take into account the details of the entire population of a region or country [35,36,37,38]. Aggregated mobility data can also be used to model disease spread across large geographical areas [39, 40]. Even more relevant for this work, mobility data contains signatures that can be directly connected to socioeconomic disparities and to how mitigation measures affect the most vulnerable parts of society [41]. The latter, in turn, can tell us how effective certain mitigation measures are in areas where socially disadvantaged people live.
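To illustrate how aggregated mobility can enter a model of spread across regions, here is a minimal metapopulation SIR sketch. It is our own simplified illustration, not one of the models in the cited references: the mobility matrix `M`, the parameters `beta` and `gamma`, and all populations are hypothetical, and residents of each region are assumed to be exposed to the prevalence in the regions where they spend their day.

```python
import numpy as np

def metapopulation_sir(N, I0, M, beta, gamma, days):
    """Discrete-time SIR model for k regions coupled by a mobility matrix.

    N, I0 : population and initial infections per region, shape (k,)
    M     : M[i, j] = fraction of region i's residents who spend the day in
            region j (hypothetical input; each row must sum to 1)
    beta  : transmission rate per day; gamma : recovery rate per day
    Returns final S, I, R and the daily history of I, shape (days + 1, k).
    """
    N = np.asarray(N, dtype=float)
    I = np.asarray(I0, dtype=float)
    S = N - I
    R = np.zeros_like(N)
    history = [I.copy()]
    for _ in range(days):
        present = M.T @ N              # people present in each region by day
        infectious_present = M.T @ I   # infectious people present in each region
        prevalence = infectious_present / present
        # Residents of region i are exposed to the prevalence where they spend time.
        force = np.minimum(beta * (M @ prevalence), 1.0)
        new_infections = S * force
        new_recoveries = gamma * I
        S = S - new_infections
        I = I + new_infections - new_recoveries
        R = R + new_recoveries
        history.append(I.copy())
    return S, I, R, np.array(history)

# Hypothetical two-region example: infections are seeded only in region 0.
N = np.array([1_000_000.0, 200_000.0])
I0 = np.array([100.0, 0.0])
coupled = np.array([[0.95, 0.05], [0.20, 0.80]])
isolated = np.eye(2)
_, _, _, hist_coupled = metapopulation_sir(N, I0, coupled, beta=0.3, gamma=0.1, days=60)
_, _, _, hist_isolated = metapopulation_sir(N, I0, isolated, beta=0.3, gamma=0.1, days=60)
print(hist_coupled[-1, 1] > 0, hist_isolated[-1, 1] == 0)  # True True
```

With no mobility between regions (`np.eye(2)`), region 1 never sees an infection; with even modest commuting, the outbreak seeded in region 0 reaches it. Real applications would estimate `M` from mobility data rather than assume it.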

Even with all the advantages and scientific possibilities that mobility data brings, such data is not easy to find. The highest quality mobility data comes from anonymized signals collected from cellphones. Several commercial institutions harvest these datasets, but mostly in a few countries where they have commercial interests. Some of these institutions have made their data available for academic research related to COVID-19. One of the most notable is SafeGraph, which provides Point-of-Interest data for the United States of America. It provides raw data spanning several years, broken down to census block groups, with significant granularity in place types and foot traffic at all locations and between pairs of locations. This opens up significant scope for modeling mobility, be it at the aggregated level or through more detailed models based on mobility networks [41]. SafeGraph data can be requested from https://www.safegraph.com/publications/academic-research, and the page includes a list of publications that have used the data.

The other source of mobility data is Google. The advantage of its dataset is that it is available for almost all nations and, for some, is broken down by states and districts (counties). However, Google does not provide the raw data; it only reports changes relative to a baseline computed from historical data. It provides aggregated data for only a few place categories and no details of foot traffic like those available from SafeGraph. Hence, this dataset, while useful, is of limited utility. The datasets can be found at https://www.google.com/covid19/mobility.
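To make "numbers relative to a baseline" concrete: Google describes its baseline as the median value for the corresponding day of the week during the five-week period from January 3 to February 6, 2020. The sketch below mirrors that description on hypothetical visit counts; it is not Google's code, and any further processing Google applies is not reproduced.

```python
from datetime import date, timedelta
from statistics import median

def weekday_baselines(visits, start, end):
    """Median visit count for each weekday (0 = Monday) over [start, end]."""
    by_weekday = {wd: [] for wd in range(7)}
    day = start
    while day <= end:
        if day in visits:
            by_weekday[day.weekday()].append(visits[day])
        day += timedelta(days=1)
    return {wd: median(vals) for wd, vals in by_weekday.items() if vals}

def percent_change(visits, baselines, day):
    """Change in visits on `day` relative to the baseline for its weekday, in %."""
    base = baselines[day.weekday()]
    return 100.0 * (visits[day] - base) / base

# Hypothetical daily visit counts to one place category in one county.
start, end = date(2020, 1, 3), date(2020, 2, 6)
visits = {}
day = start
while day <= end:
    visits[day] = 100 if day.weekday() < 5 else 60   # weekdays vs weekends
    day += timedelta(days=1)
visits[date(2020, 4, 6)] = 40    # a Monday during stay-at-home orders
baselines = weekday_baselines(visits, start, end)
print(percent_change(visits, baselines, date(2020, 4, 6)))  # -60.0
```

Because only the percentage is published, the absolute level of activity (100 visits here) is lost, which is one reason such data is of limited utility compared with raw foot-traffic counts.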

Demographic Data

The final piece that ties human behavior and disease dynamics to the factors that encode socioeconomic disparities is demographic data. Although it is the easiest data to access through public databases, it is the most challenging to deal with in terms of variable selection, understanding confounding factors, modeling interventions, and real-time data collection. Focusing on the USA, demographic data is easily accessible through the US Census Bureau. However, two questions must be addressed when deciding which socioeconomic metrics should be derived from this data.

Firstly, the question of confounding variables is of prime importance in determining the set of variables to be used. This decision often boils down to which factors affect the phenomenon under study. For example, in the case of the spread of COVID-19, it is evident that factors like population density, mobility among the population, poverty, and access to healthcare matter in determining how much the disease affects the population in terms of its spread and the deaths it causes. However, one must understand whether factors like education and insurance coverage actually affect the spread of the disease and are determining factors. This is necessary because the variable that is causally connected to the outcome must be identified to develop any intervention.

The second question follows naturally from the first: the question of correlations between variables. It is well understood that using common linear methods to infer causation gets entangled in correlations, because highly correlated variables cannot be used simultaneously in an analysis based on linear models. Moving towards non-linear models, like those used in machine learning, allows one to escape the curse of correlations, but at the price of losing the interpretability of the model. We address this in a later section. These two considerations are paramount in deciding which variables to consider among demographic data, since any set of variables can be highly correlated given that several aspects of society are indeed interdependent and defy any explicit separation of causes.
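A standard diagnostic for the kind of correlation that troubles linear models is the variance inflation factor (VIF): regress each candidate variable on all the others and compute $1/(1-R^2)$. The sketch below implements it directly with NumPy least squares on synthetic data; the variable names are hypothetical, and the common rule of thumb that a VIF above roughly 5 or 10 signals problematic collinearity is a convention, not a strict threshold.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 is the coefficient of determination
    from an ordinary least-squares regression of column j on all other columns
    (plus an intercept)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        residuals = y - A @ coef
        r2 = 1.0 - (residuals @ residuals) / np.sum((y - y.mean()) ** 2)
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs

# Synthetic county-level variables (hypothetical, for illustration only).
rng = np.random.default_rng(0)
poverty = rng.normal(size=500)
density = 0.9 * poverty + 0.1 * rng.normal(size=500)  # strongly tied to poverty
elevation = rng.normal(size=500)                      # unrelated to both
vif = variance_inflation_factors(np.column_stack([poverty, density, elevation]))
print(np.round(vif, 1))
```

Here `poverty` and `density` receive large VIFs while `elevation` stays near 1, flagging the pair that a linear model could not disentangle.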

Multivariate Methods

Possibly the greatest challenge in computational socioeconomics is the definition of variables that need to be focused on in any analysis. However, in this work we will not focus on the complexities and methodologies involved in the choice of variables. Rather we will assume that this choice has already been made and the analysis at hand includes several exogenous variables and one or many endogenous variables. Any mathematical structure that is used to tackle this analysis quantitatively can be called a multivariate method. Just as everything comes at a cost, multivariate methods also have costs associated with them.

-

Higher-dimensional analyses typically require much larger datasets, which often cannot be obtained from an experiment or sourced from existing data. Moreover, several multivariate methods are less interpretable because of their inherent mathematical complexity. This is certainly true of machine learning frameworks, which are among the most complex mathematical structures that can be used to find patterns in data and hence, sometimes, the most effective ones.

-

Even if one finds a large enough dataset and is willing to use complex algorithms to find patterns in the data, computational costs can become a concern, especially if the analyses need to be done by individuals with limited access to computational facilities.

Machine Learning

Machine learning has become a very broad term for a class of statistical methods used to understand patterns in complex datasets. The breadth of machine learning covers everything from linear regression to decision trees to neural networks. Depending on the type of data used for an analysis, a suitable machine learning method can be chosen. It should be noted that, more often than not, real-world data shows linear and higher-order correlations among the exogenous variables, and not all machine learning methods can probe these correlations. Moreover, the relations between the endogenous and exogenous variables can be far from linear. Hence, linear models are not always the best choice, although they are the most interpretable.

To incorporate possible non-linearities in the data, one can use decision trees to build models [42]. These are simple rule-based algorithms that can be trained on data. Simple decision trees might be insufficient for modeling all the complexities in a dataset but are highly interpretable. Much better regressors can be created from ensembles of decision trees, such as boosted decision trees or random forests [43,44,45,46]. While these are much better at capturing the patterns in a dataset, they lack the simple interpretation that a single decision tree offers. Methods based on ensembles of trees are quite popular in the physical and life sciences and are also widely used in clinical research. However, they must be used with much caution when dealing with social data [47, 48]. There is generally no good reason to use neural networks on tabular data, be it cross-sectional or panel data. Neural networks are universal function approximators that outperform tree-based algorithms in several applications, such as image recognition, sequence-to-sequence analyses, time series analyses, and natural language processing. However, these kinds of analyses are almost never used in the study of disparities and disease, except in special cases, e.g., where large social network datasets are used to understand sentiments and trends [49]. So, we limit our discussion here to tree-based algorithms.
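To make "simple rule-based algorithms" concrete: a regression tree is grown by repeatedly choosing the split that most reduces the squared error of predicting each side by its mean. The sketch below shows the smallest possible case, one feature and one split (a "stump"), on hypothetical data. It is an illustration only; library implementations such as scikit-learn's `DecisionTreeRegressor` search over all features and recurse, and ensembles combine many such trees.

```python
import numpy as np

def fit_stump(x, y):
    """Fit a depth-1 regression tree ("stump") on a single feature.

    Tries every threshold between consecutive distinct sorted values of x and
    keeps the one minimising the total squared error when each side is
    predicted by its own mean. Returns (threshold, left_mean, right_mean).
    """
    order = np.argsort(x)
    xs, ys = np.asarray(x, float)[order], np.asarray(y, float)[order]
    best_sse, best = np.inf, None
    for k in range(1, len(xs)):
        if xs[k] == xs[k - 1]:
            continue  # equal values cannot be separated by a threshold
        left, right = ys[:k], ys[k:]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best_sse:
            best_sse = sse
            best = (0.5 * (xs[k - 1] + xs[k]), left.mean(), right.mean())
    return best

# Hypothetical data: poverty rate (%) and a case rate that jumps above ~15%.
poverty_rate = np.array([5.0, 8.0, 10.0, 12.0, 20.0, 25.0, 30.0, 35.0])
case_rate = np.array([1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1])
t, low, high = fit_stump(poverty_rate, case_rate)
print(f"if poverty_rate <= {t:.1f}: predict {low:.2f} else: predict {high:.2f}")
```

On this toy data the stump places the threshold at 16.0, halfway between 12 and 20, and the fitted model can be read directly as a single if/else rule. That readability is what a single tree offers and what is lost once hundreds of trees are averaged.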

One of the greatest challenges that the application of machine learning faces in the social sciences is its reliance on large datasets. Machine learning algorithms are statistical learners and require a sufficient set of examples from the system being studied to model the patterns within it. What counts as "sufficient" depends entirely on the complexity of the problem at hand. Two factors must be taken into consideration here:

-

An increase in the number of exogenous variables requires an increasingly large data sample.

-

The complexity of the relation between the endogenous and exogenous variables determines how large a dataset is necessary to perform a reliable regression of the data.
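A rough way to see why more exogenous variables demand more data is to count the cells of a grid that bins every variable: with $k$ bins per variable and $d$ variables there are $k^d$ cells. The sketch below uses five bins and ten samples per cell, both arbitrary assumptions chosen only for illustration; this is a heuristic, not a formal sample-size rule, and models such as trees do not literally need every cell filled.

```python
def grid_requirements(num_variables, bins_per_variable=5, samples_per_cell=10):
    """Cells in a grid that bins every exogenous variable into equal bins,
    and the sample size needed for `samples_per_cell` observations per cell."""
    cells = bins_per_variable ** num_variables
    return cells, cells * samples_per_cell

for d in (2, 5, 10):
    cells, needed = grid_requirements(d)
    print(f"{d:>2} variables: {cells:>9,} cells, {needed:>11,} samples")
```

With five variables the grid already has 3,125 cells, more than the number of counties in the United States (a little over 3,000), so a county-level analysis cannot populate it even once.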

Most machine learning algorithms (other than linear regression) do not typically suffer from multicollinearity in the data, since the parameters of the models they build carry no direct quantitative or qualitative meaning in terms of the relation between the exogenous and endogenous variables. However, multicollinearity can become a hurdle when explaining these models post hoc, as we discuss in some detail below. Whether one should examine multicollinearity in an analysis also depends on whether one wants to build a predictive algorithm or to understand how the exogenous variables determine the endogenous one(s). In the former case, multicollinearity can mostly be ignored, since the internal details of the model do not matter. In the latter case, it has to be dealt with individually for each analysis, and no general prescription can be made.

Interpretability of Multivariate Methods

The greatest resistance to using machine learning algorithms for analyses that can directly affect decisions or policies concerning humans stems from the lack of interpretability of machine learning models. These models are built to find patterns in data and often require a degree of mathematical complexity that does not allow a direct interpretation of the outcome in terms of the input variables. To make the results of machine learning models intelligible, explainability is becoming increasingly important from ethical, legal, security, transparency, and robustness standpoints, especially when the models are used to make decisions that can affect human lives [50]. This has fueled the development of interpretable machine learning, or explainable AI [51,52,53].

Interpretability in machine learning has several facets. Sometimes it is necessary to explain why a certain prediction is made. This is more useful for predictive modeling than for descriptive modeling. To explain each prediction, a local explanation of the model is needed, which describes how a particular prediction depends on the input by ranking the input variables by their importance in making that prediction. Several measures of local explanation can be used for machine learning models, the two most popular being LIME and SHAP [54, 55]. The latter is based on Shapley values, which we elaborate on below. Measures that explain the machine learning model as a whole, identifying which input variables are generally more important in shaping the outcome, are called global measures. Among the several popular global measures, the one most deeply rooted in mathematics is the global SHAP measure, which is simply the mean of the absolute values of the local SHAP measures [56]. This connection between the local and global SHAP measures exploits the additivity property of Shapley values, which is one of the four axioms that define them.
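The step from local to global SHAP measures described above is a single aggregation: given a matrix of local SHAP values (one row per explained sample, one column per feature), the global importance of a feature is the mean of the absolute values in its column. The SHAP values and feature names below are hypothetical placeholders, not outputs of any real model.

```python
import numpy as np

def global_shap_importance(local_shap, feature_names):
    """Global importance of each feature: the mean absolute local SHAP value
    over all explained samples (rows = samples, columns = features).
    Returns (feature, importance) pairs sorted from most to least important."""
    importance = np.abs(local_shap).mean(axis=0)
    order = np.argsort(importance)[::-1]
    return [(feature_names[j], float(importance[j])) for j in order]

# Hypothetical local SHAP values for four counties and three features.
phi = np.array([
    [ 0.30, -0.10,  0.05],
    [-0.20,  0.15,  0.00],
    [ 0.25, -0.05,  0.10],
    [-0.25,  0.10, -0.05],
])
ranking = global_shap_importance(phi, ["density", "poverty", "uninsured"])
print(ranking)
```

The absolute value matters: the signed mean of the first column is only 0.025, because positive and negative contributions in different counties cancel, whereas its mean absolute value is 0.25, which correctly ranks `density` as the most influential feature in this toy example.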

In the applications we propose here, the interpretability of machine learning algorithms is more than a desirable feature for making responsible decisions. We will use interpretability to understand the interdependence of socioeconomic metrics and the spread of COVID-19. This is done by calculating what is commonly known as "feature importance" in machine learning parlance. The goal of feature importance is to understand which variables are important overall, or globally, in determining a certain outcome.

To do this, we introduce Shapley values [57, 58]. Formulated by Shapley in the mid-twentieth century, the Shapley value is a measure, in cooperative (coalitional) game theory, of how important a player is in a game of n players whose objective is a preset outcome. A simple way of looking at Shapley values is to imagine what the outcome would have been without a particular player. If the player is then brought into the game, the outcome might change. The Shapley value measures this change in the outcome due to the player, averaged over all possible combinations of the other players. In essence, the Shapley value tells us how important the presence of a variable is in determining a certain outcome, compared with its absence from the multivariate problem being addressed. The process naturally and mathematically lends itself to studying the correlations between different variables, since all possible combinations of variables can be taken out of the game to check the outcome.¹
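For a small number of players, the Shapley value can be computed exactly from its standard definition, $\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n-|S|-1)!}{n!}\,[v(S \cup \{i\}) - v(S)]$, by enumerating every coalition $S$ of the other players. The sketch below does exactly that for a hypothetical toy game; in machine-learning feature attribution, $v(S)$ is typically defined as the model's expected prediction when only the features in $S$ are known, and how that expectation is computed is a modeling choice we do not fix here. The enumeration grows as $2^{n-1}$ coalitions per player, which is why libraries such as SHAP rely on approximations or model-specific algorithms (for example, TreeSHAP for tree ensembles).

```python
from itertools import combinations
from math import factorial

def shapley_values(players, value):
    """Exact Shapley values by enumerating every coalition.

    phi_i = sum over coalitions S of the other players of
            |S|! (n - |S| - 1)! / n! * (value(S + {i}) - value(S)),
    where `value` maps a frozenset of players to the coalition's payoff.
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                S = frozenset(coalition)
                total += weight * (value(S | {i}) - value(S))
        phi[i] = total
    return phi

def toy_value(S):
    """Hypothetical payoff: density and transit only matter together,
    poverty contributes on its own."""
    payoff = 0.0
    if {"density", "transit"} <= S:
        payoff += 1.0
    if "poverty" in S:
        payoff += 0.5
    return payoff

players = ["density", "transit", "poverty"]
phi = shapley_values(players, toy_value)
print({k: round(v, 3) for k, v in phi.items()})
# {'density': 0.5, 'transit': 0.5, 'poverty': 0.5}
```

The interaction payoff of 1.0 is split equally between `density` and `transit`, and the values sum to $v(N) - v(\varnothing) = 1.5$, illustrating how Shapley values distribute jointly produced effects among correlated or interacting variables.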

An Early Insight into Disease Spread in the USA

The early stages of the spread of COVID-19 in the United States have highlighted how socioeconomic disparities lead to the disproportionate prevalence of the disease in certain sections of society. This is a pattern previously observed in the spread of several diseases caused by pathogens like HIV, MERS-CoV, SARS-CoV, Ebola, etc. [60,61,62,63]. Beyond variations in socioeconomic conditions, ethnicity is a contributing factor to differences in health care, and more so for health conditions requiring intensive and long-term care [2, 64,65,66,67]. These disparities may also include racial and ethnic stereotypes within the healthcare system [68]. Taking concrete measures to limit the spread of disease among the most at-risk populations requires a thorough understanding of the causal links between socioeconomic disparities and the spread of disease.

It is known that communities of racial minorities, both black and Hispanic, have been disproportionately affected by COVID-19 [69,70,71,72,73,74,75,76,77] from the very early stages of the pandemic. A study of 1,000 children tested for SARS-CoV-2 showed that minority children had a higher probability of testing positive, with non-Hispanic black children being more than twice as likely and Hispanic children over six times as likely to test positive as non-Hispanic white children [78]. Socioeconomic differences can also appear as challenges in imposing shelter-in-place orders, leading to an inability to effectively mitigate the spread of the disease [79, 80]. A study of the COVID-19 outbreak in Wuhan in the first two months of 2020 focused on the effects of socioeconomic factors on the transmission patterns of the disease [81]. It showed that cities with better socioeconomic resources, which allow for better infrastructure and response, were better able to contain the spread of the disease. An extensive study of how a large set of socioeconomic conditions affect the transmission of COVID-19 can be found in Ref. [82]. This work studied the importance of various socioeconomic metrics, and their correlations, in determining disease spread. In Ref. [3], it was shown that the spread of COVID-19 during the initial stages of the pandemic was correlated with various socioeconomic metrics averaged at the county level. The authors of that work introduced the use of Shapley values within a machine learning framework to model how socioeconomic disparities affect the spread of the disease. However, Shapley values probe only the correlation between the endogenous and exogenous variables and do not provide causal inference.

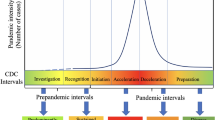

Reference [3] considers different regions of the United States to give a clear understanding of how different socioeconomic metrics affect the spread of COVID-19 in communities with different demographics. On the one hand, looking at differences across the entire nation provides a larger picture that can be important at the national level. On the other hand, some important factors differ significantly between regions, and considering regions separately can bring out characteristics that would otherwise be lost to aggregation. For example, some states have a large average population density, which can drive the spread of infections differently than in states where the population density is low. From Fig. 1, it can be seen that population density is the primary factor driving the spread of COVID-19 in East Coast states, where the prevalence of the disease in dense urban areas has overshadowed its prevalence in rural areas. However, this does not apply to states in the southern United States. Moreover, while high population density states were severely affected by the first wave of the pandemic, southern states were severely affected by the second wave. West Coast states have been affected throughout the pandemic, and the disease was also prevalent in less densely populated areas. It is this variation that needs to be explored by focusing on different clusters of states, in order to identify traits that are more important regionally.

Fig. 1 Distribution of some socioeconomic metrics at the county level, along with the population density distribution. Note: The two bottom panels show the COVID-19 confirmed cases and deaths per 100,000 individuals in each county, respectively, up to 15 August 2020. The lower and upper bounds are set by the 10th and 90th percentiles of the distributions, respectively. Source: Plots made with the Highcharts Maps JavaScript library from Highcharts.com with a CC BY-NC 3.0 license.

The regions are subdivided so that each region has certain similarities in socioeconomic distribution. The northeastern states have several urban areas in close proximity. In the southern states, however, urban areas are spread apart, and a significant portion of the population lives in rural areas where socioeconomic conditions differ from those in urban areas. The states on the West Coast have a mixture of urban and rural areas with very different socioeconomic structures. The three regions used in this study are:

-

High population density regions: States with population density over 400 individuals per sq. km: District of Columbia, New Jersey, Rhode Island, Massachusetts, Connecticut, Maryland, Delaware, and New York

-

The southern states: States in the South, with the exception of Delaware and the District of Columbia, which are already included in the previous category. The states included in this category are Alabama, Arkansas, Florida, Georgia, Kentucky, Louisiana, Mississippi, North Carolina, Oklahoma, South Carolina, Tennessee, Texas, Virginia, and West Virginia.

-

The West Coast: The three states along the West Coast, California, Oregon, and Washington, are included in this category.

The data used in this work is collected from three primary sources:

-

The 2018 American Community Survey 5-year supplemental update to the 2011 Census, found in the US Census Bureau database, for constructing the socioeconomic metrics.

-

The population density data that reflects the 2019 estimates of the US Census Bureau.

-

Data on COVID-19 prevalence and death rates are obtained from the Johns Hopkins University Center for Systems Science and Engineering database through its GitHub repository [10].

The metrics used in this analysis can be motivated as follows. Population density and intra-population mobility are two factors considered a priori to be important for the spread of COVID-19. Mobility has to be studied indirectly, as the focus is on populations rather than individuals, and the age of a population can serve as an aggregate proxy for its mobility: on average, populations with a lower median age are more mobile than those with a much higher median age. Given that COVID-19 tends to affect older people preferentially, the fraction of the population that falls into the senior category (65 years and older) quantifies both mobility and the degree to which age plays a role in determining the spread of COVID-19.

To understand the relevance of economic conditions to the spread of COVID-19, we focus on metrics that quantify income, poverty, the fraction of the population that is employed, and the unemployment rate. The latter two are not perfectly correlated, as the percentage of the population that is employable varies from county to county. To further explore the impact of mobility, the average commute time in each county and the percentage of the population that uses public transportation (transit) are used. Certain occupations are also known to put individuals at greater risk because they are exposed to a larger number of people. These include jobs in service industries, construction, supply, labor, etc. Therefore, a metric is used to quantify the proportion of the population working in these industries. Finally, the proportion of the population in each county without health insurance is included to quantify how insurance coverage affects the prevalence of disease. The socioeconomic metrics used for each county in this work are as follows:

-

Population density: 2019 population density estimates

-

Non-white: fraction of non-white population in any county including Hispanics and Latinos

-

Income: income per capita as defined by the US Census Bureau

-

Poverty: fraction of the population deemed as being below the poverty line

-

Unemployment: unemployment rate as defined by the US Census Bureau

-

Uninsured: fraction of the population that does not have health insurance

-

Employed: fraction of the population that is employed

-

Labor: fraction of the population working in construction, service, delivery, or production

-

Transit: fraction of the population that commutes by public transportation or carpool, excluding those who drive or work from home

-

Mean commute: mean commute time, in minutes, for a person living in the county

-

Senior citizen: fraction of the population aged 65 years or older

The total numbers of confirmed cases and deaths up to 15 August 2020 are used as the COVID-19 prevalence data, and the following rates are defined:

-

Confirmed case rate: The total number of confirmed cases per 100,000 individuals in any county.

-

Death rate: The total number of deaths per 100,000 individuals in any county.

-

Fatality rate: The total number of deaths divided by the total number of confirmed cases in a county. This is the naive fatality rate and is not adjusted for the delay between case confirmation and death. It represents the fraction of confirmed cases that died after being infected with COVID-19, and it appears to be mostly homogeneous across counties and largely uncorrelated with the socioeconomic metrics and population density.
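The three rate definitions above amount to simple per-county arithmetic. The following Python sketch computes them for a single county; the class name `CountyCounts` and the county figures are hypothetical and serve only to illustrate the formulas.

```python
# Per-county COVID-19 rates as defined in the text. Input numbers are hypothetical.
import math
from dataclasses import dataclass


@dataclass
class CountyCounts:
    population: int
    confirmed_cases: int  # cumulative, e.g. up to 15 August 2020
    deaths: int           # cumulative over the same period


def confirmed_case_rate(c: CountyCounts) -> float:
    """Confirmed cases per 100,000 residents."""
    return c.confirmed_cases * 100_000 / c.population


def death_rate(c: CountyCounts) -> float:
    """Deaths per 100,000 residents."""
    return c.deaths * 100_000 / c.population


def naive_fatality_rate(c: CountyCounts) -> float:
    """Deaths divided by confirmed cases, with no adjustment for the delay
    between case confirmation and death; NaN if there are no cases."""
    return c.deaths / c.confirmed_cases if c.confirmed_cases else math.nan


county = CountyCounts(population=50_000, confirmed_cases=1_200, deaths=30)
print(confirmed_case_rate(county))  # 2400.0
print(death_rate(county))           # 60.0
print(naive_fatality_rate(county))  # 0.025
```

The naive fatality rate equals the ratio of the two per-capita rates (60 / 2400 = 0.025), so the population size cancels out of it.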

The primary conclusions from the study are:

-

The spread of COVID-19 can be understood as an ‘urban phenomenon’ when the population density is very high. In this regard, large dense cities require special attention for the mitigation of the spread.

-

In areas of lower population density, such as suburban and rural areas, the spread of the disease is not governed by population density.

-

Regions with a larger fraction of racial and ethnic minorities were affected the most. This is evident from the data and is a conclusion supported by several other studies. It is particularly true for the southern states and less relevant for the states on the West Coast. In particular, this study shows that these disparities manifest not only at the individual level (as seen from clinical data) but also at the regional level, where aggregation over counties does not average out the effect. This points to the need for large-scale intervention at the regional level to stop a differential spread of the disease.

-

While, at an individual level, COVID-19 preferentially affects the elderly, the spread of the disease depends on human mobility within a region. This leads to an anti-correlation between the age of a population in a county and how easily the disease spreads, as seen in both the southern states and the West Coast states. In other words, while the elderly are most affected by COVID-19, the disease spreads more easily in regions where the population is relatively younger.

-

Fatality rates are mostly uncorrelated with socioeconomic metrics and population density. This suggests that the risk of death among those infected with COVID-19 does not depend strongly on socioeconomic class.

-

The spreading characteristics of COVID-19 do not show representative trends at the national level: the analysis including all the states considered in this work fails to highlight the different drivers in different regions. Hence, studies must be undertaken at regional levels.

-

The results of the work should be taken as descriptive or, at best, prescriptive and by no means predictive about the spread of COVID-19 although some models do show quite high accuracy that can fall in the predictive regime.

It should be noted that certain subtleties in the data were not fully addressed in Ref. [3]. Data on confirmed cases and mortality were collected in the early stages of the pandemic, when confirmed case rates were primarily affected by inadequate testing. Ref. [3] implicitly assumed that there were no testing biases correlated with the socioeconomic indicators considered. People at higher risk of severe infection and those with more pronounced symptoms may have been prioritized for testing, and such risk is known to be correlated with age. These conditions may introduce some bias into the analysis. However, mortality is less susceptible to implicit bias from such correlations, because deaths are better captured and are less affected by variables such as testing strategy.

Understanding Causation

When trying to build a path to coherent policy decisions based on data, a causal analysis is indispensable and just a simple analysis of correlations is often insufficient. We get only a picture of the correlations and not of causation when using Shapley values to understand how a socioeconomic metric affects the spread of a disease. Hence, a mathematical structure that is better developed for causal analysis is required.

The framework of causal Shapley values advocated by Heskes et al. [83] accounts for indirect effects in order to take the causal structure of the data into account when estimating Shapley values. Rather than requiring a fully specified causal graph from which the interventional probabilities needed to account for indirect effects can be computed, which is often impractical, the approach uses a causal chain graph. A directed acyclic graph, as shown in Fig. 2, represents the (partial) causal ordering in the data as a causal chain graph. The causal ordering depends on a hypothesis of how the socioeconomic metrics form subgroups that are causally connected. Within a subgroup, no causal ordering among the metrics is assumed; they can be cyclically connected or share confounding variables. There are no fixed prescriptions for constructing these partial causal orderings. Therefore, domain knowledge has to be used to hypothesize how the metrics affect each other in order to build causal orderings. We show three causal orderings in Fig. 2.
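The difference between conventional and causal Shapley values can be seen in a two-variable toy example. In the Python sketch below we assume, purely for illustration, a linear structural equation X2 = A·X1 + noise (zero-mean variables, hypothetical coefficient A = 0.8) and a model whose prediction depends only on X2. Marginal Shapley values, which treat features as independent, give X1 no credit; causal Shapley values, which evaluate each coalition with the interventional expectation E[f(X) | do(X_S = x_S)], credit X1 for its indirect effect through X2. This is a simplified instance with a fully known causal model, not the chain-graph estimation procedure of Heskes et al. [83].

```python
# Toy comparison of marginal vs causal (interventional) Shapley values.
# Assumed (hypothetical) structural model: X1 zero-mean, X2 = A * X1 + noise,
# with zero-mean noise independent of X1. The model being explained is f(x) = x2.
from itertools import permutations
from typing import Callable, Dict, FrozenSet, Sequence

A = 0.8  # hypothetical causal effect of X1 on X2


def shapley(players: Sequence[str],
            v: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Average marginal contribution of each player over all orderings."""
    orders = list(permutations(players))
    phi = {p: 0.0 for p in players}
    for order in orders:
        s: FrozenSet[str] = frozenset()
        for p in order:
            phi[p] += (v(s | {p}) - v(s)) / len(orders)
            s = s | {p}
    return phi


def marginal_value(x1: float, x2: float) -> Callable[[FrozenSet[str]], float]:
    # Features outside S are drawn from their marginals, so the expected
    # prediction is x2 if X2 is in S and E[X2] = 0 otherwise; X1 never matters.
    return lambda s: x2 if "X2" in s else 0.0


def causal_value(x1: float, x2: float) -> Callable[[FrozenSet[str]], float]:
    # E[f(X) | do(X_S = x_S)]: fixing X2 gives x2 directly; fixing only X1
    # propagates through the structural equation, E[X2 | do(X1 = x1)] = A * x1.
    def v(s: FrozenSet[str]) -> float:
        if "X2" in s:
            return x2
        if "X1" in s:
            return A * x1
        return 0.0
    return v


x1, x2 = 1.0, 1.5
print(shapley(["X1", "X2"], marginal_value(x1, x2)))  # X1: 0.0, X2: 1.5
print(shapley(["X1", "X2"], causal_value(x1, x2)))    # X1: ~0.4, X2: ~1.1
```

In both cases the values sum to f(x) - E[f(X)] = 1.5; the causal version moves part of the credit (A·x1/2 = 0.4) to the upstream variable, which is the kind of indirect effect that a correlation-based Shapley analysis misses.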

CO#1: The rationale for the first causal ordering is as follows.

-

Areas with a higher fraction of non-white population tend to have higher unemployment rates and a lower fraction of employed workers [84].

-

Areas with higher unemployment rates may have higher fraction of senior citizens if there is low economic mobility and younger generations move out of the area [85].

-

Areas with higher unemployment and lower total employment tend to have more people working in construction, service, delivery, or production, or are rust belt areas that have a declining manufacturing industry [86, 87].

-

Areas with higher unemployment tend to be poorer with the households having lower incomes [88].

-

Areas with more senior citizens, who are on Medicare, or with more individuals with higher incomes, who receive insurance from their employers, tend to have a smaller fraction of uninsured individuals in the population [89, 90].

-

Counties with higher average incomes tend to have more income inequality and a higher Gini index [91, 92].

-

Areas with a higher percent of people working in construction, service, delivery, or production are likely to have more poverty [93].

-

Counties with higher poverty levels are likely to have fewer single-family homes and more areas zoned for multi-family homes, or are likely to contain inner cities, and thus have a greater population density [14, 15]. Such dense areas are likely to have shorter commutes and better transit [16, 17].
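The rationale for CO#1 can be encoded as a directed graph and checked for acyclicity. The Python sketch below is our own reading of the bullet points above, with one edge per stated relationship and a hypothetical `gini_index` node taken from the income bullet; the grouping of metrics into chain components in Fig. 2 may differ. It uses the standard-library `graphlib` module (Python 3.9+) to list the metrics in topological generations, each of which depends only on earlier ones.

```python
# Hypothetical encoding of the CO#1 rationale as a DAG of cause -> effect edges.
# graphlib.TopologicalSorter expects a mapping: node -> set of predecessors.
from graphlib import TopologicalSorter

edges = [
    ("non_white", "unemployment"), ("non_white", "employed"),
    ("unemployment", "senior_citizen"),
    ("unemployment", "labor"), ("employed", "labor"),
    ("unemployment", "income"),
    ("senior_citizen", "uninsured"), ("income", "uninsured"),
    ("income", "gini_index"),
    ("labor", "poverty"),
    ("poverty", "population_density"),
    ("population_density", "mean_commute"), ("population_density", "transit"),
]

predecessors: dict[str, set[str]] = {}
for cause, effect in edges:
    predecessors.setdefault(effect, set()).add(cause)

sorter = TopologicalSorter(predecessors)
sorter.prepare()  # raises graphlib.CycleError if the hypothesis contains a cycle

generations = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # nodes whose causes are all placed
    generations.append(ready)
    sorter.done(*ready)

for depth, nodes in enumerate(generations):
    print(depth, nodes)
```

Adding a reverse edge, for example from `transit` back to `non_white`, would make `prepare()` raise `CycleError`, which is a quick way to catch an inconsistent causal hypothesis before it is used.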

CO#2: The second causal ordering, CO#2, has unemployment, employed, income per capita, and fraction working in manufacturing or manual labor as causing the proportion of senior citizens and non-white fraction of the population. This causal ordering takes into account the fact that senior citizens are more likely to stay in areas with a declining manufacturing industry and small economic mobility [85]. A higher fraction of senior citizens results in a lower proportion of non-whites since senior citizens are less likely to be non-white [94].

CO#3: The third causal ordering, CO#3, has the fraction of senior citizens causing the fraction of non-whites. Since senior citizens are less likely to be non-white, a higher fraction of senior citizens results in a lower fraction of non-whites [94]. This causal ordering also has income per capita, the fraction of people in a county working in professions classified as labor, unemployment, and employment on equal footing since manual labor jobs are less temporary and pay lower wages [95].

Using these causal structures the authors of Ref. [96] examine causal relationships between socioeconomic disparities and the spread of COVID-19 in the United States during two different phases of the pandemic. The first phase is from February 2020 to July 2020, and the second phase is from July 2020 to January 2021. These two stages define the early and late stages of the pandemic. They used demographic data collected by the U.S. Census Bureau and the COVID-19 prevalence data from Johns Hopkins University to fit a regression model using an interpretable machine learning framework. By extracting causal Shapley scores from these regression models, inferences can be made about causal relationships between socioeconomic metrics and prevalence of COVID-19 at the county level. The primary conclusions of the analysis are:

-

The effects of socioeconomic disparities on the spread of COVID-19 were more pronounced at the beginning of the pandemic than at later stages.

-

While in the more densely populated parts of the USA, the spread of COVID-19 was driven partially by the population density, socioeconomic metrics like the fraction of non-white population in a county also showed significant causal connection with the spread of the disease. In fact, population density was not causally connected to the spread of the disease in the west coast region.

-

Of particular note is the relationship between the proportion of senior citizens in a county and the prevalence of the disease in the West Coast and southern states. The Shapley scores revealed an inverse relationship between the senior-citizen fraction and confirmed COVID-19 case rates, meaning that counties with younger populations experienced greater spread of the disease. Since the risk of severe COVID-19 outcomes is known to increase drastically with age, the results imply that while the older fraction of the population was differentially affected to a larger extent, the disease was being spread more widely by the younger and more mobile fraction of the population.

While stressing the causal nature of various metrics, this work reaffirms what was discussed in Ref. [3] for the first phase of the pandemic. A major limitation of this work is that the machine learning framework requires sufficient data to model the underlying patterns, which necessitates aggregation across regions. Therefore, this state-level analysis cannot be replicated at county-level granularity. Also, the data are very noisy and fall into the “small data” regime, so the machine learning models are not very accurate and cannot be used for prediction. The results of the analysis depend somewhat on hypotheses about the causal structure of the exogenous variables. These causal structures are well motivated by prior knowledge but contain a degree of subjectivity that influences the conclusions drawn from the results. However, the most important causes were found to vary little across the different causal orderings, so robust conclusions can still be drawn.

Data in Developing Nations

Data-driven methods face special challenges in developing countries. Ironically, these are the nations that would benefit most from data-driven methods, since a well-defined path from observation to policy-making is not usually present and socioeconomic demographics can change rapidly, so that policies have to respond within shorter time frames. In this section we emphasize the importance of maintaining data, and making it accessible, in developing nations so that it can be used to understand the progression of a health crisis and to help structure mitigation policies.

The primary hurdles in developing nations are sourcing the skills necessary for processing and understanding data, and the cost of maintaining the infrastructure needed to make this a continued process. With limited resources available and the necessary skills often missing within the population, analysis of data under national government oversight is almost impossible even if the data are collected coherently. One possible solution is to maintain open data that can be publicly accessed, so as to appeal to a broader set of experts, domestically and globally, who are willing to carry such analyses forward voluntarily.

The open data model started in the developed world, with the United States opening the data.gov portal to make data more accessible to the public. The OpenData Barometer (Note 2) reports indicate that as far back as 2016, 79 of the 115 countries surveyed had a data portal run by a government agency, but not all of them maintained similar quality or quantity of data. In several cases, data were available through sources other than government-run portals. A study of the most comprehensive publicly available databases showed that developed nations were far more comprehensive in making data available than developing nations. In several instances, the datasets existed but were not publicly accessible; an OpenData Barometer estimate showed this to be the case for almost 90% of the data.

According to the Open Data Charter (Note 3), which advocates the maintenance of publicly available data by government agencies and private organizations in order to respond more effectively to socioeconomic and environmental challenges, agencies that wish to maintain open data should follow six principles:

-

Open by default: All data collected by governments should be made open by default.

-

Timely and comprehensive: All data must be kept up-to-date and complete.

-

Accessible and usable: The data must be made publicly accessible, preferably in a machine-readable format, with little overhead and be clearly defined to be of maximal usability.

-

Comparable and interoperable: Data standards and protocols must be adhered to in order to ensure data quality and consistency.

-

For improved governance and citizen engagement: The data should allow for transparency in governance and enable citizens to use data for accountability.

-

For inclusive development and innovation: The data made available should be used to promote inclusion of minorities and encourage studies that reduce socioeconomic disparities. These studies can also be used for entrepreneurial efforts and to drive innovations which contribute to the general upliftment of the society.

As discussed earlier in the chapter, understanding how and why disparities are aggravated during a health crisis, and identifying their existence, requires far more data than disease prevalence data alone. Collecting such data must be among the long-term goals of any government, with special care taken to make the data capable of addressing marginalized groups by providing higher-granularity data disaggregated by sex, income level, age, religion, etc. Such data can not only help in times of crisis but can also be instrumental in understanding how health disparities affect different strata of society [97, 98].

There are clear reasons why the cost of maintaining open data is justifiable for developing nations. The availability of data encourages studies by academic institutions, independent parties, and private organizations, and can lead to insights about pockets of need in society which, in turn, can drive policy-making in local and national governments. For instance, because data were available in the USA, the UK, and several countries in the European Union such as Germany, France, and Belgium, comprehensive studies of the progression of the pandemic could be undertaken, which drove several policy decisions with short response times. Such data-driven policy decisions were not possible in countries like India, where data were not made readily available during the onset of the pandemic. Indeed, a recent estimate of excess mortality [99] shows that the actual mortality rate in India is more than a factor of 8 higher than the reported rate, whereas for most developed nations, with some exceptions, the actual rate is less than a factor of 2 higher than the reported rate. This trend is seen in several developing nations, suggesting that the pandemic was under-monitored and that the data delivered by governments were faulty.

Another clear example of data-driven methods aiding predictions of COVID-19 case rates and hospitalizations lies in the individual-based models (IBMs) created separately for the populations of Australia and the UK [35,36,37,38]. IBMs are agent-based models in which each agent represents an individual, with the number of agents being representative of the population as a whole. Designing these IBMs requires complete knowledge of the demographics and mobility of the population, which was openly available for both countries, prompting academics to put effort into building the IBMs. The predictive power of these IBMs is extremely good, often extending several weeks into the future, and these models allow intervention measures to be modeled, adding to the accuracy of the predictions. The importance of these predictions, and the role of open data in making them possible, cannot be overemphasized.
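To illustrate what "each agent representing an individual" means in practice, the Python sketch below runs a toy agent-based SIR (susceptible, infected, recovered) simulation with uniform random mixing. It is a deliberately minimal stand-in: the Australian and UK IBMs cited above use detailed demographic and mobility data, whereas every parameter here (population size, contacts per day, transmission and recovery probabilities) and the function name `run_toy_ibm` are hypothetical choices made for illustration.

```python
# Toy agent-based SIR model: each agent is one individual with its own state.
# All parameters are hypothetical; real IBMs add households, workplaces, travel.
import random
from dataclasses import dataclass


@dataclass
class Agent:
    state: str = "S"  # "S" susceptible, "I" infected, "R" recovered


def run_toy_ibm(n_agents: int = 1000, n_seed: int = 5, contacts_per_day: int = 8,
                p_transmit: float = 0.05, p_recover: float = 0.1,
                days: int = 60, seed: int = 0) -> list[dict[str, int]]:
    rng = random.Random(seed)  # fixed seed for reproducibility
    agents = [Agent() for _ in range(n_agents)]
    for agent in rng.sample(agents, n_seed):
        agent.state = "I"

    history = []
    for _ in range(days):
        infected = [a for a in agents if a.state == "I"]
        newly_infected = []
        # Each infected agent meets a random set of others (uniform mixing).
        for _agent in infected:
            for other in rng.sample(agents, contacts_per_day):
                if other.state == "S" and rng.random() < p_transmit:
                    newly_infected.append(other)
        # Update states synchronously, based on the start-of-day states.
        for a in infected:
            if rng.random() < p_recover:
                a.state = "R"
        for a in newly_infected:
            a.state = "I"
        history.append({s: sum(a.state == s for a in agents) for s in "SIR"})
    return history


baseline = run_toy_ibm()
distancing = run_toy_ibm(contacts_per_day=3)  # crude stand-in for an intervention
print(baseline[-1], distancing[-1])
```

Intervention measures enter such a model as changes to agent behavior, here simply fewer daily contacts; the detailed trajectories depend on the random seed.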

Data Ethics

A discussion of data-driven methods is incomplete without a discussion of the ethics surrounding the data being used. This is of particular importance for health data and for decisions that can affect human subjects. Moreover, COVID-19 being a global pandemic, the sheer scale of the decisions and the number of lives that can be affected by the analysis of data are imposing. Several national and private institutions proposed a long list of applications for both real-time and historical data, some of which were used for surveillance of citizens or to decide who could have the privilege of mobility while others were quarantined. Decisions of such gravity require that the entire decision-making process, including the data from which a decision might be derived, be subject to strict ethical standards, with a clear understanding of the source, structure, and implications of the data being used.

Fears of nationwide government-sponsored surveillance were ignited even in the USA and in European nations by proposals for voluntary automated contact tracing [34, 100,101,102]. Even in Germany, where acceptance of the “Corona-Warn” app was one of the highest in Europe, several psychological factors stacked up against its population-wide acceptance [103]. Without wide acceptance, voluntary automated contact tracing was bound to fail [3]. In India, the uptake of the contact tracing app was only about 13%, leading to its complete failure [103]. This shows that clearly defining the ethical bounds of collecting and distributing data, whether centralized or decentralized, is of prime importance if a data-driven digital decision instrument is to be considered for population-wide distribution.

The implementation of data ethics should begin at the design stage of any analysis. Even the choice of data and the design of the analysis can lead to conclusions that are possibly unethical when fairness and equity are taken into consideration. Often the choice of variables is limited by the analysis and the scope of data discovery, leading to biases in the data and an oversimplification of the conclusions that can be drawn from the analysis [48]. The design of a study is further complicated when it concerns minorities in the population. Beyond the potential hurdles of insufficient data and an elevated hesitance to participate in studies among minority populations, the danger of misjudged conclusions adversely affecting an already vulnerable part of the population (or of affecting them in the process of collecting the data) is a risk that should be assessed with great care [104,105,106].

Given this fallibility of data, methods that are primarily data driven inherit the biases that might exist in the data, leading to an inevitable lack of fairness and equity in the decisions made using these methods. All data-driven analyses, however explainable they might be, require intervention to ascertain whether any biases have percolated from the data to the decision. Moreover, as the complexity of the analysis increases, trust in the methods decreases because of a lack of apparent transparency and explainability, due either to their mathematical complexity or to the disconnect between the structure of the model and the variables, as in a neural network. This invariably leads to a lack of trust in decisions made by what is perceived as a ‘black box’. In the recent past, questioning biases in machine learning methods and implementing a path to fairness has become a research subject in a rapidly growing community [107]. Nowhere is this more important than in the domain of public health and healthcare. Hence, policy decisions, guidelines, and mandates should be set to ensure that AI algorithms and models are built with fairness and equity as guiding principles.

Notes

- 1.

More clarity on Shapley values and interpretable machine learning in general, along with their application can be found in Interpretable Machine Learning by Christoph Molnar [59].

- 2.

- 3.

References

Baciu A, Negussie Y, Geller A (2017) Board on population health and public health practice, committee on community-based solutions to promote health equity in the United States. National Communities in Action: Pathways to Health Equity. Academies Press, United States. https://nap.nationalacademies.org/catalog/24624/communities-in-action-pathways-to-health-equity

Smedley BD, Stith AY, Nelson AR (2003) (Eds) Unequal treatment: Confronting racial and ethnic disparities in health care. The National Academies Press, Washington, DC. https://doi.org/10.17226/12875

Paul A, Englert P, Varga M (2021) Socio-economic disparities and COVID-19 in the USA. J Phys Complex. http://iopscience.iop.org/article/https://doi.org/10.1088/2632-072X/ ac0fc7

Kim SJ, Bostwick W (2020) Social vulnerability and racial inequality in COVID-19 deaths in Chicago. Health Educat Behavior 47(4):509–513. https://doi.org/10. 1177/1090198120929677

Webb Hooper M, Nápoles AM, Pérez-Stable EJ (2020) COVID-19 and racial/ethnic disparities. J Am Medical Associat 323(24):2466–2467. https://doi.org/10.1001/jama.2020.8598

Lopez I Leo, Hart I Louis H, Katz MH. Racial and ethnic health disparities related to COVID-19. Journal of the American Medical Association. 2021; 325(8):719–720. https://doi.org/10.1001/jama.2020.26443

Magesh S, John D, Li WT, Li Y, Mattingly-app A, Jain S, Chang EY, Ongkeko WM (2021) Disparities in COVID-19 outcomes by race, ethnicity, and socioeconomic status: A systematic review and meta-analysis. JAMA Network Open 4(11):e2134147. https://doi.org/10.1001/jamanetworkopen.2021.34147

Zhou T (2021) Representative methods of computational socioeconomics. Journal of Physics: Complexity 2(3):031002. https://doi.org/10.1088/2632-072x/ac2072

Gao J, Zhang YC, Zhou T (2019) Computational socioeconomics. Physics Reports 817:1–104. https://www.sciencedirect.com/science/article/pii/S0370157319301954

Dong E, Du H, Gardner L (2020) An interactive web-based dashboard to track COVID-19 in real time. The Lancet Infectious Diseases 20(5):533–534. https://www.thelancet.com/journals/laninf/article/PIIS1473-3099(20)30120-1/fulltext

Popkin BM, Du S, Green WD, Beck MA, Algaith T, Herbst CH, Alsukait RF, Alluhidan M, Alazemi N, Shekar M (2020) Individuals with obesity and COVID-19: A global perspective on the epidemiology and biological relationships. Obesity Reviews 21(11):e13128. https://doi.org/10.1111/obr.13128

Gao M, Piernas C, Astbury NM, Hippisley-Cox J, O'Rahilly S, Aveyard P, Jebb SA (2021) Associations between body-mass index and COVID-19 severity in 6.9 million people in England: A prospective, community-based, cohort study. The Lancet Diabetes and Endocrinology 9(6):350–359. https://doi.org/10.1016/S2213-8587(21)00089-9

Khanolkar AR, Patalay P (2021) Socioeconomic inequalities in co-morbidity of overweight, obesity and mental ill-health from adolescence to mid-adulthood in two national birth cohort studies. The Lancet Regional Health, Europe 6:100106. https://www.sciencedirect.com/science/article/pii/S2666776221000831

Rothwell JT, Massey DS (2010) Density zoning and class segregation in U.S. metropolitan areas. Social Science Quarterly 91(5):1123–1143. https://doi.org/10.1111/j.1540-6237.2010.00724.x

Kasarda JD (1993) Inner-city concentrated poverty and neighborhood distress: 1970 to 1990. Housing Policy Debate 4(3):253–302. https://doi.org/10.1080/10511482.1993.9521135

Bertaud A, Richardson HW (2004) Transit and density: Atlanta, the United States and Western Europe. In: Urban sprawl in Western Europe and the United States. Urban planning and environment. Ashgate. https://courses.washington.edu/gmforum/Readings/Bertaud.pdf

Levinson DM, Kumar A (1997) Density and the journey to work. Growth and Change 28(2):147–172. https://doi.org/10.1111/j.1468-2257.1997.tb00768.x

Cerami C, Popkin-Hall ZR, Rapp T, Tompkins K, Zhang H, Muller MS, et al (2021) Household transmission of severe acute respiratory syndrome coronavirus 2 in the United States: Living density, viral load, and disproportionate impact on communities of color. Clinical Infectious Diseases. https://doi.org/10.1093/cid/ciab701

Bi Q, Lessler J, Eckerle I, Lauer SA, Kaiser L, Vuilleumier N, Cummings D, Flahault A, Petrovic D, Guessous I, Stringhini S, Azman A, SEROCoV-POP Study Group (2021) Insights into household transmission of SARS-CoV-2 from a population-based serological survey. Nature Communications 12(1):3643. https://doi.org/10.1038/s41467-021-23733-5

Medlock KB, Temzelides T, Hung SYE (2021) COVID-19 and the value of safe transport in the United States. Scientific Reports 11(1):21707. https://doi.org/10.1038/s41598-021-01202-9

Shiels MS, Haque AT, Haozous EA (2021) Racial and ethnic disparities in excess deaths during the COVID-19 pandemic, March to December 2020. Annals of Internal Medicine. https://doi.org/10.7326/M21-2134

Matthews KA, Gaglioti AH, Holt JB, McGuire LC, Greenlund KJ (2017) County-level concentration of selected chronic conditions among medicare fee-for-service beneficiaries and its association with medicare spending in the United States, 2017. Population Health Management. https://doi.org/10.1089/pop.2019.0231

Razzaghi H, Wang Y, Lu H, et al (2020) Estimated county-level prevalence of selected underlying medical conditions associated with increased risk for severe COVID-19 illness — United States, 2018. Morbidity and Mortality Weekly Report 69(33):945–950. https://www.cdc.gov/mmwr/volumes/69/wr/mm6933e1.htm

Santarpia JL, Rivera DN, Herrera VL, Morwitzer MJ, Creager HM, Santarpia GW, Crown KK, Brett-Major DM, Schnaubelt ER, Broadhurst MJ, Lawler JV, Reid P, Lowe JJ (2020) Aerosol and surface contamination of SARS-CoV-2 observed in quarantine and isolation care. Scientific Reports 10(1):12732. https://doi.org/10.1038/s41598-020-69286-3

Wang C, Horby PW, Hayden FG, Gao GF (2020) A novel coronavirus outbreak of global health concern. The Lancet 395(10223):470–473. https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(20)30185-9/fulltext

He X, Lau EHY, Wu P, Deng X, Wang J, Hao X, et al (2020) Temporal dynamics in viral shedding and transmissibility of COVID-19. Nature Medicine 26(5):672–675. https://www.nature.com/articles/s41591-020-0869-5

Corman VM, Albarrak AM, Omrani AS, Albarrak MM, Farah ME, Almasri M, et al (2015) Viral shedding and antibody response in 37 patients with Middle East respiratory syndrome coronavirus infection. Clinical Infectious Diseases 62(4):477–483. https://doi.org/10.1093/cid/civ951

Chowell G, Abdirizak F, Lee S, Lee J, Jung E, Nishiura H, Viboud C (2015) Transmission characteristics of MERS and SARS in the healthcare setting: A comparative study. BMC Medicine 13(1):210. https://doi.org/10.1186/s12916-015-0450-0

Fraser C, Riley S, Anderson RM, Ferguson NM (2004) Factors that make an infectious disease outbreak controllable. Proceedings of the National Academy of Sciences 101(16):6146–6151. https://www.pnas.org/content/101/16/6146

Rewar S, Mirdha D (2014) Transmission of Ebola virus disease: An overview. Annals of Global Health 80(6):444–451. http://www.sciencedirect.com/science/article/pii/S2214999615000107

van Doremalen N, Bushmaker T, Morris DH, Holbrook MG, Gamble A, Williamson BN, Tamin A, Harcourt JL, Gerber SI, Lloyd-Smith JO, de Wit E, Munster VJ (2020) Aerosol and surface stability of SARS-CoV-2 as compared with SARS-CoV-1. New England Journal of Medicine 382(16):1564–1567. https://doi.org/10.1056/NEJMc2004973

Guo ZD, Wang ZY, Zhang SF, Li X, Li L, Li C, et al (2020) Aerosol and surface distribution of severe acute respiratory syndrome coronavirus 2 in hospital wards, Wuhan, China, 2020. Emerging Infectious Diseases 26(7). https://wwwnc.cdc.gov/eid/article/26/7/20-0885_article

Kim H, Paul A (2021) Automated contact tracing: a game of big numbers in the time of COVID-19. Journal of The Royal Society Interface 18(175):20200954. https://doi.org/10.1098/rsif.2020.0954

Ferretti L, Wymant C, Kendall M, Zhao L, Nurtay A, Abeler-Dörner L, Parker M, Bonsall D, Fraser C (2020) Quantifying SARS-CoV-2 transmission suggests epidemic control with digital contact tracing. Science. https://science.sciencemag.org/content/early/2020/04/09/science.abb6936

Chang SL, Harding N, Zachreson C, Cliff OM, Prokopenko M (2020) Modelling transmission and control of the COVID-19 pandemic in Australia. Nature Communications 11(1):5710. https://doi.org/10.1038/s41467-020-19393-6

Aylett-Bullock J, Cuesta-Lazaro C, Quera-Bofarull A, Icaza-Lizaola M, Sedgewick A, Truong H, Curran A, Elliott E, Caulfield T, Fong K, Vernon I, Williams J, Bower R, Krauss F (2021) June: Open-source individual-based epidemiology simulation. Royal Society Open Science 8(7):210506. https://doi.org/10.1098/rsos.210506

Son WS, RISEWIDs Team (2020) Individual-based simulation model for COVID-19 transmission in Daegu, Korea. Epidemiology and Health 42:e2020042. http://www.e-epih.org/journal/view.php?number=1109

Giacopelli G (2021) A full-scale agent-based model to hypothetically explore the impact of lockdown, social distancing, and vaccination during the COVID-19 pandemic in Lombardy, Italy: Model development. JMIRx Med 2(3):e24630. https://med.jmirx.org/2021/3/e24630

Ilin C, Annan-Phan S, Tai XH, Mehra S, Hsiang S, Blumenstock JE (2021) Public mobility data enables COVID-19 forecasting and management at local and global scales. Scientific Reports 11(1):13531. https://doi.org/10.1038/s41598-021-92892-8

Gottumukkala R, Katragadda S, Bhupatiraju RT, Kamal AM, Raghavan V, Chu H, Kolluru R, Ashkar Z (2021) Exploring the relationship between mobility and COVID-19 infection rates for the second peak in the United States using phase-wise association. BMC Public Health 21(1):1669. https://doi.org/10.1186/s12889-021-11657-0

Chang S, Pierson E, Koh PW, Gerardin J, Redbird B, Grusky D, Leskovec J (2021) Mobility network models of COVID-19 explain inequities and inform reopening. Nature 589(7840):82–87. https://doi.org/10.1038/s41586-020-2923-3

Quinlan JR (1987) Simplifying decision trees. International Journal of Man-Machine Studies 27(3):221–234. https://www.sciencedirect.com/science/article/pii/S0020737387800536

Hastie T, Tibshirani R, Friedman J (2009) Boosting and additive trees. In: The elements of statistical learning. Springer, New York, pp 337–387. https://doi.org/10.1007/978-0-387-84858-7_10

Chen T, Guestrin C (2016) XGBoost: A scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '16). Association for Computing Machinery, New York, pp 785–794. https://doi.org/10.1145/2939672.2939785

Breiman L (2001) Random forests. Machine Learning 45(1):5–32. https://doi.org/10.1023/A:1010933404324

Fawagreh K, Gaber MM, Elyan E (2014) Random forests: From early developments to recent advancements. Systems Science and Control Engineering 2(1):602–609. https://doi.org/10.1080/21642583.2014.956265

Grimmer J, Roberts ME, Stewart BM (2021) Machine learning for social science: An agnostic approach. Annual Review of Political Science 24(1):395–419. https://doi.org/10.1146/annurev-polisci-053119-015921

Adler N, Bush NR, Pantell MS (2012) Rigor, vigor, and the study of health disparities. Proceedings of the National Academy of Sciences 109(Suppl 2):17154–17159. https://www.pnas.org/content/109/Supplement_2/17154

Chew AWZ, Pan Y, Wang Y, Zhang L (2021) Hybrid deep learning of social media big data for predicting the evolution of COVID-19 transmission. Knowledge-Based Systems 233:107417. https://www.sciencedirect.com/science/article/pii/S0950705121006791

Hamon R, Junklewitz H, Sanchez I (2020) Robustness and explainability of Artificial Intelligence. Publications Office of the European Union. https://publications.jrc.ec.europa.eu/repository/handle/JRC119336

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence 1(5):206–215. https://doi.org/10.1038/s42256-019-0048-x

Murdoch WJ, Singh C, Kumbier K, Abbasi-Asl R, Yu B (2019) Definitions, methods, and applications in interpretable machine learning. Proceedings of the National Academy of Sciences 116(44):22071–22080. https://www.pnas.org/content/116/44/22071

Barredo Arrieta A, Díaz-Rodríguez N, Del Ser J, Bennetot A, Tabik S, Barbado A, Garcia S, Gil-Lopez S, Molina D, Benjamins R, Chatila R, Herrera F (2020) Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion 58:82–115. https://www.sciencedirect.com/science/article/pii/S1566253519308103

Ribeiro MT, Singh S, Guestrin C (2016) "Why should I trust you?": Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '16). Association for Computing Machinery, New York, pp 1135–1144. https://doi.org/10.1145/2939672.2939778

Lundberg SM, Lee SI (2017) A unified approach to interpreting model predictions. In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, et al (eds) Advances in neural information processing systems. Curran Associates, Inc., pp 4765–4774. https://proceedings.neurips.cc/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf

Lundberg SM, Erion G, Chen H, DeGrave A, Prutkin JM, Nair B, Katz R, Himmelfarb J, Bansal N, Lee SI (2020) From local explanations to global understanding with explainable AI for trees. Nature Machine Intelligence 2(1):56–67. https://www.nature.com/articles/s42256-019-0138-9

Shapley LS (1951) Notes on the n-person game-II: The value of an n-person game. Rand Corporation. https://www.rand.org/pubs/research_memoranda/RM0670.html

Shapley LS (1953) A value for n-person games. In: Kuhn HW, Tucker AW (eds) Contributions to the theory of games. Princeton University Press, Princeton. https://www.scirp.org/(S(351jmbntvnsjt1aadkozje))/reference/referencespapers.aspx?referenceid=2126587

Molnar C (2020) Interpretable machine learning. Lulu. https://christophm.github.io/interpretable-ml-book/

Ransome Y, Kawachi I, Braunstein S, Nash D (2016) Structural inequalities drive late HIV diagnosis: The role of black racial concentration, income inequality, socioeconomic deprivation, and HIV testing. Health and Place 42:148–158. https://pubmed.ncbi.nlm.nih.gov/27770671

Farmer P (1996) Social inequalities and emerging infectious diseases. Emerging Infectious Diseases 2(4):259–269. https://pubmed.ncbi.nlm.nih.gov/8969243

Hosseini P, Sokolow SH, Vandegrift KJ, Kilpatrick AM, Daszak P (2010) Predictive power of air travel and socio-economic data for early pandemic spread. PLOS ONE 5(9):1–8. https://doi.org/10.1371/journal.pone.0012763

Quinn SC, Kumar S (2014) Health inequalities and infectious disease epidemics: A challenge for global health security. Biosecurity and Bioterrorism: Biodefense Strategy, Practice, and Science 12(5):263–273. https://pubmed.ncbi.nlm.nih.gov/25254915

Council on Ethical and Judicial Affairs, American Medical Association (1990) Black-white disparities in health care. JAMA 263(17):2344–2346. https://doi.org/10.1001/jama.1990.03440170066038