Abstract

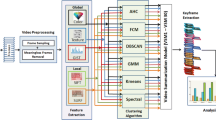

In this paper, we present TAC-SUM, a novel and efficient training-free approach to video summarization that addresses the limitations of existing cluster-based models by incorporating temporal context. Our method uses clustering information to partition the input video into temporally consecutive segments, which injects temporal awareness into the clustering process and sets it apart from prior cluster-based summarization methods. The resulting temporal-aware clusters are then used to compute the final summary through simple rules for keyframe selection and frame importance scoring. Experimental results on the SumMe dataset demonstrate the effectiveness of the proposed approach: it outperforms existing unsupervised methods and achieves performance comparable to state-of-the-art supervised summarization techniques. Our source code is available at https://github.com/hcmus-thesis-gulu/TAC-SUM.
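To make the general idea of "temporal-aware clusters" more concrete, the sketch below shows one simple way to turn per-frame cluster labels into temporally consecutive segments and then apply rule-based keyframe selection and frame scoring. It is a minimal illustration under stated assumptions, not the TAC-SUM algorithm: it assumes the per-frame labels already come from some clustering of frame features, and the `min_len` merge threshold, the middle-frame keyframe rule, and the linear score decay are hypothetical choices made only for this example. The authors' actual implementation is in the repository linked above.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Segment:
    start: int  # first frame index (inclusive)
    end: int    # last frame index (exclusive)
    label: int  # cluster label that defines the segment

    @property
    def length(self) -> int:
        return self.end - self.start


def temporal_segments(labels: Sequence[int]) -> List[Segment]:
    """Split per-frame cluster labels into maximal runs of identical consecutive labels."""
    segments: List[Segment] = []
    start = 0
    for i in range(1, len(labels) + 1):
        # Close the current run when the label changes or the video ends.
        if i == len(labels) or labels[i] != labels[start]:
            segments.append(Segment(start, i, labels[start]))
            start = i
    return segments


def merge_short_segments(segments: List[Segment], min_len: int) -> List[Segment]:
    """Hypothetical smoothing rule: absorb runs shorter than min_len into the
    preceding segment, and fuse neighbours that end up sharing a label."""
    merged: List[Segment] = []
    for seg in segments:
        if merged and (seg.length < min_len or seg.label == merged[-1].label):
            merged[-1].end = seg.end  # extend the previous segment over this one
        else:
            merged.append(Segment(seg.start, seg.end, seg.label))  # copy, do not mutate input
    return merged


def keyframes_and_scores(segments: List[Segment], n_frames: int) -> Tuple[List[int], List[float]]:
    """Hypothetical rules: the middle frame of each segment is its keyframe, and a
    frame's importance decays linearly with its distance to that keyframe."""
    keyframes: List[int] = []
    scores = [0.0] * n_frames
    for seg in segments:
        key = seg.start + seg.length // 2
        keyframes.append(key)
        for i in range(seg.start, seg.end):
            scores[i] = 1.0 - abs(i - key) / seg.length
    return keyframes, scores


if __name__ == "__main__":
    # Toy labels, e.g. from clustering ten frame embeddings into three clusters.
    labels = [0, 0, 0, 1, 0, 0, 2, 2, 2, 2]
    segs = merge_short_segments(temporal_segments(labels), min_len=2)
    keyframes, scores = keyframes_and_scores(segs, len(labels))
    print([(s.start, s.end, s.label) for s in segs])  # [(0, 6, 0), (6, 10, 2)]
    print(keyframes)  # [3, 8]
```

In the toy run, the single frame labelled `1` is too short to stand alone, so it is absorbed into the surrounding cluster-`0` run. This is the kind of behaviour that distinguishes temporally contiguous segments from plain, order-agnostic clusters, in which frames 0 to 5 would simply be split between two unordered groups.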

H.-D. Huynh-Lam and N.-P. Ho-Thi contributed equally to this research.

Acknowledgement

This research is supported by research funding from the Faculty of Information Technology, University of Science, Vietnam National University - Ho Chi Minh City.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Huynh-Lam, HD., Ho-Thi, NP., Tran, MT., Le, TN. (2024). Cluster-Based Video Summarization with Temporal Context Awareness. In: Yan, W.Q., Nguyen, M., Nand, P., Li, X. (eds) Image and Video Technology. PSIVT 2023. Lecture Notes in Computer Science, vol 14403. Springer, Singapore. https://doi.org/10.1007/978-981-97-0376-0_2

DOI: https://doi.org/10.1007/978-981-97-0376-0_2

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-0375-3

Online ISBN: 978-981-97-0376-0

eBook Packages: Computer Science, Computer Science (R0)