Abstract

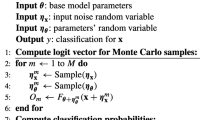

We investigate the robustness of binary stochastic artificial neural networks (SANNs) to adversarial attacks, performing experiments on three well-known datasets. Our experiments show that, in the white-box setting, SANNs are about as susceptible as conventional ANNs to simple iterative gradient-based attacks. In the black-box setting, however, SANNs are more robust than conventional ANNs against boundary attacks and surrogate-based attacks. We therefore propose improved attacks against SANNs. First, we show that stochastic networks are more effective surrogates than deterministic ones in surrogate-based black-box attacks. Second, to further boost adversarial success rates, we propose Variance Mimicking (VM), a novel surrogate training method, and validate its improved performance.
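The white-box finding above concerns simple iterative gradient-based attacks. As a rough illustration of what such an attack involves against a binary stochastic network, the sketch below builds a tiny two-layer network whose hidden units fire with probability sigmoid(w·x + b). It estimates input gradients with a straight-through-style estimator, which treats each sampled unit as its firing probability during backpropagation, and runs iterative FGSM with each step's gradient averaged over several stochastic forward passes. The class `TinyBinaryStochasticNet`, the function `iterative_fgsm`, all weights and all hyperparameters are hypothetical. They are not the authors' models, gradient estimator or attack settings. VM surrogate training is not sketched because its details are not given here.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyBinaryStochasticNet:
    """Hypothetical 2-layer net: input -> binary stochastic hidden units -> one logit.

    Hidden unit j fires (h_j = 1) with probability sigmoid(w_j . x + b_j).
    All weights are illustrative placeholders, not values from the paper.
    """

    def __init__(self, w_hidden, b_hidden, w_out, b_out, rng):
        self.w_hidden = w_hidden  # one weight vector per hidden unit
        self.b_hidden = b_hidden
        self.w_out = w_out
        self.b_out = b_out
        self.rng = rng  # source of the sampling noise

    def forward(self, x):
        """One stochastic pass; returns the logit and the firing probabilities."""
        pre = [sum(wi * xi for wi, xi in zip(w, x)) + b
               for w, b in zip(self.w_hidden, self.b_hidden)]
        probs = [sigmoid(p) for p in pre]
        # Sample binary activations: this is where the network is stochastic.
        h = [1.0 if self.rng.random() < p else 0.0 for p in probs]
        logit = sum(wo * hj for wo, hj in zip(self.w_out, h)) + self.b_out
        return logit, probs

    def input_grad(self, x, y):
        """Estimated gradient of the logistic loss w.r.t. x for one stochastic pass.

        Sampling is not differentiable, so this uses a straight-through-style
        estimator (an assumption): during backpropagation each h_j is treated
        as its firing probability p_j, giving dh_j/dpre_j = p_j * (1 - p_j).
        """
        logit, probs = self.forward(x)
        dlogit = sigmoid(logit) - y  # d(binary cross-entropy)/d(logit), y in {0, 1}
        grad = [0.0] * len(x)
        for w, wo, p in zip(self.w_hidden, self.w_out, probs):
            dpre = dlogit * wo * p * (1.0 - p)
            for i, wi in enumerate(w):
                grad[i] += dpre * wi
        return grad


def iterative_fgsm(model, x, y, eps, step, n_iter, n_samples):
    """Iterative FGSM under an L-infinity budget eps. Each step sums the gradient
    over n_samples stochastic passes (the sign of the sum equals that of the mean)."""
    x_adv = list(x)
    for _ in range(n_iter):
        g = [0.0] * len(x)
        for _ in range(n_samples):
            g = [a + b for a, b in zip(g, model.input_grad(x_adv, y))]
        # Ascend the loss along the sign of the averaged gradient.
        x_adv = [xa + step * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        # Project back into the eps-ball around x and the valid input range [0, 1].
        x_adv = [min(max(xa, xo - eps, 0.0), xo + eps, 1.0)
                 for xa, xo in zip(x_adv, x)]
    return x_adv


# Usage with made-up weights: a two-feature input with true label 1.
model = TinyBinaryStochasticNet(
    w_hidden=[[1.0, 2.0], [0.5, 1.5]],
    b_hidden=[-1.0, -0.5],
    w_out=[2.0, 1.0],
    b_out=-1.5,
    rng=random.Random(0),
)
x = [0.5, 0.5]
x_adv = iterative_fgsm(model, x, y=1, eps=0.1, step=0.04, n_iter=5, n_samples=8)
print(x_adv)
```

In this toy model every weight is positive and the label is 1, so the averaged gradient is negative in each coordinate and both features are pushed down to the edge of the eps-ball (about 0.4). For a real SANN, a single stochastic pass gives a noisy gradient estimate, which is why the sketch averages over several passes; it makes no claim about how well this works at scale.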

Acknowledgements

This research is supported by both ST Engineering Electronics and the National Research Foundation, Singapore, under its Corporate Laboratory @ University Scheme (Programme Title: STEE Infosec-SUTD Corporate Laboratory).

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Tan, Y.X.M., Elovici, Y., Binder, A. (2021). Analysing the Adversarial Landscape of Binary Stochastic Networks. In: Kim, H., Kim, K.J., Park, S. (eds) Information Science and Applications. Lecture Notes in Electrical Engineering, vol 739. Springer, Singapore. https://doi.org/10.1007/978-981-33-6385-4_14

DOI: https://doi.org/10.1007/978-981-33-6385-4_14

Publisher Name: Springer, Singapore

Print ISBN: 978-981-33-6384-7

Online ISBN: 978-981-33-6385-4

eBook Packages: Computer Science, Computer Science (R0)