Abstract

Observing the ocean’s interior is increasingly important because recent evidence suggests widespread warming in the subsurface and deeper ocean in response to the Earth’s Energy Imbalance (EEI) in recent decades. However, observations of the ocean’s interior are sparse, which severely constrains studies of ocean interior dynamics and variability. Detecting and predicting the thermohaline structure of the subsurface and deeper ocean from satellite remote sensing measurements is essential for understanding the 3D environment and processes of the ocean interior. This chapter proposes several novel approaches based on artificial intelligence (AI) to retrieve and predict the subsurface thermohaline structure (upper 2000 m) from multiple satellite observations combined with Argo float data. We construct AI-based deep ocean remote sensing techniques with high spatiotemporal applicability to detect and describe the global subsurface thermohaline structure, so as to support studies of ocean internal processes and anomalies under global warming. The AI-based approaches demonstrate great potential for reconstructing subsurface environmental data and are a promising technique for investigating ocean interior change and variability, as well as their role in global climate change, from satellite remote sensing measurements.

1 Introduction

The ocean acts as a heat sink and is vital to the Earth’s climate system. It regulates and balances the global climate through the exchange of energy and substances with the atmosphere and through the water cycle. As a huge heat reservoir, the ocean stores most of the heat from global warming and is sensitive to global climate change. The global ocean holds over 90% of the Earth’s excess heat as a response to the Earth’s Energy Imbalance (EEI), leading to substantial ocean warming in recent decades [24, 36]. Subsurface temperature and salinity are basic and essential dynamic environmental variables for understanding the global ocean’s involvement in recent global warming caused by greenhouse gas emissions. Moreover, many significant dynamic processes and phenomena are located beneath the ocean’s surface, and the ocean’s interior hosts many multiscale, complicated 3D dynamic processes. To fully comprehend these processes, it is necessary to accurately estimate the thermohaline structure in the global ocean’s interior [43].

The ocean has warmed dramatically as a result of heat absorption and sequestration during recent global warming, and its heat content has risen rapidly in recent decades [3, 14]. The global upper ocean warmed significantly from 1993 to 2008 [6]. The rate of heat uptake in the intermediate ocean below 300 m has increased much more in recent years [2], which indicates that warming above 300 m has slowed while warming below 300 m has accelerated. The ocean system is accelerating its heat uptake, leading to a significant and unprecedented increase in heat content and worldwide ocean warming, particularly in the subsurface and deeper ocean. As a result, global ocean heat content has hit record highs in recent years [14, 15]. In addition, ocean salinity, another key dynamic variable, is crucial for investigating ocean variability and warming. A salinity mechanism has been proposed to explain how heat from the warming upper ocean is transferred to the subsurface and deeper ocean [12], which highlights the importance of the salinity distribution in heat redistribution and ocean warming. Furthermore, the global hydrological cycle is modulated by ocean salinity [4]. Thermohaline expansion, which contributes significantly to sea-level rise, is also linked to ocean temperature and salinity [9]. Therefore, deriving and predicting the subsurface thermohaline structure is critical for improving our understanding of dynamic processes and climate variability in the subsurface and deeper ocean [31].

Due to the sparse and uneven sampling of float observations and the lack of time-series data in the ocean, there are still large uncertainties in estimating ocean heat content and analyzing the ocean warming process [13, 42]. In the era of ship-based measurement, large areas of the global ocean had little or no in situ observation data, especially in the Southern Ocean. Data obtained by traditional ship-based methods not only have limited coverage but also cannot achieve uniform spatiotemporal sampling, which hinders multi-scale studies of ocean processes. Since 2004, the Argo observation network has achieved synchronous observation of the upper 2000 m of the global ocean in space and time [39, 51]. However, the number of Argo floats is still far from sufficient for global ocean observation: the network cannot provide high-resolution observations of the ocean interior and cannot meet the requirements of global ocean process and climate change studies. Because satellite remote sensing provides large-scale, high-resolution sea surface observations, it has become an essential technique for ocean observation, although it cannot directly observe the subsurface temperature structure [1]. Since many subsurface phenomena have surface manifestations that can be interpreted with the help of satellite measurements, it is possible to derive key dynamic parameters within the ocean (especially the thermohaline structure) from sea surface satellite observations by means of certain mechanism models. Deep ocean remote sensing (DORS) can retrieve ocean interior dynamic parameters and enables us to characterize ocean interior processes and features and their implications for climate change [25].

Previous studies have demonstrated that the DORS technique has great potential to detect and predict the dynamic parameters of the ocean interior indirectly, based on satellite measurements combined with float observations [41, 43]. DORS methods mainly include numerical modeling and data assimilation [25], dynamic theoretical approaches [30, 48, 50], and empirical statistical and machine learning approaches [23, 41]. The accuracy of numerical and dynamic modeling for subsurface ocean simulation and estimation at large scales is not guaranteed because of the complexity and uncertainty of these methods. Reference [47] empirically estimated mesoscale 3D oceanic thermal structures by employing a two-layer model with a set of parameters. Reference [35] determined the vertical structure and transport on a transect across the North Atlantic Current by integrating historical hydrography with acoustic travel time. Reference [34] estimated the 4D structure of the Southern Ocean from satellite altimetry by a gravest empirical mode projection. However, in the era of big ocean data and artificial intelligence, data-driven models, particularly cutting-edge artificial intelligence and machine learning models, perform well and can reach high accuracy in DORS techniques and applications. So far, empirical statistical and AI models have been well developed and applied, including linear regression models [19, 23], empirical orthogonal function-based approaches [32, 37], geographically weighted regression models [43], and advanced machine learning models such as artificial neural networks [1, 45], self-organizing maps [10], support vector machines [28, 41], random forests (RFs) [43], clustering neural networks [31], and XGBoost [44]. Although traditional machine learning methods have made significant contributions to DORS techniques, they are unable to consider and learn the spatiotemporal characteristics of ocean observation data.

In the big earth data era, deep learning has been widely utilized for process understanding in data-driven Earth system science [38]. Deep learning techniques offer great potential in DORS studies to help overcome limitations and improve performance [46]. For example, Long Short-Term Memory (LSTM) networks capture the temporal features of the data and achieve time-series learning [8], and Convolutional Neural Networks (CNNs) take the spatial characteristics of the data into account to realize spatial learning [5]. Deep learning has unleashed great potential in data-driven oceanography and remote sensing research.

This chapter proposes several novel approaches based on ensemble learning and deep learning to retrieve and depict the subsurface thermohaline structure from multisource satellite observations combined with Argo in situ data, and highlights AI applications in deep ocean remote sensing and climate change studies. We aim to construct AI-based inversion models with strong robustness and generalization ability to detect and describe the subsurface thermohaline structure of the global ocean. These methods provide AI-based techniques for examining, from a remote sensing perspective and on a global scale, the thermohaline change and variability of the subsurface and deeper ocean, which have played a significant role in recent global warming.

2 Study Area and Data

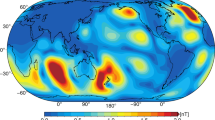

The ocean plays a significant role in modulating the global climate system, especially during recent global warming and ocean warming [51]. It serves as a significant heat sink for the Earth’s climate system [12], and also acts as an important sink for the increasing CO2 caused by anthropogenic activities and emissions. The study area is the global ocean, which includes the Pacific Ocean, Atlantic Ocean, Indian Ocean, and Southern Ocean (180\(^{\circ }\) W~180\(^{\circ }\) E and 78.375\(^{\circ }\) S~77.625\(^{\circ }\) N).

The satellite-based sea surface measurements adopted in this study include sea surface height (SSH), sea surface temperature (SST), sea surface salinity (SSS), and sea surface wind (SSW). Here, the SSH is obtained from AVISO satellite altimetry. The SST is acquired from the Optimum Interpolation Sea-Surface Temperature (OISST) data. The SSS is obtained from the Soil Moisture and Ocean Salinity (SMOS) mission. The SSW is acquired from the Cross-Calibrated Multi-Platform (CCMP) product. The longitude (LON) and latitude (LAT) georeference information is also employed as supplementary input parameters. All sea surface variables above have the same 0.25\(^{\circ }\) \(\times \) 0.25\(^{\circ }\) spatial resolution. The subsurface temperature (ST) and salinity (SS) data are from Argo gridded products with 1\(^{\circ }\) \(\times \) 1\(^{\circ }\) spatial resolution. This study adopted Argo gridded data for the upper 1,000 m of the subsurface ocean, with 16 depth levels, as labeling data. We first applied the nearest neighbor interpolation approach to unify the satellite-based sea surface variables to 1\(^{\circ }\) \(\times \) 1\(^{\circ }\) spatial resolution.
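
The nearest neighbor regridding step can be sketched as follows. This is a minimal illustration in plain Python, not the chapter's actual processing code: the cell-centre coordinates, the toy field, and the function names `nearest_index` and `regrid_nearest` are all assumptions made for the example.

```python
def nearest_index(coords, target):
    """Index of the entry in coords closest to target (first one wins on ties)."""
    return min(range(len(coords)), key=lambda k: abs(coords[k] - target))


def regrid_nearest(field, src_lats, src_lons, dst_lats, dst_lons):
    """Resample a 2D field (rows = latitude, columns = longitude) by nearest neighbor."""
    lat_idx = [nearest_index(src_lats, lat) for lat in dst_lats]
    lon_idx = [nearest_index(src_lons, lon) for lon in dst_lons]
    return [[field[i][j] for j in lon_idx] for i in lat_idx]


# Hypothetical 0.25-degree cell centres covering a 2 x 2 degree box.
src_lats = [0.125 + 0.25 * k for k in range(8)]
src_lons = [0.125 + 0.25 * k for k in range(8)]
# Toy field whose value encodes its own (row, column) position.
field = [[10 * i + j for j in range(8)] for i in range(8)]

# Hypothetical 1-degree cell centres for the same box.
dst_lats = [0.5, 1.5]
dst_lons = [0.5, 1.5]

coarse = regrid_nearest(field, src_lats, src_lons, dst_lats, dst_lons)
print(coarse)
```

With cell centres laid out this way, each 1\(^{\circ }\) centre is equidistant from two 0.25\(^{\circ }\) centres, so the tie-breaking rule (here, the first candidate) determines which source value is copied. A real workflow would need to state that rule explicitly.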

The climatology (baseline: 2005–2016) was subtracted from all the aforementioned satellite-based sea surface variables and Argo gridded data to obtain their anomaly fields and thereby remove the climatological seasonal variation signal [41]. In this study, we primarily focus on the nonseasonal anomaly signals, which are more difficult to detect but more significant for climate change. We applied a maximum-minimum normalization approach to normalize the training dataset to the range of [0, 1]. The testing dataset was then subjected to the corresponding normalization, which helps prevent data leakage during modeling.
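
A minimal sketch of these two preprocessing steps is given below. It assumes a monthly climatology (one mean per calendar month over the baseline) and assumes that "corresponding normalization" means reusing the minimum and maximum of the training data for the testing data. Both are interpretations, and all names and values are illustrative.

```python
def monthly_climatology(series, months):
    """Mean value for each calendar month over the baseline period."""
    sums, counts = {}, {}
    for value, month in zip(series, months):
        sums[month] = sums.get(month, 0.0) + value
        counts[month] = counts.get(month, 0) + 1
    return {m: sums[m] / counts[m] for m in sums}


def to_anomaly(series, months, climatology):
    """Subtract the matching calendar-month mean from every sample."""
    return [v - climatology[m] for v, m in zip(series, months)]


def fit_min_max(train):
    """Normalization statistics are taken from the training data only."""
    return min(train), max(train)


def apply_min_max(values, lo, hi):
    """Scale values with previously fitted statistics."""
    return [(v - lo) / (hi - lo) for v in values]


# Toy record: two "years" with two calendar months each (hypothetical values).
months = [1, 2, 1, 2]
series = [10.0, 20.0, 12.0, 26.0]

clim = monthly_climatology(series, months)
anomalies = to_anomaly(series, months, clim)

train, test = anomalies[:3], anomalies[3:]
lo, hi = fit_min_max(train)
train_scaled = apply_min_max(train, lo, hi)
test_scaled = apply_min_max(test, lo, hi)
print(anomalies, train_scaled, test_scaled)
```

Because the scaling statistics come from the training data alone, a test value can fall outside [0, 1] (1.5 in this toy case). That is the expected price of keeping test information out of the fitted preprocessing.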

3 Retrieving Subsurface Thermohaline Based on Ensemble Learning

Here, the procedure of subsurface thermohaline retrieval based on machine learning approaches contains three technical steps. Firstly, the training dataset for the model was constructed. We selected the satellite-based sea surface parameters (SSH, SST, SSS, SSW) as input variables for the AI-based models, and the subsurface temperature anomaly (STA) and salinity anomaly (SSA) from Argo gridded data were adopted as data labels for training and testing. All the input surface and subsurface datasets were uniformly normalized and randomly separated into a training dataset (60%) and a testing dataset (40%), which were utilized to train and test the AI-based models, respectively. Secondly, the model was trained using the training dataset. The model’s hyper-parameters were tuned using the Bayesian optimization approach, and the model was then configured with the optimal hyper-parameters. Finally, the prediction was performed based on the trained model. We predicted the STA and SSA with the optimized model, and then evaluated the model performance and accuracy by the determination coefficient (\(R^{2}\)) and root-mean-square error (RMSE).
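
The random 60/40 split and the two evaluation metrics can be sketched as below. The chapter does not spell out its exact formula for the determination coefficient, so the common definition \(1 - SS_{res}/SS_{tot}\) is assumed here. The function names, the seed, and the toy values are illustrative.

```python
import math
import random


def split_indices(n_samples, train_fraction=0.6, seed=0):
    """Randomly partition sample indices into training and testing subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = int(round(train_fraction * n_samples))
    return indices[:n_train], indices[n_train:]


def rmse(obs, pred):
    """Root-mean-square error between observed and predicted values."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def r2(obs, pred):
    """Determination coefficient, assumed here to be 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


train_idx, test_idx = split_indices(10)

# Toy "Argo" values and model estimates (hypothetical numbers).
obs = [1.0, 2.0, 3.0, 4.0]
pred = [1.0, 2.0, 3.0, 5.0]
print(len(train_idx), len(test_idx), rmse(obs, pred), r2(obs, pred))
```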

3.1 EXtreme Gradient Boosting (XGBoost)

Gradient Boosting Decision Tree (GBDT) is a boosting algorithm that iteratively builds an ensemble of multiple decision trees [16]. EXtreme Gradient Boosting (XGBoost) is an upgraded GBDT ensemble learning algorithm [11], as well as an optimized distributed gradient boosting library. XGBoost implements a decision-tree-based ensemble machine learning algorithm within a gradient boosting framework, and also provides parallel tree boosting that solves many data science problems in an efficient, flexible, and accurate way. To achieve optimal model performance, parameter tuning is essential during modeling. XGBoost has several hyper-parameters that are related to the complexity and regularization of the model [49], and they must be optimized in order to refine the model and improve its performance. Here, we used the well-performing Bayesian optimization approach to tune the XGBoost hyper-parameters.
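
To illustrate the boosting principle behind GBDT, the following toy sketch fits one-split regression trees (stumps) to the residuals of the current ensemble, one round at a time, under a squared-error loss. It is not XGBoost: there is no regularization, no second-order information, and no parallel tree construction. The 1D data, learning rate, and number of rounds are arbitrary choices for the example.

```python
def fit_stump(x, residual):
    """Best single-split regression stump on a 1D feature (squared error)."""
    best = None
    for threshold in sorted(set(x))[:-1]:  # skip the max so the right side is non-empty
        left = [r for xi, r in zip(x, residual) if xi <= threshold]
        right = [r for xi, r in zip(x, residual) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, xi):
    threshold, left_mean, right_mean = stump
    return left_mean if xi <= threshold else right_mean


def boost(x, y, n_rounds=50, learning_rate=0.3):
    """Each round fits a stump to the residuals of the current ensemble."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residual = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residual)
        stumps.append(stump)
        pred = [pi + learning_rate * predict_stump(stump, xi)
                for xi, pi in zip(x, pred)]
    return base, learning_rate, stumps


def predict(model, xi):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, xi) for s in stumps)


def mse(y, yhat):
    return sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y)


# Toy nonlinear relationship (hypothetical data).
x = [float(v) for v in range(10)]
y = [v * v for v in x]

model = boost(x, y)
mse_before = mse(y, [model[0]] * len(y))
mse_after = mse(y, [predict(model, xi) for xi in x])
print(mse_before, mse_after)
```

The training error after boosting is lower than that of the constant baseline, which is the behaviour the iterative residual fitting is meant to produce. Hyper-parameters such as the learning rate and the number of rounds are the kind of settings that the Bayesian optimization mentioned above would tune.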

Figures 1–2 show the spatial distribution of subsurface temperature and salinity anomalies (STA and SSA) of the global ocean from the XGBoost-based result and Argo gridded data in December 2015 at 600 m depth. Both the XGBoost-estimated STA and SSA are highly consistent with the Argo gridded STA and SSA at 600 m depth. The \(R^{2}\) of STA/SSA between the Argo gridded data and the XGBoost-estimated result is 0.989/0.981, and the RMSE is 0.026 \(^{\circ }\)C/0.004 PSU.

3.2 Random Forests (RFs)

Random Forests (RFs) are a popular and widely used ensemble learning method for data classification and regression. Reference [7] proposed the general strategy of RFs, which fit numerous decision trees on various data subsets obtained by randomly resampling the training data. RFs average the predictions of these trees to improve prediction accuracy and to correct the tendency of individual decision trees to overfit. RFs have been effectively applied in various remote sensing fields [21, 53] and generally perform very well. Several advantages make RFs well suited to remote sensing studies [20, 52].

The basic strategy of RFs is to grow a number of decision trees on random subsets of the training data [40], determine the decision rules, and choose the best split at each node [29]. This strategy performs well compared with many other classifiers and is robust against overfitting [7]. RFs require only two input parameters for training, the number of trees in the forest (ntree) and the number of variables/features in the random subset at each node (mtry), and the model is generally insensitive to the values of both parameters [29].
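
The roles of ntree and mtry can be illustrated with a toy forest of one-split regression trees. Each tree is trained on a bootstrap resample of the data and may only split on a random subset of mtry features, and the forest prediction is the average over trees. This is a simplified sketch with hypothetical data, not the configuration used in this chapter. Real RFs grow deep trees and redraw the feature subset at every node.

```python
import random


def fit_stump(rows, targets, feature_ids):
    """Best single split over the allowed features (squared-error criterion)."""
    best = None
    for f in feature_ids:
        for threshold in sorted({r[f] for r in rows})[:-1]:
            left = [t for r, t in zip(rows, targets) if r[f] <= threshold]
            right = [t for r, t in zip(rows, targets) if r[f] > threshold]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((t - lm) ** 2 for t in left)
                   + sum((t - rm) ** 2 for t in right))
            if best is None or sse < best[0]:
                best = (sse, f, threshold, lm, rm)
    if best is None:  # the resample offered no usable split: predict the mean
        mean = sum(targets) / len(targets)
        return (feature_ids[0], float("inf"), mean, mean)
    return best[1:]


def fit_forest(rows, targets, ntree=25, mtry=1, seed=0):
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(ntree):
        idx = [rng.randrange(n) for _ in range(n)]    # bootstrap resample
        feats = rng.sample(range(n_features), mtry)   # random feature subset
        forest.append(fit_stump([rows[i] for i in idx],
                                [targets[i] for i in idx], feats))
    return forest


def predict_forest(forest, row):
    """Average the predictions of all trees."""
    preds = [lm if row[f] <= thr else rm for f, thr, lm, rm in forest]
    return sum(preds) / len(preds)


# Hypothetical data: the target depends on feature 0; feature 1 is a distractor.
rows = [[float(x), float((x * 7) % 5)] for x in range(10)]
targets = [float(x) for x in range(10)]

forest = fit_forest(rows, targets, ntree=25, mtry=1, seed=0)
low = predict_forest(forest, [1.0, 0.0])
high = predict_forest(forest, [8.0, 0.0])
print(low, high)
```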

Figures 3–4 show the spatial distribution of subsurface thermohaline anomalies of the global ocean from the RFs-based result and Argo gridded data in June 2015 at 600 m depth. The spatial distributions and patterns of the RFs-estimated results and the Argo gridded data are quite similar. The \(R^{2}\) of STA/SSA between the Argo data and the RFs-estimated result is 0.971/0.972, and the RMSE is 0.042 \(^{\circ }\)C/0.005 PSU.

4 Predicting Subsurface Thermohaline Based on Deep Learning

The prediction process for subsurface thermohaline structure based on deep learning includes three steps. Firstly, the training dataset was prepared by combining satellite-based sea surface parameters (SSH, SST, SSS, SSW) with Argo subsurface data as training labels. Secondly, we carried out hyperparameter tuning based on a grid-search strategy to obtain an optimal deep learning model. Here, we set up the time-series deep learning models by using the earlier part of the time series as the training dataset and the remainder as the testing dataset, so as to realize time-series subsurface thermohaline prediction. Finally, the RMSE and \(R^{2}\) were adopted to evaluate the model performance and accuracy.

4.1 Bi-Long Short-Term Memory (Bi-LSTM)

The LSTM is a type of recurrent neural network [22] that is well suited to time-series modeling and has been widely applied in natural language processing and speech recognition. The primary principle behind LSTM is to leverage the target variable’s historical information. Unlike traditional feedforward neural networks, the training errors in an LSTM propagate over a time sequence, capturing the time-dependent relationship of the training data’s historical information [18]. Bi-Long Short-Term Memory (Bi-LSTM) is an upgraded LSTM algorithm. The Bi-LSTM consists of two unidirectional LSTMs that process the input sequence forward and backward simultaneously, and it captures information that a unidirectional LSTM ignores.

To ensure that the Bi-LSTM model achieves good performance and high accuracy, its hyperparameters must be properly selected and tuned. Here, we randomly picked 20% of the training dataset for Bi-LSTM hyperparameter tuning. The Bayesian optimization approach was utilized to obtain the best number of layers and neurons for the Bi-LSTM network. Through model testing, we finally selected a neural network with three layers containing 32, 64, and 64 neurons, respectively. Moreover, batch normalization was applied after the hidden layers of each network. According to previous practice, the best performance is generally attained with mini-batch sizes ranging from 2 to 32 [33]; thus, the batch size was set to 32. In addition, the optimal number of epochs was set to 257 for the STA network and 81 for the SSA network. Moreover, we adopted the RMSE, \(R^{2}\), and Spearman’s rank correlation coefficient (\(\rho \)) to determine the optimal Bi-LSTM timestep. The results demonstrate that the Bi-LSTM model performs best when the network timestep is set to 10, so the timestep here was set to 10.

We employed the data from December 2010 to November 2015 as the training dataset and the data in December 2015 as the testing dataset. The testing dataset adopted the target month dataset for performance evaluation (Table 1). In general, Bi-LSTM uses a whole temporal sequence in both training and prediction, but for accuracy validation we focused only on the target month. When constructing the input dataset for Bi-LSTM, we restructured the data grid by grid in time sequence according to the rule \(X_{i = 1}^{j = 1}\), \(X_{i = 1}^{j = 2}\)...\(X_{i = 1}^{j = 60}\), \(X_{i = 2}^{j = 1}\)...\(X_{i = 2}^{j = 60}\),..., \(X_{i = 24922}^{j = 1}\)...\(X_{i = 24922}^{j = 60}\) (i represents the grid point, j represents the month).
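
The grid-by-grid reordering, together with the timestep of 10 chosen above, can be sketched as follows. The toy array stands in for 60 monthly fields on three grid points (the chapter uses 24922). How each window is paired with its label is not specified in the text, so only the input windows are built here, and the function names are illustrative.

```python
def grid_major(data):
    """Reorder data[month][grid] into one time series per grid point."""
    n_months, n_grids = len(data), len(data[0])
    return [[data[j][i] for j in range(n_months)] for i in range(n_grids)]


def sliding_windows(series, timestep):
    """All consecutive sub-sequences of length `timestep`."""
    return [series[s:s + timestep] for s in range(len(series) - timestep + 1)]


# Toy stand-in: 60 months, 3 grid points; the value encodes (grid, month).
n_months, n_grids = 60, 3
data = [[100 * i + j for i in range(n_grids)] for j in range(n_months)]

series = grid_major(data)                  # series[i][j]: grid point i, month j
windows = sliding_windows(series[1], 10)   # input windows for one grid point
print(len(windows), windows[0], windows[-1])
```

With 60 months and a timestep of 10, each grid point yields 51 overlapping windows under this scheme.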

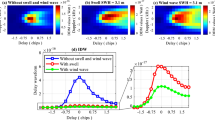

Figures 5–6 show the spatial distribution of subsurface temperature and salinity anomalies of the global ocean from the Bi-LSTM-predicted result and Argo gridded data in December 2015 at 200 m depth. The Bi-LSTM-predicted result captures most anomaly signals in the subsurface ocean. The \(R^{2}\) of STA/SSA between the Argo gridded data and the Bi-LSTM-predicted result is 0.728/0.476, and the RMSE is 0.378 \(^{\circ }\)C/0.055 PSU.

Figure 7 shows the meridional profile (at longitude 190\(^{\circ }\)) of the Argo gridded and Bi-LSTM-predicted STA for vertical comparison and validation. The two vertical profiles are highly consistent in their vertical distribution pattern: over 99.75% of the profile points were within ±1 \(^{\circ }\)C prediction error, and over 99.44% were within ±0.5 \(^{\circ }\)C. Figure 8 shows the same meridional profile for the Argo gridded and Bi-LSTM-predicted SSA. The two vertical profiles match well in their vertical distribution pattern: over 99.55% of the profile points were within ±0.2 PSU prediction error, and over 99.36% were within ±0.1 PSU. These results demonstrate that the model predicts the vertical structure of STA and SSA with high accuracy.
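
The share of profile points within a given error tolerance is a simple count, as in the sketch below. The values are made up for illustration and are unrelated to the figures discussed above.

```python
def share_within(obs, pred, tolerance):
    """Percentage of points whose absolute prediction error is <= tolerance."""
    hits = sum(1 for o, p in zip(obs, pred) if abs(o - p) <= tolerance)
    return 100.0 * hits / len(obs)


# Hypothetical STA values (degrees C) at four profile points.
obs = [0.10, -0.40, 1.20, 0.00]
pred = [0.30, -0.35, 0.10, 0.60]

within_1 = share_within(obs, pred, 1.0)
within_05 = share_within(obs, pred, 0.5)
print(within_1, within_05)
```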

4.2 Convolutional Neural Network (CNN)

The Convolutional Neural Network (CNN) is a well-known deep learning algorithm. Reference [17] proposed a neural network structure, including convolution and pooling layers, which can be regarded as the first implementation of the CNN model. On this basis, Reference [27] proposed the LeNet-5 network, which used the error backpropagation algorithm in the network structure and is considered a prototype of the CNN. In 2012, a deep network structure and the dropout method were applied in the ImageNet image recognition contest [26] and significantly reduced the error rate, which opened a new era in the image recognition field. The CNN technique has since been widely utilized in a variety of applications, including climate change and marine environmental remote sensing applications [5]. Here, the CNN algorithm combined with satellite observations was employed to predict ocean subsurface parameters.
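
The two building blocks named above, convolution and pooling, can be written out in a few lines. The sketch uses the cross-correlation form that deep learning libraries usually call convolution, with no padding and a stride of one. The 5 × 5 field and the 2 × 2 kernel are arbitrary, and the code does not represent the architecture used in this chapter.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + a][c + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool2x2(fmap):
    """Keep the maximum of each non-overlapping 2 x 2 block."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]) - 1, 2)]
            for r in range(0, len(fmap) - 1, 2)]


# Toy 5 x 5 "sea surface" field and an arbitrary 2 x 2 kernel.
image = [[r + c for c in range(5)] for r in range(5)]
kernel = [[0, 1],
          [1, 0]]

feature_map = conv2d_valid(image, kernel)   # 4 x 4 feature map
pooled = max_pool2x2(feature_map)           # 2 x 2 after pooling
print(pooled)
```

Each output value depends only on a small neighbourhood of the input, which is how a CNN takes the spatial structure of gridded fields into account.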

We utilized the CNN approach to retrieve ocean subsurface temperature (ST) and salinity (SS) directly from satellite remote sensing data. Figures 9–10 show the spatial distribution of the subsurface thermohaline structure of the global ocean from the CNN-predicted and Argo gridded data in December 2015 at 200 m depth. The \(R^{2}\) of ST/SS between the Argo gridded data and the CNN-predicted result is 0.972/0.822, and the RMSE is 0.924 \(^{\circ }\)C/0.293 PSU.

5 Conclusions

This chapter proposes several AI-based techniques (ensemble learning and deep learning) for retrieving and predicting the subsurface thermohaline structure of the global ocean. The proposed models are shown to estimate the subsurface temperature and salinity structures of the global ocean from multisource satellite remote sensing observations (SSH, SST, SSS, and SSW) combined with Argo float data. The performance and accuracy of the models are evaluated against Argo in situ data. The results demonstrate that the AI-based models have strong robustness and generalization ability, and can be applied to the prediction and reconstruction of subsurface dynamic environmental parameters.

We employ the XGBoost and RFs ensemble learning algorithms to derive the subsurface temperature and salinity of the global ocean. The \(R^{2}\)/RMSE of the XGBoost-retrieved STA and SSA are 0.989/0.026 \(^{\circ }\)C and 0.981/0.004 PSU, and the \(R^{2}\)/RMSE of the RFs-retrieved STA and SSA are 0.971/0.042 \(^{\circ }\)C and 0.972/0.005 PSU. Moreover, the Bi-LSTM and CNN deep learning algorithms are adopted for time-series prediction of the subsurface thermohaline structure. The \(R^{2}\)/RMSE of the Bi-LSTM-predicted STA and SSA are 0.728/0.378 \(^{\circ }\)C and 0.476/0.055 PSU, and the \(R^{2}\)/RMSE of the CNN-predicted ST and SS are 0.972/0.924 \(^{\circ }\)C and 0.822/0.293 PSU (the CNN predicts ST and SS directly). Overall, ensemble learning algorithms, which are suited to small-data modeling, can be used to retrieve the mono-temporal subsurface thermohaline structure, while deep learning algorithms, which are suited to big-data modeling, can be adopted to predict the time-series subsurface thermohaline structure.

In the future, longer time series of remote sensing data and more advanced deep learning algorithms can be employed to improve model applicability and robustness. The application of AI and deep learning techniques in deep ocean remote sensing and data reconstruction should be further promoted for revisiting global ocean warming and climate change. AI technology shows great potential for detecting and predicting subsurface environmental parameters based on multisource satellite measurements, and can provide a useful technique for promoting studies of deep ocean remote sensing as well as of ocean warming and climate change during recent decades.

References

Ali M, Swain D, Weller R (2004) Estimation of ocean subsurface thermal structure from surface parameters: a neural network approach. Geophys Res Lett 31(20)

Allison L, Roberts C, Palmer M, Hermanson L, Killick R, Rayner N, Smith D, Andrews M (2019) Towards quantifying uncertainty in ocean heat content changes using synthetic profiles. Environ Res Lett 14(8):084037

Balmaseda MA, Trenberth KE, Källén E (2013) Distinctive climate signals in reanalysis of global ocean heat content. Geophys Res Lett 40(9):1754–1759

Bao S, Zhang R, Wang H, Yan H, Yu Y, Chen J (2019) Salinity profile estimation in the Pacific Ocean from satellite surface salinity observations. J Atmos Oceanic Tech 36(1):53–68

Barth A, Alvera-Azcárate A, Licer M, Beckers JM (2020) DINCAE 1.0: a convolutional neural network with error estimates to reconstruct sea surface temperature satellite observations. Geosci Model Develop 13(3):1609–1622

Boyer T, Domingues CM, Good SA, Johnson GC, Lyman JM, Ishii M, Gouretski V, Willis JK, Antonov J, Wijffels S et al (2016) Sensitivity of global upper-ocean heat content estimates to mapping methods, XBT bias corrections, and baseline climatologies. J Clim 29(13):4817–4842

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Buongiorno Nardelli B (2020) A deep learning network to retrieve ocean hydrographic profiles from combined satellite and in situ measurements. Remote Sensing 12(19):3151

Cazenave A, Meyssignac B, Ablain M, Balmaseda M, Bamber J, Barletta V, Beckley B, Benveniste J, Berthier E, Blazquez A et al (2018) Global sea-level budget 1993-present. Earth System Science Data 10(3):1551–1590

Chen C, Yang K, Ma Y, Wang Y (2018) Reconstructing the subsurface temperature field by using sea surface data through self-organizing map method. IEEE Geosci Remote Sens Lett 15(12):1812–1816

Chen T, Guestrin C (2016) XGBoost: A scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 785–794

Chen X, Tung KK (2014) Varying planetary heat sink led to global-warming slowdown and acceleration. Science 345(6199):897–903

Cheng L, Zhu J (2014) Uncertainties of the ocean heat content estimation induced by insufficient vertical resolution of historical ocean subsurface observations. J Atmos Oceanic Tech 31(6):1383–1396

Cheng L, Abraham J, Zhu J, Trenberth KE, Fasullo J, Boyer T, Locarnini R, Zhang B, Yu F, Wan L et al (2020) Record-setting ocean warmth continued in 2019. Adv Atmos Sci 37(2):137–142

Cheng L, Abraham J, Trenberth KE, Fasullo J, Boyer T, Locarnini R, Zhang B, Yu F, Wan L, Chen X et al (2021) Upper ocean temperatures hit record high in 2020. Adv Atmos Sci 38(4):523–530

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. In: The Annals of Statistics, pp 1189–1232

Fukushima K, Miyake S (1982) Neocognitron: A self-organizing neural network model for a mechanism of visual pattern recognition. In: Competition and cooperation in neural nets. Springer, pp 267–285

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press

Guinehut S, Dhomps AL, Larnicol G, Le Traon PY (2012) High resolution 3-D temperature and salinity fields derived from in situ and satellite observations. Ocean Sci 8(5):845–857

Guo L, Chehata N, Mallet C, Boukir S (2011) Relevance of airborne lidar and multispectral image data for urban scene classification using Random Forests. ISPRS J Photogramm Remote Sens 66(1):56–66

Ham J, Chen Y, Crawford MM, Ghosh J (2005) Investigation of the Random Forest framework for classification of hyperspectral data. IEEE Trans Geosci Remote Sens 43(3):492–501

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Jeong Y, Hwang J, Park J, Jang CJ, Jo YH (2019) Reconstructed 3-D ocean temperature derived from remotely sensed sea surface measurements for mixed layer depth analysis. Remote Sensing 11(24):3018

Johnson GC, Lyman JM (2020) Warming trends increasingly dominate global ocean. Nat Clim Chang 10(8):757–761

Klemas V, Yan XH (2014) Subsurface and deeper ocean remote sensing from satellites: An overview and new results. Prog Oceanogr 122:1–9

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Li W, Su H, Wang X, Yan X (2017) Estimation of global subsurface temperature anomaly based on multisource satellite observations. J Remote Sens 21:881–891

Liaw A, Wiener M et al (2002) Classification and regression by Random Forest. R news 2(3):18–22

Liu L, Xue H, Sasaki H (2019) Reconstructing the ocean interior from high-resolution sea surface information. J Phys Oceanogr 49(12):3245–3262

Lu W, Su H, Yang X, Yan XH (2019) Subsurface temperature estimation from remote sensing data using a clustering-neural network method. Remote Sens Environ 229:213–222

Maes C, Behringer D, Reynolds RW, Ji M (2000) Retrospective analysis of the salinity variability in the western tropical Pacific Ocean using an indirect minimization approach. J Atmos Oceanic Tech 17(4):512–524

Masters D, Luschi C (2018) Revisiting small batch training for deep neural networks. arXiv preprint arXiv:1804.07612

Meijers A, Bindoff N, Rintoul S (2011) Estimating the four-dimensional structure of the Southern Ocean using satellite altimetry. J Atmos Oceanic Tech 28(4):548–568

Meinen CS, Watts DR (2000) Vertical structure and transport on a transect across the North Atlantic Current near 42\(^{\circ }\)N: Time series and mean. J Geophys Res: Oceans 105(C9):21869–21891

Meyssignac B, Boyer T, Zhao Z, Hakuba MZ, Landerer FW, Stammer D, Köhl A, Kato S, L’Ecuyer T, Ablain M et al (2019) Measuring global ocean heat content to estimate the Earth Energy Imbalance. Front Marine Sci 6:432

Nardelli BB, Santoleri R (2005) Methods for the reconstruction of vertical profiles from surface data: Multivariate analyses, residual GEM, and variable temporal signals in the North Pacific Ocean. J Atmos Oceanic Tech 22(11):1762–1781

Reichstein M, Camps-Valls G, Stevens B, Jung M, Denzler J, Carvalhais N et al (2019) Deep learning and process understanding for data-driven Earth system science. Nature 566(7743):195–204

Roemmich D, Gilson J (2009) The 2004–2008 mean and annual cycle of temperature, salinity, and steric height in the global ocean from the Argo program. Prog Oceanogr 82(2):81–100

Stumpf A, Kerle N (2011) Object-oriented mapping of landslides using Random Forests. Remote Sens Environ 115(10):2564–2577

Su H, Wu X, Yan XH, Kidwell A (2015) Estimation of subsurface temperature anomaly in the Indian Ocean during recent global surface warming hiatus from satellite measurements: A support vector machine approach. Remote Sens Environ 160:63–71

Su H, Wu X, Lu W, Zhang W, Yan XH (2017) Inconsistent subsurface and deeper ocean warming signals during recent global warming and hiatus. J Geophys Res: Oceans 122(10):8182–8195

Su H, Li W, Yan XH (2018) Retrieving temperature anomaly in the global subsurface and deeper ocean from satellite observations. J Geophys Res: Oceans 123(1):399–410

Su H, Yang X, Lu W, Yan XH (2019) Estimating subsurface thermohaline structure of the global ocean using surface remote sensing observations. Remote Sensing 11(13):1598

Su H, Zhang H, Geng X, Qin T, Lu W, Yan XH (2020) OPEN: A new estimation of global ocean heat content for upper 2000 meters from remote sensing data. Remote Sensing 12(14):2294

Su H, Zhang T, Lin M, Lu W, Yan XH (2021) Predicting subsurface thermohaline structure from remote sensing data based on long short-term memory neural networks. Remote Sens Environ 260:112465

Takano A, Yamazaki H, Nagai T, Honda O (2009) A method to estimate three-dimensional thermal structure from satellite altimetry data. J Atmos Oceanic Tech 26(12):2655–2664

Wang J, Flierl GR, LaCasce JH, McClean JL, Mahadevan A (2013) Reconstructing the ocean’s interior from surface data. J Phys Oceanogr 43(8):1611–1626

Xia Y, Liu C, Li Y, Liu N (2017) A boosted decision tree approach using bayesian hyper-parameter optimization for credit scoring. Expert Syst Appl 78:225–241

Yan H, Wang H, Zhang R, Chen J, Bao S, Wang G (2020) A dynamical-statistical approach to retrieve the ocean interior structure from surface data: SQG-mEOF-R. J Geophys Res: Oceans 125(2):e2019JC015840

Yan XH, Boyer T, Trenberth K, Karl TR, Xie SP, Nieves V, Tung KK, Roemmich D (2016) The global warming hiatus: Slowdown or redistribution? Earth’s Future 4(11):472–482

Yu X, Hyyppä J, Vastaranta M, Holopainen M, Viitala R (2011) Predicting individual tree attributes from airborne laser point clouds based on the random forests technique. ISPRS J Photogramm Remote Sens 66(1):28–37

Zhang Y, Zhang H, Lin H (2014) Improving the impervious surface estimation with combined use of optical and SAR remote sensing images. Remote Sens Environ 141:155–167

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Su, H., Lu, W., Wang, A., Zhang, T. (2023). AI-Based Subsurface Thermohaline Structure Retrieval from Remote Sensing Observations. In: Li, X., Wang, F. (eds) Artificial Intelligence Oceanography. Springer, Singapore. https://doi.org/10.1007/978-981-19-6375-9_5

DOI: https://doi.org/10.1007/978-981-19-6375-9_5

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6374-2

Online ISBN: 978-981-19-6375-9

eBook Packages: Earth and Environmental Science; Earth and Environmental Science (R0)