Abstract

This chapter provides a broad overview of different approaches to hyperparameter tuning (HPT). It details the process of HPT and discusses popular HPT approaches and their difficulties. It focuses on surrogate optimization, because this is the most powerful approach. It introduces the Sequential Parameter Optimization Toolbox (SPOT) as one typical surrogate method. SPOT is well established and maintained, open source, available on the Comprehensive R Archive Network (CRAN), and helps catch mistakes. Because SPOT is open source and well documented, the human remains in the loop of decision-making. The introduction of SPOT is accompanied by detailed descriptions of the implementation and program code. In particular, this chapter provides deep insight into Kriging (aka Gaussian Process (GP) aka Bayesian Optimization (BO)) as the workhorse of this methodology. It is thus very hands-on and practical.


1 Hyperparameter Tuning: Approaches and Goals

The following HPT approaches are popular:

- manual search (or trial-and-error (Meignan et al. 2015)),
- simple Random Search (RS), i.e., randomly and repeatedly choosing hyperparameters to evaluate,
- grid search (Tatsis and Parsopoulos 2016),
- directed, model-free algorithms, i.e., algorithms that do not explicitly make use of a model, e.g., Evolution Strategies (ESs) (Hansen 2006; Bartz-Beielstein et al. 2014) or pattern search (Lewis et al. 2000),
- hyperband, i.e., a multi-armed bandit strategy that dynamically allocates resources to a set of random configurations and uses successive halving to stop poorly performing configurations (Li et al. 2016),
- Surrogate Model Based Optimization (SMBO) such as SPOT (Bartz-Beielstein et al. 2005, 2021).

Manual search and grid search are probably the most popular algorithms for HPT. Similar to suggestions made by Bartz-Beielstein et al. (2020a), we propose the following recommendations for performing HPT studies:

- (R-1) Goals: clearly state the reasons for performing HPT. Improving an existing solution, finding a solution for a new, unknown problem, or benchmarking two methods are only three examples with different goals. Each of these goals requires a different experimental design.
- (R-2) Problems: select suitable problems. Decide how many different problems or problem instances are necessary. In some situations, surrogates (e.g., Computational Fluid Dynamics (CFD) simulations) can accelerate the tuning (Bartz-Beielstein et al. 2018).
- (R-3) Algorithms: select a portfolio of ML and DL algorithms to be included in the HPT experimental study. Consider baseline methods such as RS and methods with their default hyperparameter settings.
- (R-4) Performance: specify the performance measure(s). See the discussion in Sect. 2.2.
- (R-5) Analysis: describe how the results will be evaluated. Decide whether parametric or non-parametric methods are applicable. See the discussion in Chap. 5.
- (R-6) Design: set up the experimental design of the study, e.g., how many runs are to be performed. Tools from Design of Experiments (DOE) and Design and Analysis of Computer Experiments (DACE) are highly recommended. See the discussion in Sect. 5.6.5.
- (R-7) Presentation: select an adequate presentation of the results. Consider the audience: a presentation for management might differ from a publication in a journal.
- (R-8) Reproducibility: consider how to guarantee scientifically sound results and a lasting impact, e.g., in terms of comparability. López-Ibáñez et al. (2021a) present important ideas.

In addition to these recommendations, there are some specific issues that are caused by the ML and DL setup.

We consider an HPT approach based on SPOT that focuses on the following topics:

- Limited Resources: We focus on situations where only limited computational resources are available. This may simply be due to the availability and cost of hardware, or because confidential data has to be processed strictly locally.
- Understanding: In contrast to standard hyperparameter optimization (HPO) approaches, SPOT provides statistical tools for understanding hyperparameter importance and interactions between several hyperparameters.
- Explainability: Understanding is a key tool for enabling transparency and explainability, e.g., quantifying the contribution of ML and DL components (layers, activation functions, etc.).
- Replicability: The software code used in this study is available via CRAN in the SPOT package for R, the open source software environment for statistical computing and graphics. Replicability is discussed in Sect. 2.7.2. SPOT is well-established open-source software that has been maintained for more than 15 years (Bartz-Beielstein et al. 2005).

Furthermore, Falkner et al. (2018) claim that practical HPO solutions should fulfill the following requirements:

- strong anytime and final performance,
- effective use of parallel resources,
- scalability, as well as robustness and flexibility.

To be clear, we are not seeking the overall best hyperparameter configuration that results in a method which outperforms any other method in every problem domain (Wolpert and Macready 1997). Results are specific to one problem instance: their generalizability to other problem instances or even other problem domains is not self-evident and has to be demonstrated (Haftka 2016).

2 Special Case: Monotonic Hyperparameters

A special case is posed by hyperparameters with a monotonic effect on the quality and run time (and/or memory requirements) of the tuned model. In our survey (see Table 4.1), two examples are included: \(\texttt {num.trees}\) (RF) and \(\texttt {thresh}\) (EN). Because of this monotonicity, it seems natural to treat these parameters differently. In the following, we focus the discussion on \(\texttt {num.trees}\) as an example, since this parameter is frequently discussed in the literature and in online communities (Probst et al. 2018).

It is known from the literature that larger values of \(\texttt {num.trees}\) generally lead to better models. As the number of trees increases, saturation sets in, and the quality gains become progressively smaller. Note that this is not necessarily true for every quality measure. Probst et al. (2018), for example, show that this relation holds for log-loss and Brier score, but not for Area Under the receiver operating characteristic Curve (AUC).

Because of this relationship, Probst et al. (2018) argue that \(\texttt {num.trees}\) should not be optimized. Instead, they recommend setting the parameter to a “computationally feasible large number” (Probst et al. 2018). For certain applications, especially for relatively small or medium-sized data sets, we support this assessment. However, the analysis in this book also considers, at least prospectively, tuning hyperparameters for very large data sets (many observations and/or many features). For this use case, we do not share this recommendation, because the required run time of the model plays an increasingly important role and is not explicitly considered in the recommendation. In this case, a “computationally feasible large number” is not trivial to determine.

In total, we consider five solutions for handling monotonic hyperparameters:

- (M-1) Set manually: The parameter is set to the largest possible value that is still feasible with the available computing resources. This solution involves the following risks:
  a. Individual evaluations during tuning take unnecessarily long.
  b. Interactions with other parameters (e.g., \(\texttt {mtry}\)) are not considered.
  c. The value may be unnecessarily large (from a model quality point of view).
  d. Determining this value can be difficult: it requires detailed knowledge of the size of the data set, the efficiency of the model implementation, and the available resources (memory, CPU cores, time).

- (M-2) Manual adjustment of the tuning: After a preliminary examination (as represented, e.g., by the initial design step of SPOT), the user intervenes. Based on the preliminary investigation, the user chooses a value that seems reasonable, and this value is not changed in the further course of the tuning. This solution involves the following risks:
  a. The preliminary investigation itself takes too much time.
  b. The decision after the preliminary investigation requires intervention by the user (problematic for automation). While this is feasible for individual cases, it is not practical for numerous experiments with different data (as in the experiments of the study in Chap. 12). Moreover, this reduces the reproducibility of the results.
  c. Depending on the scope and approach of the preliminary study, interactions with other parameters may not be adequately accounted for.

- (M-3) No distinction: parameters like \(\texttt {num.trees}\) are optimized by the tuning procedure just like all other hyperparameters. This solution involves the following risks:
  a. The upper bound for the parameter is set too low, so potentially good models are not explored by the tuning procedure. (Note: search-space bounds that are too tight are a general risk that can affect all other hyperparameters as well.)
  b. The upper bound is set too high, causing individual evaluations to take unnecessarily long during tuning.
  c. The best found value may become unnecessarily large (from a model quality point of view).

- (M-4) Multi-objective: run time and model quality can be optimized simultaneously in the context of multi-objective optimization. This solution involves the following risks:
  a. Again, manual evaluation is necessary (selection of a region of the Pareto front) to avoid investigating solutions that are irrelevant from a practical point of view (but possibly Pareto-optimal).
  b. This manual evaluation also reduces reproducibility.

- (M-5) Regularization via weighted sum: The number of trees (or similar parameters) can be incorporated into the objective function. In this case, the objective function becomes a weighted sum of model quality and number of trees (or run time), with a weighting factor \(\theta \). This solution involves the following risks:
  a. The new parameter of the tuning procedure, \(\theta \), has to be determined.
  b. Moreover, the optimization of a weighted sum cannot find certain Pareto-optimal solutions if the Pareto front is non-convex.
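Risk (b) of (M-5) can be illustrated with a small Python sketch. It assumes the scalarization loss + θ · cost (one simple form of the weighted sum; the cost normalization is an assumption) and three hypothetical Pareto-optimal candidates. Scanning θ shows that only the two extreme candidates are ever selected: the compromise candidate in the non-convex part of the front is missed for every θ ≥ 0.

```python
import numpy as np

def weighted_sum(loss, cost, theta):
    """Scalarized objective used in this sketch: loss + theta * cost (minimized)."""
    return loss + theta * cost

# Hypothetical Pareto-optimal candidates: columns are (loss, normalized cost).
# No candidate dominates another. The middle one lies above the straight line
# joining its neighbours, i.e., in a non-convex part of the front.
front = np.array([
    [0.10, 1.00],   # accurate but expensive (e.g., many trees)
    [0.60, 0.60],   # compromise in the non-convex region
    [1.00, 0.10],   # cheap but inaccurate (e.g., few trees)
])

chosen = set()
for theta in np.linspace(0.0, 10.0, 1001):
    scores = weighted_sum(front[:, 0], front[:, 1], theta)
    chosen.add(int(np.argmin(scores)))

print(sorted(chosen))  # [0, 2]: the compromise candidate is never selected
```

Algebraically, the middle candidate would need θ > 1.25 to beat the first one and θ < 0.8 to beat the third one, which is impossible.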

In the experimental investigation in Chap. 12, we use solution (M-3). That is, the corresponding parameters are tuned but do not undergo any special treatment during tuning. Due to the large number of experiments, user interventions would not be feasible and would also complicate the reproducibility of the results. In principle, we recommend this solution for use in practice.

In individual cases, or if a good understanding of algorithms and data is available, solution (M-2) can also be used. For this, SPOT can be interrupted after the first evaluation step, in order to set the corresponding parameters to a certain value or to adjust the bounds if necessary (e.g., if \(\texttt {num.trees}\) was examined with too low an upper bound).

3 Model-Free Search

3.1 Manual Search

A frequently applied approach is that ML and DL methods are configured manually (Bergstra and Bengio 2012). Users apply their own experience and trial-and-error to find reasonable hyperparameter values.

In individual cases, this approach may indeed yield good results, namely when expert knowledge about data, methods, and parameters is available. At the same time, this approach has major weaknesses: it may require a significant amount of the users' working time, bias may be introduced due to wrong assumptions, options for parallel computation are limited, and reproducibility is extremely limited. Hence, an automated approach is of interest.

3.2 Undirected Search

Undirected search algorithms determine new hyperparameter values independently of any results of their evaluation. Two important examples are Grid Search and RS.

Grid Search covers the search space with a regular grid, and each grid point is evaluated. RS selects new values at random (usually independently and uniformly distributed) in the search space.
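The two undirected strategies can be sketched in a few lines of Python (a minimal illustration, not the code used in our experiments; the function names are hypothetical). Both generators produce configurations without looking at any objective values.

```python
import itertools
import numpy as np

def grid_search_points(lower, upper, levels):
    """Grid Search: every combination of `levels` equally spaced values per dimension."""
    axes = [np.linspace(lo, up, levels) for lo, up in zip(lower, upper)]
    return np.array(list(itertools.product(*axes)))

def random_search_points(lower, upper, n, rng):
    """Random Search: n points drawn independently and uniformly from the box."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return lower + (upper - lower) * rng.random((n, lower.size))

rng = np.random.default_rng(1)
grid = grid_search_points([-1, -1], [1, 1], levels=3)    # 3**2 = 9 points
rand = random_search_points([-1, -1], [1, 1], n=9, rng=rng)
print(grid.shape, rand.shape)                            # (9, 2) (9, 2)
```

The grid size grows as levels to the power of the dimension: five levels for six hyperparameters already give 5**6 = 15625 configurations, whereas the RS budget n can be chosen freely.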

Grid Search is a frequently used approach, as it is easy to understand and implement (including parallelization). As discussed by Bergstra and Bengio (2012), RS shares these advantages. However, they show that RS may be preferable to Grid Search, especially in high-dimensional spaces or when the importance of individual parameters is fairly heterogeneous. They therefore suggest using RS instead of Grid Search if such simple procedures are required. Probst et al. (2019a) also use an RS variant to determine the tunability of models and hyperparameters. For these reasons, we employ RS as a baseline for the comparison in our experimental investigation in Chap. 12.

Besides Grid Search and RS, there are other undirected search methods. Hyperband is an extension of RS that controls the use of certain resources (e.g., iterations, training time) (Li et al. 2018). Another relevant set of methods are Design of Experiments (DOE) methods, such as Latin Hypercube Designs (Leary et al. 2003).
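The resource control in Hyperband rests on successive halving. The following Python sketch shows only this subroutine, under simplifying assumptions: a single bracket, a halving rate of 2, and a hypothetical loss whose budget-dependent offset shrinks as more resources are allocated. The full Hyperband algorithm runs several such brackets with different trade-offs between the number of configurations and the budget per configuration (Li et al. 2018).

```python
import numpy as np

def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Simplified successive halving, the subroutine Hyperband builds on.

    configs  : candidate configurations (here drawn by random search)
    evaluate : function (config, budget) -> loss, smaller is better
    Each round evaluates all survivors with the current budget, keeps the
    best 1/eta of them, and multiplies the budget by eta.
    """
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        losses = [evaluate(c, budget) for c in survivors]
        order = np.argsort(losses)
        n_keep = max(1, len(survivors) // eta)
        survivors = [survivors[i] for i in order[:n_keep]]
        budget *= eta
    return survivors[0]

def toy_loss(lr, budget):
    """Hypothetical loss: true quality (lr - 0.1)**2 plus an offset that
    shrinks with the allocated budget (e.g., training epochs)."""
    return (lr - 0.1) ** 2 + 1.0 / budget

rng = np.random.default_rng(42)
configs = list(rng.uniform(0.0, 1.0, size=8))   # 8 random learning rates
best = successive_halving(configs, toy_loss)     # 8 -> 4 -> 2 -> 1 survivors
```

Poor configurations are discarded after cheap, low-budget evaluations, so most of the budget goes to the survivors.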

! Attention: Random Search Versus Grid Search

Interestingly, Bergstra and Bengio (2012) demonstrate empirically and show theoretically that randomly chosen trials are more efficient for HPT than trials on a grid. Because their results are of practical relevance, they are briefly summarized here. In grid search, the set of trials is formed by every possible combination of values. Grid search therefore suffers from the curse of dimensionality, because the number of joint values grows exponentially with the number of hyperparameters.

A Gaussian process analysis of the function from hyper-parameters to validation set performance reveals that for most data sets only a few of the hyper-parameters really matter, but that different hyper-parameters are important on different data sets. This phenomenon makes grid search a poor choice for configuring algorithms for new data sets (Bergstra and Bengio 2012).

Let \(\Psi \) denote the space of hyperparameter response functions. Bergstra and Bengio (2012) claim that RS is more efficient in ML than grid search because a hyperparameter response function \(\psi \in \Psi \) usually has a low effective dimensionality (see Definition 2.25), i.e., \(\psi \) is more sensitive to changes in some dimensions than others (Caflisch et al. 1997).
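The effect of low effective dimensionality can be reproduced with a hypothetical response function in which only the first hyperparameter matters. With the same budget of nine evaluations, the grid tries only three distinct values of the important parameter, whereas random search tries nine. This Python sketch illustrates the argument; it does not reproduce the experiments by Bergstra and Bengio (2012).

```python
import numpy as np

def psi(x):
    """Hypothetical response with low effective dimensionality:
    x[:, 0] matters a lot, x[:, 1] hardly at all."""
    return (x[:, 0] - 0.3) ** 2 + 0.01 * x[:, 1]

budget = 9
g = np.linspace(0.0, 1.0, 3)
grid = np.array([[a, b] for a in g for b in g])   # 3 x 3 grid in [0, 1]^2
rng = np.random.default_rng(0)
rand = rng.random((budget, 2))                    # 9 uniform points in [0, 1]^2

# Distinct values tried for the important parameter x[:, 0]:
print(len(np.unique(grid[:, 0])), len(np.unique(rand[:, 0])))   # 3 9
print(psi(grid).min(), psi(rand).min())
```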

The observation that only a few of the parameters matter can also be made in the engineering domain, where parameters such as pressure or temperature play a dominant role. In contrast to DL, this set of important parameters does not change fundamentally in different situations. We assume that the high variance in the set of important DL hyperparameters is caused by confounding.

Because of its simplicity, RS turns out to be the best choice in many situations, especially in high-dimensional spaces. Hyperband should also be mentioned in this context, although it can result in a worse final performance than model-based approaches, because it only samples configurations randomly and does not learn from previously sampled configurations (Li et al. 2016). Bergstra and Bengio (2012) note that RS can probably be improved by automating what manual search does, i.e., by using SMBO approaches such as SPOT.

HPT is a powerful technique and an absolute requirement for obtaining state-of-the-art models on any real-world learning task, e.g., classification and regression. However, there are important issues to keep in mind when doing HPT: for example, validation-set overfitting can occur, because hyperparameters are usually optimized based on information derived from the validation data.

3.3 Directed Search

One obvious disadvantage of undirected search is that a large amount of the computational effort may be spent on evaluating solutions that cover the whole search space. Hence, only a comparatively small amount of the computational budget will be spent on potentially optimal or at least promising regions of the search space.

Directed search, on the other hand, may provide a more purposeful approach. Basically, any gradient-free, global optimization algorithm could be employed. Prominent examples are Iterated Local Search (ILS) (Hutter et al. 2010b) and Iterated Racing (IRACE) (López-Ibáñez et al. 2016). Metaheuristics like Evolutionary Algorithms (EAs) or Swarm Optimization are also applicable (Yang and Shami 2020). Compared to undirected search procedures, directed search has two frequent drawbacks: its increased complexity makes implementation more difficult, and it is harder to parallelize.

We employ a model-based directed search procedure in this book, which is described in the following Sect. 4.4.

4 Model-Based Search

A disadvantage of model-free, directed search procedures is that they may require a relatively large number of evaluations (i.e., long run times) to approximate the values of optimal hyperparameters.

Tuning ML and DL algorithms can become problematic if complex methods are tuned on large data sets, because the run time for evaluating a single hyperparameter configuration may go up into the range of hours or even days. Model-based search is one approach to resolve this issue. These search procedures use information gathered during the search to learn the relationship between hyperparameter values and performance measures (e.g., misclassification error). The model that encodes this learned relationship is called the surrogate model or surrogate.

Definition 4.1

(Surrogate Optimization) Surrogate optimization consists of two phases.

- Construct Surrogate: Generate (random) solutions. Evaluate the (expensive) objective function at these points. Construct a surrogate, \(\mathcal {S}\), of the objective function, e.g., by building a GP aka Kriging model.
- Search for Minimum: Search for a minimum of the objective function on the (cheap) surrogate. Choose the best point as a candidate. Evaluate the objective function at the best candidate point. This point is called an infill point. Update the surrogate using this value and search again.
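The two phases of Definition 4.1 can be sketched in Python as follows. This is a simplified illustration, not SPOT's implementation, and all names are hypothetical: the surrogate is a Gaussian-kernel interpolator with a fixed kernel width (a Kriging-style mean predictor without MLE), the search on the surrogate evaluates random candidates, and the infill point is simply the candidate with the best predicted value.

```python
import numpy as np

def fit_gauss_surrogate(X, y, theta=10.0, nugget=1e-8):
    """Interpolating surrogate: Gaussian kernel with fixed width theta and a
    plug-in constant mean (Kriging-style mean predictor, no MLE)."""
    mu = y.mean()
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    K = np.exp(-theta * d2) + nugget * np.eye(len(X))   # nugget for numerical stability
    w = np.linalg.solve(K, y - mu)

    def predict(Xnew):
        d2n = ((Xnew[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
        return mu + np.exp(-theta * d2n) @ w

    return predict

def surrogate_optimization(f, lower, upper, n_init=10, n_iter=15, n_cand=2000, seed=0):
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)

    def sample(n):
        return lower + (upper - lower) * rng.random((n, lower.size))

    # Phase 1 (construct surrogate): evaluate the expensive f on random points
    X = sample(n_init)
    y = f(X)
    for _ in range(n_iter):
        predict = fit_gauss_surrogate(X, y)
        # Phase 2 (search for minimum): search on the cheap surrogate only
        cand = sample(n_cand)
        x_new = cand[np.argmin(predict(cand))][None, :]   # infill point
        # one expensive evaluation; the surrogate is refitted in the next pass
        X = np.vstack([X, x_new])
        y = np.append(y, f(x_new))
    best = np.argmin(y)
    return X[best], y[best], y

def sphere(X):
    return np.sum(X ** 2, axis=1)

x_best, y_best, y_hist = surrogate_optimization(sphere, [-1, -1], [1, 1])
```

With these settings, f is evaluated 25 times in total, while the surrogate is queried 15 × 2000 = 30000 times. Minimizing the predicted value directly is purely exploitative; infill criteria such as expected improvement are a common alternative.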

The advantage of this surrogate optimization is that a considerable part of the evaluation burden (i.e., the computational effort) can be shifted from real evaluations to evaluations of the surrogate, which should be faster to evaluate.

In HPT, mixed optimization problems are common, i.e., some variables are continuous and others are discrete (Cuesta Ramirez et al. 2022). Bartz-Beielstein and Zaefferer (2017) provide an overview of metamodels that have been or can be used in optimization. They show how surrogate modeling in mixed spaces was made possible by the realization that GP kernels (covariance functions) in mixed variables can be created by composing continuous and discrete kernels. In this case, the infill criterion (acquisition function) is defined over the same space as the objective function. Therefore, maximizing the acquisition function is also a mixed-variable problem.
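A composed mixed-variable kernel can be sketched in Python as the product of a Gaussian kernel on the continuous columns and an exponentiated Hamming-distance kernel on the discrete (integer-coded) columns. This is one common construction, assumed here for illustration; it is not necessarily the kernel used in the cited works or in SPOT. Products of positive semi-definite kernels are again positive semi-definite, so the result is a valid covariance function.

```python
import numpy as np

def mixed_kernel(A, B, cont_idx, disc_idx, theta=1.0, gamma=1.0):
    """Product kernel: Gaussian on continuous columns times
    exp(-gamma * Hamming distance) on discrete, integer-coded columns."""
    Ac, Bc = A[:, cont_idx], B[:, cont_idx]
    Ad, Bd = A[:, disc_idx], B[:, disc_idx]
    d2 = ((Ac[:, None, :] - Bc[None, :, :]) ** 2).sum(axis=-1)
    hamming = (Ad[:, None, :] != Bd[None, :, :]).sum(axis=-1)
    return np.exp(-theta * d2) * np.exp(-gamma * hamming)

# Columns: learning rate (continuous), batch-size level and optimizer id (discrete);
# the hyperparameters and their values are hypothetical.
X = np.array([[0.01, 0, 1],
              [0.10, 1, 1],
              [0.05, 0, 2],
              [0.01, 2, 0]])
K = mixed_kernel(X, X, cont_idx=[0], disc_idx=[1, 2])
print(np.allclose(K, K.T), np.linalg.eigvalsh(K).min() >= -1e-12)   # True True
```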

One variant of model-based search is SPOT (Bartz-Beielstein 2005), which will be described in Sect. 4.5.

5 Sequential Parameter Optimization Toolbox

SMBO methods are common approaches in simulation and optimization. SPOT was developed because there is a strong need for sound statistical analysis of simulation and optimization algorithms. SPOT includes methods for tuning based on classical regression and analysis of variance techniques; tree-based models such as Classification and Regression Trees (CART) and random forest; BO (Gaussian process models, aka Kriging); and combinations of different meta-modeling approaches.

Basic elements of the Kriging-based surrogate optimization such as interpolation, expected improvement, and regression are presented in the Appendix, see Sect. 4.6. The Sequential Parameter Optimization (SPO) toolbox implements a modified version of this method and will be described in this section.

SPOT implements key techniques such as exploratory fitness landscape analysis and sensitivity analysis. SPOT can be used for understanding the performance of algorithms and gaining insight into their behavior. Furthermore, SPOT can be used as an optimizer and for automatic and interactive tuning.

Details of SPOT and its application in practice are given by Bartz-Beielstein et al. (2021). SPOT was originally developed for the tuning of optimization algorithms. The requirements and challenges of algorithm tuning in optimization broadly reflect those of tuning machine learning models. SPOT uses the following approach (outer loop).

- Setup: In a first step, several candidate solutions (here: different combinations of hyperparameter values) are created. These are steps (S-1) and (S-2) in the function \(\texttt {spot}\), see Fig. 4.1.
- Evaluate: All new candidate solutions are evaluated (here: training the respective ML or DL model with the specified hyperparameter values and measuring the quality / performance). This is step (S-3).
- Termination: Check whether a termination criterion has been reached (e.g., number of iterations, evaluations, run time, or a satisfying solution has been found). These are steps (S-4) to (S-9).
- Select: Samples for building the surrogate \(\mathcal {S}\) are selected. This is step (S-10).
- Training: The surrogate \(\mathcal {S}\) is trained with all data derived from the evaluated candidate solutions, thus learning how hyperparameters affect model quality. This is step (S-11).
- Surrogate search: The trained model is used to search for new, promising candidate solutions. These are steps (S-12) to (S-16).
- Budget: Optimal Computing Budget Allocation (OCBA) is used to determine the number of repeated evaluations. This is step (S-17).
- Evaluation: The new solutions are evaluated on the objective function, e.g., the loss is determined. These are steps (S-18) to (S-22).
- Exploit: An optimizer is used to perform a local search on \(\mathcal {S}\) to refine the best solution found. These are steps (S-23) and (S-24). Whereas the optimization on the surrogate in the main loop is a weighted combination of exploration and exploitation, using Expected Improvement (EI) as the default weighting mechanism, this final optimization step is purely exploitative.

Note that it can be useful to allow for user interaction with the tuner after the evaluation step. The user may then change the search space (stretch or shrink bounds on parameters, eliminate parameters). However, we will consider an automatic search in our experiments.

We use the R implementation of SPOT, as provided by the R package SPOT (Bartz-Beielstein et al. 2021, 2021c). The SPOT workflow will be described in the following sections.

In the remainder of this book, SPOT will refer to the general method, whereas \(\texttt {spot}\) denotes the function from the R package SPOT.

Steps, subroutines and data of the \(\texttt {spot}\) process are shown in Fig. 4.2.

5.1 spot as an Optimizer

\(\texttt {spot}\) uses the same syntax as \(\texttt {optim}\), R's general-purpose optimization function based on Nelder-Mead, quasi-Newton, and conjugate-gradient algorithms (R Core Team 2022). \(\texttt {spot}\) can be called as shown in the following example.

Example: spot

SPOT comes with many pre-defined functions from optimization, e.g., Sphere, Rosenbrock, or Branin. These implementations use the prefix “\(\texttt {fun}\)”, e.g., \(\texttt {funSphere}\) is the name of the sphere function. The package SPOTMisc provides \(\texttt {funBBOBCall}\), an interface to the real-parameter Black-Box Optimization Benchmarking (BBOB) function suite (Mersmann et al. 2010a). Furthermore, users can also specify their own objective functions.

Searching for the optimum of the (two-dimensional) sphere function \(\texttt {funSphere}\), i.e., \(f(x)= \sum _{i=1}^2 x_i^2\), on the interval between \((-1, -1)\) and (1, 1) can be done as follows:

Four arguments are passed to \(\texttt {spot}\): the starting point \(\texttt {x}\), which is set to \(\texttt {NULL}\) so that no explicit starting point is used; the objective function \(\texttt {funSphere}\); and the \(\texttt {lower}\) and \(\texttt {upper}\) bounds. The length of the lower bound argument defines the problem dimension n.
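SPOT's objective functions receive a matrix with one candidate solution per row and return the function values as a one-column matrix. The following Python analogue of \(\texttt {funSphere}\) mimics this convention (the Python name is hypothetical; the R function itself is part of SPOT) and shows how the dimension n follows from the length of the lower bound.

```python
import numpy as np

def fun_sphere(x):
    """Sphere function in SPOT's matrix convention (Python analogue):
    x has one candidate per row; the result is a one-column matrix."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    return np.sum(x ** 2, axis=1, keepdims=True)

lower = np.array([-1.0, -1.0])
upper = np.array([1.0, 1.0])
n = len(lower)                      # problem dimension taken from the lower bound
X = np.array([[0.0, 0.0],
              [0.5, -0.5],
              [1.0, 1.0]])
y = fun_sphere(X)                   # shape (3, 1): 0.0, 0.5 and 2.0
```

Evaluating a whole matrix at once lets the optimizer pass an entire design (e.g., the initial design) in a single call.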

> Mandatory Parameters

The arguments \(\texttt {x}\), \(\texttt {fun}\), \(\texttt {lower}\), and \(\texttt {upper}\) are mandatory for \(\texttt {spot}\); they are listed in Table 4.2.

Additional arguments can be passed to \(\texttt {spot}\). They allow very flexible handling, e.g., passing extra arguments to the objective function \(\texttt {fun}\). For clarity, parameters are organized as lists. The “main” list is called \(\texttt {control}\), see Table 4.3. It collects \(\texttt {spot}\)'s parameters, some of which are themselves organized as lists. They are shown in Table 4.4.

The \(\texttt {control}\) list is used for managing SPOT's parametrization, e.g., for defining hyperparameter types and ranges.

5.2 spot’s Initial Phase

The initial phase consists of five steps (S-1) to (S-5). The corresponding R code is shown in Sect. 4.7.

- (S-1) Setup. After performing an initial check on the control list, the \(\texttt {control}\) list is completed.

The \(\texttt {control}\) list contains the parameters from Table 4.3.

- (S-2) Initial design. The parameter \(\texttt {seedSPOT}\) is used to set the seed for \(\texttt {spot}\) before the initial design X is generated. The design type is specified via \(\texttt {control\$design}\). The recommended design function is \(\texttt {designLHD}\), i.e., a Latin Hypercube Design (LHD), which is also the default configuration.

After this step, ten initial design points are available, because the parameter \(\texttt {designControl\$size}\), which specifies the initial design size, defaults to 10 if the function \(\texttt {designLHD}\) is used (Fig. 4.3).
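The idea behind a Latin hypercube design can be sketched in Python: each dimension is split into as many equal strata as there are design points, and each stratum is used exactly once per dimension. This basic random LHD is an assumption for illustration; SPOT's \(\texttt {designLHD}\) may construct or improve its designs differently. The default of ten points mirrors the text.

```python
import numpy as np

def latin_hypercube(lower, upper, size=10, seed=None):
    """Basic random Latin hypercube design with `size` points."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    d = lower.size
    # One random permutation of the strata 0..size-1 per dimension ...
    strata = np.column_stack([rng.permutation(size) for _ in range(d)])
    # ... plus a uniform random position inside each stratum, scaled to [0, 1)
    u = (strata + rng.random((size, d))) / size
    return lower + (upper - lower) * u

X = latin_hypercube([-1, -1], [1, 1], size=10, seed=123)
```

Unlike a grid, every one of the ten points has a distinct value in each dimension, and unlike plain random sampling, no region of any single dimension is left empty.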

Program Code: Steps (S-1) and (S-2)

Steps (S-1) and (S-2) are implemented as follows:

Example: Modifying the initial design size

Arbitrary initial design sizes can be generated by modifying the \(\texttt {size}\) argument of the \(\texttt {designControl}\) list:

Here is the full code for starting \(\texttt {spot}\) with an initial design of size five:

Because the \(\texttt {lower}\) bound was set to \(\texttt {(-1, -1)}\), a two-dimensional problem is defined, i.e., \(f(x_1,x_2)= x_1^2 + x_2^2\). The result from this \(\texttt {spot}\) run is stored as a list in the variable \(\texttt {return}\).

Variable types are assumed to be \(\texttt {numeric}\), which is the default type if no other type is specified. Type information, which is available from \(\texttt {config\$types}\), is used to transform the variables. The function \(\texttt {spot}\) can handle the data types \(\texttt {numeric}\), \(\texttt {integer}\), and \(\texttt {factor}\). The function \(\texttt {repairNonNumeric}\) maps non-numerical values to \(\texttt {integers}\).
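Type handling can be sketched as follows, under the assumption that repairing a non-numeric variable means rounding it to the nearest integer code. The Python function repair_non_numeric and the factor levels are hypothetical illustrations, not SPOT's R code.

```python
import numpy as np

def repair_non_numeric(x, types):
    """Round every column whose type is not 'numeric' (i.e., 'integer' or
    'factor'), so that these variables only take integer codes."""
    x = np.array(x, dtype=float)          # copy; the caller's matrix is not modified
    for j, t in enumerate(types):
        if t != "numeric":
            x[:, j] = np.round(x[:, j])
    return x

factor_levels = ["relu", "tanh", "sigmoid"]   # hypothetical factor, coded 0, 1, 2
X = np.array([[0.013, 31.7, 1.4],
              [0.200, 64.2, 0.6]])
Xr = repair_non_numeric(X, ["numeric", "integer", "factor"])
activations = [factor_levels[int(v)] for v in Xr[:, 2]]   # ['tanh', 'tanh']
```

The optimizer can thus work in a continuous space, while the objective function only ever sees valid integer values and factor levels.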

- (S-3) Evaluation of the Initial Design. Using \(\texttt {objectiveFunctionEvaluation}\), the objective function \(\texttt {fun}\) is evaluated on the initial design matrix \(\texttt {x}\).

In addition to the new solutions \(\texttt {xnew}\), a matrix of already known solutions can be passed to the function \(\texttt {objectiveFunctionEvaluation}\). It is used to determine whether the Random Number Generator (RNG) seeds for new solutions need to be incremented.

! Transformation of Variables

If variable transformation functions are defined, the function \(\texttt {transformX}\) is applied to the parameters during the execution of the function \(\texttt {objectiveFunctionEvaluation}\).

The function \(\texttt {objectiveFunctionEvaluation}\) returns the matrix \(\texttt {y}\).

- (S-4) Imputation: Handling Missing Values. The feasibility of the y-matrix is checked. Methods for handling \(\texttt {NA}\) and infinite y-values, which are available via the function \(\texttt {imputeY}\), are applied.
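One possible imputation rule is sketched below in Python. It is a hypothetical example, not necessarily what \(\texttt {imputeY}\) does by default: non-finite values (NaN, inf, -inf) are replaced by the worst finite value plus a penalty, so that failed evaluations look unattractive to the surrogate.

```python
import numpy as np

def impute_y(y, penalty=1.0):
    """Replace NaN and infinite entries by the worst (largest) finite value
    plus a penalty; minimization is assumed."""
    y = np.array(y, dtype=float)
    bad = ~np.isfinite(y)
    if bad.all():
        raise ValueError("no finite objective values to impute from")
    y[bad] = y[~bad].max() + penalty
    return y

y_imputed = impute_y([0.3, np.nan, 1.2, np.inf])   # NaN and inf become 1.2 + 1.0
```

Treating -inf as a failure is a design choice of this sketch; in a minimization setting one could also argue for keeping it.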

The spot loop starts after the initial phase. The function \(\texttt {spotLoop}\) is called.

Program Code: Steps (S-3) and (S-4)

Steps (S-3) and (S-4) are implemented as follows:

5.3 The Function \(\texttt {spotLoop}\)

- (S-5) Calling the spotLoop function. After the initial phase is finished, the function \(\texttt {spotLoop}\) is called, which manages the main loop. It is implemented as a stand-alone function, because it can be called separately, e.g., to continue interrupted experiments. With this mechanism, \(\texttt {spot}\) provides a convenient way for continuing experiments on different computers or extending existing experiments, e.g., if the results are inconclusive or a pre-experimental study should be performed first.

Example: Continue existing experiments

The studies in Sects. 5.8.1, 5.8.2, and 5.8.3 start with a relatively small pre-experimental design. Results from the pre-experimental tests are combined with results from the full experiment.

- (S-6) Consistency Check and Initialization. Because \(\texttt {spotLoop}\) can be used to continue an interrupted \(\texttt {spot}\) run, it performs a consistency check before the main loop is started.

- (S-7) Imputation. The function defined by the argument \(\texttt {control\$yImputation\$handleNAsMethod}\) is called to handle \(\texttt {NA}\)s, \(\texttt {Inf}\)s, etc. This is necessary here, because \(\texttt {spotLoop}\) can be used as an entry point to continue an interrupted \(\texttt {spot}\) optimization run. How to continue existing \(\texttt {spot}\) runs is explained in the \(\texttt {spotLoop}\) documentation.

- (S-8) Counter and Log Data. Furthermore, counters and logging variables are initialized. The matrix \(\texttt {yBestVec}\) stores the best function value found so far. It is initialized with the minimum value of the objective function on the initial design. Note that \(\texttt {ySurr}\), which keeps track of the objective function values on the surrogate \(\mathcal {S}\), contains \(\texttt {NA}\)s, because no surrogate has been built yet.

Program Code: Steps (S-5) to (S-8)

5.4 Entering the Main Loop

-

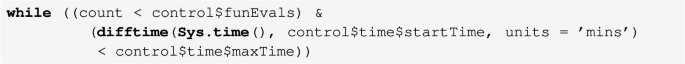

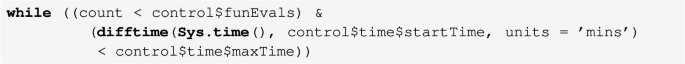

(S-9)

Termination Criteria, Conditions. The main loop is entered as follows:

Two termination criteria are implemented. The main loop continues only as long as

  a. the number of objective function evaluations is smaller than \(\texttt {funEvals}\), and
  b. the elapsed time is smaller than \(\texttt {maxTime}\).
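The two termination conditions and the bookkeeping of the best value found so far (cf. \(\texttt {yBestVec}\) in step (S-8)) can be sketched in Python. The function run_loop, its arguments, the random proposal, and the use of seconds for the time limit are assumptions of this sketch, not SPOT's interface.

```python
import time
import numpy as np

def run_loop(propose, evaluate, y_init, fun_evals=20, max_time=np.inf):
    """Iterate while BOTH conditions hold: (a) evaluations < fun_evals and
    (b) elapsed time < max_time (seconds in this sketch)."""
    y = list(y_init)
    y_best_vec = [min(y)]             # best value on the initial design
    start = time.monotonic()
    while len(y) < fun_evals and (time.monotonic() - start) < max_time:
        y.append(evaluate(propose()))
        y_best_vec.append(min(y))     # best value found so far
    return y, y_best_vec

rng = np.random.default_rng(7)
y, best = run_loop(
    propose=lambda: rng.uniform(-1.0, 1.0, size=2),   # hypothetical proposal: random point
    evaluate=lambda x: float(np.sum(x ** 2)),         # sphere function
    y_init=[0.8, 0.5, 0.9],
    fun_evals=10,
)
```

Counting the initial design against the evaluation budget means that, with three initial values and fun_evals = 10, seven further evaluations are performed.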

- (S-10) Subset Selection for the Surrogate. Surrogates can be built with the full or a reduced set of available \(\texttt {x}\)- and \(\texttt {y}\)-values. A subset selection method, which is defined via \(\texttt {control\$subsetSelect}\), can be applied before the surrogate \(\mathcal {S}\) is built. If \(\texttt {subsetSelect}\) is set to \(\texttt {selectAll}\), which is the default, all points are used. Fitting the surrogate \(\mathcal {S}\) with only a subset of the available points may appear counterintuitive, but can be reasonable, e.g., if the sample points are too close to each other or if the problem changes dynamically.
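Subset selection can be sketched as a function that maps (x, y) to a possibly smaller (x, y). In the Python sketch below, select_all corresponds to the default described above, while select_spread is a hypothetical alternative (not a SPOT function) that drops near-duplicate points, one of the situations in which a subset can be reasonable.

```python
import numpy as np

def select_all(x, y):
    """Default behaviour described in the text: use every evaluated point."""
    return x, y

def select_spread(x, y, min_dist=1e-3):
    """Hypothetical alternative: visit points from best to worst y and skip a
    point if it lies within min_dist of a better point that was already kept."""
    keep = []
    for i in np.argsort(y):
        if all(np.linalg.norm(x[i] - x[k]) >= min_dist for k in keep):
            keep.append(i)
    keep = np.sort(keep)
    return x[keep], y[keep]

x = np.array([[0.0, 0.0], [0.0, 0.0005], [0.5, 0.5]])
y = np.array([0.2, 0.1, 0.7])
xs, ys = select_spread(x, y)   # keeps rows 1 and 2: row 0 nearly duplicates row 1
```

Removing near-duplicates also improves the conditioning of interpolating surrogates such as Kriging, whose correlation matrix becomes nearly singular when points almost coincide.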

- (S-11) Fitting the Surrogate. SPOT can use arbitrary regression models as surrogates, e.g., RF or GP models (Kriging).

The arguments \(\texttt {x}\) and \(\texttt {y}\) are mandatory for the function \(\texttt {model}\). The \(\texttt {model}\) function must return a fit object that provides a \(\texttt {predict}\) method. A Gaussian process model, which performs well in many situations and can handle both discrete and continuous hyperparameters, is SPOT's default model. Random forest is less suited as a surrogate for continuous parameters, because it approximates the response in a piecewise-constant manner. The function \(\texttt {control\$model}\) is applied to the \(\texttt {x}\)- and \(\texttt {y}\)-matrices. By default, the model is fitted to the data with the function \(\texttt {buildKriging}\).

Program Code: Steps (S-9) to (S-11)

Steps (S-9) to (S-11) are implemented as follows:

Background: Surrogates

There is a naming convention for surrogates in spot: function names should start with the prefix “\(\texttt {build}\)”. Surrogates in spot use the same interface. They accept the arguments \(\texttt {x}\) and \(\texttt {y}\), which must be matrices, and the list \(\texttt {control}\). They fit a model; e.g., \(\texttt {buildLM}\) uses \(\texttt {lm}\), which provides a \(\texttt {predict}\) method. Each model returns an object of the corresponding model class, here \(\texttt {"spotLinearModel"}\), with a \(\texttt {predict}\) method. The return value is implemented as a list with the entries from Table 4.5.

Note that \(\texttt {buildLM}\) is a very simple model. SPOT's workhorse is a Kriging model, which is fitted via Maximum Likelihood Estimation (MLE). \(\texttt {buildKriging}\) is explained in Sect. 4.6.5.
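A surrogate following this interface can be sketched with base R's `lm`. The function `buildMyLM` and the class `"myLinearModel"` are hypothetical and are not SPOT's `buildLM`; the returned list holds only the `lm` fit and the training data, not the entries of Table 4.5.

```r
# Hypothetical surrogate with the interface described in the text:
# x and y are matrices, control is a list; the result has a predict method.
buildMyLM <- function(x, y, control = list()) {
  colnames(x) <- paste0("x", seq_len(ncol(x)))   # stable column names for lm
  df <- data.frame(x, y = y[, 1])
  fit <- list(fit = lm(y ~ ., data = df), x = x, y = y)
  class(fit) <- "myLinearModel"
  fit
}

# S3 predict method: newdata is a matrix with the same columns as x
predict.myLinearModel <- function(object, newdata, ...) {
  newdata <- as.data.frame(newdata)
  colnames(newdata) <- paste0("x", seq_len(ncol(newdata)))
  list(y = as.numeric(predict(object$fit, newdata = newdata)))
}

x <- matrix(c(0, 1, 2, 3), ncol = 1)
y <- matrix(2 * x[, 1] + 1, ncol = 1)   # y = 2x + 1
fit <- buildMyLM(x, y)
predict(fit, matrix(4, ncol = 1))$y     # 9 (up to floating-point rounding)
```

Returning predictions in a named list entry (`y`) leaves room for further entries, such as a standard deviation, that infill criteria may need.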


(S-12)

Objective Function on the Surrogate (Predict). After building the surrogate, the fitted surrogate model \(\texttt {modelFit}\) is available. It is used to define the function \(\texttt {funSurrogate}\), which works as an objective function on the surrogate \(\mathcal {S}\): \(\texttt {funSurrogate}\) does not evaluate solutions on the original function f, but on the surrogate \(\mathcal {S}\), because it is generated from \(\texttt {modelFit}\) via \(\texttt {predict}\). Searching with this function, \(\texttt {spot}\) looks for the hyperparameter configuration that is predicted to result in the best possible model quality.
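The idea of an objective function on the surrogate can be sketched with a closure that captures the fitted model and only calls `predict`. `makeFunSurrogate` is a hypothetical name, and a quadratic `lm` fit stands in for the surrogate.

```r
# Hypothetical helper: wrap a fitted model into an objective function on the
# surrogate. Only predict() is called; the original function f is never evaluated.
makeFunSurrogate <- function(modelFit) {
  function(x) {
    # x: matrix of candidate configurations, one per row
    newdata <- as.data.frame(x)
    colnames(newdata) <- paste0("x", seq_len(ncol(x)))
    matrix(predict(modelFit, newdata = newdata), ncol = 1)
  }
}

# Stand-in surrogate: a quadratic lm fitted to samples of f(x) = (x - 2)^2
df <- data.frame(x1 = c(0, 1, 2, 3, 4))
df$y <- (df$x1 - 2)^2
modelFit <- lm(y ~ x1 + I(x1^2), data = df)
funSurrogate <- makeFunSurrogate(modelFit)
funSurrogate(matrix(c(2, 5), ncol = 1))  # approximately 0 and 9
```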

Program Code: Step (S-12)

Step (S-12) is implemented as follows:

Background: Surrogate and Infill Criteria

The function \(\texttt {evaluateModel}\) generates an objective function that predicts function values on the surrogate. Some surrogate optimization procedures do not use the function values from the surrogate \(\mathcal {S}\) directly; they use an infill criterion instead.

Definition 4.2 (Infill Criterion, Acquisition Function) Infill criteria are methods that guide the exploration of the surrogate. They combine information from the predicted mean and the predicted variance generated by the GP model. In BO, the term “acquisition function” is used for functions that implement infill criteria.

For example, the function \(\texttt {buildKriging}\) provides three return values that can be used to generate elementary infill criteria. These return values are specified via the argument \(\texttt {target}\), which is a vector of strings. Each string specifies a value to be predicted, e.g., \(\texttt {"y"}\) for mean, \(\texttt {"s"}\) for standard deviation, and \(\texttt {"ei"}\) for expected improvement. In addition to these elementary values, spot provides the function \(\texttt {infillCriterion}\) to specify user-defined criteria. The function \(\texttt {evaluateModel}\) that manages the infill criteria in \(\texttt {spot}\) is shown below.

Example: Expected Improvement

EI is a popular infill criterion, which was defined in Eq. (4.10). It is calculated as shown in Eq. (4.11) and can be selected in \(\texttt {evaluateModel}\) via \(\texttt {modelControl = list(target = c("ei"))}\). The following code shows an EI implementation that returns a vector with the negative logarithm of the expected improvement values, \( - \log _{10}(\text {EI})\). The function \(\texttt {expectedImprovement}\) is called if \(\texttt {"ei"}\) is selected as a \(\texttt {target}\), e.g., spot(,fun,l,u,control=list(modelControl=list(target="ei"))).
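A stand-alone sketch of the EI computation of Eq. (4.10) in base R, returning \(-\log_{10}(\text{EI})\) so that a minimizing optimizer prefers large improvements. `negLog10EI` is a hypothetical name, not SPOT's `expectedImprovement`; the predicted mean `yhat` and standard deviation `s` are assumed to come from the surrogate.

```r
# Sketch of EI for minimization, returned as -log10(EI).
# yhat, s: predicted mean and standard deviation; ymin: best observed value.
negLog10EI <- function(yhat, s, ymin) {
  ei <- numeric(length(yhat))
  pos <- s > 0
  z <- (ymin - yhat[pos]) / s[pos]
  ei[pos] <- (ymin - yhat[pos]) * pnorm(z) + s[pos] * dnorm(z)
  # EI is zero where s == 0, which maps to +Inf (the worst value) after -log10
  -log10(ei)
}

r <- negLog10EI(yhat = c(1, 0.5, 2), s = c(0.1, 0.2, 0), ymin = 1)
which.min(r)  # 2: the point with the largest expected improvement
```

The logarithm also compresses the range of EI values, which can span many orders of magnitude across the search space.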


(S-13)

Multiple Starting Points. If the current best point is feasible, it is used as a starting point for the search on the surrogate \(\mathcal {S}\). Because the surrogate can be multi-modal, multiple starting points are recommended. \(\texttt {spot}\) implements this multi-start mechanism in the function \(\texttt {getMultiStartPoints}\).

In addition to the current best point, further starting points can be used. Their number is specified by \(\texttt {multiStart}\): if \(\texttt {multiStart} > 1\), additional starting points are used. The \(\texttt {design}\) function, which was used for generating the initial design in Sect. 4.5.2, is used here to generate the additional points.
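The multi-start idea can be sketched as the current best point followed by `multiStart - 1` further points. `getStartPoints` is hypothetical; uniform random sampling stands in for SPOT's `design` function, and the feasibility check is omitted.

```r
# Hypothetical sketch: current best point plus (multiStart - 1) random points.
# Assumption: uniform sampling within the bounds replaces spot's design function.
getStartPoints <- function(xbest, lower, upper, multiStart = 1) {
  starts <- matrix(xbest, nrow = 1)
  if (multiStart > 1) {
    k <- length(lower)
    extra <- matrix(runif((multiStart - 1) * k), ncol = k)
    extra <- sweep(sweep(extra, 2, upper - lower, "*"), 2, lower, "+")
    starts <- rbind(starts, extra)
  }
  starts
}

set.seed(1)
x0 <- getStartPoints(xbest = c(0.5, -1), lower = c(-5, -5), upper = c(5, 5),
                     multiStart = 3)
dim(x0)  # 3 2
```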


(S-14)

Optimization on the Surrogate. The search on the surrogate \(\mathcal {S}\) can be performed next. The simplest optimizer is \(\texttt {optimLHD}\), which selects the point with the smallest function value from a relatively large set of LHD points. Other optimizers are available, e.g., \(\texttt {optimLBFGSB}\) or \(\texttt {optimDE}\). To find the next candidate solution, the predicted value of the surrogate is optimized via Differential Evolution (Storn and Price 1997). Other global optimization algorithms can be used as well. Even RS would be a feasible strategy.

> Mandatory Parameters

Optimization functions must use the same interface as \(\texttt {spot}\), i.e., function(x, fun, lower, upper, control = list(), ...). The arguments \(\texttt {fun}\), \(\texttt {lower}\), and \(\texttt {upper}\) are mandatory for optimization functions. This is similar to the interface of R's general-purpose optimization function \(\texttt {optim}\).
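A minimal optimizer with this interface can be sketched in base R. `optimRandomSample` is hypothetical; uniform random sampling stands in for the Latin hypercube design of `optimLHD`, and the returned entries `xbest`, `ybest`, and `count` mirror the names used in the text.

```r
# Hypothetical optimizer with the spot-style interface
# function(x, fun, lower, upper, control, ...). Uniform random sampling stands
# in for the Latin hypercube design used by optimLHD.
optimRandomSample <- function(x = NULL, fun, lower, upper, control = list(), ...) {
  n <- if (is.null(control$funEvals)) 1000 else control$funEvals
  k <- length(lower)
  cand <- matrix(runif(n * k), ncol = k)
  cand <- sweep(sweep(cand, 2, upper - lower, "*"), 2, lower, "+")  # scale to bounds
  if (!is.null(x)) cand <- rbind(x, cand)  # include given starting points
  y <- fun(cand, ...)                       # fun maps a matrix to a column of values
  best <- which.min(y)
  list(xbest = cand[best, , drop = FALSE], ybest = y[best], count = nrow(cand))
}

set.seed(2)
sphere <- function(x) matrix(rowSums(x^2), ncol = 1)
res <- optimRandomSample(fun = sphere, lower = c(-1, -1), upper = c(1, 1),
                         control = list(funEvals = 500))
res$ybest  # a small value close to 0
```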

As described in Sect. 4.5.4, the optimization on the surrogate \(\mathcal {S}\) can be performed with or without pre-defined starting points. We describe a search without starting points first.


(S-14a)

Search Without Starting Points. If no starting points for the search are provided, the optimizer, which is specified via \(\texttt {control\$optimizer}\), is called.

The result from this optimization is stored in the list \(\texttt {optimResSurr}\). The best point found on the surrogate is \(\texttt {optimResSurr\$xbest}\), and the corresponding y-value is \(\texttt {optimResSurr\$ybest}\). Alternatively, the search on the surrogate can be performed with starting points.


(S-14b)

Search With Starting Points. If starting points are used for the optimization on the surrogate, these are passed via \(\texttt {x = x0}\) to the optimizer. Several starting points result in several \(\texttt {optimResSurr\$xbest}\) and \(\texttt {optimResSurr\$ybest}\) values from which the best, i.e., the point with the smallest y-value, is selected.

For example, if \(\texttt {multiStart = 2}\) is selected, the current best and one random point will be used.

The optimization on the surrogate \(\mathcal {S}\) is performed separately for each starting point, and the matrix \(\texttt {xnew}\) is determined from these multi-start results.
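The multi-start search can be sketched as one local search per starting point, keeping the result with the smallest predicted value. `searchFromStarts` is hypothetical, and base R's `optim` with `L-BFGS-B` stands in for the optimizer configured in `spot`.

```r
# Sketch of (S-14b): one local search per starting point, keep the best result.
# Assumption: base R optim() with L-BFGS-B replaces spot's configured optimizer.
searchFromStarts <- function(x0, funSurrogate, lower, upper) {
  results <- lapply(seq_len(nrow(x0)), function(i) {
    optim(par = x0[i, ], fn = function(p) funSurrogate(matrix(p, nrow = 1))[1, 1],
          method = "L-BFGS-B", lower = lower, upper = upper)
  })
  values <- vapply(results, function(r) r$value, numeric(1))
  best <- which.min(values)
  list(xnew = matrix(results[[best]]$par, nrow = 1), ySurrNew = values[best])
}

# Multi-modal stand-in surrogate: local minima near x = 1.5 and x = -1.5,
# the one near -1.5 being lower
funSurrogate <- function(x) matrix((x[, 1]^2 - 2.25)^2 + 0.5 * x[, 1], ncol = 1)
x0 <- matrix(c(1.5, -1.5), ncol = 1)   # e.g., current best and one further point
res <- searchFromStarts(x0, funSurrogate, lower = -3, upper = 3)
res$xnew  # close to -1.5
```

A search started only at 1.5 would stay in the inferior basin, which is why several starting points are useful on a multi-modal surrogate.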


(S-15)

Compile Results from the Search on the Surrogate. The function value of \(\texttt {xnew}\) (from (S-14a) or (S-14b)) is saved as \(\texttt {ySurrNew}\). Note that this function value depends on \(\texttt {control\$modelControl\$target}\), e.g., \(\texttt {"y"}\), \(\texttt {"s2"}\), or \(\texttt {"ei"}\), i.e., the optimization on the surrogate can be based on the predicted value \(\texttt {"y"}\), on a combination of \(\texttt {"y"}\) and the variance, or on the EI \(\texttt {"ei"}\).


(S-16)

Noise, Repeats, and Consistency Checks for New Points. After the new solution candidate \(\texttt {xnew}\) and its associated function value on the surrogate \(\texttt {ySurrNew}\) have been determined, \(\texttt {spot}\) checks for duplicates and determines the number of replicates. This step treats noisy and deterministic objective functions in a different way.

If \(\texttt {control\$noise == TRUE}\), then replicates are allowed, i.e., a single solution x can be evaluated several times. If \(\texttt {control\$noise == FALSE}\), then every solution is evaluated only once.

Program Code: Steps (S-13) to (S-16)

Steps (S-13) to (S-16) are implemented as follows:

Background: Duplicates and Replicates

The function \(\texttt {duplicateAndReplicateHandling}\) checks whether the new solution \(\texttt {xnew}\) has been evaluated before. In this case, it is taken as it is and no additional evaluations are performed. If \(\texttt {xnew}\) was not evaluated before, it will be evaluated; the number of evaluations is defined via \(\texttt {control\$replicates}\). Duplicate and replicate handling in \(\texttt {spot}\) depends on the setting of the parameter \(\texttt {noise}\). If its value is \(\texttt {TRUE}\), a test is performed whether \(\texttt {xnew}\) is new or has been evaluated before. If \(\texttt {xnew}\) is new (was not evaluated before), it should be evaluated \(\texttt {replicates}\) times. Assume \(\texttt {control\$replicates <- 3}\), i.e., three initial replicates are required, and \(\texttt {xnew}\) was not evaluated before. Then two additional evaluations should be done, i.e., \(\texttt {xtmp}\) contains two entries, which are combined with the one already existing entry in \(\texttt {xnew}\).

If the parameter \(\texttt {noise}\) has the value \(\texttt {FALSE}\), two cases have to be distinguished. First, if \(\texttt {xnew}\) was not evaluated before, it is evaluated once (and not \(\texttt {replicates}\) times), because additional evaluations would deterministically generate the same result.

Second, if \(\texttt {xnew}\) was evaluated before, a warning is issued and a randomly generated solution is used for each entry in \(\texttt {xnew}\).

Finally, a type check is performed: values produced by the optimizer for parameters of a non-numeric type (e.g., integer or factor) are rounded.
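The rules above can be sketched in base R as follows. `handleDuplicates` is a hypothetical helper that only mirrors the described behavior; it is not SPOT's `duplicateAndReplicateHandling`, and exact row equality is assumed as the test for "evaluated before".

```r
# Hypothetical helper mirroring the duplicate/replicate rules described above.
handleDuplicates <- function(xnew, x, noise, replicates, lower, upper) {
  out <- NULL
  for (i in seq_len(nrow(xnew))) {
    row <- xnew[i, , drop = FALSE]
    seen <- any(apply(x, 1, function(r) all(r == row)))  # evaluated before?
    if (noise) {
      # noisy: a new point gets `replicates` evaluations, a known point is
      # taken as it is (one evaluation, no extra replicates)
      n <- if (seen) 1 else replicates
      out <- rbind(out, row[rep(1, n), , drop = FALSE])
    } else if (!seen) {
      out <- rbind(out, row)  # deterministic and new: evaluate exactly once
    } else {
      # deterministic and known: re-evaluation is useless, use a random point
      warning("xnew was evaluated before; using a random point instead")
      out <- rbind(out, matrix(runif(length(lower), lower, upper), nrow = 1))
    }
  }
  out
}

x <- matrix(c(0, 0, 1, 1), ncol = 2, byrow = TRUE)   # already evaluated points
nrow(handleDuplicates(matrix(c(2, 2), nrow = 1), x, noise = TRUE,
                      replicates = 3, lower = c(0, 0), upper = c(2, 2)))  # 3
```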


(S-17)

OCBA for Known Points. OCBA is called next if \(\texttt {OCBA}\) and \(\texttt {noise}\) are both set to \(\texttt {TRUE}\): the function \(\texttt {repeatsOCBA}\) returns a vector that specifies how often each known solution should be re-evaluated (or replicated). This function can spend a budget of \(\texttt {control\$OCBABudget}\) additional evaluations. The solutions proposed by \(\texttt {repeatsOCBA}\) are added to the set of new x candidates \(\texttt {xnew}\). Because OCBA requires estimates of the variance, it is based on already evaluated solutions and their function values, i.e., on the \(\texttt {x}\) and \(\texttt {y}\) values, respectively.

Program Code: Step (S-17)

Step (S-17) is implemented as follows:

Background: Optimal Computational Budget Allocation

OCBA is an efficient approach to the “general ranking and selection problem” if the objective function is noisy (Chen 2010; Bartz-Beielstein et al. 2011). It allocates function evaluations unevenly, in order to identify the best solutions and to reduce the total optimization costs.

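Theorem 4.1 Given a total number of optimization samples N to be allocated to k competing solutions whose performance is depicted by random variables with means \(\bar{y}_i\) (\(i=1, 2, \ldots , k\)) and finite variances \(\sigma _i^2\), respectively, as \(N \rightarrow \infty \), the Approximate Probability of Correct Selection (APCS) can be asymptotically maximized when

$$\begin{aligned} \frac{N_i}{N_j} &= \left( \frac{\sigma _i / \delta _{b,i}}{\sigma _j / \delta _{b,j}} \right) ^2 , \quad i,j \in \{1, 2, \ldots , k\}, \text { and } i \ne j \ne b, \\ N_b &= \sigma _b \sqrt{\sum _{i=1, i \ne b}^{k} \frac{N_i^2}{\sigma _i^2}} , \end{aligned}$$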

where \(N_i\) is the number of replications allocated to solution i, \(\delta _{b,i} = \bar{y}_b - \bar{y}_i\), and \(\bar{y}_b \le \min _{i\ne b} \bar{y}_i\) (Chen 2010).
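The allocation rule of Theorem 4.1 can be sketched as a function that returns the asymptotic share of a total budget N for each solution. `ocbaAllocation` is hypothetical and not SPOT's `repeatsOCBA`; it assumes distinct means and positive standard deviations, and a practical implementation would additionally round to integers and account for evaluations already spent.

```r
# Sketch of the OCBA allocation rule of Theorem 4.1 for minimization.
# Returns real-valued shares of a total budget N (asymptotic rule, no rounding).
# Assumes distinct means and positive standard deviations.
ocbaAllocation <- function(ybar, sigma, N) {
  b <- which.min(ybar)                                 # current best solution
  others <- setdiff(seq_along(ybar), b)
  delta <- ybar[b] - ybar[others]                      # delta_{b,i}
  r <- (sigma[others] / delta)^2                       # N_i proportional to r_i
  rb <- sigma[b] * sqrt(sum(r^2 / sigma[others]^2))    # N_b on the same scale
  scale <- N / (sum(r) + rb)
  alloc <- numeric(length(ybar))
  alloc[others] <- scale * r
  alloc[b] <- scale * rb
  alloc
}

alloc <- ocbaAllocation(ybar = c(1.0, 1.2, 2.0), sigma = c(0.5, 0.5, 0.5), N = 100)
round(alloc, 1)  # the best solution and its close competitor get most of the budget
```

The clearly inferior third solution receives only a small share, which illustrates the uneven allocation described above.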


(S-18)

Evaluating New Solutions. To avoid exceeding the available budget of objective function evaluations, which is specified via \(\texttt {control\$funEvals}\), a check is performed. Solution candidates are passed to the function \(\texttt {objectiveFunctionEvaluation}\), which calculates the associated objective function values \(\texttt {ynew}\) on the function \(\texttt {fun}\).


(S-19)

Imputation. Because the evaluation of solution candidates might result in infinite (\(\texttt {Inf}\)) or Not-a-Number (\(\texttt {NaN}\)) \(\texttt {ynew}\) values, the function \(\texttt {imputeY}\), which handles such non-numeric values, is called.
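One possible imputation rule can be sketched in a few lines. `imputeNonFinite` is hypothetical, and the rule itself (replace every non-finite value by the worst finite value plus a penalty) is an assumption for illustration, not necessarily what `imputeY` does; it also treats `-Inf` as a failed evaluation.

```r
# Hypothetical imputation rule (not necessarily what imputeY does):
# replace non-finite values by the worst finite value plus a penalty.
imputeNonFinite <- function(y, penalty = 1) {
  bad <- !is.finite(y)          # TRUE for Inf, -Inf, NaN and NA
  if (any(bad) && any(!bad)) y[bad] <- max(y[!bad]) + penalty
  y
}

imputeNonFinite(c(1, Inf, 3, NaN))  # 1 4 3 4
```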


(S-20)

Update Counter and Log Data. Next, the counter \(\texttt {count}\) and the log \(\texttt {ySurr}\), which stores the function values on the surrogate \(\mathcal {S}\), are updated.

Calculation of the progress and preparation of progress plots conclude the main loop. The last step of the main loop compiles the list \(\texttt {return}\), which is returned to the \(\texttt {spot}\) function.


(S-21)

Reporting after the While-Loop. After the while loop is finished, results are compiled. Some objective functions return several values (Multi Objective Optimization (MOO)). These values are stored as \(\texttt {logInfo}\), because the default \(\texttt {spot}\) function uses only one objective function value. This mechanism enables \(\texttt {spot}\) to handle MOO problems. The values of the transformed parameters are stored as \(\texttt {xt}\). An important feature for noisy optimization is that OCBA can be used for selecting the best value: the function \(\texttt {ocbaRanking}\) computes the best \(\texttt {x}\) and \(\texttt {y}\) values, \(\texttt {xBestOcba}\) and \(\texttt {yBestOcba}\), respectively, where \(\texttt {yBestOcba}\) is the mean value of the corresponding x-parameter setting \(\texttt {xBestOcba}\).
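The final mean-based selection can be sketched in base R: replicated evaluations are averaged per configuration, and the configuration with the smallest mean is returned. `bestByMean` is hypothetical and does not reproduce the OCBA logic of `ocbaRanking`; identifying identical rows through `paste` assumes exactly repeated parameter values.

```r
# Sketch: average replicated evaluations per configuration, pick the best mean.
bestByMean <- function(x, y) {
  key <- apply(x, 1, paste, collapse = "_")   # identify identical rows
  means <- tapply(y, key, mean)
  bestKey <- names(means)[which.min(means)]
  list(xBest = x[match(bestKey, key), , drop = FALSE],
       yBest = unname(means[bestKey]))
}

x <- matrix(c(1, 1, 2, 2, 3), ncol = 1)
y <- c(0.9, 1.3, 1.0, 1.1, 1.2)
bestByMean(x, y)$yBest  # 1.05
```

Note that the single best observation (0.9 at x = 1) is not selected: averaging over replicates protects against picking a configuration that was merely lucky once.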

Program Code: Steps (S-18) to (S-22)

Steps (S-18) to (S-22) are implemented as follows:

The function \(\texttt {spotLoop}\) ends here and the final steps of the main function \(\texttt {spot}\), which are summarized in the following section, are executed.

5.5 Final Steps

To exploit the region around the best solution found by SMBO in the main loop (\(\texttt {spotLoop}\)), \(\texttt {SPOT}\) allows a final local optimization step. This step is started if \(\texttt {control\$directOptControl\$funEvals}\) is larger than zero. If the best solution from the surrogate, \(\texttt {xbest}\), satisfies the inequality constraints, it is used as the starting point for the local optimizer \(\texttt {control\$directOpt}\), e.g., \(\texttt {directOpt = optimNLOPTR}\) or \(\texttt {directOpt = optimLBFGSB}\).

Results from the direct optimization are appended to the matrices of the \(\texttt {x}\) and \(\texttt {y}\) values obtained by SMBO. SPOT returns the gathered information in a list (Table 4.6). Because SPOT focuses on reliability and reproducibility, it is not the fastest algorithm.
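The local refinement step can be sketched as a bounded local search started at `xbest`. `localRefine` is hypothetical; base R's `optim` with `L-BFGS-B` stands in for `control$directOpt`, and its `maxit` limits iterations, which only approximates an evaluation budget.

```r
# Sketch of the final local refinement: a bounded local search started at xbest.
# Assumption: optim() with L-BFGS-B stands in for control$directOpt; maxit limits
# iterations, which only approximates an evaluation budget.
localRefine <- function(xbest, fun, lower, upper, funEvals) {
  res <- optim(par = xbest, fn = fun, method = "L-BFGS-B",
               lower = lower, upper = upper, control = list(maxit = funEvals))
  list(xbest = res$par, ybest = res$value, counts = res$counts)
}

f <- function(x) sum((x - c(1, -2))^2)
r <- localRefine(xbest = c(0, 0), fun = f, lower = c(-5, -5), upper = c(5, 5),
                 funEvals = 50)
r$xbest  # close to c(1, -2)
```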

6 Kriging

Basic elements of the Kriging-based surrogate optimization such as interpolation, expected improvement, and regression are presented. The presentation follows the approach described in Forrester et al. (2008a).

6.1 The Kriging Model

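Consider sample data \(\textbf{X}\) and \(\textbf{y}\) from n locations that are available in matrix form: \(\textbf{X}\) is a \((n \times k)\) matrix, where k denotes the problem dimension, and \(\textbf{y}\) is a \((n\times 1)\) vector. The observed responses \(\textbf{y}\) are considered as if they are from a stochastic process, which will be denoted as

$$\begin{aligned} \textbf{Y} = \left( Y(\textbf{x}^{(1)}), \ldots , Y(\textbf{x}^{(n)}) \right) ^T . \end{aligned}$$

The set of random vectors (also referred to as a “random field”) has a mean of \(\textbf{1} \mu \), which is a \((n\times 1)\) vector. The random vectors are correlated with each other using the basis function expression

$$\begin{aligned} {\text {cor}}\left[ Y(\textbf{x}^{(i)}), Y(\textbf{x}^{(l)}) \right] = \exp \left( - \sum _{j=1}^{k} \theta _j \left| x_j^{(i)} - x_j^{(l)} \right| ^{p_j} \right) . \end{aligned}$$

The \((n \times n)\) correlation matrix of the observed sample data is

$$\begin{aligned} \mathbf {\Psi } = \begin{pmatrix} {\text {cor}}\left[ Y(\textbf{x}^{(1)}), Y(\textbf{x}^{(1)}) \right] & \cdots & {\text {cor}}\left[ Y(\textbf{x}^{(1)}), Y(\textbf{x}^{(n)}) \right] \\ \vdots & \ddots & \vdots \\ {\text {cor}}\left[ Y(\textbf{x}^{(n)}), Y(\textbf{x}^{(1)}) \right] & \cdots & {\text {cor}}\left[ Y(\textbf{x}^{(n)}), Y(\textbf{x}^{(n)}) \right] \end{pmatrix} . \end{aligned}$$(4.3)

Note: correlations depend on the absolute distances between sample points \(|x_j^{(i)} - x_j^{(l)}|\) and the parameters \(p_j\) and \(\theta _j\).

To estimate the values of \(\mathbf {\theta }\) and \(\textbf{p}\), they are chosen to maximize the likelihood of \(\textbf{y}\). The likelihood of the random vector can be expressed as

$$\begin{aligned} L\left( \textbf{Y} \mid \mu , \sigma \right) = \frac{1}{(2 \pi \sigma ^2)^{n/2} |\mathbf {\Psi }|^{1/2}} \exp \left[ - \frac{(\textbf{Y} - \textbf{1} \mu )^T \mathbf {\Psi }^{-1} (\textbf{Y} - \textbf{1} \mu )}{2 \sigma ^2} \right] , \end{aligned}$$

which can be expressed in terms of the sample data

$$\begin{aligned} L = \frac{1}{(2 \pi \sigma ^2)^{n/2} |\mathbf {\Psi }|^{1/2}} \exp \left[ - \frac{(\textbf{y} - \textbf{1} \mu )^T \mathbf {\Psi }^{-1} (\textbf{y} - \textbf{1} \mu )}{2 \sigma ^2} \right] \end{aligned}$$

and formulated as the log-likelihood:

$$\begin{aligned} \ln (L) = - \frac{n}{2} \ln (2 \pi ) - \frac{n}{2} \ln (\sigma ^2) - \frac{1}{2} \ln |\mathbf {\Psi }| - \frac{(\textbf{y} - \textbf{1} \mu )^T \mathbf {\Psi }^{-1} (\textbf{y} - \textbf{1} \mu )}{2 \sigma ^2} . \end{aligned}$$(4.4)

Setting the derivatives of the log-likelihood with respect to \(\mu \) and \(\sigma ^2\) to zero results in

$$\begin{aligned} \hat{\mu } = \frac{\textbf{1}^T \mathbf {\Psi }^{-1} \textbf{y}}{\textbf{1}^T \mathbf {\Psi }^{-1} \textbf{1}} \end{aligned}$$(4.5)

and

$$\begin{aligned} \hat{\sigma }^2 = \frac{(\textbf{y} - \textbf{1} \hat{\mu })^T \mathbf {\Psi }^{-1} (\textbf{y} - \textbf{1} \hat{\mu })}{n} . \end{aligned}$$(4.6)

Substituting (4.5) and (4.6) into (4.4) and removing constant terms leads to the concentrated log-likelihood:

$$\begin{aligned} \ln (L) \approx - \frac{n}{2} \ln (\hat{\sigma }^2) - \frac{1}{2} \ln |\mathbf {\Psi }| . \end{aligned}$$(4.7)

Note: To maximize \(\ln (L)\), optimal values of \(\mathbf {\theta }\) and \(\textbf{p}\) are determined numerically, because, unlike \(\hat{\mu }\) and \(\hat{\sigma }^2\), they cannot be obtained from (4.7) in closed form.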

6.2 Kriging Prediction

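For a new prediction \(\hat{y}\) at \(\textbf{x}\), the value of \(\hat{y}\) is chosen so that it maximizes the likelihood of the sample data \(\textbf{X}\) and the prediction, given the correlation parameters \(\mathbf {\theta }\) and \(\textbf{p}\). The observed data \(\textbf{y}\) is augmented with the new prediction \(\hat{y}\), which results in the augmented vector \(\tilde{\textbf{y}} = ( \textbf{y}^T, \hat{y})^T\). A vector of correlations between the observed data and the new prediction is defined as

$$\begin{aligned} \mathbf {\psi } = \left( {\text {cor}}\left[ Y(\textbf{x}^{(1)}), Y(\textbf{x}) \right] , \ldots , {\text {cor}}\left[ Y(\textbf{x}^{(n)}), Y(\textbf{x}) \right] \right) ^T . \end{aligned}$$

The augmented correlation matrix is constructed as

$$\begin{aligned} \tilde{\mathbf {\Psi }} = \begin{pmatrix} \mathbf {\Psi } & \mathbf {\psi } \\ \mathbf {\psi }^T & 1 \end{pmatrix} . \end{aligned}$$

Similar to (4.4), the log-likelihood of the augmented data is

$$\begin{aligned} \ln (L) = - \frac{n}{2} \ln (2 \pi ) - \frac{n}{2} \ln (\hat{\sigma }^2) - \frac{1}{2} \ln |\tilde{\mathbf {\Psi }}| - \frac{(\tilde{\textbf{y}} - \textbf{1} \hat{\mu })^T \tilde{\mathbf {\Psi }}^{-1} (\tilde{\textbf{y}} - \textbf{1} \hat{\mu })}{2 \hat{\sigma }^2} . \end{aligned}$$

The MLE for \(\hat{y}\) can be calculated as

$$\begin{aligned} \hat{y}(\textbf{x}) = \hat{\mu } + \mathbf {\psi }^T \mathbf {\Psi }^{-1} (\textbf{y} - \textbf{1} \hat{\mu }) . \end{aligned}$$(4.9)

Equation 4.9 reveals two important properties of the Kriging predictor.

- The basis function impacts the vector \(\mathbf {\psi }\), which contains the n correlations between the new point \(\textbf{x}\) and the observed locations. Values from the n basis functions are added to a mean base term \(\hat{\mu }\) with weightings \(\textbf{w} = \mathbf {\Psi }^{-1} (\textbf{y} - \textbf{1}\hat{\mu })\).

- The predictions interpolate the sample data. When calculating the prediction at the ith sample point, \(\textbf{x}^{(i)}\), the vector \(\mathbf {\psi }\) equals the ith column of \(\mathbf {\Psi }\), so \(\mathbf {\psi }^T \mathbf {\Psi }^{-1}\) is the ith unit vector. Hence, \(\hat{y}(\textbf{x}^{(i)}) = \hat{\mu } + (y^{(i)} - \hat{\mu }) = y^{(i)}\).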
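Both properties can be checked numerically with a small base-R sketch of the predictor in Eqs. (4.5) and (4.9). The helpers `corrMatrix` and `krigingPredict` are hypothetical; θ is fixed by hand and p = 2, so no MLE is performed.

```r
# Sketch of the interpolating Kriging predictor, Eqs. (4.5) and (4.9), for fixed
# theta and p = 2 (no MLE); shows that yhat(x^(i)) = y^(i) at the sample points.
corrMatrix <- function(A, B, theta, p = 2) {
  # correlations between the rows of A and the rows of B
  D <- matrix(0, nrow(A), nrow(B))
  for (j in seq_len(ncol(A))) D <- D + theta[j] * abs(outer(A[, j], B[, j], "-"))^p
  exp(-D)
}

krigingPredict <- function(X, y, xnew, theta, p = 2) {
  PsiInv <- solve(corrMatrix(X, X, theta, p))
  mu <- sum(PsiInv %*% y) / sum(PsiInv)           # Eq. (4.5)
  psi <- corrMatrix(X, xnew, theta, p)            # n x m correlations
  drop(mu + t(psi) %*% PsiInv %*% (y - mu))       # Eq. (4.9)
}

X <- matrix(c(0, 0.25, 0.5, 0.75, 1), ncol = 1)
y <- sin(2 * pi * X[, 1])
yhat <- krigingPredict(X, y, X, theta = 10)
max(abs(yhat - y))  # numerically zero: the predictor interpolates
```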

6.3 Expected Improvement

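The EI is a criterion for error-based exploration, which uses the MSE of the Kriging prediction. The MSE is calculated as

$$\begin{aligned} \hat{s}^2(\textbf{x}) = \hat{\sigma }^2 \left[ 1 - \mathbf {\psi }^T \mathbf {\Psi }^{-1} \mathbf {\psi } + \frac{\left( 1 - \textbf{1}^T \mathbf {\Psi }^{-1} \mathbf {\psi } \right) ^2}{\textbf{1}^T \mathbf {\Psi }^{-1} \textbf{1}} \right] . \end{aligned}$$

Here, \(\hat{s}^2(\textbf{x}) = 0\) at sample points, and the last term is omitted in Bayesian settings.

Since the EI extends the Probability of Improvement (PI), the PI is described first. Let \(y_{\min }\) denote the best value observed so far and consider \(\hat{y}(\textbf{x})\) as the realization of a random variable \(Y(\textbf{x})\) with mean \(\hat{y}(\textbf{x})\) and standard deviation \(\hat{s}(\textbf{x})\). Then, the probability of an improvement \(I = y_{\min } - Y(\textbf{x})\) can be calculated as

$$\begin{aligned} P[I(\textbf{x})] = \Phi \left( \frac{y_{\min } - \hat{y}(\textbf{x})}{\hat{s}(\textbf{x})} \right) . \end{aligned}$$

The EI does not calculate the probability that there will be some improvement; it calculates the expected amount of improvement. The rationale of using this expectation is that a highly probable improvement is of little interest if its magnitude is very small. The EI is defined as follows.

Definition 4.3 (Expected Improvement) For \(\hat{s}(\textbf{x}) > 0\), the expected improvement at \(\textbf{x}\) is

$$\begin{aligned} E[I(\textbf{x})] = \left( y_{\min } - \hat{y}(\textbf{x}) \right) \Phi \left( \frac{y_{\min } - \hat{y}(\textbf{x})}{\hat{s}(\textbf{x})} \right) + \hat{s}(\textbf{x}) \, \phi \left( \frac{y_{\min } - \hat{y}(\textbf{x})}{\hat{s}(\textbf{x})} \right) , \end{aligned}$$(4.10)

where \(\Phi (.)\) and \(\phi (.)\) are the Cumulative Distribution Function (CDF) and Probability Density Function (PDF) of the standard normal distribution, respectively. For \(\hat{s}(\textbf{x}) = 0\), \(E[I(\textbf{x})] = 0\).

The EI is evaluated as

$$\begin{aligned} E[I(\textbf{x})] = \left( y_{\min } - \hat{y}(\textbf{x}) \right) \left[ \frac{1}{2} + \frac{1}{2} {\text {erf}}\left( \frac{y_{\min } - \hat{y}(\textbf{x})}{\hat{s}(\textbf{x}) \sqrt{2}} \right) \right] + \frac{\hat{s}(\textbf{x})}{\sqrt{2 \pi }} \exp \left[ - \frac{\left( y_{\min } - \hat{y}(\textbf{x}) \right) ^2}{2 \hat{s}^2(\textbf{x})} \right] . \end{aligned}$$(4.11)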

6.4 Infill Criteria with Noisy Data

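The EI infill criterion was formulated under the assumption that the true underlying function is deterministic, smooth, and continuous. In deterministic settings, the Kriging predictor should interpolate the data. Noise can complicate the modeling process: predictions can become erratic, because there is a high MSE in regions far away from observed data. Therefore, the interpolation property should be dropped to filter noise. A regression constant, \(\lambda \), is added to the diagonal of \(\mathbf {\Psi }\), i.e., \(\mathbf {\Psi } + \lambda \textbf{I}\) is used instead of \(\mathbf {\Psi }\). Then, \(\mathbf {\Psi } + \lambda \textbf{I}\) does not contain \(\mathbf {\psi }\) as a column and the data is not interpolated. The same method of derivation as in interpolating Kriging (Eq. 4.9) can be used for regression Kriging. The regression Kriging prediction is given by

$$\begin{aligned} \hat{y}_r(\textbf{x}) = \hat{\mu }_r + \mathbf {\psi }^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} (\textbf{y} - \textbf{1} \hat{\mu }_r) , \end{aligned}$$

where

$$\begin{aligned} \hat{\mu }_r = \frac{\textbf{1}^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} \textbf{y}}{\textbf{1}^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} \textbf{1}} . \end{aligned}$$

Including the regression constant \(\lambda \), the following equation allows the calculation of an estimate of the error in the Kriging regression model for noisy data:

$$\begin{aligned} \hat{s}_r^2(\textbf{x}) = \hat{\sigma }_r^2 \left[ 1 + \lambda - \mathbf {\psi }^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} \mathbf {\psi } \right] , \end{aligned}$$(4.12)

where

$$\begin{aligned} \hat{\sigma }_r^2 = \frac{(\textbf{y} - \textbf{1} \hat{\mu }_r)^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} (\textbf{y} - \textbf{1} \hat{\mu }_r)}{n} . \end{aligned}$$

Note: Eq. (4.12) includes the error associated with noise in the data. There is nonzero error in all areas, which leads to nonzero EI in all areas. As a consequence, resampling can occur. Resampling can be useful if replicates result in different outcomes. Although resampling can destroy the convergence to the global optimum, it can be a wanted feature in optimization with noisy data. In a deterministic setting, resampling is an unwanted feature, because new evaluations of the same point do not provide additional information and can stall the optimization process.

Re-interpolation can be used to eliminate the errors due to noise in the data from the model. Re-interpolation bases the estimated error on an interpolation of points predicted by the regression model at the sample locations. It proceeds as follows: calculate values for the Kriging regression at the sample locations using

$$\begin{aligned} \hat{\textbf{y}}_r = \textbf{1} \hat{\mu }_r + \mathbf {\Psi } \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} (\textbf{y} - \textbf{1} \hat{\mu }_r) . \end{aligned}$$

Using this vector instead of \(\textbf{y}\) in the variance estimate (4.6) results in

$$\begin{aligned} \hat{\sigma }_{ri}^2 = \frac{(\textbf{y} - \textbf{1} \hat{\mu }_r)^T \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} \mathbf {\Psi } \left( \mathbf {\Psi } + \lambda \textbf{I} \right) ^{-1} (\textbf{y} - \textbf{1} \hat{\mu }_r)}{n} . \end{aligned}$$

Using the interpolating Kriging error estimate from Sect. 6.3 (without its last term), the re-interpolation error estimate reads

$$\begin{aligned} \hat{s}_{ri}^2(\textbf{x}) = \hat{\sigma }_{ri}^2 \left[ 1 - \mathbf {\psi }^T \mathbf {\Psi }^{-1} \mathbf {\psi } \right] . \end{aligned}$$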
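The difference between the two error estimates can be sketched numerically at the sample points. The helper `errorAtSamples` is hypothetical; θ, p = 2, and the nugget λ are fixed by hand (no MLE), and the noisy test data are simulated. The regression error of Eq. (4.12) stays positive at the samples, whereas the re-interpolation error vanishes there.

```r
# Sketch: regression vs. re-interpolation error at the sample points for fixed
# theta, p = 2 and nugget lambda (no MLE); helper names are hypothetical.
corrMatrix <- function(A, B, theta, p = 2) {
  D <- matrix(0, nrow(A), nrow(B))
  for (j in seq_len(ncol(A))) D <- D + theta[j] * abs(outer(A[, j], B[, j], "-"))^p
  exp(-D)
}

errorAtSamples <- function(X, y, theta, lambda) {
  n <- nrow(X)
  Psi <- corrMatrix(X, X, theta)
  Rinv <- solve(Psi + lambda * diag(n))                           # (Psi + lambda I)^-1
  muR <- sum(Rinv %*% y) / sum(Rinv)                              # mu_r
  res <- y - muR
  sigma2R <- drop(t(res) %*% Rinv %*% res) / n                    # sigma_r^2
  sigma2RI <- drop(t(res) %*% Rinv %*% Psi %*% Rinv %*% res) / n  # sigma_ri^2
  # At sample point i, psi is the ith column of Psi
  s2R <- sigma2R * (1 + lambda - diag(Psi %*% Rinv %*% Psi))      # Eq. (4.12)
  s2RI <- sigma2RI * (1 - diag(Psi %*% solve(Psi, Psi)))          # re-interpolation
  list(s2R = s2R, s2RI = s2RI)
}

set.seed(3)
X <- matrix(seq(0, 1, length.out = 6), ncol = 1)
y <- sin(2 * pi * X[, 1]) + rnorm(6, sd = 0.1)
e <- errorAtSamples(X, y, theta = 10, lambda = 0.01)
e$s2R   # strictly positive: the noise error remains
e$s2RI  # zero up to rounding
```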

6.5 spot’s Workhorse: Kriging

This section explains the implementation of the function \(\texttt {buildKriging}\) in SPOT.


(K-1)

Set Parameters. \(\texttt {buildKriging}\) uses the parameters shown in Table 4.7. It returns an object of class \(\texttt {kriging}\), which is basically a list containing the options and the estimated model parameters; this object has to be passed to the \(\texttt {predict}\) method of this class.

Program Code: Step (K-1)


(K-2)

Normalization.

The function \(\texttt {normalizeMatrix}\) is used to normalize the data, i.e., each column of the (n, k)-matrix X has values in the range from zero to one.
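The normalization can be sketched in base R as a column-wise min-max scaling. `normalizeColumns` is a hypothetical stand-in for `normalizeMatrix`; mapping constant columns to zero is an assumption of this sketch. The minima and maxima are returned so that new data can later be scaled in the same way.

```r
# Sketch of column-wise min-max normalization to [0, 1]; hypothetical helper.
# The minima and maxima are returned so that new data can be scaled consistently.
normalizeColumns <- function(X) {
  xmin <- apply(X, 2, min)
  xmax <- apply(X, 2, max)
  span <- xmax - xmin
  span[span == 0] <- 1   # constant columns: avoid division by zero
  Xn <- sweep(sweep(X, 2, xmin, "-"), 2, span, "/")
  list(X = Xn, xmin = xmin, xmax = xmax)
}

nrm <- normalizeColumns(matrix(c(1, 3, 5, 10, 20, 30), ncol = 2))
nrm$X  # both columns become 0, 0.5, 1
```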

Program Code: Step (K-2)


(K-3)

Correlation Matrix. The correlation matrix \(\Psi \) (Eq. (4.3)) and the starting points for the optimization are prepared. First, the distance matrix is determined. The i-th row of the \((k,n^2)\)-matrix A contains the distances between the elements of the i-th column (dimension) of X. A(1, 1) is the distance of the first element to the first element in the first dimension, A(1, 2) the distance of the first element to the second element in the first dimension, \(A(1,n+1)\) the distance of the second element to the first element in the first dimension, and so on.
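The layout of A can be sketched in base R. `distanceMatrix` is hypothetical; it stores absolute distances, so that column \((a-1)n + b\) of row i holds the distance between elements a and b in dimension i, matching the indexing described above.

```r
# Sketch of the (k, n^2) distance matrix A described in step (K-3):
# row i holds |x_a,i - x_b,i| for all pairs (a, b), in column (a - 1) * n + b.
distanceMatrix <- function(X) {
  n <- nrow(X); k <- ncol(X)
  A <- matrix(0, nrow = k, ncol = n^2)
  for (i in seq_len(k)) {
    D <- abs(outer(X[, i], X[, i], "-"))   # D[a, b] = |x_a,i - x_b,i|
    A[i, ] <- as.vector(t(D))              # row-wise flattening of D
  }
  A
}

X <- matrix(c(0, 0.5, 1, 0, 1, 0), ncol = 2)  # n = 3 points, k = 2 dimensions
A <- distanceMatrix(X)
dim(A)  # 2 9
```

If p = 2 is fixed, as in step (K-4.2), squaring A once up front avoids recomputing the powers in every likelihood evaluation.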

Program Code: Step (K-3)


(K-4)

Prepare Starting Points.


(K-4.1)

\(\theta \). The starting point for the optimization of \(\theta \) is determined. If no explicit starting point is specified, then

$$\begin{aligned} \theta _0= n/(100k) \end{aligned}$$(4.13)

is chosen.


(K-4.2)

p. The parameter \(\texttt {optimizeP}\) determines whether p should be optimized or not. In the latter case, \(p=2\) is set and the matrix A is squared. Otherwise, the starting point for the optimization of p is chosen as \(p_0=1.9\) and the search interval is set to [0.01, 2].


(K-4.3)

Nugget. If a nugget effect is used, the starting point for the optimization of \(\lambda \) is set to

$$\begin{aligned} \lambda _0 = \frac{\lambda _{\text {lower}} + \lambda _{\text {upper}}}{2} . \end{aligned}$$(4.14)

(K-4.4)

Penalty. The penalty value is set to

$$\begin{aligned} \phi = n \times \log ({\text {Var}}(y)) + 10^4 . \end{aligned}$$(4.15)

Note: this penalty value should not be a hard-coded constant. The scale of the likelihood, i.e., \(n \times \log (\texttt {SigmaSqr}) + \texttt {LnDetPsi}\), depends at least on \(\log ({\text {Var}}(y))\) and on the number of samples. Hence, with a constant penalty, a large number of samples may lead to cases where the penalty is lower than the likelihood of most valid parameterizations; Eq. (4.15) therefore scales the penalty with n and \({\text {Var}}(y)\). Currently, this penalty is set in the \(\texttt {buildKriging}\) function when calling \(\texttt {krigingLikelihood}\).
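The starting values of step (K-4) can be collected in a small helper. `startValues` is hypothetical, and the default bounds `lambdaLower = -6` and `lambdaUpper = 0` are illustrative assumptions, not SPOT's defaults; the formulas follow Eqs. (4.13) to (4.15).

```r
# Sketch of the starting values and penalty of step (K-4); hypothetical helper.
# Assumption: lambdaLower/lambdaUpper are illustrative bounds, not spot defaults.
startValues <- function(y, k, optimizeP = FALSE,
                        lambdaLower = -6, lambdaUpper = 0) {
  n <- length(y)
  list(
    theta0  = rep(n / (100 * k), k),                # Eq. (4.13)
    p0      = if (optimizeP) rep(1.9, k) else NULL,  # else p = 2 is fixed;
                                                     # search interval [0.01, 2]
    lambda0 = (lambdaLower + lambdaUpper) / 2,       # Eq. (4.14)
    penalty = n * log(var(y)) + 1e4                  # Eq. (4.15)
  )
}

sv <- startValues(y = c(1, 2, 3, 4), k = 2)
sv$theta0  # 0.02 0.02
```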


Program Code: Step (K-4)


(K-5)

Objective. The objective function \(\texttt {fitFun}\) for the MLE optimizer \(\texttt {algTheta}\) is defined in this step.


(K-6)

krigingLikelihood. The function \(\texttt {krigingLikelihood}\), see Sect. 4.6.6, is called.

Program Code: Steps (K-5) and (K-6)


(K-7)

Performing the Optimization with fitFun. The optimizer is called as follows:

Program Code: Step (K-7)


(K-8)

Compile Results. The return values from the optimization run, which are stored in the list \(\texttt {res}\), are added to the list \(\texttt {fit}\) that specifies the object of the class \(\texttt {kriging}\). The list \(\texttt {fit}\) contains the following optimized values: \(\theta ^*\) as \(\texttt {Theta}\), \(10^{\theta ^*}\) as \(\texttt {dmodeltheta}\), \(p^*\) as \(\texttt {P}\), \(\lambda ^*\) as \(\texttt {Lambda}\), and \(10^{\lambda ^*}\) as \(\texttt {dmodellambda}\).

Program Code: Step (K-8)


(K-9)

Use Results to Determine Likelihood and Best Parameters. The function \(\texttt {krigingLikelihood}\) is called with these optimized values, \(\theta ^*\), \(p^*\), and \(\lambda ^*\) to determine the values used for the fit of the Kriging model.

Program Code: Step (K-9)


(K-10)

Compile the fit Object. The return values from this call to \(\texttt {krigingLikelihood}\) are added to the \(\texttt {fit}\) object.

Program Code: Step (K-10)


(K-11)

Calculate the mean objective function value. In addition to the results from the MLE optimization, the mean objective function value of the best x value, \(y_{\min }\), is calculated and stored in the \(\texttt {fit}\) list as \(\texttt {min}\). This value is needed for the EI computation.

Now the \(\texttt {fit}\) is available and can be used for predictions. The corresponding code is shown below.

6.6 krigingLikelihood

Step: MLE optimization with \(\texttt {krigingLikelihood}\). The objective function accepts the following parameters: \(\texttt {x}\), a vector that contains the parameters log10(theta), log10(lambda), and p; \(\texttt {AX}\), a three-dimensional array constructed by \(\texttt {buildKriging}\) from the sample locations; \(\texttt {Ay}\), a vector of observations at the sample locations; \(\texttt {optimizeP}\), a logical that specifies whether to optimize the parameter \(\texttt {p}\) (exponents) or to fix it at two; \(\texttt {useLambda}\), a logical that specifies whether to use the nugget; and \(\texttt {penval}\), a penalty value that affects the value returned for invalid correlation matrices or configurations. The function \(\texttt {krigingLikelihood}\) performs the following calculations. First, the \(\theta \) and \(\lambda \) values are updated:


(L-1)

Starting Points.


(L-2)

Correlation Matrix \(\Psi \). The matrix \(\Psi \) can be calculated. If \(\texttt {useLambda == TRUE}\), the nugget effect \(\lambda \) is added.


(L-3)

Cholesky Factorization. Since \(\Psi \) is positive definite, its Cholesky factorization is computed.


(L-4)

Determinant. The natural logarithm of the determinant of \(\Psi \), \(\texttt {LnDetPsi}\), is calculated from the Cholesky factor, because this is numerically more reliable and also faster than using \(\texttt {det}\) or \(\texttt {determinant}\).


(L-5)

Matrix inverse, mean, error, and likelihood.

Using \(\texttt {chol2inv}\), the following values can be calculated: \(\ln (L)\) (Eq. (4.7)), \(\hat{\mu }\) (Eq. (4.5)), and \(\hat{\sigma }\) (Eq. (4.6)). Together with the matrices \(\Psi \) and \(\Psi ^{-1}\), and the vector \(1 \mu \), these values are combined into a list, which is returned from the function \(\texttt {krigingLikelihood}\).
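Steps (L-2) to (L-5) can be sketched as a self-contained base-R objective for the MLE. `negLogLikelihood` is hypothetical and simplified compared with `krigingLikelihood`: it assumes `x = c(log10(theta), log10(lambda))`, fixes p = 2, expects normalized inputs, and returns `penval` whenever the Cholesky factorization fails or the variance estimate is invalid.

```r
# Sketch of a negative concentrated log-likelihood following steps (L-2)-(L-5).
# Assumptions: x = c(log10(theta_1..k), log10(lambda)); p is fixed at 2;
# X is already normalized; penval is returned for invalid configurations.
negLogLikelihood <- function(x, X, y, penval = 1e4) {
  n <- nrow(X); k <- ncol(X)
  theta <- 10^x[1:k]
  lambda <- 10^x[k + 1]
  D <- matrix(0, n, n)
  for (j in seq_len(k)) D <- D + theta[j] * outer(X[, j], X[, j], "-")^2
  Psi <- exp(-D) + diag(lambda, n)                          # (L-2) nugget on diagonal
  cholPsi <- tryCatch(chol(Psi), error = function(e) NULL)  # (L-3)
  if (is.null(cholPsi)) return(penval)
  LnDetPsi <- 2 * sum(log(abs(diag(cholPsi))))              # (L-4) ln|Psi|
  PsiInv <- chol2inv(cholPsi)                               # (L-5) inverse
  mu <- sum(PsiInv %*% y) / sum(PsiInv)                     # Eq. (4.5)
  res <- y - mu
  SigmaSqr <- drop(t(res) %*% PsiInv %*% res) / n           # Eq. (4.6)
  if (!is.finite(SigmaSqr) || SigmaSqr <= 0) return(penval)
  0.5 * (n * log(SigmaSqr) + LnDetPsi)                      # negative of Eq. (4.7)
}

X <- matrix(seq(0, 1, length.out = 8), ncol = 1)
y <- sin(2 * pi * X[, 1])
nll0 <- negLogLikelihood(c(0, -3), X, y)
fitMLE <- optim(c(0, -3), negLogLikelihood, X = X, y = y, method = "L-BFGS-B",
                lower = c(-3, -6), upper = c(2, 0))
10^fitMLE$par  # estimated theta and lambda
```

Returning a finite penalty instead of an error keeps the outer optimizer running when it proposes parameters that yield a numerically invalid correlation matrix.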

The following code illustrates the main components of \(\texttt {krigingLikelihood}\).

Program Code: krigingLikelihood Function

6.7 Predictions

The \(\texttt {buildKriging}\) function from the R package spot provides two Kriging predictors: prediction with and without re-interpolation. Re-interpolation is presented here, because it prevents an incorrect approximation of the error which might cause a poor global convergence. Re-interpolation bases the computation of the estimated error on an interpolation of points predicted by the regression model at the sample locations, see Forrester et al. (2008a).

The function \(\texttt {predictKrigingReinterpolation}\) requires two arguments: (i) \(\texttt {object}\), the Kriging model (settings and parameters) of class \(\texttt {kriging}\), and (ii) \(\texttt {newdata}\), the design matrix to be predicted.

The function \(\texttt {normalizeMatrix2}\) is used to normalize the data. It uses information from the normalization performed during the Kriging model building phase, namely \(\texttt {normalizexmin}\) and \(\texttt {normalizexmax}\) to ensure the same scaling of the known and new data. Furthermore, the following optimized parameters from the Kriging model are extracted: \(\texttt {scaledx}\), \(\texttt {dmodeltheta}\), \(\texttt {dmodellambda}\), \(\texttt {Psi}\), \(\texttt {Psinv}\), \(\texttt {mu}\), and \(\texttt {yonemu}\).

For re-interpolation, the error in the model excluding the error caused by noise is computed. The following modifications are made:

The MLE for \(\hat{y}\) is

This is Eq. (2.40) in Forrester et al. (2008a). It is implemented as follows:

Depending on the setting of the parameter \(\texttt {target}\), the values \(\texttt {y}\) and \(\texttt {s}\) or \(\texttt {y}\), \(\texttt {s}\), and \(\texttt {ei}\) are returned.

7 Program Code

One complete \(\texttt {spot}\) run is shown below. To increase readability, only one iteration of the \(\texttt {spotLoop}\) is performed.

Program Code: spot Run

The result from one \(\texttt {spotLoop}\) is saved in the variable \(\texttt {result}\).

Notes

- 1.

The acronym SMBO originated in the engineering domain (Booker et al. 1999; Mack et al. 2007). It is also popular in the ML community, where it stands for sequential model-based optimization. We will use the terms sequential model-based optimization and surrogate model-based optimization synonymously.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Bartz-Beielstein, T., Zaefferer, M. (2023). Hyperparameter Tuning Approaches. In: Bartz, E., Bartz-Beielstein, T., Zaefferer, M., Mersmann, O. (eds) Hyperparameter Tuning for Machine and Deep Learning with R. Springer, Singapore. https://doi.org/10.1007/978-981-19-5170-1_4


DOI: https://doi.org/10.1007/978-981-19-5170-1_4


Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-5169-5

Online ISBN: 978-981-19-5170-1

eBook Packages: Computer Science, Computer Science (R0)