Abstract

There are many different IRT models. The simplest model specification is the dichotomous Rasch model. The word “dichotomous” refers to the case where each item is scored as correct or incorrect (0 or 1).

References

Baker FB, Kim S-H (2004) Item response theory: parameter estimation techniques, 2nd edn. Dekker, New York

Birnbaum A (1968) Some latent trait models and their use in inferring an examinee’s ability. In: Lord FM, Novick MR (eds) Statistical theories of mental test scores. Addison-Wesley, Reading, pp 395–479

de Ayala RJ (2009) The theory and practice of item response theory. The Guilford Press, New York

Embretson SE, Reise SP (2000) Item response theory for psychologists. Lawrence Erlbaum Associates, Mahwah

Fan X (1998) Item response theory and classical test theory: an empirical comparison of their item/person statistics. Educ Psychol Measur 58(3):357–381

Humphry SM, Andrich D (2008) Understanding the unit in the Rasch model. J Appl Measur 9(3):249–264

Kiefer T, Robitzsch A, Wu M (2013) TAM (Test analysis modules): an R package [computer software]

Linacre JM (1998) Estimating Rasch measures with known polytomous item difficulties. Rasch Measur Trans 12(2):638

Linacre JM (2009) The efficacy of Warm’s weighted mean likelihood estimate (WLE) correction to maximum likelihood estimate (MLE) bias. Rasch Measur Trans 23(1):1188–1189

Lord FM, Novick MR (1968) Statistical theories of mental test scores. Addison-Wesley, Reading

Molenaar IW (1995) Estimation of item parameters. In: Fischer GH, Molenaar IW (eds) Rasch models: foundations, recent developments, and applications. Springer, New York, pp 39–51

Rasch G (1960) Probabilistic models for some intelligence and attainment tests. Danish Institute for Educational Research, Copenhagen

Rasch G (1977) On specific objectivity: an attempt at formalizing the request for generality and validity of scientific statements. The Danish Yearbook of Philosophy 14:58–93

Samejima F (1977) The use of the information function in tailored testing. Appl Psychol Meas 1:233–247

Thissen D, Steinberg L (2009) Item response theory. In: Millsap RE, Maydeu-Olivares A (eds) The Sage handbook of quantitative methods in psychology. Sage, Thousand Oaks, pp 148–177

Thissen D, Wainer H (2001) Test scoring. Lawrence Erlbaum Associates, Mahwah

van der Linden WJ, Hambleton RK (1997) Handbook of modern item response theory. Springer, New York

Verhelst ND, Glas CAW (1995) One-parameter logistic model. In: Fischer GH, Molenaar IW (eds) Rasch models: foundations, recent developments, and applications. Springer, New York, pp 215–237

Wainer H (2007) A psychometric cicada: educational measurement returns. Book review. Educ Researcher 36:485–486

Warm TA (1989) Weighted likelihood estimation of ability in item response theory. Psychometrika 54:427–450

Wilson M (2011) Some notes on the term: “Wright Map”. Rasch Measur Trans 25(3):1331

Wright BD (1977) Solving measurement problems with the Rasch model. J Educ Meas 14:97–115

Wright BD, Panchapakesan N (1969) A procedure for sample-free item analysis. Educ Psychol Measur 29:23–48

Further Readings

Baker FB (2001) The basics of item response theory. ERIC Clearinghouse on Assessment and Evaluation, University of Maryland, College Park, MD. Available online at http://edres.org/irt/baker/

Fischer GH, Molenaar IW (eds) (1995) Rasch models: foundations, recent developments, and applications. Springer, New York

Hambleton RK, Swaminathan H, Rogers HJ (1991) Fundamentals of item response theory. Sage, Newbury Park

Harris D (1989) Comparison of 1-, 2-, and 3-parameter IRT models. NCME Instructional Topics in Educational Measurement Series (ITEMS) Module 7. Retrieved 21 July 2014 from http://ncme.org/publications/items/. There are other modules at this website on various topics of educational measurement.

Rasch G (1980) Probabilistic models for some intelligence and attainment tests. University of Chicago Press, Chicago

Rasch Measurement Transactions. http://www.rasch.org/rmt. Many helpful articles can be found at this website


Appendices

Hands-on Practices

Task 1

In EXCEL, compute the probability of success under the Rasch model, given an ability measure and an item difficulty measure. Plot the item characteristic curve. Follow the steps below.

-

Step 1

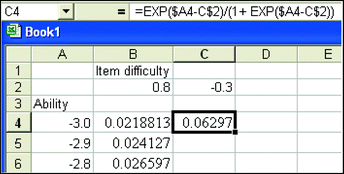

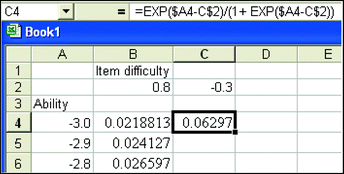

In EXCEL, create a spreadsheet with the first column showing abilities from −3 to 3, in steps of 0.1. In Cell B2, type in a value for an item difficulty, say 0.8, as shown below.

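Step 2

In cell B4, compute the probability of success by typing the following formula, as shown:

“=EXP($A4-B$2)/(1+EXP($A4-B$2))”

The dollar signs anchor column A in the ability reference and row 2 in the difficulty reference, so the same formula can be autofilled down the column and across to further items.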

Step 3

Autofill the rest of column B for all ability values, as shown.

Step 4

Make an XY (scatter) plot of ability against probability of success, as shown below.

This graph shows the probability of success (Y axis) against ability (X axis) for an item with difficulty 0.8.
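For readers who prefer a scripting language to EXCEL, Steps 1 to 4 can be sketched in Python as below. This is a minimal illustration, not part of the original exercise: the function name `rasch_probability` is our own, and the plotting step is replaced by printing every tenth row of the table (any plotting library could draw the curve from `icc`).

```python
import math

def rasch_probability(theta: float, delta: float) -> float:
    """Probability of success under the dichotomous Rasch model (same as the cell formula in B4)."""
    return math.exp(theta - delta) / (1.0 + math.exp(theta - delta))

# Column A: abilities from -3 to 3 in steps of 0.1.
# Rounding avoids floating-point drift such as 0.30000000000000004.
abilities = [round(-3.0 + 0.1 * k, 1) for k in range(61)]

item_difficulty = 0.8  # the value typed into cell B2

# Column B: probability of success at each ability (the item characteristic curve).
icc = [(theta, rasch_probability(theta, item_difficulty)) for theta in abilities]

# Print every tenth row (abilities -3, -2, ..., 3) to keep the output short.
for theta, p in icc[::10]:
    print(f"{theta:5.1f}  {p:.5f}")
```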

Q1.

When the ability equals the item difficulty (0.8 in this case), what is the probability of success?

Step 5

Add a second item with an item difficulty of −0.3 by entering −0.3 in cell C2. Autofill cell C4 from cell B4, then autofill column C for the other ability values.

Step 6

Plot the probability of success on both items as a function of ability (hint: plot columns A, B and C).

Q2.

A person with ability −1.0 has a probability of 0.14185 of getting the first item right. At what ability does a person have the same probability of getting the second item right?
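The probability quoted in Q2 can be checked with a short Python sketch. Besides the Rasch probability, it uses a hypothetical helper, `ability_for_probability`, which inverts the curve: solving \( p = \exp(\theta - \delta)/(1 + \exp(\theta - \delta)) \) for \( \theta \) gives \( \theta = \delta + \ln\left(p/(1-p)\right) \). Readers can use it to check their answers to Q2 to Q4.

```python
import math

def rasch_probability(theta, delta):
    """Probability of success under the dichotomous Rasch model."""
    return math.exp(theta - delta) / (1.0 + math.exp(theta - delta))

def ability_for_probability(p, delta):
    """Hypothetical helper: the ability at which an item of difficulty delta
    is answered correctly with probability p (requires 0 < p < 1)."""
    return delta + math.log(p / (1.0 - p))

item1, item2 = 0.8, -0.3   # difficulties entered in cells B2 and C2

p = rasch_probability(-1.0, item1)
print(f"P(success on item 1 at ability -1.0) = {p:.5f}")   # prints 0.14185, as in Q2

theta2 = ability_for_probability(p, item2)
print(f"Ability giving the same probability on item 2: {theta2:.2f}")
```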

Q3.

Consider two persons, A and B, such that Person A’s probability of getting the first item right is the same as Person B’s probability of getting the second item right. What is the difference between their abilities?

Q4.

How does this difference relate to the item difficulties of the two items?

Q5.

If there is a very difficult item (say, with a difficulty of 2), can you sketch its probability curve on the above graph (without computing it in EXCEL)? Check your sketch against an actual computation and plot in EXCEL.

Task 2. Compare Logistic and Normal Ogive Functions

The following shows an excerpt of an EXCEL spreadsheet.

In column A, set a sequence of abilities from −3 to 3, in steps of 0.1.

In column B, compute the logistic function \( \frac{{\exp \left( {\theta - \delta } \right)}}{{1 + \exp \left( {\theta - \delta } \right)}} \), where \( \theta \) is the person parameter (the ability in column A) and \( \delta \) is zero. Specifically, type the formula “=exp(A2)/(1 + exp(A2))” in cell B2, and autofill column B.

In column C, compute the normal ogive function (i.e., the cumulative normal distribution with mean 0 and standard deviation 1). Specifically, in cell C2, type the formula “=normdist(A2, 0, 1, 1)” (in newer versions of EXCEL, NORM.DIST takes the same arguments), and autofill column C.

In column D, compute the logistic function again, but this time with a scale parameter of 1.7. Specifically, in cell D2, type the formula “=exp(1.7 * A2)/(1 + exp(1.7 * A2))”, and autofill column D.

Make a scatter plot of columns B, C and D against column A on the same graph. You should get a graph like the following:

Which two functions overlap with each other?

Since the ability scale has an arbitrary scale factor, it does not matter whether we use \( \frac{{\exp \left( {\theta - \delta } \right)}}{{1 + \exp \left( {\theta - \delta } \right)}} \) or \( \frac{{\exp \left( {1.7\left( {\theta - \delta } \right)} \right)}}{{1 + \exp \left( {1.7\left( {\theta - \delta } \right)} \right)}} \).

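The latter form is still a Rasch model (substituting \( \theta^{*} = 1.7\theta \) and \( \delta^{*} = 1.7\delta \) gives \( 1.7(\theta - \delta) = \theta^{*} - \delta^{*} \), which recovers the form with a scale factor of 1), and it is very close to the normal ogive function. For this reason, some software programs set the ability scale with a scale factor of 1.7 instead of 1. This only changes the unit in which ability and difficulty estimates are expressed; it does not affect the interpretation of IRT results.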
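The visual comparison in Task 2 can be quantified with a short Python sketch. This is our own illustration: the standard normal cumulative distribution is computed from `math.erf` in place of EXCEL's NORMDIST, and the sketch reports the largest vertical gap between each logistic curve and the normal ogive over the same grid of abilities.

```python
import math

def logistic(x, scale=1.0):
    """exp(scale*x) / (1 + exp(scale*x)), as computed in columns B (scale 1) and D (scale 1.7)."""
    return math.exp(scale * x) / (1.0 + math.exp(scale * x))

def normal_ogive(x):
    """Standard normal cumulative distribution, the counterpart of column C."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

# Abilities from -3 to 3 in steps of 0.1, as in column A.
abilities = [round(-3.0 + 0.1 * k, 1) for k in range(61)]

# Largest absolute difference from the normal ogive on the grid.
gap_scale_1 = max(abs(logistic(t) - normal_ogive(t)) for t in abilities)
gap_scale_17 = max(abs(logistic(t, 1.7) - normal_ogive(t)) for t in abilities)

print(f"max |logistic(theta) - normal ogive(theta)|     = {gap_scale_1:.4f}")
print(f"max |logistic(1.7*theta) - normal ogive(theta)| = {gap_scale_17:.4f}")
```

On this grid, the unscaled logistic curve departs from the normal ogive by more than 0.1 at some abilities, whereas the curve with the 1.7 scale factor stays within 0.01 of it.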

Task 3. Compute the Likelihood Function

To illustrate the idea of the raw score as a sufficient statistic for ability estimation, this hands-on practice compares the likelihood functions of different item response patterns that have the same raw test score.

We will use EXCEL for this exercise. Figure 7.10 shows an EXCEL spreadsheet.

In this example, a test has five items with difficulties −2, −1, 0, 1 and 2 (see Row 2 of the spreadsheet in Fig. 7.10). Cells A5 to A17 list abilities from −3 to 3 in steps of 0.5. Row 3 contains an item response pattern: here it is assumed that a student obtained a score of three by answering Items 1, 3 and 5 correctly. Cells B5 to F17 contain the probability of the observed response to each item at each ability. The EXCEL formula in B5 is “=EXP(B$3*($A5-B$2))/(1+EXP($A5-B$2))”, where the multiplier “B$3” is the item response. This formula evaluates to \( \frac{{\exp \left( {\theta - \delta_{i} } \right)}}{{1 + \exp \left( {\theta - \delta_{i} } \right)}} \) when the item response is 1, and to \( \frac{1}{{1 + \exp \left( {\theta - \delta_{i} } \right)}} \) when the item response is 0. Column G contains the likelihood, which is the product of the probabilities in columns B to F.

Scanning down column G, we find the largest value (the maximum) in row 12, where the ability is 0.5. This is the ability that maximises the likelihood, so the ability estimate for the response pattern “10101” (right-wrong-right-wrong-right) will be around 0.5.
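The spreadsheet in Fig. 7.10 can also be reproduced in a few lines of Python. The sketch below is a minimal illustration under the same set-up as the spreadsheet (item difficulties −2 to 2, abilities from −3 to 3 in steps of 0.5); the function names are hypothetical, and `response_probability` mirrors the EXCEL cell formula in B5.

```python
import math

difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]        # row 2 of the spreadsheet (Fig. 7.10)
pattern = [1, 0, 1, 0, 1]                         # row 3: response pattern "10101"
abilities = [-3.0 + 0.5 * k for k in range(13)]   # cells A5 to A17

def response_probability(x, theta, delta):
    """Mirror of the cell formula =EXP(x*(theta-delta))/(1+EXP(theta-delta)):
    P(correct) when x = 1 and 1 - P(correct) when x = 0."""
    return math.exp(x * (theta - delta)) / (1.0 + math.exp(theta - delta))

def likelihood(responses, theta):
    """Column G: product of the response probabilities in columns B to F."""
    result = 1.0
    for x, delta in zip(responses, difficulties):
        result *= response_probability(x, theta, delta)
    return result

column_g = [likelihood(pattern, theta) for theta in abilities]

# Index of the largest likelihood; grid index 0 corresponds to spreadsheet row 5.
best_row = max(range(len(abilities)), key=lambda i: column_g[i])
print(f"Largest likelihood {column_g[best_row]:.5f} in row {best_row + 5}, "
      f"at ability {abilities[best_row]}")
```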

Repeat this computation for a different response pattern but still for a raw score of 3. For example, use the response pattern “01101” in cells B3 to F3. What is the maximum likelihood and which ability corresponds to this maximum likelihood?

Plot the likelihood functions for both response patterns “10101” and “01101” as a function of ability. Figure 7.11 shows such a graph.

Repeat this for another response pattern, say, “11100”, and plot the likelihood for the three response patterns on the same graph.
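The three likelihood curves can also be compared numerically. Writing \( x_i \) for the response to item \( i \) and \( r \) for the raw score, the likelihood equals \( \exp\left(r\theta - \sum_i x_i \delta_i\right) / \prod_i \left(1 + \exp(\theta - \delta_i)\right) \), so patterns with the same raw score differ only by the constant factor \( \exp\left(-\sum_i x_i \delta_i\right) \) and peak at the same ability. The Python sketch below checks this on the spreadsheet grid, then solves the likelihood equation \( \sum_i P_i(\theta) = r \) by Newton-Raphson to locate the maximum more precisely than the 0.5-step grid allows (for a raw score of 3, it lies between 0.5 and 0.6). Newton-Raphson is a standard technique chosen here for illustration, not necessarily the method used by any particular software; all names are hypothetical.

```python
import math

difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]
abilities = [-3.0 + 0.5 * k for k in range(13)]   # same grid as cells A5 to A17

def p_correct(theta, delta):
    return math.exp(theta - delta) / (1.0 + math.exp(theta - delta))

def likelihood(responses, theta):
    """Product over items of P (if correct) or 1 - P (if incorrect)."""
    result = 1.0
    for x, delta in zip(responses, difficulties):
        p = p_correct(theta, delta)
        result *= p if x == 1 else 1.0 - p
    return result

patterns = {
    "10101": [1, 0, 1, 0, 1],
    "01101": [0, 1, 1, 0, 1],
    "11100": [1, 1, 1, 0, 0],
}
reference = [likelihood(patterns["10101"], t) for t in abilities]

for label, responses in patterns.items():
    curve = [likelihood(responses, t) for t in abilities]
    peak = abilities[max(range(len(curve)), key=curve.__getitem__)]
    # Ratio of this curve to the "10101" curve at every grid ability.
    ratios = [c / base for c, base in zip(curve, reference)]
    print(f"{label}: peak at {peak}, ratio to 10101 between "
          f"{min(ratios):.4f} and {max(ratios):.4f}")

def mle_from_raw_score(score, theta=0.0, tol=1e-10, max_iter=50):
    """Newton-Raphson on the likelihood equation sum_i P_i(theta) = score.
    A finite solution exists only for 0 < score < number of items."""
    for _ in range(max_iter):
        probs = [p_correct(theta, d) for d in difficulties]
        # Gradient of the log-likelihood divided by the test information.
        step = (score - sum(probs)) / sum(p * (1.0 - p) for p in probs)
        theta += step
        if abs(step) < tol:
            break
    return theta

print(f"MLE for a raw score of 3: {mle_from_raw_score(3):.3f}")
```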

Discussion Points

1. In relation to Hands-on Practices Task 3, discuss the concept of “raw score as a sufficient statistic for ability estimation”. For example, given a raw score, how do different response patterns affect the estimation of ability? For a given raw score, compare the likelihood functions of different response patterns: in what ways are they similar, and how do they differ? Would different response patterns affect the fit of the items to the model?

2. From a fairness point of view, do you think the ability estimate should be the same for the same raw score, irrespective of which items were answered correctly? If not, how would you score the items? Consider the illustrative example: Items 9 and 10 are administered, and one student gets Item 9 right but Item 10 wrong, while another student gets Item 9 wrong but Item 10 right, so both students score 1 out of 2. Should they have the same ability estimate?

3. Discuss the concept of “sample-free” in Rasch models. In what ways are the estimated statistics sample-free, and in what ways are they sample-dependent?


Copyright information

© 2016 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Wu, M., Tam, H.P., Jen, TH. (2016). Rasch Model (The Dichotomous Case). In: Educational Measurement for Applied Researchers. Springer, Singapore. https://doi.org/10.1007/978-981-10-3302-5_7


DOI: https://doi.org/10.1007/978-981-10-3302-5_7


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3300-1

Online ISBN: 978-981-10-3302-5

eBook Packages: Mathematics and Statistics (R0)