Abstract

Cyber Physical Production Systems (CPPS) provide a huge amount and variety of process and production data. Simultaneously, operational decisions are getting ever more complex due to smaller batch sizes (down to batch size one), a larger product variety and complex processes in production systems. Production engineers struggle to utilize the recorded data to optimize production processes effectively.

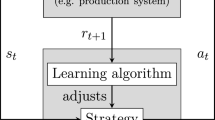

In contrast, CPPS promote decentralized decision-making by so-called intelligent agents. These agents gather data (via sensors), process these data, possibly in combination with information exchanged with other agents, and finally put decisions into action (via actuators). Modular and decentralized decision-making systems are thereby able to handle far more complex systems than rigid and static architectures.

This paper discusses possible applications of Machine Learning (ML) algorithms, in particular Reinforcement Learning (RL), and their potential for a production planning and control that aims at operational excellence.
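To make the idea of an RL-based dispatching agent concrete, the following is a minimal sketch of tabular Q-learning, not the method used in the paper. Everything in it is an assumption chosen for illustration: the two-machine toy shop, the service probabilities, the queue cap, the reward (negative number of waiting orders), the hyperparameters, and the names `DispatchAgent`, `step` and `train`. The agent follows the sense, decide, act pattern: it observes the queue lengths, picks a machine for the incoming order, and updates its value estimates from the resulting reward.

```python
import random
from collections import defaultdict


class DispatchAgent:
    """Hypothetical tabular Q-learning agent: sense state, decide, act, learn."""

    def __init__(self, n_actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        # Q-table: maps a state to one value estimate per action.
        self.q = defaultdict(lambda: [0.0] * n_actions)
        self.n_actions = n_actions
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        # Epsilon-greedy: explore with probability epsilon, else exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return values.index(max(values))

    def learn(self, state, action, reward, next_state):
        # Standard one-step Q-learning update.
        target = reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])


def step(queues, action, rng, service_prob=(0.9, 0.3), cap=5):
    """Toy shop floor (assumed): dispatch one order, then machines may finish a job."""
    q = list(queues)
    q[action] = min(q[action] + 1, cap)       # send the new order to the chosen machine
    for m, p in enumerate(service_prob):      # each machine finishes one job with prob. p
        if q[m] > 0 and rng.random() < p:
            q[m] -= 1
    reward = -float(sum(q))                   # fewer waiting orders is better
    return tuple(q), reward


def train(steps=5000, seed=0):
    rng = random.Random(seed)
    agent = DispatchAgent(n_actions=2, seed=seed)
    state = (0, 0)                            # both queues start empty
    for _ in range(steps):
        action = agent.act(state)             # decide
        next_state, reward = step(state, action, rng)   # act and sense
        agent.learn(state, action, reward, next_state)  # learn
        state = next_state
    return agent


if __name__ == "__main__":
    trained = train()
    print(len(trained.q), "states visited")
```

The state here is only the pair of queue lengths. A realistic production control setting would need a richer state and reward definition, and probably function approximation instead of a table.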

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this paper

Cite this paper

Kuhnle, A., Lanza, G. (2019). Application of Reinforcement Learning in Production Planning and Control of Cyber Physical Production Systems. In: Beyerer, J., Kühnert, C., Niggemann, O. (eds) Machine Learning for Cyber Physical Systems. Technologien für die intelligente Automation, vol 9. Springer Vieweg, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-58485-9_14

DOI: https://doi.org/10.1007/978-3-662-58485-9_14

Publisher Name: Springer Vieweg, Berlin, Heidelberg

Print ISBN: 978-3-662-58484-2

Online ISBN: 978-3-662-58485-9

eBook Packages: Intelligent Technologies and Robotics (R0)