Abstract

Technological advancement is changing societal risk constellations. In many cases the result is significantly improved safety and accordingly positive results such as good health, longer life expectancies and greater prosperity. However, the novelty of technological innovations also frequently brings with it unintended and unforeseen consequences, including new risk types.

1 Introduction and Overview

Technological advancement is changing societal risk constellations. In many cases the result is significantly improved safety and accordingly positive results such as good health, longer life expectancies and greater prosperity. However, the novelty of technological innovations also frequently brings with it unintended and unforeseen consequences, including new risk types. The objective of technology assessment is not only to identify the potential of the innovation but also to examine potential risks at the earliest possible stage and thus contribute to a reasoned evaluation and sound decision-making [1].

In many respects, autonomous driving represents an attractive innovation for the future of mobility. Greater safety and convenience, use of the time otherwise required for driving for other purposes and efficiency gains on the system level are a few of the most commonly expected advantages [2]. At the same time, the systems and technologies for autonomous driving—like any technology—are susceptible to errors that can lead to accidents resulting in property damage or personal injuries. The central role of software can lead to systemic risks, as is well known from the internet and computer worlds. Economic risks—for example for the automotive industry—must also be considered, as well as social risks, such as privacy concerns. Early, comprehensive analysis and evaluation of the possible risks of autonomous driving are an indispensable part of a responsible research and innovation process and thus equally important preconditions for acceptance both on the individual and societal levels. Against this backdrop, this chapter will provide answers to the following questions as they apply specifically to technology assessment:

- What specific societal risk constellations are posed by autonomous driving? Who could be affected by which damages/injuries and with what probability?
- What can be learned from previous experience with risk debates on technological advancement for the development and use of autonomous driving?
- How can the societal risk for the manufacture and operation of autonomous vehicles be intelligibly assessed and organized in a responsible way?

The answers to these questions are intended to draw attention to potentially problematic developments in order to take any such issues into account in design decisions and regulations and thus contribute to responsible and transparent organization of the societal risk.

2 Risk Analysis and Ethics

We define risks as possible harm that can occur as the result of human action and decisions. Many times we are willing to accept some risk, for example because we regard the damage as very unlikely to occur or because we expect a benefit from the decision that outweighs the possible damage that could occur. In some cases, however, we are subjected to risks by the decisions of others, for example through the driving behavior of other drivers or political or regulatory decisions concerning business policy.

2.1 Risk-Terminology Dimensions

Risks contain three central semantic elements: the moment of uncertainty, because the occurrence of possible damage is not certain; the moment of the undesired, because damage is never welcome; and the social moment, because both opportunities and risks are always distributed and are always opportunities and risks for particular individuals or groups.

The moment of uncertainty gets to the epistemological aspect of risk: What do we know about possible harms resulting from our actions and how reliable is this knowledge? This question comprises two subquestions: (1) What type of harm could occur as a result of the action and decision, and how severe would it be, and (2) how plausible and probable is it that the harm will actually occur? For both questions, the spectrum of possible answers ranges from scientifically attested and statistically evaluable to mere assumptions and speculations. If the probability of occurrence and the expected scale of the damage can be specified quantitatively, their product is frequently described as an ‘objective risk’ and used in the insurance industry, for example. Otherwise one speaks of ‘subjectively’ estimated risks, for example based on the perception of risk among certain groups of people. Between these poles there is a broad spectrum with many shades of gray with regard to plausibility considerations.
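The ‘objective risk’ described above can be illustrated with a minimal sketch. The hazard names, probabilities and damage figures below are purely hypothetical and serve only to show the arithmetic of multiplying probability of occurrence by expected scale of damage; they are not taken from any accident statistics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hazard:
    name: str
    probability: float  # hypothetical chance of occurrence per year, in [0, 1]
    damage: float       # hypothetical expected scale of damage, in euros


def objective_risk(hazard: Hazard) -> float:
    """Return probability of occurrence times expected scale of damage."""
    if not 0.0 <= hazard.probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return hazard.probability * hazard.damage


# Illustrative values only (assumptions, not empirical data).
hazards = [
    Hazard("parking dent", probability=0.05, damage=800.0),
    Hazard("highway collision", probability=0.001, damage=50_000.0),
]

risks = {h.name: objective_risk(h) for h in hazards}
for name, value in risks.items():
    print(f"{name}: {value:.2f} EUR per year")
```

With these assumed figures, the rare but severe event carries a slightly higher product than the frequent but minor one, which is exactly the kind of comparison that becomes impossible when probability or damage can only be estimated ‘subjectively’.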

The moment of the undesired is, at least in the societal realm, semantically inseparable from the concept of risk: As representing possible harm, risks are undesirable in themselves (footnote 1). Nevertheless, the evaluation of the possible consequences of actions as a risk or opportunity may be disputed [3]. One example is whether genetic modification of plants is perceived as a means of securing world nutrition or as a risk for humanity and the environment. The assessment depends on the expected or assumed degree of affectedness by the consequences, which in combination with the uncertainty of those consequences leaves some room for interpretation and thus all but invites controversy.

Finally, risks also have a social dimension: They are always a risk for someone. Many times, opportunities and risks are distributed differently among different groups of people. In extreme cases, the beneficiaries are not affected by possible harms at all, while those who bear the risks have no part in the expected benefits. So in deliberations regarding opportunities and risks, it is not sufficient to simply apply some abstract method such as a cost-benefit analysis on the macroeconomic level; rather, it is crucial also to consider who is affected by the opportunities and risks in which ways and whether the distribution is fair (Chap. 27).

We accept risks as the unintended consequences of actions and decisions or we impose them on others [4], or expose objects to them (such as the natural elements). When we speak of a societal risk constellation, we are referring to the relationship between groups of people such as decision-makers, regulators, stakeholders, affected parties, advisors, politicians and beneficiaries in view of the frequently controversial diagnoses of expected benefits and feared risks. Describing the societal risk constellations for autonomous driving is the primary objective of this chapter. It is crucial to distinguish between active and passive confrontation with the risks of autonomous driving:

1. Active: People assume risks in their actions; they accept them individually or collectively, consciously or unconsciously. Those who decide and act frequently risk something for themselves. A car company that views autonomous driving as a future field of business and invests massively in it risks losing its investment. The harm would primarily be borne by the company itself (shareholders, employees).
2. Passive: People are subjected to risks by the decisions of other people. Those affected are different from those who make the decisions. A risky driver risks not only his or her own health but also the lives and health of others.

Between these extremes, the following risk levels can be distinguished analytically: (1) Risks that the individual can decide to take or not, such as riding a motorcycle, extreme sports or, in the future, perhaps a space voyage; (2) imposed risks that the individual can reasonably easily avoid, such as potential health risks due to food additives that can, given appropriate labeling, be avoided by purchasing other foods; (3) imposed risks that can only be avoided with considerable effort, e.g. in cases such as the decision of where to locate a waste incineration site, radioactive waste storage site or chemical factories—it is theoretically possible to move to another location, but only at great cost; and (4) imposed risks that cannot be avoided such as the hole in the ozone layer, gradual pollution of groundwater, soil degradation, accumulation of harmful substances in the food chain, noise, airborne particles, etc.

The central risk-related ethical question is under which conditions assuming risks (in the active case) or imposing them on others (in the passive case) can be justified [5]. In many cases there is a difference between the actual acceptance of risks and the normatively expected acceptance, i.e. the acceptability of the risks [6, 7]. While acceptance rests with the individual, acceptability raises a host of ethical questions: Why and under what conditions can one legitimately impose risks on other people (Chap. 4)? The question gains yet more urgency when the potentially affected are neither informed of the risks nor have the chance to give their consent, e.g. in the case of risks for future generations. The objective of the ethics of risk [5] is a normative evaluation of the acceptability and justifiability of risks in light of the empirical knowledge about the type and severity of the harm, the probability of its occurrence and resilience (capacity for resistance) and in relation to a specific risk constellation (footnote 2).

2.2 Constellation Analysis for Risks

Conventional questions as to the type and severity of the risk associated with particular measures or new technologies are therefore too general and obscure salient differentiations. An adequate and careful discussion of risks must be conducted in a context-based and differentiated manner and should, as far as possible, answer the following questions with regard to autonomous driving:

- For whom can damages occur; which groups of people may be affected or suffer disadvantages?
- How are the possible risks distributed among different groups of people? Are those who bear the risks different groups than those which would benefit from autonomous driving?
- Who are the respective decision-makers? Do they decide on risks they would take themselves or do they impose risks on others?
- What types of harm are imaginable (health/life of people, property damage, effects on jobs, corporate reputations, etc.)?
- What is the plausibility or probability that the risks will occur? What does their occurrence depend on?
- How great is the potential scope of the risks of autonomous driving in terms of geographic reach and duration? Are there indirect risks, e.g. of systemic effects?
- Which actors are involved in risk analysis, risk communication and the evaluation of risks, and what perspectives do they bring to the table?
- What do we know about these constellations; with what degree of reliability do we know it; what uncertainties are involved; and which of those may not be possible to eliminate?

In this way, ‘the’ abstract risk can be broken down into a number of clearly definable risks in precise constellations whose legitimation and justifiability can then be discussed individually in concrete terms. The societal risks of autonomous driving are made transparent—one could say that a ‘map’ of the risks emerges, representing an initial step towards understanding the overall risk situation in this field. Different constellations involve different legitimation expectations and different ethical issues, e.g. in terms of rights to information and co-determination [5, 4], but also different measures in the societal handling of these risks.

3 Societal Risk Constellations for Autonomous Driving

Societal risk constellations for autonomous driving comprise various aspects of possible harms and disadvantages, different social dimensions and varying degrees of plausibility of the occurrence of possible harms.

3.1 Accident Risk Constellation

One of the expected advantages of autonomous driving is a major reduction in the number of traffic accidents and thus less harm to life, health and valuables [2] (Chap. 17). This reduction is a major ethical matter [9]. Nevertheless, it cannot be ruled out that due to technological defects or in situations for which the technology is not prepared, accidents may occur which are specific to autonomous driving and which would be unlikely to occur with a human driver. One example of this could be unforeseen situations which are unmanageable for the automation in a parking assistant (Chap. 2). Even if only minor dents were the result here, it would have clear legal and financial repercussions that would have to be addressed. These would be all the more complex the greater the accident damage, e.g. in the case of a severe accident with a highway auto-pilot (Chap. 2).

We are very familiar with auto accidents after more than 100 years of established automotive traffic. They involve one or a few vehicles, result in damage for a limited number of people and have limited financial effects. For this risk type of small-scale accidents, an extensive and refined system of emergency services, trauma medicine, liability law and insurance is in place. Individual accidents caused by autonomous vehicles can in large part presumably be handled within the existing system. However, the necessary further development of the legal framework is no trivial matter (see [10]).

Accident risks of this type affect the users of autonomous vehicles as passengers. Users decide for themselves whether they wish to subject themselves to the risk by either using or not using an autonomous vehicle. But the accident risks of autonomous driving can also affect other road users, including, naturally, those who do not participate in autonomous driving. One can theoretically avoid these risks by not taking part in road traffic—but that would be associated with significant limitations. Thus dealing with the risks of autonomous driving is a complex question of negotiation and regulation (Chaps. 4 and 25), in which not only the market, i.e. buying behavior, is decisive, but in which questions of the common welfare such as the potential endangerment of others and their protection must also be considered. This will require democratic and legally established procedures (registration procedures, authorities, checks, traffic regulations, product liability, etc.).

These risks and also their complex distribution are not new; indeed they are omnipresent in the practice of everyday traffic and also societally accepted. This is manifest in the fact that the currently more than 3000 annual traffic deaths in Germany, for example, do not lead to protests, rejection of car transportation or massive pressure for change. The latter component was different in the 1970s, when in West Germany alone over 20,000 annual traffic deaths were recorded despite traffic volumes roughly a tenth of what they are today. Measures primarily aimed at improving passive safety were enacted, leading to a significant reduction in the death rates. Decisive for any societal risk comparison of the previous system with a future system including autonomous driving will be a substantial reduction in the total number of traffic accidents and the damage caused by them (Chaps. 17 and 21). The fact that autonomous driving will not suddenly replace the current system but will presumably be gradually integrated into the traffic system is, against this backdrop, a mixed blessing. On the one hand, mixed systems with human drivers and autonomously guided vehicles will presumably be of significantly greater complexity and unpredictability than a system completely switched-over to autonomous driving. On the other hand, gradual introduction into the transportation system will provide the chance to learn from damage incidents and make improvements. The monitoring of damage incidents and cause analysis will be of critical importance here (Chap. 21).

If the expectation is borne out that human-caused accidents drop substantially with the introduction of autonomous driving, liability law will gain additional significance as the share (albeit not necessarily the absolute number) of technology-caused accidents rises (footnote 3). While today in most cases the cause of accidents is a human driver and therefore the liability insurance providers pay for damages, in accidents caused by an autonomous vehicle, the operator liability of the vehicle owner or the manufacturer’s product liability would apply (Chap. 26; see also [11, 10]).
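The distinction between the share and the absolute number of technology-caused accidents can be made concrete with a small worked example. All accident counts below are invented for illustration and are not forecasts; the function name is likewise hypothetical.

```python
def technology_share(human_caused: int, technology_caused: int) -> float:
    """Return the fraction of all accidents that are technology-caused."""
    total = human_caused + technology_caused
    if total == 0:
        raise ValueError("no accidents recorded")
    return technology_caused / total


# Hypothetical counts before and after a wide introduction of autonomous driving.
before = technology_share(human_caused=9_000, technology_caused=1_000)
after = technology_share(human_caused=1_500, technology_caused=500)

print(f"share before: {before:.0%}, share after: {after:.0%}")
```

In this assumed scenario the absolute number of technology-caused accidents halves (from 1,000 to 500), yet their share of all accidents rises, because human-caused accidents fall even faster. This is the situation in which product and operator liability would gain weight relative to driver liability.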

3.2 Transportation System Risk Constellation

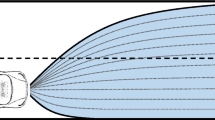

On the system level, autonomous driving promises greater efficiency, reduced congestion and (albeit presumably only marginally; see [2, 12]) better environmental performance (Chap. 16). In the traditional transportation system, systemic effects and thus also potential risks arise primarily due to the available traffic infrastructure and its bottlenecks in conjunction with individual driver behavior and traffic volume. Individual situations such as severe accidents or construction zones can lead to systemic effects like traffic jams. Autonomous driving adds another possible cause of systemic effects to the existing ones: the underlying technology. The following will consider only autonomous vehicles relying on an internet connection (not on-board autonomous).

Through the control software and the reliance on the internet (e.g. as part of the “internet of things”), new effects could emerge (Chap. 24). While in the automotive world to date vehicles are operated more or less independently of one another and mass phenomena only occur through the unplanned interactions of the individually guided vehicles, autonomously guided traffic will to some extent be connected through control centers and networking, e.g. to direct traffic optimally using interconnected auto-pilots (Chap. 2). The control of a large number of vehicles will in all likelihood be conducted through software that is identical in its fundamental structure, as the complexity and concentration of companies will presumably strongly limit the number of providers. This could, in theory, lead to the simultaneous breakdown or malfunctioning of a large number of vehicles based on the same software problem. These problems would then no longer necessarily be on a manageable scale in terms of geography, duration and scale of the damage as in the aforementioned accident cases, but could take on economically significant proportions.

Insofar as autonomous vehicles are connected to each other in a network via internet or some other technology, the system of autonomous driving can be regarded as a coordinated mega-system. Its complexity would be extremely high, not least because it would also have to integrate human-guided traffic. This is the typical constellation for systemic risks that are difficult or even impossible to foresee: the risks of complex technology and software could combine with unanticipated human actions to create unexpected system problems [4].

A completely different type of system problem, in this case not for on-board autonomous systems but only for networked vehicles, could be a sort of concealed centralization and an associated concentration of power. Since optimization on the system level can only be done through extra-regional control centers (Chap. 24), a certain degree of centralization on the control and management level is indispensable, all decentralization on the user side notwithstanding. This could give rise to concerns that the necessities engendered by the technology could lead to problematic societal consequences: a centrally controlled traffic system as the precursor to a centralist society whose lifeblood was a ‘mega-infrastructure’ beyond the control of the democratic order. But this is not a foreseeable societal risk of autonomous driving and is not by any means a necessary development in this regard. Rather, this is more a concern of the type that should be borne in mind as a potentially problematic issue during the establishment of autonomous driving so that, in the event of its emergence, countermeasures can be taken.

In all of these fields, the decision-makers are a complex web of automotive companies, software producers, political regulators and agencies with different responsibilities. It is possible that new actors may also become involved whose roles cannot be foreseen today. Decision-makers and those affected are largely distinct groups. And since road users and other citizens would have practically no means of avoiding such risks, it is essential that an open, society-wide debate on these matters take place in order to observe and assess the development with due care and bring in any political or regulatory measures that may be appropriate.

3.3 Investment Risk Constellation

Research and development of the technologies of autonomous driving are extremely elaborate and accordingly cost-intensive. Suppliers and automotive companies are already investing now, and considerable additional investments would be required before any introduction of autonomous driving. As with other investments, the business risk exists that the return on investment may not be on the expected scale or in the expected timeframe because autonomous driving fails, for whatever reason, to catch on at a large scale. If a company opts not to invest in the technology, by contrast, there is the risk that competitors will pursue autonomous driving and, if it succeeds, vastly increase their market share. Thus strategic business decisions have to be made in consideration of the different risks.

Upon first consideration this is a standard case of business management and a classic task for the management of a company. In view of the scale of the needed investments and the patience required for the development of autonomous driving, decisions made in this regard will have very long-term implications. Missteps would initially affect shareholders and employees of the respective companies, and if major crises were triggered by such failures the consequences could extend to the economy as a whole, for example in Germany with its heavy reliance on automotive companies. Although in principle a competitive situation, the scale of the economic challenge may speak in favor of forming strategic coalitions to design a joint standard base technology.

Even after a successful market launch, mishaps or even technology-related system effects (see above) can occur which could pose a major risk for the affected brands (Chaps. 21 and 28). One issue that merits special mention in this context is the risk factor posed by the complexity of the software required for autonomous driving. Complex software is impossible to test in its entirety, which means that in actual use unexpected problems can occur (see Chap. 21 on the testability and back-up of the system). In this sense, use is essentially another test phase, and the users are testers. This is already the case to some degree in the automotive world, with software problems a relatively frequent cause of breakdowns. Whether drivers will accept being used as ‘test subjects’ in a more expansive sense remains to be seen. Any such willingness would presumably be all but nonexistent in safety-related cases. When a computer crashes due to a software error, it is annoying. When an autonomous vehicle causes an accident due to a software error, it is unacceptable. In such cases—unlike with “normal” accidents—massive media attention could be expected.

In particular due to the power of images and their dissemination through the media, such incidents can still have a major impact even when the issues are easily correctable and the consequences minor. A good example of this is the notoriety of the “moose test”, which came to public attention through publication of an incident on a test course. Another example is the story of the sustained damage to the reputation of Audi on the American market that resulted from a report in the US media in 1986 (Chap. 28). Irrespective of the severity of the mishap, such incidents threaten to damage the company’s reputation and thus pose economic risks. Such risks are also present in the case of major recall actions, in which the substantial economic burden posed by the action itself is generally outweighed by the damage to the company’s reputation. The probability of such developments rises with the complexity of the required software, which thus emerges as a central aspect of the risks associated with autonomous driving.

3.4 Labor Market Risk Constellation

Technological and socio-technological transformation processes typically have labor market implications. Automation, in particular, is associated with concerns about the loss of jobs. The automation in production and manufacturing in the 1980s led to the loss of millions of jobs in Germany alone, primarily in the sectors dominated by simple manual tasks. A massive societal effort was required to prepare at least a portion of those affected by the job losses for new jobs through qualification and training. Today there are concerns that the next wave of automation could make even more sophisticated activities superfluous. On the upside, moves towards greater automation can lead to the emergence of new fields of activity and job opportunities, so the overall balance need not be negative. But these new fields of activity are generally only open to people with higher qualifications.

Comprehensive introduction of autonomous driving would undoubtedly affect the labor market. The primary losers would be drivers of vehicles which are currently manually operated: truck drivers, taxi drivers, employees of logistics and delivery companies, especially in the ‘Vehicle-on-Demand’ use case (Chap. 2). A mobility system completely converted to autonomous driving could in fact largely do without these jobs altogether. On the other side of the equation, new jobs in the control and monitoring of autonomous traffic could emerge, and highly qualified personnel would be needed in the development, testing and manufacture of the systems, particularly in the supplier industry.

Thus a similar scenario to the one described above for the previous waves of automation would emerge here as well: elimination of unskilled jobs and the creation of new, highly skilled positions. The net outcome in jobs is impossible to predict from this vantage point. What is clear is that the high number of potentially affected jobs makes it imperative to think about pro-active measures to deal with the development at an early stage, e.g. developing and providing qualification measures. As it may be presumed that the integration of autonomous driving into the current transportation system will occur gradually, and because there is a wealth of experience from previous automation processes, the prerequisites for an effective response should be in place. To identify developments that could be problematic for the labor market at an early stage, research and cooperation on the part of unions, employers and the employment agency to observe current developments are required.

3.5 Accessibility Risk Constellation

Autonomous driving promises greater accessibility for people with reduced mobility, such as the elderly. While this is clearly on the plus side from an ethical standpoint, it could also have social justice implications which, as ‘possible harms,’ could be regarded as risks. This includes primarily the occasionally voiced concern regarding increased costs of individual mobility due to the costs of autonomous driving. In a mixed system this concern could be countered with the argument that the traditional alternative of driving oneself still exists and thus that no change for the worse would occur.

This scenario would raise the question, however, of who would actually profit from the benefits of autonomous driving, primarily safety and convenience. If the sole effect of autonomous driving were simply to enable those who currently have the means to employ a chauffeur to dispense with their chauffeurs and even save money in the process in spite of the higher up-front and operating costs, this would certainly not promote greater accessibility to mobility in general. Indeed, it would be detrimental if a large share of those who currently drive themselves were unable to afford to participate in autonomous driving. When considering such problematic developments, it must be noted, first, that questions of distributive justice are not specific to autonomous driving; the just distribution of the benefits of new technologies is a fundamental question with regard to technological advancement. Second, these concerns remain speculative at present as no reliable cost information is available.

Social justice issues could arise in relation to the costs of any infrastructure to be established for autonomous driving [2, p. 12] [12]. If it were regarded, for example, as a public responsibility and were funded through tax moneys, non-users would also take part in financing autonomous driving. If the costs were borne by all drivers, non-users would also regard themselves as subsidizing autonomous driving. Depending on how high the infrastructure costs and thus the costs for individuals were, this could also give rise to social justice questions relating to the distribution of the costs.

3.6 Privacy Risk Constellations

Even today modern automobiles provide a wealth of data and leave electronic trails, e.g. by using a navigation aid or through data transmitted to the manufacturer. If autonomous vehicles were not on-board autonomous but had to be connected at all times, the electronic trail would amount to a complete movement profile [12]. Of course the movement profiles of vehicles do not necessarily have to be identical to the movement profiles of their users. In the case of vehicles on demand (Chap. 2) it is of course possible to imagine that they could be used anonymously if ordering and payment were conducted anonymously—which, however, seems rather inconsistent with the current development toward electronic booking and payment systems.

Movement profiles provide valuable information for intelligence services, which could for instance track the movements of regime opponents, but also for companies, which could use such information to create profiles for targeted advertising. In view of the increasing digitalization and connectedness of more and more areas of our social and personal lives, the specific additional digitalization in the field of autonomous driving would presumably represent just one element among many others. The problem—even today the end of privacy is declared with regularity—is immensely larger, as current debates about the NSA, industry 4.0 and Big Data show. The emergence of a converging mega-structure comprising the transportation system, supply of information, communication systems, energy systems and possibly other, currently independently functioning infrastructures, represents one of the biggest challenges of the coming years or even decades. The issue will be to re-define the boundaries between privacy and the public sphere and then actually implement those decisions. This must be done on the political level and is of fundamental significance for democracy as a concept, for absolute transparency is but the flip-side of a totalitarian system. Autonomous driving is presumably of only minor specific significance in this debate.

3.7 Dependency Risk Constellation

Modern societies are increasingly dependent on the smooth functioning of technology. This begins with the dependency on personal computers and cars and extends to the complete dependency on a functioning energy supply and worldwide data communication networks and data processing capabilities as prerequisites for the global economy. Infrastructures that are becoming more complex, such as the electricity supply, which increasingly has to deal with a fluctuating supply due to renewable energy sources, now more frequently engender ‘systemic risks’ [4] in which small causes can lead to system instability through complex chain reactions and positive feedback. The susceptibility to technical failure and random events, and also to terrorist attacks on the technological infrastructure of society (e.g. in the form of a cyber-terror attack on the information technology backbone of the globalized economy), is discussed with increasing frequency; it represents an unintended and undesirable, yet ultimately unavoidable consequence of accelerated technological advancement [1]. At issue are risks that emerge more or less gradually, which in many cases are not consciously accepted in the context of an explicit consideration of risk versus opportunity but instead only come to public attention when the dependency becomes tangible, such as during a lengthy blackout, which would incapacitate practically every area of public life [13].

In the case of a large-scale shift of mobility capacity to autonomous driving, a high proportion of society’s mobility needs would naturally depend on the functioning of this system. A breakdown would be manageable as long as there were enough people who could still operate the vehicles manually. In a system that allowed passenger vehicle traffic to switch from autonomous to manual, for example, this would be no problem. But if a large share of the logistics and freight traffic were switched over to autonomous systems, it would be implausible to maintain a sufficient pool of drivers for the case of a longer-term total breakdown of the system, not to mention the fact that the vehicles would have to be equipped to enable manual operation in the first place. If significant logistics chains were interrupted for a lengthy period due to a system failure, bottlenecks could quickly form, both in terms of supplying the population and maintaining production in the manufacturing industries [13]. This type of risk is nothing new: the massive dependence on a reliable energy supply that has been the object of much discussion since Germany’s energy transformation policy came into effect, as well as the dependence of the global economy on the internet, are structurally very similar.

Another form of dependence can arise from autonomous driving on the individual level through the atrophying of skills. Large-scale use of autonomous vehicles would result in the loss of driving practice. If the vehicles are then operated manually, e.g. on weekends or on vacation, the reduced driving practice—a certain dependence on autonomously guided vehicles—could result in lower skill levels, such as the ability to master unexpected situations. In the case of a total breakdown of the system, there would suddenly be large numbers of manually operated vehicles on the roads, in which case the reduced skill of the drivers could once again prove problematic. Moreover, there would presumably be major bottlenecks and traffic jams if the expected efficiency gains on the system level (see above) were suddenly to disappear.

Finally, the further digitalization and transfer of autonomy to technical systems would increase society’s vulnerability to intentional disruptions and external attacks. Autonomous driving could be targeted by terrorists or psychopaths, or in military scenarios (cyber warfare). Control centers could be hacked, malware installed, or even a system collapse triggered through malicious action. Defensive measures are of course necessary and technically feasible, but here we run into the notorious ‘tortoise and hare’ problem. Here again, this is not a problem specific to autonomous driving; it is familiar from the internet world and has arrived in the energy sector as well, for example.

3.8 On the Relationship Between Risk Constellation and Introduction Scenario

The risk constellations described here are based on qualitative and exploratory considerations from the current world of mobility. They therefore have a certain plausibility, but also contain speculative aspects. They should not be understood as predictions, but as guideposts that should be observed along the way to the research, development and introduction of autonomous driving. Indeed, they cannot be predictive because any future occurrence of particular risk constellations depends on the introduction scenario for autonomous driving and its specific characteristics, and both of these elements are unknown today. For example, the contours of the ‘privacy’ risk constellation will depend heavily on whether an on-board autonomous driving scenario is implemented or autonomous vehicles continuously need to be connected to the internet and control centers.

The public perception of the risk will depend largely on how autonomous driving is introduced. If it happens as part of a gradual automation of driving, the potential to learn gradually from the experiences gained along the way will greatly lessen the risk of a ‘scandalization’ of autonomous driving as a high-risk technology for passengers and bystanders. Steps on the way to further automation such as Traffic Jam Assist, Automated Valet Parking or Automated Highway Cruising (Chap. 2), the introduction of which seems likely in the coming years (Chap. 10), would presumably not lead to an increased perception of risk because they would seem a natural development along the incremental path of technological advancement.

Unlike switching on a nuclear reactor, for example, the process of increasing driver assistance towards greater automation has so far progressed gradually. The automatic transmission has been around for some decades, we are comfortable with ABS, ESP and parking assistants, and further steps towards a greater degree of driver assistance are in the works. Incremental introduction allows for a maximum degree of learning and would also enable gradual adaptation of the labor market, for example, or privacy concerns (see above).

In more revolutionary introduction scenarios (Chap. 10) other and likely more vexing challenges would present themselves for the prospective analysis and perception of risks. The public perception would then react especially sensitively to accidents or critical situations, the risk of ‘scandalization’ would be greater and the ‘investment’ risk constellation (see above) could develop into a real problem for individual suppliers or brands.

4 Relationship to Previous Risk Debates

Germany and other industrialized countries have extensive experience with acceptance and risk debates over many decades. This section will seek to draw insights from these previous risk communications and apply them to autonomous driving—insofar as this is possible in light of the very different risk constellations.

4.1 Experience from the Major Risk Debates

4.1.1 Nuclear Power

The risk constellations for nuclear energy arose principally from the fact that one side comprised the decision-makers from politics and the business community, supported by experts from the associated natural science and engineering disciplines. On the other side were the affected parties, including in particular residents in the vicinity of the nuclear power plants, the reprocessing plant and the planned nuclear waste repository in Gorleben, but also growing numbers of the German populace.

Nuclear power is a typical technology that is far removed from everyday experience. Although many people profit from the generated energy, the source of the energy is not visible, as evidenced by the old saying that “electricity comes from the electrical socket.” The possibility of a worst-case scenario with catastrophic damage potential like in Chernobyl and Fukushima; the fact that on account of those incidents no insurance company was or is willing to insure nuclear power plants; the extremely long-term danger posed by radioactive waste; all of these factors demonstrate that the risk constellation in the case of nuclear power is completely different from the one for autonomous driving. Only one point is instructive:

The early nuclear power debate in Germany was characterized by the arrogance of the experts, who at that time were almost entirely in favor of nuclear power, in the face of criticism. Concerns among the public were not taken seriously, and critics were portrayed as irrational, behind the times or ignorant [14]. In this way, trust was squandered in several areas: Trust in the network of experts, politicians and business leaders behind nuclear energy; trust in the democratic process; and trust in technical experts regarding commercial technologies in general. The risk debate on nuclear energy illustrates the importance of trust in institutions and people and how quickly it can be lost.

4.1.2 Green Biotechnology

The risk constellation in the debate over green biotechnology is interesting in a different way. In spite of a rhetorical focus on risks, for example through the release and uncontrolled spread of modified organisms, the actual topic here is less the concrete scale of the risk and more the distribution of risks and benefits. Unlike with red biotechnology, the end consumer in this case would have no apparent benefit from genetically modified food. In the best case, the genetically modified foods would not be worse than conventionally produced foods. The benefits would accrue primarily to the producing companies. In terms of potential health risks (e.g. allergies), however, the converse would be the case: they would affect users. That is naturally a bad trade-off for users: excluded from the benefits, but exposed to potential risks. This viewpoint could present a plausible explanation for the lack of acceptance and offer a lesson for autonomous driving, i.e. not to emphasize abstract benefits (e.g. for the economy or the environment) but to focus on the benefits to the ‘end customer’.

A second insight also emerges. The attempt by agribusiness companies, and in particular Monsanto, to impose green biotechnology in Europe only intensified the mistrust and opposition. In sensitive areas such as food, pressure and lobbying raise consumer consciousness and lead to mistrust. Attempting to ‘push through’ new technologies in fields that are close to everyday experience, which driving undoubtedly is, therefore seems like an inherently risky strategy.

4.1.3 Mobile Communication Technologies

Mobile technologies (mobile phones, mobile internet) are an interesting case of a technology introduction that is successful in spite of public risk discussions. Although there has been a lively risk discussion regarding electromagnetic fields (EMF), this has had no adverse effect on acceptance on the individual level. Only locations with transmission towers are the focus of ongoing protests and opposition. There is practically no opposition whatsoever to the technology in itself. The most obvious explanation is that the benefit is simply too great: not the benefit to the economy, but the individual benefit experienced by each user.

Even the serious and publicly discussed risks to privacy that have come to light in recent years due to spying by companies and intelligence services have had barely any impact on consumer behavior. Mobile telephony, online banking, e-commerce and the perpetually-online lifestyle enable the creation of eerily accurate profiles, and yet this does not prevent users from continuing to use these technologies and continuously volunteer private information in the process. It is plainly the immediate benefit that moves us to accept these risks even when we know and criticize them.

4.1.4 Nanotechnology

Ever since a risk debate about nanotechnology emerged some 15 years ago, the technology has been dogged by concerns that it could fall victim to a public perception disaster similar to the ones experienced by nuclear energy or genetically modified crops. The extremely wide-ranging, but entirely speculative concerns of the early days, which took the form of horror scenarios such as the loss of human control over the technology, have since disappeared from the debate without these effects coming into play. Through open debate, nanotechnology emerged from the speculative extremes between rapturous expectations and apocalyptic fears to become a ‘normal’ technology whose benefits reside primarily in new material characteristics achieved through the coating or incorporation of nanoparticles.

Here again there were fears of large-scale rejection of the technology. Calls by organizations such as the ETC Group and BUND for a strict interpretation of the precautionary principle, for example in the form of a moratorium on the use of nanoparticles in consumer products such as cosmetics or foods, gave voice to such concerns. Yet a fundamentalist hardening of the fronts did not occur despite the evident potential. One reason is presumably that communication of the risk proceeded much differently in this case than with nuclear technology and genetic engineering. While in those cases experts initially failed to take concerns and misgivings seriously, dismissing them as irrational and conveying a message of “we have everything under control,” the risk discussion concerning nanotechnology was and remains characterized by openness. Scientists and representatives of the business community have not denied that further research is still needed with regard to potential risks and that risks cannot be ruled out until the toxicology is more advanced. This helped build trust. The situation could be described somewhat paradoxically as follows: Because all sides have openly discussed the need for further research and potential risks, the debate has remained constructive. The gaps in the science regarding potential risks have been construed as a need for research rather than cause for demanding that products with nanomaterials be barred from the market. Rather than insisting on the absolute avoidance of risks (zero risk), trust that any risks will be dealt with responsibly has been fostered.

4.2 Conclusions for Autonomous Driving

Autonomous driving will be an everyday technology that is as closely connected to people’s lives as driving is today. That distinguishes it greatly from nuclear energy, while it shares this everyday-life quality with green biotechnology (through its use in food production) and mobile communication technology. From both of these risk debates we may extract the insight of how central the dimension of individual benefit is. While any such benefit from eating genetically modified food has scarcely even been advanced by its proponents, the individual benefits of mobile telephones and mobile internet access are readily evident. As soon as this benefit is demonstrably large, people are prepared to assume possible risks, which is entirely rational from an action theory standpoint. What is irrational is to assume risks when the benefits are not evident or would only accrue to other actors (e.g. Monsanto in the green biotechnology debate). In such cases a risk debate can have dramatic consequences and obliterate any chance of acceptance.

Another such knock-out scenario is the possibility of a massive catastrophe such as the ‘residual risk’ of the meltdown of a nuclear power facility. The use of nuclear power was decided on the political level, with little opportunity for outside influence. The situation was thus widely regarded as a passive risk situation in which people were exposed to potential harm by the decisions of others. Nothing can be gleaned directly from this scenario for autonomous driving as it would presumably be introduced in the familiar market context of conventional transportation and thus de facto depend on the acceptance of users from the outset. While it is possible to imagine other introduction scenarios (Chap. 10), state-mandated use of autonomous driving is all but unthinkable. The only indirect lesson from the history of nuclear energy is that expertocratic arrogance generates mistrust. An open discussion ‘between equals’, which is a lesson from the nanotechnology debate as well, is a key precondition for a constructive debate on technology in an open society.

These examples also show that talk of a German aversion to technology is a myth born of the experiences with nuclear energy and genetic engineering. All empirical studies show that otherwise, acceptance is very high across a large spectrum of technology (digital technologies, entertainment technology, new automotive technologies, new materials, etc.) [15]. This also applies to some technologies for which intensive risk debates have taken place in the past or are still ongoing (e.g. mobile telephones). The fact that nowadays questions regarding risks are immediately posed does not indicate hostility to technology; rather, it is a reflection of the experience of the ambivalent character of technology and the desire to obtain as much information as possible. Much that is commonly taken as evidence of hostility to technology has little to do with technology. The Stuttgart 21 protests were not about rejecting rail technology; protests against new runways at major airports are not about rejecting aviation technology; nor are protests against highways and bypasses about rejecting the technology of driving cars. Even the rejection of genetically modified crops and nuclear energy has much to do with non-technological factors: mistrust of multinational corporations like Monsanto and a lack of confidence in adequate control and monitoring (e.g. Tepco in Japan).

5 Strategies of Responsible Risk Management

Risk management must be adapted to the respective risk constellations and be conducted on the appropriate levels (public debates, legal regulations, politically legitimated regulation, business decisions, etc.). It is based on the description of the risk constellation, in-depth risk analyses in the respective fields and a societal risk assessment.

5.1 Risk Evaluation

The societal risk assessment is a complex process involving a variety of actors that can take on its own dynamics and lead to unforeseeable developments [4]. It starts with statements by especially visible actors (in the case of new technologies, frequently scientists and businesses on the one hand and pressure groups and environmental groups on the other) and leads to a gradual concentration of positions and to point-counterpoint situations in the mass media and the public debates represented by them. Individual incidents can tip public opinion or dramatically accelerate developments, as seen in the rapid shift of attitudes about nuclear energy following the reactor incidents in Chernobyl and Fukushima. That makes predictions extremely difficult. The following will venture a tentative statement on autonomous driving in consideration of the plausibility and the lessons drawn from the previous debates.

To begin with, it is important to note that comparisons are very important for risk assessments [6, 12, 4]. We evaluate new forms of risk by looking at them in relation to known forms of risk. Here we have a known risk constellation shared by autonomous driving and conventional driving that can and should be applied as a key benchmark for comparison (Chap. 28; see also Sect. 30.3 in this paper). If autonomous driving were to score significantly and unambiguously better in this comparison (i.e. greater safety, lower risk of accidents), this would be a very important factor in the societal risk assessment.

Moreover, the risks of autonomous driving appear to be relatively minor in many respects. Technology-related accidents would occur with a certain probability, which could be minimized, but not eliminated, in an intensive test phase. Unlike in the case of nuclear energy, for example, their consequences would be relatively minor in geographic and temporal terms (compare the half-lives of radioactive materials) as well as with respect to the number of involved people and the property damage. A worst-case scenario of catastrophic proportions does not apply.

Largely new is the digital networking potentially associated with the automation of driving, with all of its implications for possible systemic risks, vulnerabilities, privacy and surveillance. These will inevitably be major issues in any public debate on autonomous driving. But as these challenges are also present in a multitude of other fields (including, increasingly, non-autonomous driving), they would be unlikely to lead to risk concerns relating specifically to autonomous driving.

One special case is the business risk assessment by manufacturers (Sect. 3.3), both in terms of the return on investment and potential reputation problems due to accidents. Any such business assessment must work with extremely uncertain assumptions, for instance concerning the media’s willingness to play up scandals as well as the effect of any such scandals in themselves. Here misperceptions can arise in both directions: exaggerated fears and naive optimism. It is to be expected, in any event, that from the public perspective, scandals would be specifically attributed to a particular brand rather than to autonomous driving as an abstract concept. That may hold no consolation for the affected brand; but it does indicate—at least insofar as the benefits of autonomous driving are undisputed—that this type of problem would impact the competition between the manufacturers, but not autonomous driving as a technology in itself. And that is already the case for other technologies today.

Overall, the public risk constellation tends towards being unproblematic. It cannot be compared with genetic engineering or nuclear technology: there is no catastrophic scenario, the benefit is readily apparent, the introduction proceeds through a market and not by decree ‘from above’. And it would probably not occur overnight ‘at the flip of a switch,’ but gradually.

5.2 Risk and Acceptance

Acceptance (Chap. 29) cannot be ‘manufactured,’ as is sometimes expected, but can only ‘develop’ (or not). This ‘development’ depends on many factors, some of which can certainly be influenced. In rough terms, public as well as individual acceptance depend largely on perceptions of the benefits and risks. It is crucial that the expected benefits not be couched exclusively in abstract macroeconomic terms, but actually comprise concrete benefits for those who would use the new technologies. Also critical is the ability to influence whether or not one is exposed to risks. Acceptance is generally much easier when the individuals can choose for themselves (e.g. to go skiing or not) than when people are exposed to risks by external entities and are thus effectively subjected to the control of others.

Another important factor with respect to risk concerns is a (relatively) just and comprehensible distribution of benefits and risks (this was the main problem in the genetic engineering debate; see above). And it is absolutely essential that the involved institutions (manufacturers, operators, regulators, monitoring and control authorities) enjoy public trust and that the impression does not arise that there is an effort to ‘push the thing through’ on the backs of the affected with their concerns. To achieve this, communication about possible risks must be conducted in an environment of openness—nothing is more suspect from a mass-media standpoint than to assert that there are no risks and that everything is under control. Concerns and questions must be taken seriously and must not be dismissed a priori as irrational. All of this requires early and open communication with relevant civil society groups as well as in the mass media sphere, and where appropriate in the spirit of ‘participatory technology development.’

There is some reason to believe that for the acceptance of autonomous driving, expected benefits will outweigh concerns regarding risks. After all, conventional driving is an almost universally accepted technology in spite of over 3000 traffic deaths annually in Germany. In contrast with nuclear energy, for example, the scope of potential damage appears limited both geographically and in terms of time. Other societal risks (see above; e.g. surveillance) are more abstract in nature, while the expected benefits are, in part, very tangible. I would therefore turn the juxtaposition “abstract social gain - concrete human loss” (Chap. 27) on its head: abstract risks such as dependence on complex technologies or privacy problems versus concrete individual benefits in terms of safety and comfort. A focus on risks would therefore presumably miss the core of the challenge: the decisive factor seems to be the expected benefits.

That only applies, of course, because the risk assessment identified no dramatic results. Instead, the risk constellation for autonomous driving represents “business as usual” in the course of technological advancement—undoubtedly with its trade-offs and societal risks, but also with the opportunity to deal with them responsibly and civilly. In particular the presumably gradual introduction of autonomous driving and the resultant opportunity to learn and improve in conjunction with the absence of catastrophic-scale risks somewhat diminish the importance of risk questions in the further course of the debate on autonomous driving in relation to, say, labor market or social justice concerns. Rather than focusing on risk, it seems appropriate to regard the elements and options of autonomous driving as parts of an attractive mobility future with greater safety and efficiency, more social justice and more convenience/flexibility. Of course, there is no zero-risk scenario—but that has not been the case with conventional driving either.

Two major acceptance risks remain. One is the possibility of scandalization of problems in test drives or technical failures, perhaps in the context of accidents, through media reporting (recall the ‘moose test’). The consequences would be difficult to predict. But here again it must be noted that the moose test did not give rise to rejection of the technology of driving in itself. Presuming that expected benefits are indeed more important for acceptance than risk concerns, the problem would rather be that any reputational damage would affect the respective brands and not the technology of autonomous driving in itself.

The second big unknown is human psychology. Whether and to what degree people will entrust their lives and health to autonomous driving is an open question. There are no known cases of acceptance problems for other autonomous transportation systems such as subways or shuttle services. But railway vehicles are perceived differently than cars: in rail transport one is always driven rather than driving oneself; the respective systems are controlled centrally by the control system; and the complexity and the possibility of unforeseen events are in many situations considerably greater in automobile traffic than in rail transport.

Another aspect with regard to acceptance is that in conventional automobile transportation a well-developed culture of damage adjustment is in place through the traffic courts, appraisers and insurance companies that has reached a high degree of precision and reliability. Autonomous driving, by contrast, would pose new challenges to the damage adjustment system as the question of “Who caused the damage, man or machine?” would have to be answered in an unambiguous and legally unassailable manner. Acceptance of autonomous driving will therefore largely depend on the development of answers to these questions that are equal to the precision of today’s damage adjustment system (Chaps. 4 and 5).

5.3 Elements of Societal Risk Management

This diagnosis of the risk constellation for autonomous driving from the societal perspective suggests, indeed demands, the following risk management measures:

- Registration criteria for autonomous vehicles must be defined by the responsible authorities; with regard to safety standards, both fundamental ethical questions (how safe is safe enough?) and political aspects (e.g. distributive justice, data protection) must be taken into account and handled on the legislative/regulatory level as appropriate.

- Key will be legal regulation of how the possible small-scale accidents in autonomous driving are handled, in particular the damage adjustment processes. Product liability in particular will have to be resolved (Chaps. 25 and 26).

- Adaptation or expansion of the current traffic laws would have to be considered in this context.

- The change would affect driving schools with regard to the competences required for users of autonomous vehicles, and in particular for the switch from ‘manual’ to ‘autonomous’ mode and vice versa.

- Crucial for risk management are safety measures to ensure both that autonomously guided vehicles can safely stop in critical situations and that they provide passive protection for occupants in the event of an accident.

- From this perspective, dispensing with passive safety measures such as seatbelts and airbags would only be legitimate once sufficient positive experience had been gained with autonomous driving to justify such a move. This idea should therefore not be presented as a prospect too early in the process.

- The expected gradual introduction of the technologies of autonomous driving opens up a variety of possibilities for monitoring and ‘improving on the job.’ Their use in the form of new sensor and evaluation technologies should be an important element of the societal risk management scenario (Chaps. 17, 21 and 28).

- Risks for the labor market should be observed closely; if job losses are foreseeable, early action should be taken to arrange educational and re-training opportunities for those affected.

- Significant problems with data protection and privacy are to be expected if autonomous driving takes place within networked systems (although autonomous driving does not pose specific issues in this regard compared to other fields). Technical and legal measures (Chap. 24) should be introduced here to take account of the wide-ranging public debate on these issues (e.g. NSA, indiscriminate data collection).

- In terms of innovation and business policy, it is incumbent upon national governments to discuss with the automobile manufacturers adequate distribution of the business risks that would cause major economic damage in the event of adverse incidents, e.g. damage to a company’s reputation due to highly publicized breakdowns or accidents.

- As for risk management in relation to public communication and information policy, the aforementioned lessons from the debates on nuclear energy and nanotechnology are instructive with regard to the need to establish trust.

- Involving stakeholders is naturally important and decisive for the societal introduction, or ‘adoption,’ of autonomous driving. This would include principally the drivers’ associations which are so influential in Germany. In terms of public opinion, the mass media play a crucial role. Consumer protection is naturally also an important consideration.

- For modern technology development, it is critically important that it not be conducted entirely within a more or less closed world of engineers, scientists and managers, which is then obliged to plead for acceptance of its own developments after the fact. Instead, the development process (at least the parts which do not directly affect the competitive interests of the companies) should take place with a certain degree of openness. The aforementioned stakeholders should be involved in the development process itself and not just in the later market-launch phase. Ethical questions about how to deal with risks and legal questions regarding the distribution of responsibility should be examined in parallel with the technical development and publicly discussed.

Many of the challenges mentioned here are of a complex system type. In this respect, system research on various levels is especially important. Autonomous driving should not be regarded simply as a replacement of today’s vehicles by autonomously guided ones, but as an expression of new mobility concepts in a changing society.

Notes

1. In other areas, that is not always the case. For example, in some leadership positions a willingness to take risks is regarded as a strength, and in leisure activities such as computer games or sports, people often consciously seek risk.

2. The results of Fraedrich/Lenz [8, p. 50] show that potential beneficiaries consider not only individual but also societal risks.

3. This would, incidentally, also affect a major line of business in the insurance industry, third-party liability insurance.

References

acatech - Deutsche Akademie der Technikwissenschaften: Akzeptanz von Technik und Infrastrukturen. acatech Position Nr. 9, Berlin 2011. Available at: www.acatech.de/de/publikationen/publikationssuche/detail/artikel/akzeptanz-von-technik-und-infrastrukturen.html (accessed 7/29/2014)

Bechmann, G.: Die Beschreibung der Zukunft als Chance oder Risiko? Technikfolgenabschätzung – Theorie und Praxis 16 (1), 24-31 (2007)

Becker, U.: Mobilität und Verkehr. In: A. Grunwald (Ed.) Handbuch Technikethik, Metzler, Stuttgart (2013), pp. 332-337

Beiker, S. (2012): Legal Aspects of Autonomous Driving. In: Chen, L.K.; Quigley, S.K.; Felton, P.L.; Roberts, C.; Laidlaw, P. (Ed.): Driving the Future: The Legal Implications of Autonomous Vehicles. Santa Clara Review. Volume 52, Number 4. pp. 1145-1156. Available online at http://digitalcommons.law.scu.edu/lawreview/vol52/iss4/

Eugensson, A., Brännström, M., Frasher, D. et al.: Environmental, safety, legal, and societal implications of autonomous driving systems (2013). Available at: www-nrd.nhtsa.dot.gov/pdf/esv/esv23/23ESV-000467.PDF (accessed 7/29/2014)

Fraedrich, E., Lenz, B.: Autonomes Fahren - Mobilität und Auto in der Welt von morgen. Technikfolgenabschätzung - Theorie und Praxis 23(1), 46-53 (2014)

Gasser, T., Arzt, C., Ayoubi, M. et al.: Rechtsfolgen zunehmender Fahrzeugautomatisierung. In: Berichte der Bundesanstalt für Straßenwesen. Fahrzeugtechnik F 83, Bremerhaven (2012)

Gethmann, C. F., Mittelstraß, J.: Umweltstandards. GAIA 1, 16–25. (1992)

Grunwald, A.: Zur Rolle von Akzeptanz und Akzeptabilität von Technik bei der Bewältigung von Technikkonflikten. Technikfolgenabschätzung – Theorie und Praxis 14(3), 54-60 (2005)

Grunwald, A.: Technology assessment: concepts and methods. In: A. Meijers (ed.): Philosophy of Technology and Engineering Sciences. Vol. 9, pp. 1103–1146. Amsterdam, North Holland (2009)

Nida-Rümelin, J.: Risikoethik. In: Nida-Rümelin, J. (Ed.) Angewandte Ethik. Die Bereichsethiken und ihre theoretische Fundierung, pp. 863-887 Kröner, Stuttgart (1996)

Petermann, T., Bradke, H., Lüllmann, A., Poetzsch, M., Riehm, U. (2011): Was bei einem Blackout geschieht. Folgen eines langandauernden und großflächigen Stromausfalls. Berlin, Edition Sigma. Summary in English available at: https://www.tab-beim-bundestag.de/en/pdf/publications/summarys/TAB-Arbeitsbericht-ab141_Z.pdf (accessed 3/24/2016)

POST - Parliamentary Office of Science & Technology: Autonomous Road Vehicles. POSTNOTE 443, London (2013). Available at: www.parliament.uk/business/publications/research/briefing-papers/POST-PN-443/autonomous-road-vehicles (accessed 7/30/2014)

Radkau, J., Hahn, L.: Aufstieg und Fall der deutschen Atomwirtschaft. oekom verlag, München (2013)

Renn, O., Schweizer, P.-J., Dreyer, M., Klinke, A.: Risiko. Über den gesellschaftlichen Umgang mit Unsicherheit. oekom verlag, München (2008)

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, duplication, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, a link is provided to the Creative Commons license and any changes made are indicated.

The images or other third party material in this chapter are included in the work's Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work's Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this chapter

Cite this chapter

Grunwald, A. (2016). Societal Risk Constellations for Autonomous Driving. Analysis, Historical Context and Assessment. In: Maurer, M., Gerdes, J., Lenz, B., Winner, H. (eds) Autonomous Driving. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-48847-8_30

DOI: https://doi.org/10.1007/978-3-662-48847-8_30

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-48845-4

Online ISBN: 978-3-662-48847-8

eBook Packages: Engineering, Engineering (R0)