Abstract

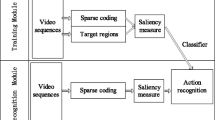

This paper presents a spatio-temporal coding technique for video sequences. The framework is based on a space-time extension of the scale-invariant feature transform (SIFT) combined with locality-constrained linear coding (LLC). The coding scheme projects each spatio-temporal descriptor into its local coordinate system, and the final representation is produced by max pooling. The extension is evaluated on human action classification tasks. Experiments with the KTH, Weizmann, UCF sports and Hollywood datasets indicate that the approach produces results comparable to the state of the art.
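The coding and pooling steps named in the abstract can be illustrated with a minimal sketch. It assumes the approximated form of LLC (each descriptor is reconstructed from its k nearest codebook atoms under a sum-to-one constraint) and uses random vectors as stand-ins for space-time SIFT descriptors. The function names, the codebook size, the descriptor dimension, and the values of `k` and `reg` are all illustrative assumptions, not settings taken from the paper.

```python
import numpy as np


def llc_encode(descriptors, codebook, k=5, reg=1e-4):
    """Approximated LLC: code each descriptor over its k nearest atoms.

    descriptors: (n, d) array; codebook: (m, d) array.
    Returns an (n, m) array with at most k non-zero entries per row.
    """
    n, m = descriptors.shape[0], codebook.shape[0]
    # Squared Euclidean distance between every descriptor and every atom.
    d2 = (
        (descriptors ** 2).sum(axis=1)[:, None]
        - 2.0 * descriptors @ codebook.T
        + (codebook ** 2).sum(axis=1)[None, :]
    )
    nearest = np.argsort(d2, axis=1)[:, :k]
    codes = np.zeros((n, m))
    for i in range(n):
        idx = nearest[i]
        # Shift the selected atoms so the descriptor sits at the origin.
        z = codebook[idx] - descriptors[i]
        cov = z @ z.T
        # Trace-scaled regularisation keeps the linear system well conditioned.
        cov += reg * max(np.trace(cov), 1e-12) * np.eye(k)
        w = np.linalg.solve(cov, np.ones(k))
        codes[i, idx] = w / w.sum()  # enforce the sum-to-one constraint
    return codes


def max_pool(codes):
    """Pool per-descriptor codes into one vector (column-wise maximum)."""
    return codes.max(axis=0)


# Hypothetical data: 100 descriptors of dimension 16, codebook of 32 atoms.
rng = np.random.default_rng(0)
codebook = rng.normal(size=(32, 16))
descriptors = rng.normal(size=(100, 16))
codes = llc_encode(descriptors, codebook, k=5)
video_vector = max_pool(codes)
```

In this sketch `video_vector` has one entry per codebook atom and would be the input to a classifier. Whether pooling is done over the whole clip or over space-time sub-volumes is a design choice the abstract does not specify.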

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Al Ghamdi, M., Al Harbi, N., Gotoh, Y. (2012). Spatio-temporal Video Representation with Locality-Constrained Linear Coding. In: Fusiello, A., Murino, V., Cucchiara, R. (eds) Computer Vision – ECCV 2012. Workshops and Demonstrations. ECCV 2012. Lecture Notes in Computer Science, vol 7585. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-33885-4_11

DOI: https://doi.org/10.1007/978-3-642-33885-4_11

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-33884-7

Online ISBN: 978-3-642-33885-4

eBook Packages: Computer Science, Computer Science (R0)