Abstract

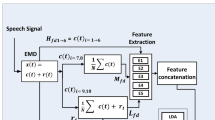

Research in human-computer interaction has increasingly addressed the integration of some form of emotional intelligence. Human emotions are expressed through different modalities such as speech, facial expressions, and hand or body gestures; the classification of human emotions should therefore be treated as a multimodal pattern recognition problem. The aim of this paper is to investigate multiple classifier systems that use audio and visual features to classify human emotional states. To this end, a variety of features have been derived. From the audio signal, the fundamental frequency, LPC and MFCC coefficients, and RASTA-PLP features have been used. In addition, two types of visual features have been computed, namely form and motion features of intermediate complexity. The numerical evaluation has been performed on the four emotional labels Arousal, Expectancy, Power, and Valence as defined in the AVEC data set. Multiple classifier systems are applied as classifier architectures; these have been proven to be accurate and robust against missing and noisy data.
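The abstract does not specify how the outputs of the individual classifiers are combined. As a rough illustration of why a multiple classifier system can tolerate a missing modality, the following sketch averages class-probability vectors over whichever modalities produced an output. It is a generic late-fusion example and not the authors' method; the function names `fuse_probabilities` and `decide`, the averaging rule, and the two-class setup per label are all assumptions made for illustration.

```python
from typing import List, Optional, Sequence


def fuse_probabilities(
    modality_probs: Sequence[Optional[Sequence[float]]],
) -> Optional[List[float]]:
    """Average class-probability vectors over the available modalities.

    Hypothetical helper: a modality with missing data (for example, no
    detected face in a video frame) is passed as None and simply skipped.
    """
    available = [p for p in modality_probs if p is not None]
    if not available:
        return None  # no modality produced an output

    n_classes = len(available[0])
    if any(len(p) != n_classes for p in available):
        raise ValueError("all probability vectors must have the same length")

    # Unweighted mean per class (an assumed fusion rule).
    return [
        sum(p[k] for p in available) / len(available)
        for k in range(n_classes)
    ]


def decide(probs: Sequence[float]) -> int:
    """Return the index of the most probable class."""
    return max(range(len(probs)), key=lambda k: probs[k])


# Example: audio and video classifiers disagree on one label (assumed
# two classes, low/high); the fused vector settles the decision.
audio = [0.8, 0.2]
video = [0.4, 0.6]
fused = fuse_probabilities([audio, video])
label = decide(fused)

# If the video channel is missing, fusion falls back to audio alone.
fused_audio_only = fuse_probabilities([audio, None])
```

Under this assumed rule, a missing channel reduces the number of votes but does not prevent a decision, which is one simple way the robustness described in the abstract can arise.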

Copyright information

© 2011 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Glodek, M. et al. (2011). Multiple Classifier Systems for the Classification of Audio-Visual Emotional States. In: D’Mello, S., Graesser, A., Schuller, B., Martin, JC. (eds) Affective Computing and Intelligent Interaction. ACII 2011. Lecture Notes in Computer Science, vol 6975. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-24571-8_47

DOI: https://doi.org/10.1007/978-3-642-24571-8_47

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-24570-1

Online ISBN: 978-3-642-24571-8

eBook Packages: Computer Science, Computer Science (R0)