Abstract

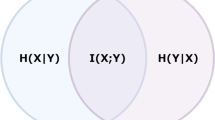

Feature selection methods are widely employed to improve classification accuracy by removing redundant and noisy features. However, removing terms from documents may damage the integrity of their content. To bridge the gap between document integrity and classification performance, we propose a novel two-step classification method. First, we select index terms and expansion terms through Maximum-Relevance and Minimum-Redundancy Analysis (MR2A). Then we combine the predictive power of the index terms and expansion terms via Concept Similarity Mapping (CSM). Experiments on the 20Newsgroups and SOGOU datasets are carried out with different classifiers. The experimental results show that both CSM and MR2A outperform the baseline methods, Information Gain and Chi-square.
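
The abstract does not spell out how MR2A scores terms, so the following is only a rough illustration of the general max-relevance, min-redundancy idea that the method's name refers to. It is a generic greedy selection based on mutual information, not the authors' MR2A, and it does not cover CSM. The function names, the toy term columns, and the labels are all hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information (in bits) between two equal-length discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log2(p(x,y) / (p(x) * p(y))), written with raw counts
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi


def mrmr_select(columns, labels, k):
    """Greedy max-relevance, min-redundancy selection (generic sketch).

    columns: dict mapping term -> list of discrete values, one per document
    labels:  list of class labels, one per document
    Returns up to k terms in the order they were selected.
    """
    relevance = {t: mutual_information(col, labels) for t, col in columns.items()}
    selected = []
    remaining = set(columns)
    while remaining and len(selected) < k:
        def score(term):
            if not selected:
                return relevance[term]
            # Mean mutual information with the terms already chosen
            redundancy = sum(
                mutual_information(columns[term], columns[s]) for s in selected
            ) / len(selected)
            return relevance[term] - redundancy

        # Sorting makes tie-breaking deterministic (alphabetical)
        best = max(sorted(remaining), key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical toy data: binary term occurrence in ten documents, two classes.
docs = {
    "goal":  [1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    "score": [1, 1, 1, 1, 0, 0, 0, 0, 0, 0],  # copy of "goal", fully redundant
    "vote":  [0, 0, 0, 0, 0, 1, 1, 1, 0, 0],
    "the":   [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],  # occurs everywhere, uninformative
}
labels = ["sport"] * 5 + ["politics"] * 5

selected = mrmr_select(docs, labels, 2)
print(selected)
```

On this toy input the redundancy penalty pushes the duplicate term "score" below "vote", even though "score" on its own is as relevant to the labels as "goal". A pure relevance ranking would treat the two duplicates as equally good.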

Copyright information

© 2011 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Fu, X., Liu, L., Gong, T., Tao, L. (2011). Improving Text Classification with Concept Index Terms and Expansion Terms. In: Liu, D., Zhang, H., Polycarpou, M., Alippi, C., He, H. (eds) Advances in Neural Networks – ISNN 2011. ISNN 2011. Lecture Notes in Computer Science, vol 6677. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-21111-9_55

DOI: https://doi.org/10.1007/978-3-642-21111-9_55

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-21110-2

Online ISBN: 978-3-642-21111-9

eBook Packages: Computer Science; Computer Science (R0)