Abstract

Juraj Hromkovič discusses in his book Algorithmic Adventures [4] the notion of infinity. The title of Chap. 3 is Infinity Is Not Equal to Infinity, or Why Infinity Is Infinitely Important in Computer Science. Infinity is indeed important in mathematics and computer science. However, a computer is a finite machine. It can represent only a finite set of numbers and an algorithm cannot run forever. Nevertheless we are able to deal with infinity and compute limits on the computer as is shown in this article.

1 Infinity Is Man-Made

The popular use of infinity describes something that never ends. An ocean may be said to be infinitely large. The sky is so vast that one might regard it as infinite. The number of stars we see in the sky seems to be infinite. And time passes by; it never stops, it runs on forever. Christians end the Lord’s Prayer mentioning eternity, never stopping time:

For thine is the kingdom, the power, and the glory, for ever and ever.

However, we know today that everything we experience is finite. One liter of water contains \(3.343\times 10^{25}\) molecules of \(H_2O\), or \(1.003\times 10^{26}\) hydrogen and oxygen atoms (according to Wolfram Alpha). Every human is composed of a finite number of molecules, though it may be difficult to actually count them. In principle one could also count the finite number of atoms which form our planet.

The following quotation is attributed to Albert Einstein (though there is no proof for this):

“Two things are infinite: the universe and human stupidity; and I’m not sure about the universe.”

Today scientists tend to share the doubt that Einstein expresses here. Wolfram Alpha estimates that the universe contains \(10^{80}\) atoms.

Thus we have to conclude:

Infinity does not exist in nature – it is man-made.

2 Infinity in Mathematics

The Encyclopaedia Britannica defines

Infinity, the concept of something that is unlimited, endless, without bound. The common symbol for infinity, \(\infty \), was invented by the English mathematician John Wallis in 1657. Three main types of infinity may be distinguished: the mathematical, the physical, and the metaphysical. Mathematical infinities occur, for instance, as the number of points on a continuous line or as the size of the endless sequence of counting numbers: \(1, 2, 3, \ldots \). Spatial and temporal concepts of infinity occur in physics when one asks if there are infinitely many stars or if the universe will last forever. In a metaphysical discussion of God or the Absolute, there are questions of whether an ultimate entity must be infinite and whether lesser things could be infinite as well.

The notion of infinity in mathematics is not only very useful but also necessary. Calculus would not exist without the concept of limit computations. And this has consequences in physics. Without defining and computing limits we would e.g. not be able to define velocity and acceleration.

The model for a set with countably infinitely many elements is the set of natural numbers \(\mathbb{N}\). Juraj Hromkovič discusses in [4] the well-known fact that the set of all rational numbers \(\mathbb{Q}\) has the same cardinality as \(\mathbb{N}\), thus \(|\mathbb{Q}| = |\mathbb{N}|\). On the other hand there are more real numbers even in the interval [0, 1], thus \(|[0,1]| > |\mathbb{N}|\). Juraj Hromkovič also points in [4] to the known fact that the real numbers are uncountable and that there are at least two infinite sets of different sizes.

We shall not discuss the difference in size of these infinite sets of numbers, rather we will concentrate in the following on computing limits.

3 Infinite Series

Infinite series \( \sum \limits _{k=1}^\infty a_k \) occur frequently in mathematics, and one is interested in whether the partial sums

\[ s_n = \sum_{k=1}^{n} a_k \]

converge to a limit or not.

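It is well known that the harmonic series

\[ \sum_{k=1}^{\infty} \frac{1}{k} = 1 + \frac{1}{2} + \frac{1}{3} + \cdots \]

diverges. We can make this plausible by the following argument. We start with the geometric series and integrate:

\[ \frac{1}{1-z} = 1 + z + z^2 + \cdots \quad \Longrightarrow \quad -\log (1-z) = z + \frac{z^2}{2} + \frac{z^3}{3} + \cdots , \qquad |z|<1. \]

If we let \(z\rightarrow 1\) then the right hand side tends to the harmonic series, while the left hand side \(-\log (1-z)\rightarrow \infty \). This suggests that the harmonic series diverges.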

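By dividing the last equation by z we obtain

\[ -\frac{\log (1-z)}{z} = 1 + \frac{z}{2} + \frac{z^2}{3} + \cdots \]

and by integrating we get the dilogarithm function

\[ \mathrm{Li}_2(z) = -\int _0^z \frac{\log (1-u)}{u}\, du = z + \frac{z^2}{2^2} + \frac{z^3}{3^2} + \cdots \]

For \(z=1\) we get the well-known series of the reciprocal squares

\[ \sum_{k=1}^{\infty} \frac{1}{k^2} = \frac{\pi ^2}{6}. \]

This series is also the value of the \(\zeta \)-function

\[ \zeta (z) = \sum_{k=1}^{\infty} \frac{1}{k^z} \]

for \(z=2\). By dividing the dilogarithm function by z and integrating we get

\[ \int _0^z \frac{\mathrm{Li}_2(u)}{u}\, du = z + \frac{z^2}{2^3} + \frac{z^3}{3^3} + \cdots , \]

whose value for \(z=1\) is \(\zeta (3)\).

We can compute the numerical value for \(\zeta (3)\) but a nice result as for \(\zeta (2)\) is still not known.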

4 Infinity and Numerical Computations

4.1 Straightforward Computation Fails

Consider again the harmonic series. The terms converge to zero, and when summed in floating point arithmetic such a series converges! The following program sums up the terms of the series until the next term is so small that it no longer changes the partial sum s.
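A minimal Python sketch of such a loop (not the author's program) is given here. To make it terminate in a fraction of a second, it assumes an emulated IEEE half precision format, obtained by rounding every intermediate result with the standard `struct` module; the helper names `fl16` and `harmonic_until_stagnation` are illustrative. In double precision the same loop would stagnate only near \(n=10^{15}\), as discussed next.

```python
import struct

def fl16(x):
    """Round a Python float to the nearest IEEE half precision number."""
    return struct.unpack("e", struct.pack("e", x))[0]

def harmonic_until_stagnation(fl):
    """Add the terms 1/k, rounding with fl, until the sum stops changing."""
    s = fl(1.0)   # partial sum, starting with the first term 1/1
    n = 1         # number of terms added so far
    while True:
        term = fl(1.0 / (n + 1))
        s_new = fl(s + term)
        if s_new == s:          # the next term no longer changes s
            return s, n
        s, n = s_new, n + 1

s, n = harmonic_until_stagnation(fl16)
print(f"half precision: the sum stagnates at s = {s} after n = {n} terms")
```

Passing a different rounding function (for example one based on the `"f"` format for single precision) changes only the point at which the sum stagnates, not the effect itself.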

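If we let this program run on a laptop, we would have to wait a long time until it terminates. In fact it is known (see [5], p. 556) that

\[ s_n = \sum_{k=1}^{n} \frac{1}{k} = \log (n) + \gamma + \frac{1}{2n} - \frac{1}{12n^2} + O\!\left( \frac{1}{n^4}\right) \]

where \(\gamma = 0.57721566490153286\ldots \) is the Euler-Mascheroni Constant.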

For \(n=10^{15}\) we get \(s_n \approx \log (n) +\gamma = 35.116\) and numerically in IEEE arithmetic we have \(s_n+1/n=s_n\). So the harmonic series converges on the computer to \(s\approx 35\).

My laptop needs 4 s to compute the partial sum \(s_n\) for \(n=10^9\). To go to \(n=10^{15}\) the computing time would be \( T = 4\cdot 10^6\) s or about 46 days! Furthermore the result would be affected by rounding errors.

Stammbach makes in [7] similar estimates and concludes:

The example is instructive: even in the very simple case of the harmonic series, the computer is not able to really do justice to the results of mathematics. And it never will be, because even the use of a computer that is a million times faster helps little in this direction.

If one uses the straightforward approach, he is indeed right with his critical opinion towards computers. However, it is well-known that the usual textbook formulas often cannot be used on the computer without a careful analysis as they may be unstable and/or the results may suffer from rounding errors. Already Forsythe [2] noticed that even the popular formula for the solutions of a quadratic equation has to be modified for the use in finite arithmetic. It is the task of numerical analysts to develop robust algorithms which work well on computers.

Let’s consider also the series of the inverse squares:

\[ \sum_{k=1}^{\infty} \frac{1}{k^2} = \zeta (2) = \frac{\pi ^2}{6}. \]

Here the straightforward summation works in reasonable time, since the terms converge rapidly to zero. We can sum up until the terms become negligible compared with the partial sum:

The program terminates on my laptop in 0.4 s with \(n=94'906'266\) and \(s=1.644934057834575\). It is well known that numerically it is preferable to sum backwards starting with the smaller terms first. Doing so, with

we get another value \(s=1.644934056311514\). Do the rounding errors affect both results so much? Let’s compare the results using Maple with more precision:

The result is the same as with the backwards summation. But we are far away from the limit value:

\[ \frac{\pi ^2}{6} = 1.644934066848226\ldots \]

Thus this series cannot be summed up in a straightforward fashion either. On the computer it converges too early to a wrong limit.
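The effect can be reproduced quickly with the following Python sketch. It is not the author's code: it assumes emulated single precision (every term and every partial sum is rounded with the `struct` format `"f"`), so that stagnation occurs after a few thousand terms instead of about \(9.5\cdot 10^7\). The names `fl32`, `forward_sum` and `backward_sum` are illustrative.

```python
import math
import struct

def fl32(x):
    """Round a Python float to the nearest IEEE single precision number."""
    return struct.unpack("f", struct.pack("f", x))[0]

def forward_sum():
    """Sum 1/k^2 for k = 1, 2, ... until the rounded sum stops changing."""
    s, n = fl32(1.0), 1
    while True:
        term = fl32(1.0 / ((n + 1) * (n + 1)))
        s_new = fl32(s + term)
        if s_new == s:
            return s, n
        s, n = s_new, n + 1

def backward_sum(n):
    """Sum the same n terms starting with the smallest one."""
    s = 0.0
    for k in range(n, 0, -1):
        s = fl32(s + fl32(1.0 / (k * k)))
    return s

s_fwd, n = forward_sum()
s_bwd = backward_sum(n)
# Reference: the same partial sum, accumulated accurately in double precision.
s_ref = math.fsum(1.0 / (k * k) for k in range(1, n + 1))
limit = math.pi ** 2 / 6

print("n                 :", n)
print("forward  - s_ref  :", s_fwd - s_ref)
print("backward - s_ref  :", s_bwd - s_ref)
print("limit    - s_ref  :", limit - s_ref)
```

The last printed line shows the point of the paragraph above: even an accurately summed partial sum is still about \(1/n\) away from the limit when the forward loop stops.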

Numerical analysts have developed summation methods for accelerating convergence. In the following we shall discuss some of these techniques: Aitken acceleration, extrapolation and the \(\varepsilon \)-algorithm.

4.2 Aitken’s \(\varDelta ^2\)-Acceleration

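Let us first consider Aitken’s \(\varDelta ^2\)-Acceleration [3]. Let \(\{x_k \}\) be a sequence which converges linearly to a limit s. This means that for the error \(x_k -s\) we have

\[ \lim _{k\rightarrow \infty } \frac{x_{k+1}-s}{x_k-s} = \rho , \qquad 0< |\rho | < 1. \]

Thus asymptotically

\[ x_{n+1}-s \sim \rho \, (x_n -s) \]

or when solved for \(x_n\) we get a model of the asymptotic behavior of the sequence:

\[ x_n \sim s + C\rho ^n. \]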

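If we replace “\(\sim \)” by “\(=\)” and write the last equation for \(n-2,n-1\) and n, we obtain a system of three equations which we can solve for \(\rho \), C and s. Solving with Maple, we get in particular

\[ \rho = \frac{x_n - x_{n-1}}{x_{n-1}-x_{n-2}}, \qquad s = \frac{x_n x_{n-2} - x_{n-1}^2}{x_n - 2x_{n-1} + x_{n-2}}. \]

Forming the new sequence \(x'_{n}=s\) and rearranging, we get

\[ x'_n = x_{n-2} - \frac{(x_{n-1}-x_{n-2})^2}{x_n - 2x_{n-1} + x_{n-2}} \]

which is called Aitken’s \(\varDelta ^2\)-Acceleration. The hope is that the new sequence \(\{x'_{n}\}\) converges faster to the limit.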

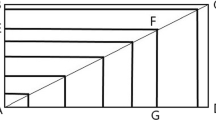

Assuming that \(\{x'_{n}\}\) converges also linearly we can iterate this transformation and end up with a triangular scheme.

Let’s apply this acceleration to compute the Euler-Mascheroni Constant \(\gamma \). First we generate a sequence of \(K+1\) partial sums

Then we program the function for the iterated Aitken-Scheme:

Now we can call the main program

We get

The Aitken extrapolation gives 7 correct decimal digits of \(\gamma \) using only the first 256 terms of the series.
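The listings for this experiment are not available here, so the following Python sketch is only an illustration of the iterated Aitken scheme. It assumes the sequence \(x_k = s_{2^k} - \log (2^k)\), \(k=0,\ldots ,8\), where \(s_m\) is the m-th partial sum of the harmonic series; this choice is inferred from the 256 terms and the factor \(\rho \approx 0.5\) mentioned in the text. The function names are hypothetical.

```python
import math

def aitken_step(x):
    """One pass of Aitken's Delta^2 process; the result is two entries shorter."""
    out = []
    for n in range(2, len(x)):
        d1 = x[n - 1] - x[n - 2]
        d2 = x[n] - 2.0 * x[n - 1] + x[n - 2]
        if d2 == 0.0:
            out.append(x[n])        # differences vanished: keep the iterate
        else:
            out.append(x[n - 2] - d1 * d1 / d2)
    return out

def iterated_aitken(x, passes):
    """Columns of the triangular scheme: column j holds x after j passes."""
    columns = [list(x)]
    for _ in range(passes):
        if len(columns[-1]) < 3:
            break
        columns.append(aitken_step(columns[-1]))
    return columns

GAMMA = 0.57721566490153286  # value quoted in the text

K = 8
x = []
for k in range(K + 1):
    m = 2 ** k
    s_m = math.fsum(1.0 / j for j in range(1, m + 1))  # harmonic partial sum
    x.append(s_m - math.log(m))                        # assumed sequence x_k

cols = iterated_aitken(x, passes=2)
for j, col in enumerate(cols):
    print(f"after {j} passes: {col[-1]:.12f}   error {abs(col[-1] - GAMMA):.2e}")
```

Each pass consumes two entries of the previous column, so with nine starting values at most four passes are possible; the sketch stops after two to stay well away from the level where rounding errors dominate the differences.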

The convergence of the sequence \(\{x_k\}\) is linear with the factor \(\rho \approx 0.5\) as we can see by computing the quotients \((x_{k+1}-\gamma )/(x_{k}-\gamma )\)

4.3 Extrapolation

We follow here the theory given in [3]. Extrapolation is used to compute limits. Let h be a discretization parameter and T(h) an approximation of an unknown quantity \(a_0\) with the following property:

\[ \lim _{h\rightarrow 0} T(h) = a_0. \]

The usual assumption is that T(0) is difficult to compute – maybe numerically unstable or requiring infinitely many operations. If we compute some function values \(T(h_i)\) for \(h_i>0\), \(i=0,1,\ldots ,n\) and construct the interpolation polynomial \(P_n(x)\) then \(P_n(0)\) will be an approximation for \(a_0\).

If a limit \(a_0=\lim _{m\rightarrow \infty } s_m\) is to be computed then, using the transformation \(h=1/m\) and \(T(h)=s_m\), the problem is reduced to \(\lim _{h\rightarrow 0} T(h)=a_0\).

To compute the sequence \(\{P_n(0)\}\) for \(n=0,1,2, \ldots \) it is best to use Aitken-Neville interpolation (see [3]). The hope is that the diagonal of the Aitken-Neville scheme will converge to \(a_0\). This is indeed the case if there exists an asymptotic expansion for T(h) of the form

\[ T(h) = a_0 + a_1 h + a_2 h^2 + \cdots + a_k h^k + R_k(h), \qquad |R_k(h)| < C_k h^{k+1}, \tag{3} \]

and if the sequence \(\{h_i\}\) is chosen such that

\[ h_{i+1} < c\, h_i \quad \text{with some } 0< c < 1, \]

i.e. if the sequence \(\{h_i\}\) converges sufficiently rapidly to zero. In this case, the diagonals of the Aitken-Neville scheme converge faster to \(a_0\) than the columns, see [1].

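Since we extrapolate for \(x=0\) the recurrence for computing the Aitken-Neville scheme, starting with \(T_{i0}=T(h_i)\), simplifies to

\[ T_{ij} = \frac{h_{i-j}\, T_{i,j-1} - h_i\, T_{i-1,j-1}}{h_{i-j}-h_i}. \tag{4} \]

Furthermore if we choose the special sequence

\[ h_i = h_0\, 2^{-i} \tag{5} \]

then the recurrence becomes

\[ T_{ij} = \frac{2^j\, T_{i,j-1} - T_{i-1,j-1}}{2^j-1}. \tag{6} \]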

Note that this scheme can also be interpreted as an algorithm for elimination of lower order error terms by taking the appropriate linear combinations. This process is called Richardson Extrapolation and is the same as Aitken–Neville extrapolation.

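Consider the expansion

\[ T(h) = a_0 + a_2 h^2 + a_4 h^4 + a_6 h^6 + \cdots \]

Forming the quantities

\[ T_{11} = T(h), \qquad T_{21} = T\!\left( \frac{h}{2}\right) , \qquad T_{31} = T\!\left( \frac{h}{4}\right) , \]

we obtain

\[ T_{22} = \frac{4T_{21}-T_{11}}{3} = a_0 - \frac{1}{4} a_4 h^4 - \frac{5}{16} a_6 h^6 + \cdots , \qquad T_{32} = \frac{4T_{31}-T_{21}}{3} = a_0 - \frac{1}{64} a_4 h^4 - \frac{5}{1024} a_6 h^6 + \cdots \]

Thus we have eliminated the term with \( h^2 \). Continuing with the linear combination

\[ T_{33} = \frac{16\, T_{32}-T_{22}}{15} = a_0 + \frac{1}{64} a_6 h^6 + \cdots \]

we eliminate the next term with \(h^4\).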

Often in the asymptotic expansion (3) the odd powers of h are missing, and

\[ T(h) = a_0 + a_2 h^2 + a_4 h^4 + \cdots \tag{8} \]

holds. In this case it is advantageous to extrapolate with a polynomial in the variable \(x=h^2\). This way we obtain faster approximations of (8) of higher order. Instead of (4) we then use

\[ T_{ij} = \frac{h_{i-j}^2\, T_{i,j-1} - h_i^2\, T_{i-1,j-1}}{h_{i-j}^2-h_i^2}. \]

Moreover, if we use the sequence (5) for \(h_i\), we obtain the recurrence

\[ T_{ij} = \frac{4^j\, T_{i,j-1} - T_{i-1,j-1}}{4^j-1} \]

which is used in the Romberg Algorithm for computing integrals.

For the special choice of the sequence \(h_i\) according to (5) we obtain the following extrapolation algorithm:

Let’s turn now to the series with the inverse squares. We have mentioned before that this series is a special case (for \(z=2\)) of the \(\zeta \)-function

\[ \zeta (z) = \sum_{k=1}^{\infty} \frac{1}{k^z}. \]

To compute \(\zeta (2)\) we apply the Aitken-Neville scheme to extrapolate the limit of partial sums:

\[ s_m = \sum_{k=1}^{m} \frac{1}{k^2}, \qquad \zeta (2) = \lim _{m\rightarrow \infty } s_m . \]

So far we did not investigate the asymptotic behavior of \(s_m\). Assuming that all powers of \(1/m\) are present, we extrapolate with (6).

We get the following results (we truncated the numbers to save space):

We have used \(2^7=128\) terms of the series and obtained as extrapolated value for the limit \(A_{8,8}=1.644934066805390\). The error is \(\pi ^2/6-A_{8,8}= 4.28\cdot 10^{-11}\) so the extrapolation works well.
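The extrapolation with recurrence (6) can be sketched in Python as follows. This is an illustration under stated assumptions rather than the original Matlab function: the partial sums \(s_m\) are taken at \(m=2^i\), \(i=0,\ldots ,7\) (so \(h_i=2^{-i}\)), the scheme is stored as a list of rows with zero-based indices, and the names `extrapolate` and `partial_sum` are hypothetical.

```python
import math

def extrapolate(t):
    """Aitken-Neville extrapolation to h = 0 for h_i = h_0 * 2**(-i).

    t[i] is T(h_i). Returns the triangular scheme A, where
    A[i][j] = (2**j * A[i][j-1] - A[i-1][j-1]) / (2**j - 1).
    """
    A = []
    for i, ti in enumerate(t):
        row = [ti]
        for j in range(1, i + 1):
            f = 2.0 ** j
            row.append((f * row[j - 1] - A[i - 1][j - 1]) / (f - 1.0))
        A.append(row)
    return A

def partial_sum(m):
    """s_m = sum of 1/k^2 for k = 1, ..., m."""
    return math.fsum(1.0 / (k * k) for k in range(1, m + 1))

s = [partial_sum(2 ** i) for i in range(8)]   # m = 1, 2, 4, ..., 128
A = extrapolate(s)
exact = math.pi ** 2 / 6

print("last partial sum :", s[-1], " error", exact - s[-1])
print("extrapolated     :", A[-1][-1], " error", exact - A[-1][-1])
```

The last diagonal entry `A[-1][-1]` plays the role of \(A_{8,8}\) in the text; the zero-based Python indices differ from the one-based Matlab indices by one.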

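Asymptotic Expansion of the \({\varvec{\zeta }}\)-Function. Consider the partial sum

\[ s_{m-1} = \sum_{k=1}^{m-1} \frac{1}{k^z} = \zeta (z) - \sum_{k=m}^{\infty } \frac{1}{k^z}. \]

Applying the Euler-MacLaurin Summation Formula (for a derivation see [3]) to the tail we get the asymptotic expansion

\[ \sum_{k=m}^{\infty } \frac{1}{k^z} \sim \frac{1}{(z-1)\, m^{z-1}} + \frac{1}{2\, m^z} + \sum_{j\ge 1} \frac{B_{2j}}{(2j)!}\, \frac{z(z+1)\cdots (z+2j-2)}{m^{z+2j-1}}. \]

The \(B_k\) are the Bernoulli numbers:

\[ B_0 = 1,\quad B_1 = -\frac{1}{2},\quad B_2 = \frac{1}{6},\quad B_4 = -\frac{1}{30},\quad B_6 = \frac{1}{42},\quad B_8 = -\frac{1}{30},\ \ldots \]

and \(B_3=B_5=B_7 = \ldots = 0\). In general the series on the right hand side does not converge. Thus we get

\[ \sum_{k=1}^{m-1} \frac{1}{k^z} + \frac{1}{2\, m^z} \sim \zeta (z) - \frac{1}{(z-1)\, m^{z-1}} - \sum_{j\ge 1} \frac{B_{2j}}{(2j)!}\, \frac{z(z+1)\cdots (z+2j-2)}{m^{z+2j-1}}. \]

For \(z=3\) we obtain an asymptotic expansion with only even exponents

\[ \sum_{k=1}^{m-1} \frac{1}{k^3} + \frac{1}{2m^3} \sim \zeta (3) - \frac{1}{2m^2} - \frac{1}{4m^4} + \frac{1}{12m^6} - \frac{1}{12m^8} \pm \cdots \tag{11} \]

And for \(z=2\) we obtain

\[ \sum_{k=1}^{m-1} \frac{1}{k^2} + \frac{1}{2m^2} \sim \zeta (2) - \frac{1}{m} - \frac{1}{6m^3} + \frac{1}{30m^5} - \frac{1}{42m^7} \pm \cdots \tag{12} \]

which is an expansion with only odd exponents.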

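Knowing these asymptotic expansions, there is no need to accelerate convergence by extrapolation. For instance we can choose \(m=1000\) and use (11) to compute

\[ \zeta (3) \approx \sum_{k=1}^{m-1} \frac{1}{k^3} + \frac{1}{2m^3} + \frac{1}{2m^2} + \frac{1}{4m^4} - \frac{1}{12m^6} \]

and obtain \(\zeta (3)\) to machine precision.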
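As a quick check of this claim, the following Python sketch evaluates the first terms of the Euler-MacLaurin expansion for \(z=3\) and \(m=1000\). The number of correction terms kept (up to \(m^{-6}\)) is an assumption made here; the reference value for \(\zeta (3)\) is the known constant rounded to double precision.

```python
import math

m = 1000

# Partial sum up to k = m - 1, accumulated accurately with math.fsum.
partial = math.fsum(1.0 / k ** 3 for k in range(1, m))

# Correction terms of the asymptotic expansion for z = 3.
zeta3 = (partial
         + 1.0 / (2 * m ** 3)
         + 1.0 / (2 * m ** 2)
         + 1.0 / (4 * m ** 4)
         - 1.0 / (12 * m ** 6))

ZETA3_REF = 1.2020569031595942  # zeta(3), rounded to double precision

print("partial sum only :", partial, " error", ZETA3_REF - partial)
print("with corrections :", zeta3, " error", ZETA3_REF - zeta3)
```

The first omitted term is of size \(1/(12m^8)\approx 8\cdot 10^{-26}\), far below the rounding level of double precision, which is why a moderate m suffices.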

If, however, we know only that the expansion has even exponents, we can extrapolate

and get \(A_{8,8}= 1.202056903159594\), and thus \(\zeta (3)\) also to machine precision.
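A Python sketch of this extrapolation in the variable \(h^2\) is shown below. It assumes the values \(T(h_i)=\sum _{k=1}^{m-1} 1/k^3 + 1/(2m^3)\) at \(m=2^i\), \(i=0,\ldots ,7\), which is a guess at the setup of the original listing, and uses the Romberg-type recurrence with the factor \(4^j\). The function names are hypothetical.

```python
import math

def extrapolate_even(t):
    """Extrapolation to h = 0 for expansions in h^2 with h_i = h_0 * 2**(-i).

    A[i][j] = (4**j * A[i][j-1] - A[i-1][j-1]) / (4**j - 1), A[i][0] = t[i].
    """
    A = []
    for i, ti in enumerate(t):
        row = [ti]
        for j in range(1, i + 1):
            f = 4.0 ** j
            row.append((f * row[j - 1] - A[i - 1][j - 1]) / (f - 1.0))
        A.append(row)
    return A

def T(m):
    """Partial sum up to m - 1 plus half of the m-th term (z = 3)."""
    return math.fsum(1.0 / k ** 3 for k in range(1, m)) + 0.5 / m ** 3

t = [T(2 ** i) for i in range(8)]     # m = 1, 2, 4, ..., 128
A = extrapolate_even(t)
ZETA3_REF = 1.2020569031595942        # zeta(3), rounded to double precision

print("T(1/128)      :", t[-1], " error", ZETA3_REF - t[-1])
print("extrapolated  :", A[-1][-1], " error", ZETA3_REF - A[-1][-1])
```

Only the knowledge that the expansion contains even powers enters the scheme; none of the Bernoulli coefficients is needed.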

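Could we also take advantage if the asymptotic expansion, as e.g. in (12), contains only odd exponents? We need to modify the extrapolation scheme following the idea of Richardson by eliminating lower order error terms. If

\[ T(h) = a_0 + a_1 h + a_3 h^3 + a_5 h^5 + \cdots \]

we form the extrapolation scheme

\[ \begin{array}{lll} T_{11} = T(h_0) & & \\ T_{21} = T(h_0/2) & T_{22} = 2\, T_{21} - T_{11} & \\ T_{31} = T(h_0/4) & T_{32} = 2\, T_{31} - T_{21} & T_{33} = \dfrac{2^3\, T_{32} - T_{22}}{2^3-1} \\ \quad \vdots & \quad \vdots & \quad \vdots \end{array} \]

Then \(T_{k2}\) has eliminated the term with h and \(T_{k3}\) has also eliminated the term with \(h^3\). In general, for \(h_i=h_02^{-i}\), we extrapolate the limit \(\lim _{k\rightarrow \infty } x_k\) by initializing \(T_{i1}=x_i, i=1,2,3,\ldots \) and

\[ T_{ij} = \frac{2^{2j-3}\, T_{i,j-1} - T_{i-1,j-1}}{2^{2j-3}-1}, \qquad j=2,\ldots ,i. \]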

This scheme is computed by the following function:

We now extrapolate again the partial sum for the inverse squares:

This time we converge to machine precision (we omitted again digits to save space):
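The odd-power scheme can be sketched in Python as follows. The sketch assumes the sequence \(x_i=\sum _{k=1}^{m-1} 1/k^2 + 1/(2m^2)\) with \(m=2^i\), \(i=0,\ldots ,7\), whose expansion (12) contains only odd powers of \(1/m\); the exact inputs of the original experiment are not known here. With zero-based column index j, column j eliminates the term with \(h^{2j-1}\).

```python
import math

def extrapolate_odd(x):
    """Richardson scheme for expansions in odd powers of h, h_i = h_0 * 2**(-i).

    A[i][0] = x[i]; column j >= 1 removes the h**(2j-1) term:
    A[i][j] = (2**(2j-1) * A[i][j-1] - A[i-1][j-1]) / (2**(2j-1) - 1).
    """
    A = []
    for i, xi in enumerate(x):
        row = [xi]
        for j in range(1, i + 1):
            f = 2.0 ** (2 * j - 1)
            row.append((f * row[j - 1] - A[i - 1][j - 1]) / (f - 1.0))
        A.append(row)
    return A

def T(m):
    """Partial sum up to m - 1 plus half of the m-th term (z = 2)."""
    return math.fsum(1.0 / (k * k) for k in range(1, m)) + 0.5 / (m * m)

x = [T(2 ** i) for i in range(8)]     # m = 1, 2, 4, ..., 128
A = extrapolate_odd(x)
exact = math.pi ** 2 / 6

print("x_7           :", x[-1], " error", exact - x[-1])
print("extrapolated  :", A[-1][-1], " error", exact - A[-1][-1])
```

Compared with the plain polynomial extrapolation, each column here removes a term that is actually present in the expansion, so no step is wasted on a vanishing even power.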

4.4 The \(\varepsilon \)-Algorithm

In this section we again follow the theory given in [3]. Aitken’s \(\varDelta ^2\)-Acceleration uses as model for the asymptotic behavior of the error

\[ x_n - s \sim C\rho ^n. \]

By replacing “\(\sim \)” with “\(=\)” and by using three consecutive iterations we obtained in Subsect. 4.2 a nonlinear system for \(\rho \), C and s. Solving for s we obtain a new sequence \(\{x'_n\}\). A generalization of this was proposed by Shanks [6]. Consider the asymptotic error model

\[ x_n - s \sim \sum_{i=1}^{k} a_i \rho _i^n, \qquad k>1. \]

Replacing again “\(\sim \)” with “\(=\)” and using \(2k+1\) consecutive iterations we get a system of nonlinear equations

\[ x_{n+j} = s_{n,k} + \sum_{i=1}^{k} a_i \rho _i^{n+j}, \qquad j=0,1,\ldots ,2k. \]

Assuming we can solve this system, we obtain a new sequence \(x'_n=s_{n,k}\). This is called a Shanks Transformation.

Solving this nonlinear system is not easy and quickly becomes unwieldy. In order to find a different characterization for the Shanks Transformation, let \(P_k(x) = c_0 + c_1x + \cdots + c_kx^k\) be the polynomial with zeros \({ \rho }_1, \ldots ,{ \rho }_k\), normalized such that \(\sum c_i = 1\), and consider the equations

\[ c_j\, (x_{n+j} - s_{n,k}) = c_j \sum_{i=1}^{k} a_i \rho _i^{n+j}, \qquad j=0,1,\ldots ,k. \]

Adding all these equations, we obtain the sum

\[ \sum_{j=0}^{k} c_j\, (x_{n+j} - s_{n,k}) = \sum_{i=1}^{k} a_i \rho _i^{n} \sum_{j=0}^{k} c_j \rho _i^{j} = \sum_{i=1}^{k} a_i \rho _i^{n} P_k(\rho _i) = 0, \]

and since \(\sum c_i = 1\), the extrapolated value becomes

\[ s_{n,k} = \sum_{j=0}^{k} c_j\, x_{n+j}. \]

Thus \(s_{n,k}\) is a linear combination of successive iterates, a weighted average. If we knew the coefficients \(c_j\) of the polynomial, we could directly compute \( s_{n,k}\).

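Wynn established in 1956, see [8], the remarkable result that the quantities \(s_{n,k}\) can be computed recursively. This procedure is called the \(\varepsilon \)-algorithm. Let \(\varepsilon _{-1}^{(n)} = 0\) and \(\varepsilon _{0}^{(n)} = x_n\) for \(n=0,1,2,\ldots \). From these values, the following table using the recurrence relation

\[ \varepsilon _{k+1}^{(n)} = \varepsilon _{k-1}^{(n+1)} + \frac{1}{\varepsilon _{k}^{(n+1)} - \varepsilon _{k}^{(n)}} \]

is constructed:

\[ \begin{array}{ccccc} \varepsilon _{-1}^{(0)} & & & & \\ & \varepsilon _{0}^{(0)} & & & \\ \varepsilon _{-1}^{(1)} & & \varepsilon _{1}^{(0)} & & \\ & \varepsilon _{0}^{(1)} & & \varepsilon _{2}^{(0)} & \\ \varepsilon _{-1}^{(2)} & & \varepsilon _{1}^{(1)} & & \ddots \\ & \varepsilon _{0}^{(2)} & & \vdots & \\ \vdots & & \vdots & & \end{array} \]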

Wynn showed that \(\varepsilon _{2k}^{(n)} = s_{n,k}\) and \(\varepsilon _{2k+1}^{(n)} = \frac{1}{S_k(\varDelta x_n)}\), where \(S_k(\varDelta x_n)\) denotes the Shanks transformation of the sequence of the differences \(\varDelta x_n = x_{n+1}-x_n\). Thus every second column in the \(\varepsilon \)-table is in principle of interest. For the Matlab implementation, we write the \(\varepsilon \)-table in the lower triangular part of the matrix E, and since the indices in Matlab start at 1, we shift appropriately:

We obtain the algorithm

The performance of the \(\varepsilon \)-algorithm is shown by accelerating the partial sums of the series

We first compute the partial sums and then apply the \(\varepsilon \)-algorithm

We obtain the result

It is quite remarkable that we can obtain a result with about 7 decimal digits of accuracy by extrapolation using only partial sums of the first 9 terms, especially since the last partial sum still has no correct digit!
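The recurrence of the \(\varepsilon \)-algorithm can be sketched in Python as follows. The series of the original experiment is not available here, so the sketch uses the alternating series \(1-\frac{1}{2}+\frac{1}{3}-\cdots =\log 2\) as a stand-in; that choice, the column-wise storage and the function name `epsilon_algorithm` are assumptions of this illustration, not the Matlab implementation with the matrix E.

```python
import math

def epsilon_algorithm(x):
    """Wynn's epsilon-algorithm.

    Returns the list of columns cols[k][n] = epsilon_k^(n), k = 0, 1, ...
    The even columns contain the Shanks transforms s_{n,k/2}.
    """
    prev = [0.0] * (len(x) + 1)   # column k = -1 (all zeros)
    cur = list(x)                 # column k = 0 (the sequence itself)
    cols = [cur]
    while len(cur) > 1:
        nxt = []
        for n in range(len(cur) - 1):
            d = cur[n + 1] - cur[n]
            if d == 0.0:          # differences vanished: stop early
                return cols
            nxt.append(prev[n + 1] + 1.0 / d)
        prev, cur = cur, nxt
        cols.append(cur)
    return cols

# Partial sums of the first 9 terms of 1 - 1/2 + 1/3 - ... (stand-in series).
terms = [(-1.0) ** j / (j + 1) for j in range(9)]
x = [math.fsum(terms[: n + 1]) for n in range(9)]

cols = epsilon_algorithm(x)
limit = math.log(2.0)

print("last partial sum :", x[-1], " error", x[-1] - limit)
print("epsilon_8^(0)    :", cols[8][0], " error", cols[8][0] - limit)
```

With nine partial sums the table has columns \(k=0,\ldots ,8\), and the single entry of the last even column is the Shanks transform based on all available values.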

References

1. Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis. Springer, New York (1991)

2. Forsythe, G.E.: How Do You Solve a Quadratic Equation? Stanford Technical report No. CS40, 16 June 1966

3. Gander, W., Gander, M.J., Kwok, F.: Scientific Computing, an Introduction Using Maple and Matlab. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-04325-8

4. Hromkovič, J.: Algorithmic Adventures. Springer, Berlin (2009). https://doi.org/10.1007/978-3-540-85986-4

5. Knopp, K.: Theorie und Anwendungen der unendlichen Reihen. Springer, Heidelberg (1947)

6. Shanks, D.: Non-linear transformation of divergent and slowly convergent sequences. J. Math. Phys. 34, 1–42 (1955)

7. Stammbach, U.: Die harmonische Reihe: Historisches und Mathematisches. El. Math. 54, 93–106 (1999)

8. Wynn, P.: On a device for computing the \(e_m (S_n)\)-transformation. MTAC 10, 91–96 (1956)

Acknowledgment

The author wishes to thank the referees for their very careful reading of the paper.

Copyright information

© 2018 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Gander, W. (2018). Infinity and Finite Arithmetic. In: Böckenhauer, HJ., Komm, D., Unger, W. (eds) Adventures Between Lower Bounds and Higher Altitudes. Lecture Notes in Computer Science(), vol 11011. Springer, Cham. https://doi.org/10.1007/978-3-319-98355-4_21

DOI: https://doi.org/10.1007/978-3-319-98355-4_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-98354-7

Online ISBN: 978-3-319-98355-4

eBook Packages: Computer Science (R0)