Abstract

Binary versions of evolutionary algorithms have emerged as alternatives to state-of-the-art methods for optimization in binary search spaces because of their simplicity and low computational cost. A binary version of an evolutionary algorithm is adapted by means of a transfer function that maps a continuous search space to a discrete one. To identify the most efficient combination of transfer functions and algorithms, we investigate binary versions of the Gravitational Search Algorithm, the Bat Algorithm, and the Dragonfly Algorithm, together with two families of transfer functions, on unimodal and multimodal single objective optimization problems. The results indicate that incorporating the v-shaped family of transfer functions into the Binary Bat Algorithm significantly outperforms previous methods in this domain.


Keywords

- Binary evolutionary algorithm

- Bat Algorithm

- Gravitational search

- Transfer function

- Single objective optimization

1 Introduction

Evolutionary algorithms (EAs) [1] are relatively new, unconstrained heuristic search techniques that require little problem-specific knowledge. EAs include nature-inspired stochastic optimization techniques, known as Swarm Intelligence techniques [2], that mimic the social behavior of animals or natural phenomena. In multimodal problem spaces, EAs are superior to techniques such as Hill-Climbing algorithms [3], which are easily deceived there and often get stuck in local optima.

Many optimization problems, such as feature selection [4] and dimensionality reduction [5], have intrinsically binary search spaces. In this paper, we compare the binary versions of three evolutionary algorithms with other high-performance algorithms on unimodal and multimodal single objective optimization problems. We also investigate the effect of two families of Transfer Functions (TFs), which map continuous variables into binary search spaces and thereby allow Binary Evolutionary Algorithms (BEAs) to be applied. The main contributions of our work can be summarized as follows:

1. We present a comparative study between three BEAs.
2. Our work focuses on global minimization of 4 standard benchmark functions across 4 evaluation metrics.
3. We study the variations in performance due to different TFs in BEAs.

The rest of the paper is organized as follows: Sects. 2 and 3 describe the BEAs studied and their transfer functions. Section 4 describes the experimental setup and presents and discusses the results. Section 5 summarizes the ideas presented in this paper and outlines directions for future work.

2 Binary Evolutionary Algorithms

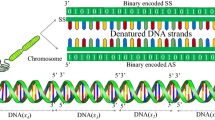

Binary versions of EAs are adapted from their regular counterparts, which operate in continuous search spaces. BEAs operate on search spaces that can be considered as hypercubes. In addition, problems with a continuous real search space can be converted into binary problems by mapping continuous variables to vectors of binary variables. The algorithms used for single objective optimization in this paper are briefly presented in the following subsections.

2.1 Gravitational Search Algorithm

The Gravitational Search Algorithm (GSA), proposed by Rashedi et al. [6], is a nature-inspired heuristic optimization algorithm based on the law of gravity and mass interactions. The position of each agent represents a solution of the problem. In GSA, the gravitational force causes a global movement in which objects with lighter masses move towards objects with heavier masses. The mass of each agent is determined by its fitness, so that better solutions have heavier masses, and the position of the heaviest mass represents the optimal solution. The binary version of GSA, known as BGSA and proposed by Rashedi et al. [7], is an efficient adaptation of GSA to binary search spaces.
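The fitness-to-mass mapping described above can be sketched as follows. This is a minimal sketch for a minimization problem, assuming the normalized mass definition of the original GSA [6], m_i = (fit_i - worst) / (best - worst) and M_i = m_i / sum_j m_j. The function names, the uniform-mass fallback when all agents are equally fit, and the values G0 = 100 and alpha = 20 for the decaying gravitational constant are illustrative assumptions, not settings taken from this paper.

```python
import math


def gsa_masses(fitness):
    """Normalized GSA masses for minimization: the best agent is heaviest, the worst gets 0."""
    best, worst = min(fitness), max(fitness)
    if best == worst:
        # All agents equally fit: fall back to uniform masses (assumed convention).
        return [1.0 / len(fitness)] * len(fitness)
    raw = [(f - worst) / (best - worst) for f in fitness]  # m_i in [0, 1]
    total = sum(raw)
    return [m / total for m in raw]  # M_i, summing to 1


def gravitational_constant(t, max_iter, g0=100.0, alpha=20.0):
    """G(t) = G0 * exp(-alpha * t / T); g0 and alpha are assumed example values."""
    return g0 * math.exp(-alpha * t / max_iter)
```

For fitness values [1, 3, 5], the best agent receives two thirds of the total mass and the worst agent none, which is what drives lighter agents towards better regions.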

2.2 Bat Algorithm

The Bat Algorithm (BA) [8] is a meta-heuristic optimization algorithm based on the echolocation behavior of bats. BA models a colony of bats that track their prey using echolocation. The search process is intensified by a local random walk. BA is a modification of Particle Swarm Optimization in which the positions and velocities of virtual microbats are updated based on the frequency of their emitted pulses and their loudness. The binary version of BA (BBA) [9] models the movement of bats (agents) across a hypercube.
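One iteration of the continuous BA update that BBA builds on can be sketched as below, assuming the frequency, velocity, and position equations and the loudness/pulse-rate schedule of the original BA [8]. The frequency bounds (f_min = 0, f_max = 2) and the constants alpha = gamma = 0.9 are assumed illustrative values. In a binary version the final position step would be replaced by a transfer-function-based rule, as described in Sect. 3.

```python
import math
import random


def bat_step(x, v, x_best, f_min=0.0, f_max=2.0, rng=random):
    """Frequency, velocity and position update of one bat, following the original BA [8]."""
    beta = rng.random()                    # beta ~ U(0, 1)
    freq = f_min + (f_max - f_min) * beta  # f_i = f_min + (f_max - f_min) * beta
    # v_i <- v_i + (x_i - x*) * f_i
    new_v = [vi + (xi - bi) * freq for xi, vi, bi in zip(x, v, x_best)]
    # x_i <- x_i + v_i (a binary version replaces this with a transfer-function rule)
    new_x = [xi + vi for xi, vi in zip(x, new_v)]
    return new_x, new_v


def local_walk(x_best, mean_loudness, rng=random):
    """Local random walk around the best solution: x_new = x* + eps * <A>, eps ~ U(-1, 1)."""
    return [bi + rng.uniform(-1.0, 1.0) * mean_loudness for bi in x_best]


def update_loudness_pulse(loudness, r0, t, alpha=0.9, gamma=0.9):
    """A_i <- alpha * A_i and r_i = r_i^0 * (1 - exp(-gamma * t))."""
    return alpha * loudness, r0 * (1.0 - math.exp(-gamma * t))
```

Loudness decreases and the pulse emission rate increases over time, which gradually shifts the search from exploration towards exploitation around the best bat.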

2.3 Dragonfly Algorithm

The Dragonfly Algorithm (DA), proposed by Mirjalili [10], is a novel swarm optimization technique inspired by the swarming behavior of dragonflies in nature. DA consists of the two essential phases of optimization, exploration and exploitation, which are designed by modeling the social interaction of dragonflies in navigating, searching for food, and avoiding enemies when swarming. The binary version of DA, known as BDA, uses five behaviors (cohesion, alignment, escaping from enemies, separation, and attraction towards food) to calculate the positions of dragonflies (agents) in the binary search space.
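The way the five behaviors combine into a single step vector can be sketched as follows. This sketch assumes the step equation of the original DA [10], dX(t+1) = (sS + aA + cC + fF + eE) + w dX(t), with separation S = -sum_j (X - X_j), alignment A as the mean neighbor step, cohesion C as the mean neighbor position minus X, food attraction F = X_food - X, and enemy distraction E = X_enemy + X. The fixed weights are illustrative assumptions (DA adapts them over the iterations), and the bit update uses the v-shaped function |dx / sqrt(dx^2 + 1)|, which we assume as the BDA transfer function.

```python
import math
import random


def dragonfly_step(x, dx, nbr_x, nbr_dx, food, enemy,
                   s=0.1, a=0.1, c=0.7, f=1.0, e=1.0, w=0.9):
    """Step vector of one dragonfly (assumed form of the DA update in [10]).

    x, dx          : current position and step vector of this dragonfly
    nbr_x, nbr_dx  : positions and step vectors of its neighbors
    food, enemy    : best and worst positions found so far
    s, a, c, f, e, w are illustrative fixed weights (DA tunes them adaptively).
    """
    n = len(nbr_x)
    step = []
    for k in range(len(x)):
        sep = -sum(x[k] - nx[k] for nx in nbr_x)                     # separation S
        ali = sum(ndx[k] for ndx in nbr_dx) / n if n else 0.0        # alignment A
        coh = (sum(nx[k] for nx in nbr_x) / n - x[k]) if n else 0.0  # cohesion C
        food_att = food[k] - x[k]                                    # food attraction F
        enemy_dis = enemy[k] + x[k]                                  # enemy distraction E
        step.append(s * sep + a * ali + c * coh + f * food_att + e * enemy_dis + w * dx[k])
    return step


def bda_update(bits, step, rng=random):
    """Flip each bit with probability T(dx) = |dx / sqrt(dx^2 + 1)| (assumed v-shaped TF)."""
    new_bits = []
    for b, st in zip(bits, step):
        t = abs(st / math.sqrt(st * st + 1.0))
        new_bits.append(1 - b if rng.random() < t else b)
    return new_bits
```

A zero step vector leaves every bit unchanged, while large steps make bit flips increasingly likely.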

3 Transfer Functions for Binary Evolutionary Algorithms

The continuous and binary versions of evolutionary algorithms differ in two components: a transfer function and a modified position updating procedure. The transfer function maps a continuous search space to a binary one, and the updating procedure uses the value of the transfer function to switch each component of a particle's position between 0 and 1 in the hypercube. The agents of a binary optimization problem can thus move to nearer and farther corners of the hypercube by flipping bits according to the transfer function and the position updating procedure. Various transfer functions have been studied for mapping real-valued velocities to probabilities in the interval [0, 1].

In this paper, we investigate two families of transfer functions first presented in [11], namely s-shaped transfer functions and v-shaped transfer functions. Table 1 presents the s-shaped and v-shaped transfer functions that are in accordance with the concepts presented by Rashedi et al. [7].

The names s-shaped and v-shaped arise from the shapes of the curves, as depicted in Fig. 1 [11]; we study the variations in performance due to each family.
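The two families can be written down directly. This sketch assumes that Table 1 lists the four s-shaped (S1 to S4) and four v-shaped (V1 to V4) functions defined in [11]; if Table 1 differs, only the function bodies need to change. The numerically stable sigmoid helper is our own implementation choice, used to avoid overflow for large negative velocities.

```python
import math


def _sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


# S-shaped family: maps a velocity v to the probability that the bit is set to 1.
S_FAMILY = {
    "S1": lambda v: _sigmoid(2.0 * v),
    "S2": lambda v: _sigmoid(v),
    "S3": lambda v: _sigmoid(v / 2.0),
    "S4": lambda v: _sigmoid(v / 3.0),
}

# V-shaped family: maps a velocity v to the probability that the bit flips.
# Each function is symmetric in v and equals 0 at v = 0.
V_FAMILY = {
    "V1": lambda v: abs(math.erf(math.sqrt(math.pi) / 2.0 * v)),
    "V2": lambda v: abs(math.tanh(v)),
    "V3": lambda v: abs(v / math.sqrt(1.0 + v * v)),
    "V4": lambda v: abs(2.0 / math.pi * math.atan(math.pi / 2.0 * v)),
}
```

The s-shaped functions return 0.5 at zero velocity, so a bit with no momentum is set at random; the v-shaped functions return 0, so such a bit is kept as it is.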

Due to the drastic difference between s-shaped and v-shaped transfer functions, different position updating rules are required. For s-shaped transfer functions, Formula (1) is employed to update positions based on velocities.

Formula (2) [11] is used to update positions for v-shaped transfer functions.

Formula (2) differs significantly from Formula (1): instead of forcing a bit to 0 or 1, it flips the current bit with a probability that grows with the magnitude of the velocity, so a bit is likely to change only when its velocity is high. Algorithm 1 presents the basic steps of using both s-shaped and v-shaped functions to update the positions of particles and find the optimum; these steps can be generalized to any Binary Evolutionary Algorithm.
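The two position updating rules and a generic optimization loop in the spirit of Algorithm 1 can be sketched as follows. This is a hypothetical skeleton, not the authors' Algorithm 1. Because Formula (1) is not reproduced in this text, the s-shaped rule assumes the common binary PSO convention (a bit is set to 1 with probability S(v)); the v-shaped rule follows the flip rule of [11]. S2 and V4 from [11] are used as example transfer functions, and `velocity_fn` is a hypothetical caller-supplied stand-in for the algorithm-specific velocity update of BGSA, BBA, or BDA.

```python
import math
import random


def s_shaped(v):
    """S2 from [11]: 1 / (1 + exp(-v)), computed in a numerically stable way."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    ev = math.exp(v)
    return ev / (1.0 + ev)


def v_shaped(v):
    """V4 from [11]: |(2 / pi) * arctan((pi / 2) * v)|."""
    return abs(2.0 / math.pi * math.atan(math.pi / 2.0 * v))


def update_s(bit, v, rng):
    """Assumed Formula (1): force the bit to 1 with probability S(v), else to 0."""
    return 1 if rng.random() < s_shaped(v) else 0


def update_v(bit, v, rng):
    """Formula (2) as in [11]: flip the current bit with probability V(v), else keep it."""
    return 1 - bit if rng.random() < v_shaped(v) else bit


def binary_optimize(fitness, n_bits, n_agents, n_iter, velocity_fn, family="v", seed=None):
    """Generic BEA loop (minimization); returns (best position, best fitness, history)."""
    rng = random.Random(seed)
    rule = update_v if family == "v" else update_s
    pos = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(n_agents)]
    vel = [[0.0] * n_bits for _ in range(n_agents)]
    best_pos, best_fit = None, math.inf
    history = []
    for _ in range(n_iter):
        # Evaluate every agent and keep the best-so-far solution.
        for p in pos:
            f = fitness(p)
            if f < best_fit:
                best_fit, best_pos = f, p[:]
        history.append(best_fit)
        # Algorithm-specific velocity update (hypothetical plug-in).
        vel = [velocity_fn(p, v, best_pos, rng) for p, v in zip(pos, vel)]
        # Transfer function and position updating rule, applied bit by bit.
        pos = [[rule(b, vi, rng) for b, vi in zip(p, v)] for p, v in zip(pos, vel)]
    return best_pos, best_fit, history


def toy_velocity(p, v, best, rng):
    """Hypothetical PSO-like rule pulling each bit towards the best-so-far solution."""
    return [0.5 * vi + 2.0 * rng.random() * (bi - pi) for pi, vi, bi in zip(p, v, best)]


# Usage: minimize the number of ones in a 20-bit string.
best, fit, hist = binary_optimize(sum, n_bits=20, n_agents=10, n_iter=50,
                                  velocity_fn=toy_velocity, seed=1)
```

Keeping the velocity update as a plug-in reflects the observation above that the binary versions differ from their continuous counterparts mainly in the transfer function and the position updating step.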

4 Experiment Settings and Results

4.1 Benchmark Functions and Evaluation Metrics

To compare the performance of the BEAs with both s-shaped and v-shaped transfer functions, four benchmark functions [12] are employed. The objective of the algorithms is to find the global minimum of each function. Both unimodal (\(F_1,F_2\)) and multimodal (\(F_3,F_4\)) functions are used for performance evaluation, as shown in Table 2. The range of each function bounds its search space. The global minimum value of each function is 0. The two-dimensional versions of the functions are shown in Fig. 2. We use a 15-bit vector to map each continuous variable to the binary search space, with one bit reserved for the sign of the variable. Since each benchmark function has 5 dimensions, each agent is a 75-bit vector (5 × 15). We use the following four metrics for evaluation and comparison.
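The 75-bit encoding can be illustrated with a small decoder. The paper specifies only that each variable uses 15 bits, one of which is the sign; this sketch additionally assumes that the first bit of each block is the sign (1 = negative), that the remaining 14 bits form an unsigned integer scaled linearly to [0, bound], and that bound = 100. The sphere function is a stand-in objective for illustration and is not claimed to be one of \(F_1\) to \(F_4\).

```python
def decode_agent(bits, n_vars=5, bits_per_var=15, bound=100.0):
    """Decode a binary agent into n_vars real values (assumed sign-magnitude scheme)."""
    if len(bits) != n_vars * bits_per_var:
        raise ValueError("agent length must be n_vars * bits_per_var")
    max_int = 2 ** (bits_per_var - 1) - 1  # largest 14-bit magnitude: 16383
    values = []
    for i in range(n_vars):
        block = bits[i * bits_per_var:(i + 1) * bits_per_var]
        sign = -1.0 if block[0] == 1 else 1.0
        magnitude = 0
        for b in block[1:]:  # magnitude bits, most significant first
            magnitude = (magnitude << 1) | b
        values.append(sign * bound * magnitude / max_int)
    return values


def sphere(xs):
    """Illustrative objective with global minimum 0 at the origin."""
    return sum(x * x for x in xs)


agent = [0] * 75  # the all-zero agent decodes to the origin
print(decode_agent(agent), sphere(decode_agent(agent)))
```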

1. Average Best So Far (ABSF) solution over 40 runs in the last iteration.
2. Median Best So Far (MBSF) solution over 40 runs in the last iteration.
3. Standard Deviation (STDV) of the best so far solution over 40 runs.
4. Best: the best solution over 40 runs in all iterations.
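Given the best-so-far curve of each run, the four metrics can be computed as in the following sketch. It assumes each run is stored as a list of best-so-far fitness values, one per iteration. The paper does not state whether STDV is the sample or the population standard deviation, so the use of the sample version (`statistics.stdev`) is an assumption.

```python
import statistics


def summarize_runs(histories):
    """Compute ABSF, MBSF, STDV and Best from per-run best-so-far curves.

    histories: one list per run, holding the best-so-far fitness at each iteration.
    """
    finals = [h[-1] for h in histories]  # best-so-far value in the last iteration
    return {
        "ABSF": statistics.mean(finals),
        "MBSF": statistics.median(finals),
        "STDV": statistics.stdev(finals),        # sample standard deviation (assumption)
        "Best": min(min(h) for h in histories),  # best value over all runs and iterations
    }


runs = [[5.0, 3.0, 1.0], [4.0, 4.0, 2.0], [6.0, 2.0, 0.0]]  # toy data, not results from the paper
print(summarize_runs(runs))
```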

4.2 Experiment Setting

The algorithms are run 40 times with random seeds on an Intel Core 2 Duo machine with a 3.06 GHz CPU and 4 GB of RAM. Because the algorithms are stochastic, metrics are aggregated over multiple runs to reduce the effect of random variation. Table 3 shows the parameters used to simulate the algorithms.

4.3 Results and Discussion

As the results in Tables 4 and 5 show, both BBA and BDA perform better than BGSA. We also observe that the v-shaped family of functions outperforms the s-shaped family and can significantly improve the ability of BEAs to avoid local minima on these benchmark functions. BBA with v-shaped transfer functions also outperforms BDA on most metrics. Both BBA and BDA with v-shaped transfer functions significantly outperform high-performance algorithms such as CLPSO [13], FIPS [14], and DMS-PSO [15]. Although the results are relatively accurate, the standard deviations are high, which we attribute to the stochastic behavior of swarm optimization algorithms. The number of iterations strongly affects the ability of all three algorithms to converge to the global minimum of the unimodal functions, particularly \(F_2\), whose extremely deep valley makes convergence to the minimum very slow. Similarly, for the multimodal functions \(F_3\) and \(F_4\), increasing the number of iterations, combined with the use of v-shaped transfer functions, significantly improves both accuracy and convergence rate compared to s-shaped transfer functions.

5 Conclusion

In this paper, we performed a comparative study of binary evolutionary algorithms and explored the effect of s-shaped and v-shaped transfer functions on their performance in single objective optimization problems. BBA was the best-performing algorithm compared with BGSA, BDA, and other evolutionary algorithms in avoiding local minima in both unimodal and multimodal functions. The results show a drastic improvement in performance when the v-shaped family of transfer functions is used to update the positions of agents. Our work demonstrates the merit of v-shaped transfer functions and BBA for binary optimization. In future work, we aim to compare the performance of the transfer functions in other evolutionary and heuristic algorithms.

References

[1] Larrañaga, P., Lozano, J.A.: Estimation of Distribution Algorithms: A New Tool for Evolutionary Computation, vol. 2. Springer, New York (2001)

[2] Kennedy, J.: Particle swarm optimization. In: Encyclopedia of Machine Learning, pp. 760–766. Springer (2011)

[3] Tsamardinos, I., Brown, L.E., Aliferis, C.F.: The max-min hill-climbing Bayesian network structure learning algorithm. Mach. Learn. 65(1), 31–78 (2006)

[4] Ritthof, O., Klinkenberg, R., Fischer, S., Mierswa, I.: A hybrid approach to feature selection and generation using an evolutionary algorithm. In: UK Workshop on Computational Intelligence, pp. 147–154 (2002)

[5] Raymer, M.L., Punch, W.F., Goodman, E.D., Kuhn, L.A., Jain, A.K.: Dimensionality reduction using genetic algorithms. IEEE Trans. Evol. Comput. 4(2), 164–171 (2000)

[6] Rashedi, E., Nezamabadi-Pour, H., Saryazdi, S.: GSA: a gravitational search algorithm. Inf. Sci. 179(13), 2232–2248 (2009)

[7] Rashedi, E., Nezamabadi-Pour, H., Saryazdi, S.: BGSA: binary gravitational search algorithm. Nat. Comput. 9(3), 727–745 (2010)

[8] Yang, X.S.: A new metaheuristic bat-inspired algorithm. In: Nature Inspired Cooperative Strategies for Optimization (NICSO 2010), pp. 65–74. Springer (2010)

[9] Mirjalili, S., Mirjalili, S.M., Yang, X.S.: Binary bat algorithm. Neural Comput. Appl. 25(3–4), 663–681 (2014)

[10] Mirjalili, S.: Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27(4), 1053–1073 (2016)

[11] Mirjalili, S., Lewis, A.: S-shaped versus v-shaped transfer functions for binary particle swarm optimization. Swarm Evol. Comput. 9, 1–14 (2013). http://www.sciencedirect.com/science/article/pii/S2210650212000648

[12] Suganthan, P., Hansen, N., Liang, J., Deb, K., Chen, Y.P., Auger, A., Tiwari, S.: Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization, pp. 341–357, January 2005

[13] Liang, J.J., Qin, A.K., Suganthan, P.N., Baskar, S.: Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evol. Comput. 10(3), 281–295 (2006)

[14] Mendes, R., Kennedy, J., Neves, J.: The fully informed particle swarm: simpler, maybe better. IEEE Trans. Evol. Comput. 8(3), 204–210 (2004)

[15] Liang, J.J., Suganthan, P.N.: Dynamic multi-swarm particle swarm optimizer with local search. In: The 2005 IEEE Congress on Evolutionary Computation, vol. 1, pp. 522–528. IEEE (2005)


Copyright information

© 2019 Springer International Publishing AG, part of Springer Nature

About this paper


Sawhney, R., Shankar, R., Jain, R. (2019). A Comparative Study of Transfer Functions in Binary Evolutionary Algorithms for Single Objective Optimization. In: De La Prieta, F., Omatu, S., Fernández-Caballero, A. (eds) Distributed Computing and Artificial Intelligence, 15th International Conference. DCAI 2018. Advances in Intelligent Systems and Computing, vol 800. Springer, Cham. https://doi.org/10.1007/978-3-319-94649-8_4


DOI: https://doi.org/10.1007/978-3-319-94649-8_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-94648-1

Online ISBN: 978-3-319-94649-8

eBook Packages: Intelligent Technologies and Robotics (R0)