Abstract

In this paper, we propose an iterative contraction and merging framework (ICM) for semantic segmentation in indoor scenes. Given an input image and a raw depth image, we first derive the dense prediction map from a convolutional neural network (CNN) and a normal vector map from the depth image. By combining the RGB-D image with these two maps, we can guide the ICM process to produce a more accurate hierarchical segmentation tree in a bottom-up manner. After that, based on the hierarchical segmentation tree, we design a decision process which uses the dense prediction map as a reference to make the final decision of semantic segmentation. Experimental results show that the proposed method can generate much more accurate object boundaries if compared to the state-of-the-art methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Semantic segmentation, an important topic in computer vision, aims to assign each pixel a semantic label in an input image and to generate a dense semantic prediction for the given image. Up to now, many semantic segmentation algorithms have been proposed to improve the quality of dense semantic prediction. However, semantic segmentation is still a challenging work because of the complex and diverse contents in an indoor scene.

Today, RGB-D cameras are getting more popular and cheaper, such as Microsoft Kinect and Intel RealSense cameras. With RGB-D cameras, semantic segmentation algorithms [1,2,3] take into account both color and depth data to improve the quality of semantic labeling. On the other hand, deep learning techniques are getting popular due to the availability of large-scale datasets and powerful hardware. In [4], Long et al. proposed a deep learning model, called fully convolutional network (FCN), with both color and depth data to perform impressive semantic segmentation. However, since the FCN model derives the dense semantic prediction by combining the up-sampling of multilayer information, the obtained semantic prediction results are usually not very accurate around the boundary area.

On the other hand, some hierarchical segmentation algorithms [5, 6] have adopted the bottom-up graph-based approach to generate a hierarchical segmentation tree for image segmentation. These algorithms can properly partition an image into image segments with very accurate region boundaries. However, due to the lack of semantic information during the bottom-up process, these hierarchical segmentation algorithms have difficulties in obtaining reasonable semantic segmentation results.

In this paper, we propose a semantic segmentation based on an iterative contraction and merging process to achieve semantic segmentation with much improved boundary extraction. In the proposed approach, we first cascade two bilateral filters to improve the quality of the raw depth data. Second, we integrate the RGB-D image with a dense semantic predictor, which extracts high-level information, and a normal estimation map, which extracts mid-level information, to guide the ICM process for the generation of a more accurate hierarchical segmentation tree. Finally, we make decisions over the hierarchical segmentation tree to obtain the final semantic segmentation result.

2 Proposed Method

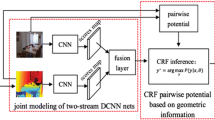

In this paper, we propose an architecture that includes three parts to solve the semantic segmentation problem, as illustrated in Fig. 1. In the first part, we capture the mid-level information from the depth image, such as the refined depth image and the normal vector map, and the high-level information from the deep learning model, such as the dense semantic prediction map. To get the refined depth image, we cascade two bilateral filters to fill in the holes in the original depth image. Based on the refined depth image, we estimate the normal vector map based on cross-product computation. Moreover, the depth image is transformed into an HHA image, which has been defined in [3]. The FCN model combines both the RGB image and the HHA image to derive a semantic prediction map. In the second part, the iterative contraction and merging (ICM) process, which was originally proposed in [5], is used for unsupervised image segmentation. In this paper, we maintain the original ICM features in [5] and add a few more features captured from the first part to derive a more robust hierarchical segmentation tree. In the final part, we design a decision process to decide the semantic segmentation result based on the hierarchical segmentation tree.

3 First Part: Feature Extraction

3.1 Depth Recovery

The raw depth data as shown in Fig. 2 (b) includes holes with no depth values. In order to fill in the estimated depth values over the holes, we assume adjacent pixels with similar RGB color values should have similar depth values. Besides, it is reasonable to trust nearby depth values than far-away depth values in the spatial domain. Hence, we design a spatial kernel which refers to the depth value. On the other hand, we design a range kernel which refers to the RGB values. Based on the spatial kernel and the range kernel, we define a bilateral filter \( D^{h} \left( {\text{x}} \right) \) to fill in the depth values over the hole regions, as expressed below:

where I and \( D \) denote the RGB and depth value, \( {\text{k}}_{1} \) denotes the half-width of the filter, \( \sigma_{s1} \) denote the sigma of the spatial kernel, and \( \sigma_{r1} \) denotes the sigma of the range kernel. In our experiments, we choose \( {\text{k}}_{1} = 20 \), \( \sigma_{s1} = 10 \) and \( \sigma_{r1} = 0.1 \). In some case, the hole area is too large in the original depth images so we need to iteratively fill the holes based on (1) until there is no hole in the depth image. In order to reduce the noise effect on the depth image, we apply the following bilateral filter to smooth the depth image:

In our experiments, we choose k2 = 20, \( \sigma_{s2} = 12 \) and \( \sigma_{r2} = 0.05 \). In Fig. 2(c), we show an example of the refined depth image.

3.2 Normal Vector Map

On the surface of the objects, each point is described as \( \left( {{\text{x}},{\text{y}},{\text{d}}\left( {{\text{x}},{\text{y}}} \right)} \right) \) in the 3D coordinate where \( {\text{d}}\left( {{\text{x}},{\text{y}}} \right) \) denotes the depth value at \( \left( {{\text{x}},{\text{y}}} \right) \). We then estimate the gradient maps by computing the derivatives along the x-direction and y-direction of the depth values. On the surface S, the normal vector at a point i is derived by computing the cross product \( \frac{{\partial {\text{S}}_{\text{i}} }}{{\partial {\text{x}}}} \times \frac{{\partial {\text{S}}_{\text{i}} }}{{\partial {\text{y}}}} \) which is expressed as

where \( \frac{{\partial {\text{d}}\left( {{\text{x}},{\text{y}}} \right)}}{{\partial {\text{x}}}} \approx \frac{{{\text{d}}\left( {{\text{x}} + 1,{\text{y}}} \right) - {\text{d}}\left( {{\text{x}} - 1,{\text{y}}} \right)}}{2} \) and \( \frac{{\partial {\text{d}}\left( {{\text{x}},{\text{y}}} \right)}}{{\partial {\text{y}}}} \approx \frac{{{\text{d}}\left( {{\text{x}},{\text{y}} + 1} \right) - {\text{d}}\left( {{\text{x}},{\text{y}} - 1} \right)}}{2} \). Based on the above computations, we can get the normal vector map as shown in Fig. 2(d).

3.3 Dense Semantic Prediction

In our approach, we generate a dense prediction map by using the fully convolutional network (FCN) [4]. The FCN model was originally designed for RGB images. In order to fit the 3-dimensional input format of the FCN model, we follow Gupta’s approach in [3] and transform the refined depth image to the HHA image format. Besides, we fine-tune the FCN model for color images and learn another FCN model for depth images based on the NYUD-V2 dataset. Since the NYU-Depth V2 dataset [2] contains 40-class labels, the learned FCN model generates 40 layers of score maps. We combine each end of the feature map with the weight 0.5 and perform up-sampling to derive the score maps with each layer of the score maps representing one class. The dense prediction map is assigned by finding the maximal value at each pixel among 40 layers of score maps:

where \( S_{iK} \) is the score of pixel \( i \) correspond to Class \( K \).

4 Second Part: Iterative Contraction and Merging

The iterative contraction and merging process (ICM) in [5] can construct a hierarchical segmentation tree in two phases. In this paper, we aim to derive a more robust hierarchical segmentation tree for indoor-scene images. Hence, we maintain the original features captured in [5] and add additional features extracted in the first part of our system. In Phase 1 of the ICM process, the pixel-wise contraction and merging process quickly merges pixels with similar features into regions. In this phase, the definition of the affinity value \( {\text{A}}\left( {{\text{i}},{\text{j}}} \right) \) between pixels i and j is based on pixel-wise features, such as color, depth, normal vector, and dense semantic prediction value. After that, a remnant removal process is used to remove small remnant regions around the boundary. In Phase 2 of the ICM process, since similar image pixels have already been merged into regions, several kinds of regional information, including features in [5] and other additional features, such as depth, normal vector, and dense semantic prediction, are taken into account to define a more informative affinity value \( {\text{A}}\left( {{\text{R}}_{m} ,{\text{R}}_{n} } \right) \) to describe the similarity between the region pair Rm and Rn. Based on the region-wise affinity matrix, we iteratively apply the contraction and merging process to merge image regions into larger ones and progressively build a hierarchical segmentation tree. In the following subsections, we will describe the details of each module in the ICM process.

4.1 Phase-1: Pixel-Wise Contraction and Merging

Phase-1 of the ICM process aims to quickly merge pixels with similar features into regions. Here, we use a mixed feature space that consists of five subspaces: color space, spatial location space, depth space, normal vector space and dense prediction score space. The features at an image pixel i is mapped into a vector \( \left( {L_{\text{i}} ,a_{\text{i}} ,b_{\text{i}} ,x_{\text{i}} ,y_{\text{i}} ,d_{\text{i}} ,u_{\text{i}} ,v_{\text{i}} ,z_{\text{i}} ,S_{{{\text{i}}1}} \,S_{{{\text{i}}2}} \ldots S_{\text{iK}} } \right) \) in the feature space where \( \left( {L_{\text{i}} ,a_{\text{i}} ,b_{\text{i}} } \right) \), (\( x_{\text{i}} ,y_{\text{i}} ) \), (\( d_{\text{i}} \)), \( (u_{\text{i}} ,v_{\text{i}} ,z_{\text{i}} ) \) and \( (S_{{{\text{i}}1}} \,S_{{{\text{i}}2}} \ldots S_{\text{iK}} \)) denote the color values, spatial coordinates, depth value, normal vector values and dense prediction values, respectively.

The contraction process aims to pull pixel pairs with similar features closer in the feature space than pixel pairs with less similar features. Here, the contraction process is formulated as the problem of finding the twisted coordinates \( \left( {\tilde{L}_{\text{i}} ,\tilde{a}_{\text{i}} ,\tilde{b}_{\text{i}} ,\tilde{x}_{\text{i}} ,\tilde{y}_{\text{i}} ,\tilde{d}_{\text{i}} ,\tilde{u}_{\text{i}} ,\tilde{v}_{\text{i}} ,\tilde{z}_{\text{i}} ,\tilde{S}_{{{\text{i}}1}} \tilde{S}_{{{\text{i}}2}} \ldots \tilde{S}_{\text{iK}} } \right) \) and is defined as

where \( N \) denotes the total number of pixels, \( w_{i} \) denotes the neighborhood around the pixel i, \( \theta_{\text{i}} \in \left\{ {L_{\text{i}} ,a_{\text{i}} ,b_{\text{i}} ,x_{\text{i}} ,y_{\text{i}} ,d_{\text{i}} ,u_{\text{i}} ,v_{\text{i}} ,z_{\text{i}} ,S_{{{\text{i}}1}} S_{{{\text{i}}2}} \ldots S_{\text{iK}} } \right\} \) denotes the original image feature values, \( \tilde{\theta }_{1} \in \left\{ {\tilde{L}_{\text{i}} ,\tilde{a}_{\text{i}} ,\tilde{b}_{\text{i}} ,\tilde{x}_{\text{i}} ,\tilde{y}_{\text{i}} ,\tilde{d}_{\text{i}} ,\tilde{u}_{\text{i}} ,\tilde{v}_{\text{i}} ,\tilde{z}_{\text{i}} ,\tilde{S}_{{{\text{i}}1}} \tilde{S}_{{{\text{i}}2}} \ldots \tilde{S}_{\text{iK}} } \right\} \) denotes the twisted image feature values, and \( \tilde{\varvec{\theta }} = \left[ {\tilde{\theta }_{1} ,\tilde{\theta }_{2} , \ldots ,\tilde{\theta }_{\text{N}} } \right]^{\text{T}} \). In our simulation, we empirically choose \( \uplambda_{\text{x}} =\uplambda_{\text{y}} = 0.001 \) and \( \uplambda_{\uptheta} = 0.01 \) otherwise. More details of contraction process can be found in [5]. In this paper, we define the affinity value \( {\text{A}}\left( {i,j} \right) \) of pairwise pixels as

where \( {\text{D}}\left( {i,j} \right) \) denotes the feature difference between \( i \) and \( j \) and is defined as

Different from [5], here we add depth, normal vector, and dense prediction information into the affinity function. The score weight \( \upkappa_{1} \), \( \upkappa_{2} \), \( \upkappa_{3} \) and \( \upkappa_{4} \) controls the strength of the impact from the color, depth, normal vector and dense prediction, respectively. Similar to [5], the parameter \( \uprho \) is adjusted to satisfy the condition that 70% of the \( {\text{A}}\left( {i,j} \right) \) values is larger than 0.01.

After applying the contraction process, we use the same grid-based merging process in [5] to group nearby pixels into regions. In order to efficiently perform the merging process, we only consider the \( \left( {L_{\text{i}} ,a_{\text{i}} ,b_{\text{i}} ,x_{\text{i}} ,y_{\text{i}} } \right) \) features during merging. Here, the feature space \( {\text{S}}^{\uptheta} = \hbox{max} \left(\uptheta \right) - \hbox{min} \left(\uptheta \right) \) denotes the dynamic range in each feature value. To achieve grid-based merging, we divided the feature space from \( {\text{S}}^{\text{L}} \times {\text{S}}^{\text{a}} \times {\text{S}}^{\text{b}} \times {\text{S}}^{\text{x}} \times {\text{S}}^{\text{y}} \) into \( \left[ {{\text{S}}^{\text{L}} /15} \right]\left[ {{\text{S}}^{\text{a}} /15} \right]\left[ {{\text{S}}^{\text{b}} /15} \right]\left[ {{\text{S}}^{\text{x}} /25} \right]\left[ {{\text{S}}^{\text{y}} /25} \right] \) regions.

After the pixel-wise contraction and merging process, pixels are merged into regions. However, there may exist a few pixels around the boundary of objects looking like noisy data. To deal with this problem, the remnant removal process in [5] is used to merge regions whose size is smaller than the predefined threshold into one of their adjacent regions with the most similar color appearance.

4.2 Phase-2: Region-Wise Contraction and Merging

After Phase-1, image pixels have been merged into a set of regions. Similar to Phase-1, the averaged feature values in each region \( {\text{R}}_{m} \) can be mapped into a feature vector \( \left( {L_{\text{m}} ,a_{m} ,b_{m} ,x_{m} ,y_{m} ,d_{m} ,u_{m} ,v_{m} ,z_{m} ,S_{{{\text{m}}1}} S_{{{\text{m}}2}} \ldots \,S_{\text{mK}} } \right) \) in the feature space. Likewise, we can derive the twisted coordinates and define the energy function as

where \( {\text{N}}_{\text{R}} \) denotes the total number of regions, \( {\upvarphi } \) denotes the neighboring regions of \( R_{\text{m}} \), \( \theta_{{{\text{R}}_{m} }} \in \left\{ {L_{\text{m}} ,a_{m} ,b_{m} ,x_{m} ,y_{m} ,d_{m} ,u_{m} ,v_{m} ,z_{m} ,S_{{{\text{m}}1}} S_{{{\text{m}}2}} \ldots S_{\text{mK}} } \right\} \) denotes the original feature values, \( \tilde{\theta }_{{{\text{R}}_{\text{m}} }} \in \left\{ {\tilde{L}_{\text{m}} ,\tilde{a}_{\text{m}} ,\tilde{b}_{\text{m}} ,\tilde{x}_{\text{m}} ,\tilde{y}_{\text{m}} ,\tilde{d}_{\text{m}} ,\tilde{u}_{\text{m}} ,\tilde{v}_{\text{m}} ,\tilde{z}_{m} ,\tilde{S}_{{{\text{m}}1}} \tilde{S}_{{{\text{m}}2}} \ldots \tilde{S}_{\text{mK}} } \right\} \) denotes the twisted feature values, and \( \tilde{\varvec{\theta }} = \left[ {\tilde{\uptheta}_{1} ,\tilde{\uptheta}_{2} , \ldots ,\tilde{\uptheta}_{{{\text{N}}_{\text{R}} }} } \right]^{\text{T}} \). The affinity function of pairwise region is defined as

where the parameter \( \uprho \) is set so that 10% of the affinity values are larger than 0.01 if \( {\text{N}}_{\text{R}} > 200 \) and \( \uprho \) is set so that 1% of the affinity values are larger than 0.01 if \( {\text{N}}_{\text{R}} \le 200 \). The difference function between two regions \( R_{\text{m}} \) and \( R_{n} \) is defined as

where the terms \( {\text{D}}_{N} \), \( {\text{D}}_{\text{C}} \), \( {\text{D}}_{\text{T}} \), \( {\tilde{\text{D}}}_{\text{C}}^{\text{B}} \) and \( {\text{SI}} \) denote the difference of region-size, color, texture, color-of-border and spatial-intertwining. Some of these terms have been introduced in [5]. In this paper, we add four additional difference terms, including depth \( {\text{D}}_{\text{D}} \), depth-of-border \( {\tilde{\text{D}}}_{\text{D}}^{\text{B}} \), normal-vector \( {\text{D}}_{\text{N}} \) and dense prediction score \( {\text{D}}_{\text{S}} \). In our simulation, we empirically choose \( \upalpha = 1 \), \( \upbeta = 3 \), \( \upgamma = 6 \), \( {\rm M}_{1} = 3 \), \( {\rm M}_{2} = 1 \), \( {\rm M}_{3} = 6 \), \( {\rm M}_{4} = 1 \) and \( {\rm M}_{5} = 2 \). After the ICM, we can construct a hierarchical segmentation tree. Please refer to [5] for the details of hierarchical segmentation tree construction.

5 Decision Process

Based on the hierarchical segmentation tree, we propose a decision process to find a semantic segmentation by referring to the dense prediction map. In the dense prediction map, we merge pixels with the same class into regions. For each region SK in the dense semantic prediction map, we check the corresponding node in the hierarchical segmentation tree that has the largest overlapping with SK. We call this region the candidate region of SK. In other words, given a class region SK from the dense semantic prediction map and a node region Tn, the candidate region \( {\text{C}}_{K} \) is defined as

where n denotes the node in the hierarchical segmentation tree and \( \left| { \cdot } \right| \) denotes number of pixels in the region. After computing candidate regions, we calculate the covering in the image with these candidate regions. Here, three cases may occur at each pixel:

-

(1)

one candidate region: the pixel is only covered by one candidate region,

-

(2)

more than one candidate region: the pixel is covered by more than two candidate regions, and

-

(3)

no candidate region: the pixel is not covered by any candidate region.

For the first case, we can immediately assign the semantic label based on the corresponding class label. In the second case, we tend to trust the candidate region with a smaller size and assign the semantic label accordingly. In the third case, we have to assign these no-candidate regions with some semantic labels. Here, we reverse the search from the no-candidate region into the dense prediction map. We can search multiple nodes in the no-candidate region and find the larger overlap with the class of the dense prediction map.

6 Experimental Results

Our propose model is evaluated on NYU-Depth V2 dataset [2] which includes 1449 RGBD images captured by Microsoft Kinect V1. The dataset contains dense per-pixel labeling and are classified into 40 class for semantic segmentation task by Gupta et al. [3]. The quantitative evaluation is measured by four common metrics: pixel accuracy, mean accuracy, mean IU, and frequency weighted IU. In order to know the most important features in our proposed method, we add depth, normal vector, and dense prediction score. In Table 1, we list the quantitative evaluation over the 100 random sampling images in NYUD-V2 dataset by using different combinations of the RGB, depth, normal vector, and dense prediction score. It can be observed that the high-level dense prediction score provides more important information than other features. It turns out the combination of all features does provide the most preferred results. In Table 2, we also compare our proposed method with other semantic segmentation methods. We can observe that the quantitative evaluation of our proposed method is only close to the original FCN model. This is because most boundary regions of the objects are not taken into account in the quantitative evaluation and the improvement of our method mainly occur in those boundary areas.

In Fig. 3, we compare the semantic segmentation results of our method with the original FCN [4] method over four image samples. It can be easily seen that our proposed method provides more accurate semantic segmentation results around the object boundaries.

(a) Original image. (b) Ground truth. (c) FCN [4] method. (d) Our proposed method.

7 Conclusion

In this paper, we propose an iterative contraction and merging framework for semantic segmentation of indoor-scene images. Based on the ICM framework, we improve the quality of the hierarchical segmentation tree by considering more mid-level and high-level features. We also design a decision process to decide the final semantic segmentation result based on the hierarchical segmentation tree. Experimental results show that the proposed method can generate more accurate object boundaries on semantic segmentation results.

References

Ren, X., Bo, L., Fox, D.: RGB-(D) scene labeling: features and algorithms. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2759–2766 (2012)

Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: Indoor segmentation and support inference from RGBD images. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012 Part V. LNCS, vol. 7576, pp. 746–760. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33715-4_54

Gupta, S., Girshick, R., Arbeláez, P., Malik, J.: Learning rich features from RGB-D images for object detection and segmentation. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014 Part VII. LNCS, vol. 8695, pp. 345–360. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10584-0_23

Long, J., Shelhamer, E., Darrell, T: Fully convolutional networks for semantic segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

Syu, J.H., Wang, S.J., Wang, L.C.: Hierarchical image segmentation based on iterative contraction and merging. IEEE Trans. Image Process. 26(5), 2246–2260 (2017)

Arbelaez, P., Maire, M., Fowlkes, C., Malik, J.: Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 898–916 (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Syu, JH., Cho, SH., Wang, SJ., Wang, LC. (2018). Semantic Segmentation of Indoor-Scene RGB-D Images Based on Iterative Contraction and Merging. In: Mansouri, A., El Moataz, A., Nouboud, F., Mammass, D. (eds) Image and Signal Processing. ICISP 2018. Lecture Notes in Computer Science(), vol 10884. Springer, Cham. https://doi.org/10.1007/978-3-319-94211-7_28

Download citation

DOI: https://doi.org/10.1007/978-3-319-94211-7_28

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-94210-0

Online ISBN: 978-3-319-94211-7

eBook Packages: Computer ScienceComputer Science (R0)