Abstract

This paper proposes a new device and methods for obtaining an acoustic image of the environment. The device can be used as an electronic aid for people who are visually impaired or blind. The paper presents current methods of human echolocation and current research on electronic aids. It also describes the technical basics and implementation of the audible high resolution ultrasonic sonar, followed by a first evaluation of the device. The paper concludes with a discussion and a comparison to classical methods of active human echolocation.

1 Introduction

Human echolocation is used by people who are visually impaired or blind to help build a mental spatial map of their environment. Echolocation is often performed by creating a clicking sound with the tongue. Objects in the environment reflect sounds that are discernible to the human ear. From these echoes the human brain can construct a structured image of the environment. With this method, trained users achieve remarkable perceptual performance: the position, size or density of objects can be determined. In the brain of people who are blind, the visual cortex supports this kind of construction.

Unlike bats, which perceive structures in the submillimeter range by ultrasonic echolocation, human perception is restricted by the large wavelength of audible acoustic waves. Ultrasonic waves are reflected back by small, finely structured or soft objects, whereas acoustic waves pass objects like fences, bushes or thin posts without any considerable reflection because of their stronger diffraction. Another problem is smooth surfaces whose normal does not point in the direction of the user. Whereas light is scattered back even by finely structured surfaces, even roughly structured surfaces act like a mirror for acoustic waves. As a result, sound waves travelling from the user to such objects are not reflected back to the user (stealth effect), and the user cannot detect these objects. Ultrasonic waves with high frequencies, and thus short wavelengths, are in contrast reflected back to the sound source by smaller structures as soon as the wavelength reaches the order of magnitude of the structure size.

Creating electronic aids that make ultrasonic waves audible and convey spatial information about the environment has proven very difficult. The cause lies in the complexity of hearing. Stereo microphones can only measure time differences of an ultrasonic signal, and only the azimuth angle can be derived from this time difference. Whether the object is in front of, behind, above or below the user is normally derived from complex direction-dependent filters which are formed, among other things, by the pinna (outer ear) of the user. Without such a mechanical filter this information cannot be derived. The digital clone of this filter, the head-related transfer function (HRTF), often fails in terms of functionality (front-back confusion) and complexity (long recording sessions).

Nevertheless, nonlinear acoustics offers a way to use ultrasonic waves in combination with the human ear. In the presence of high sound pressure levels, the air behaves in a nonlinear manner. With a special signal modulation, this enables the transformation of ultrasonic waves into acoustic waves in the air itself. During the transformation, the physical features of the ultrasonic signal are retained in the acoustic signal. This automatic conversion from ultrasonic to acoustic waves enables users to perceive ultrasonic signals with their own ears.

With this method a directed ultrasonic wave can be aimed at an object, which reflects the ultrasonic waves. On the way from the source via the object and back to the source, the ultrasonic waves are transformed into acoustic waves. To the listener it appears as if the object itself were the sound source.

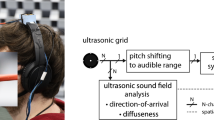

A device which creates exactly the effects described above has been developed by the authors and is described in this paper. With this device the user can hear objects and obstacles and determine their position. We tested the audible high resolution ultrasonic sonar (AHRUS) with four participants to gain first insights into the possibilities that the system offers.

The device introduces a completely new application of ultrasonic waves in electronic aids for people who are visually impaired or blind. The approach uses the ears of the user, who already know how to interpret acoustic stimuli (like a falling coin) without additional help. The interpretation of the generated signals, however, needs to be trained, just as the interpretation of normal acoustic signals was trained in childhood. The advantages of the device are nevertheless very promising, and it improves the perception of the environment as an extension to the white cane.

This paper begins with a discussion of related work. Next, the basics of spatial hearing are covered to give an insight into human hearing. A closely related current method is human echolocation, which is used by trained people to build an acoustic image of the environment. Different methods of human echolocation are explained in Sect. 4. The following section describes the AHRUS device itself. After a discussion of different promising signal shapes and methods of using the AHRUS device, a first evaluation of AHRUS is presented. Finally, the results are compared to classical methods of active human echolocation, followed by a conclusion.

2 Related Work

Research has produced several aids that use ultrasonic signals. The existing aids have in common that they use the ultrasonic signals to measure characteristics of the environment and have the echoes interpreted by a computer. This differs from the approach of using a parametric ultrasonic speaker and letting the ear and brain do the rest.

To name a few projects that use ultrasonic sensors to aid people who are visually impaired, an early project was “The People Sonar” described in [3]. It uses ultrasonic sensors to get information about obstacles in the environment. It determines whether there are living or non-living obstacles near the user and presents the information by vibrotactile feedback. Another project draws more heavily on the field of robotics. In [9] a robot is used as a guide dog. The user pushes the robot, which recognizes obstacles and guides the user around them.

There is also research related to the sonification aspect of AHRUS. AHRUS sonifies the environment physically, but it is also possible to do this in a virtual manner. The environment is recorded with 3D cameras. These images are processed, and relevant obstacles can be presented by playing a 3D sound to the user that mimics the object's position. This topic, among others, is researched in the “Sound of Vision” project [7].

The contribution of AHRUS in comparison to related work is a direct perception of the environment by the user's own ears and brain. There is no digital signal processing and presentation layer between the physical environment and the user.

3 Spatial Hearing

The human ear is very precise. It is possible to find a fallen object like keys just by hearing the sound as they land on the ground. Hearing in stereo is one key to locating a sound source. The left side of Fig. 1 illustrates a sound source that is moving on a horizontal plane so that the azimuth angle changes. The brain can resolve direction by interpreting the time delay between the sound arriving at each ear [10]. If the brain used only this method, it would be impossible to differentiate sounds from the front and sounds from the back. As a plain example, the right side of Fig. 1 shows a sound source that is moving exactly in the middle between the ears. There is no delay between the sounds arriving at each ear. Such directions are distinguished with the help of the shape of the ears and the head, which form a directional signal filter that enables the brain to determine the direction of a sound. The brain has been trained to make such calculations since childhood. By combining both signal interpretations humans can distinguish between directions in azimuth by \(5^\circ \) and in elevation by \(13^\circ \) [5].

Because the shape of each human ear, in combination with the shape of the head, is unique, the directional filtering of sounds differs from person to person. Spatial hearing is therefore a learned, subconscious perception of these phenomena.
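The time-delay cue described above, and the front-back ambiguity it leaves open, can be illustrated with a minimal sketch. It assumes a simple two-point ear model (delay proportional to the sine of the azimuth), an ear spacing of 0.18 m and a speed of sound of 340 m/s; none of these values come from the paper's experiments, and the function name is hypothetical.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s, same rounded value used later in the paper
EAR_DISTANCE = 0.18     # m, assumed spacing between the two ears


def interaural_time_difference(azimuth_deg):
    """Arrival-time difference between the ears for a distant source.

    Simplified two-point model: 0 degrees is straight ahead,
    90 degrees is directly to one side, 180 degrees is behind.
    """
    return EAR_DISTANCE * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND


if __name__ == "__main__":
    # A source at 30 degrees (front) and at 150 degrees (back) produce
    # the same delay, so the delay alone cannot separate front from back.
    for azimuth in (0.0, 30.0, 90.0, 150.0, 180.0):
        delay_us = interaural_time_difference(azimuth) * 1e6
        print(f"azimuth {azimuth:5.1f} deg -> delay {delay_us:7.1f} microseconds")
```

In this model the delay is zero for every source in the median plane, which is why the direction-dependent filtering by the pinna and head is needed as a second cue.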

4 State of the Art in Human Echolocation

The term human echolocation describes a group of methods that enable people who are visually impaired to gather an acoustic image of their environment. The skills for such environmental perception are taught in orientation and mobility (O&M) training, alongside travelling skills using the white cane. The goal of human echolocation is to improve orientation and navigation skills, but also to obtain structural information about the environment, much as an image provides.

Passive Methods

Echolocation methods are divided into active and passive methods. Passive echolocation relies on incidental sounds made by the practitioner, e.g. sounds from footsteps or the white cane. The sound waves are reflected by objects in the environment such as walls. The auditory impression of such reflected sounds depends on specific features of the objects, like height or hardness, and allows conclusions about the environment. Such an acoustic image is fuzzy, but it provides enough information to find doors or cars. Despite its limitations this method is used often, even if only subconsciously [1].

Active Methods

By generating a distinctive acoustic signal a practitioner gets a much more detailed acoustic image of the environment. Such techniques are called active echolocation. The generated signals are adapted to the current situation. A kind of dialog arises between the practitioner and their environment, in which the practitioner systematically scans the environment. This is done by changing the practitioner's position relative to the object or by changing the loudness or waveform of the signal. A tongue click or a finger flick are commonly used signals in human echolocation. The impulse and the echoes from the environment are brief and therefore give only a short acoustic image. Because of these characteristics this technique is also called flash sonar [1, 4].

Daniel Kish, a pioneer of flash sonar, says that a suitable signal is as sharp as a bursting bubble of bubble gum, as well as discreet and only as loud as needed. Otherwise multiple echoes emerge and disturb the perception of the environment. Aids such as hand clickers are in certain circumstances too loud, e.g. in indoor environments. Other acoustic signals, like the noise of a long cane, are unfavourably oriented relative to the practitioner’s ears. A disadvantageous angle between sound source, object and ear causes a wrong acoustic image. A tongue click therefore seems to be the best method of producing a controlled signal to get an acoustic image. Compared to passive echolocation, flash sonar reveals the characteristics of surrounding structures and objects in much more detail if the technique is learned and applied the right way. An intentionally produced sound signal can be identified quite well even in noisy surroundings [1, 2].

Brain-scan research has shown that hearing offers the ability to analyze scenes. This scene analysis describes the ability to recognize and imagine different events in a dynamically changing space [6, 8].

Possibilities and Limits

With some practice, practitioners can discern object sizes, shapes of rooms, courses of buildings or holes in objects. Advanced practitioners can analyze complex scenes with different shapes and objects. They are also able to recognize complex environmental characteristics. Even small objects and fine details can be discerned. Some advanced practitioners can ski or ride a bicycle [1].

Flash sonar is subdivided into localization, shape recognition and texture recognition. Localization describes the task of recognizing different objects and their position relative to the practitioner. In shape recognition, the size and shape of an object are determined by finding its edges or corners. Texture recognition allows the classification of different surfaces, such as hard or soft, finely or roughly structured, smooth or porous.

The maximum resolution that a practitioner can reach without any aid is, for a freestanding object under calm conditions, an area of about \(0.2\,\mathrm{m}^2\) (\(8\,\mathrm{in}^2\)) at a distance of about 45 cm (18 in). The range depends on the ambient noise and on the object’s size and hardness. With a tongue click the range is about 10 m (33 ft). With hand claps and big objects like buildings the range can be extended up to 50 m (164 ft) or more [1, 2].

5 The AHRUS System

AHRUS is defined as an ultrasonic sonar whose echoes can be perceived directly with the human ear. The concept was developed to make the positive properties of ultrasonic waves, known from bats and dolphins, usable for orientation and navigation by people who are blind. Hearing aids that translate ultrasound into audible sound are deliberately omitted, since they generally cannot reproduce the precise directional perception of natural human hearing.

Working Principles

The AHRUS system is based on “parametric speakers”. This special form of ultrasonic speaker uses effects of nonlinear acoustics to achieve self-demodulation of an ultrasound beam, after reflection at an object, into a strongly directed audible sound which can be perceived directly with the human ear but has ultrasound-like properties. The principle of the so-called parametric ultrasonic loudspeaker has been known for many years and is usually used for highly directed audio spotlights, e.g. for the playback of sharply demarcated audio information in a museum.

A strongly directed ultrasonic beam with a very high sound pressure level is generated with an array of ultrasonic transducers. This beam is modulated in its amplitude by an audio signal, which initially remains inaudible. At a very high pressure level the air as “sound transmitting medium” can no longer be considered linear. Instead, characteristics and behavior occur that are described by the laws of nonlinear acoustics. One of these characteristics is the gradual transformation (demodulation) of the modulated ultrasonic beam. On its way through the air, the ultrasonic beam is converted into a sharply focused audible signal which is detected with the natural ear. The resulting generation zone can extend up to about 10 m (33 ft). Beyond this zone the demodulation stops because the ultrasound pressure level decreases due to attenuation in air and beam expansion [9].
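The amplitude modulation step can be sketched as follows. This is only an illustration of plain amplitude modulation of a 40 kHz carrier by an audio signal; the sample rate, the modulation depth and the function name are assumptions, and the paper does not state which modulation scheme or preprocessing the AHRUS firmware actually uses.

```python
import math


def am_modulate(audio, carrier_hz=40_000.0, sample_rate=400_000.0, depth=0.8):
    """Amplitude-modulate an ultrasonic carrier with an audio signal.

    audio: samples in the range [-1, 1], given at `sample_rate`.
    depth: assumed modulation depth (0 = no modulation, 1 = full).
    Returns the drive signal: carrier scaled by the envelope 1 + depth * audio.
    """
    modulated = []
    for n, sample in enumerate(audio):
        envelope = 1.0 + depth * sample
        carrier = math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        modulated.append(envelope * carrier)
    return modulated


# Example: a 1 kHz test tone, 10 ms long, carried on the 40 kHz beam.
SAMPLE_RATE = 400_000.0
tone = [math.sin(2.0 * math.pi * 1_000.0 * n / SAMPLE_RATE) for n in range(4000)]
signal = am_modulate(tone, sample_rate=SAMPLE_RATE)
```

The spectrum of `signal` contains only components around 40 kHz; the audible 1 kHz tone exists only in the envelope until the nonlinearity of the air demodulates it.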

Figure 2 illustrates the principle of the audible ultrasonic sonar. An object in front of the user is illuminated with the modulated ultrasound beam. Because of diffraction effects, the ultrasound component of the beam is preferentially reflected at the object and returns as an echo. On their return, these echoes are demodulated to audible sound and then processed by the natural sense of hearing. This results in an optimal spatial perception of the reflecting object.

Device Design

Figure 3 shows the prototype of the AHRUS system. The transducer is composed of 19 piezo ultrasound emitters, producing an ultrasound pressure level of up to 135 dB at a frequency of 40 kHz. The beam is tightly focused, with an aperture angle of about \(5^\circ \). The short wavelength of the ultrasonic waves of 8 mm (0.3 in) results in clearly audible echoes, even from finely structured or small obstacles like wire fences or twigs.

The beam is harmless for humans and animals. A guide dog, for example, is only able to hear frequencies up to 25 kHz and therefore not able to hear the sound of the AHRUS device at all. The intensity of the beam decreases rapidly with distance due to strong attenuation of ultrasound in the air. So the reach of the ultrasound is strongly limited and animals using ultrasound for navigation like bats are not disturbed.

Figure 4 illustrates a design scheme of the AHRUS system. In addition to the battery and power management, the device includes an amplifier for powering the transducers, a Bluetooth Low Energy (BLE) module for configuration via a smartphone and an inertial measurement unit (IMU). The user interface is implemented by an audio menu using a mini speaker and seven tactile buttons. The menu allows the configuration of the device parameters and the selection of the operating mode and the modulation signal form.

The IMU is used to support the user in spatial orientation and includes a 3D compass, gyroscope and accelerometer. Silent, haptic hints to the user are given by an integrated multistage vibration motor. The heart of the whole system is an ARM Cortex-M4 microcontroller, which provides sufficient computing power despite low energy consumption thanks to its integrated digital signal processor.

A configurable synthesizer for signal generation has been implemented on the signal processor. It allows the generation of click, noise or tone signals, either as a continuous signal or as pulses with selectable pulse frequency and width. A frequency modulation for generating signals in which the frequency increases or decreases, so-called “chirp signals”, is also included. Chirps are also used by bats and dolphins for echolocation.
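As an illustration of such a chirp signal, the following minimal sketch generates a linear chirp. The function name, the sample rate and the example frequencies are assumptions chosen for illustration; the actual synthesizer on the AHRUS signal processor is not documented here.

```python
import math


def linear_chirp(f_start_hz, f_end_hz, duration_s, sample_rate=48_000.0):
    """Return samples of a sine whose frequency changes linearly over time.

    f_start_hz < f_end_hz gives an up-chirp, the reverse a down-chirp.
    The phase is the integral of the instantaneous frequency
    f(t) = f_start + rate * t.
    """
    n_samples = int(round(duration_s * sample_rate))
    rate = (f_end_hz - f_start_hz) / duration_s  # sweep rate in Hz per second
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        phase = 2.0 * math.pi * (f_start_hz * t + 0.5 * rate * t * t)
        samples.append(math.sin(phase))
    return samples


# Example: a 10 ms up-chirp and the matching down-chirp.
up_chirp = linear_chirp(500.0, 4_000.0, 0.010)
down_chirp = linear_chirp(4_000.0, 500.0, 0.010)
```

Such a sample sequence would serve as the audio signal that modulates the ultrasonic carrier.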

Future plans include integrating a community platform that allows users to create their own modulation signals and share them with others, in order to promote exchange and improve the experience with the new technology.

6 Methods of Application

Based on the different signal shapes, some methods for practical use of the AHRUS system were considered. We distinguish the following disciplines: localization of objects, perception of outlines, obstacle detection, estimation of distances and the recognition of different structures and their roughness.

Localization

For people using AHRUS for the first time, a continuous sound similar to white noise was most suitable. Irradiated objects are easily audible and localizable. The auditory impression is as if the object itself were emitting the sound. The signal sounds like a gust of wind and is not intrusive, so other people in proximity are not disturbed. This mode can be used as a kind of “acoustic white cane”. The user who is blind scans the environment with the focused beam, and discernible edges or obstacles at distances of up to about 10 m (33 ft), depending on size and reflecting surface, become audible and localizable.

Because of the diffuse backscattering of the ultrasonic waves, structured ground like grassland or gravel at a shallow angle to the beam is also audible in most cases. This can be used, for example, to localize the edge of a sidewalk while walking along it. Finely structured objects like bushes or fences are usually audible as well. This is a significant difference from the classical flash sonar method, which (due to the directed reflection) depends on relatively large surfaces with an orthogonal alignment to the listener.

Distance Estimation

Continuous sounds are not usable for estimating the distance of objects or obstacles from the time a signal needs to travel from the AHRUS device to a reflector and back to the ear of the user. Short staccato tone or noise bursts with a clear beginning and end are best suited instead. The human sense of hearing perceives the delay of an echo very precisely. Very short distances are perceived as an increasing reverb, while larger distances sound like a more or less time-shifted impulse [10]. Experienced users (e.g. one of the authors of this paper) are able to estimate object distances up to 10 m (33 ft) with an accuracy of about 0.5–1.5 m (1.6–5 ft).

A variation of this method for less experienced persons is achieved by adjusting the pulse frequency of the AHRUS device to a certain reference distance. For this purpose a duty cycle of 50 % between sound and silence is chosen. In the next step a reference object at a certain distance (e.g. 10 m or 33 ft) is targeted. The pulse frequency is then adjusted so that the echo of an emitted burst falls exactly into the silent phase of the cycle, and the user hears a continuous tone. For a reference distance of 10 m (33 ft) and a speed of sound of 340 m/s (1100 ft/s), the round trip of 20 m takes about 60 ms, so with a burst duration of 60 ms the echo fills the gap between two bursts.

A change in distance leads to noticeable gaps between signal and echo again. For an inexperienced user it is much easier to relate the gap duration to a difference in distance than to apply the first method, which is based on estimating the absolute echo delay.
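The timing behind this reference-distance method can be worked through in a short sketch. The function names are hypothetical, and the gap formula assumes that the user hears both the emitted burst and its echo and that the echo delay stays below two burst durations.

```python
SPEED_OF_SOUND = 340.0  # m/s, value used in the text


def round_trip_delay(distance_m):
    """Time for a burst to travel to the reflector and back, in seconds."""
    return 2.0 * distance_m / SPEED_OF_SOUND


def pulse_frequency(reference_distance_m):
    """Pulse frequency (Hz) at 50 % duty cycle whose burst duration
    equals the round-trip delay to the reference distance."""
    burst = round_trip_delay(reference_distance_m)
    return 1.0 / (2.0 * burst)  # one period = burst + equally long silence


def gap_duration(distance_m, reference_distance_m):
    """Audible silence per cycle when the object is not at the reference
    distance: the echo no longer fills the silent phase exactly."""
    burst = round_trip_delay(reference_distance_m)
    return abs(burst - round_trip_delay(distance_m))


if __name__ == "__main__":
    print(f"burst duration for 10 m: {round_trip_delay(10.0) * 1e3:.1f} ms")
    print(f"pulse frequency: {pulse_frequency(10.0):.2f} Hz")
    print(f"gap for an object at 8.3 m: {gap_duration(8.3, 10.0) * 1e3:.1f} ms")
```

In this model every metre of deviation from the reference distance adds roughly 6 ms of silence per cycle, which is the cue the inexperienced user listens for.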

Structures and Their Roughness

The methods presented so far dealt with the localization of objects and obstacles and the estimation of distances. The next method is used to distinguish different surface roughnesses and structures. Objects with a surface roughness below the ultrasonic wavelength (8 mm or 315 mil in the case of AHRUS) can be described as “almost smooth”, e.g. walls or smooth road surfaces. Echoes from such a reflector are very directed and clear in the sense that the echo has the same signal shape as the original. Rough objects, on the other hand, deliver diffuse (non-directed) echoes. Structured objects even deliver multiple echoes from different parts of the structure at various distances from the user.

A good approach to making this effect detectable is the use of “chirps”. A chirp is a signal in which the frequency increases (up-chirp) or decreases (down-chirp) with time. Structured objects change a clear chirp echo into a blurred, noise-like sound. Because of the frequency modulation of the chirp, the frequency of an individual echo at any moment corresponds to its flight time. In the end the listener hears a mixture of echoes with slightly different distances and delays, depending on the depth distribution of the structure parts. A mixture of many different frequencies is perceived as noise. The strength and spectral distribution of the noisy sound correspond to the surface structure of the reflector, so the user can differentiate surface textures like a smooth wall, grass or bushes.

Handling Considerations

In addition to the signal shape there are different ways to carry the device. AHRUS can be mounted on a hood or held in the hand. The advantage of head mounting is that the hands stay free for holding a white cane. In addition, the audio beam has a fixed position relative to the ears and follows the line of vision, so the user is able to scan the environment by turning the head. This method is described as very intuitive and similar to the classical flash sonar technique, where the clicks are produced with the mouth.

If the device is carried on a necklace at the height of the solar plexus, the user has to turn their torso to influence the direction of the audio-beam. But compared to head-mounting this way is less conspicuous and may be preferred by some users.

If AHRUS is held in the hand, fast periodic scanning movements are possible by turning the device from the wrist. In this way an angle of about \(45^\circ \) can be scanned continuously. The method is similar to the way a blind person uses a white cane while walking. The white cane is moved periodically from the left to the right edge of the footpath and back, so that obstacles across the complete width of the walkway are detected. With AHRUS this scanning is done with the audio beam, with the advantage that the beam also detects obstacles above ground level (e.g. letter boxes or branches sticking out into the way). The scanning range is also up to 10 m (33 ft) and more, so the device can be used for orientation purposes by detecting known objects in the near environment.

A last method is holding AHRUS on the outstretched arm to find the position and distance of objects or obstacles. The method is based on two steps. In the first step the bearing of an object is taken by holding the device in the center and turning the whole body until the echo magnitude reaches its peak. Now the body of the user is aligned with the object. In the second step the arm is stretched out while the wrist and AHRUS are slowly turned inward until the beam hits the object again and the echo magnitude reaches its peak. The angle of the rotation corresponds to the distance of the object. This method is generally called triangulation and works well for the localization of near objects.
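How the rotation angle relates to the distance can be sketched with simple geometry. The sketch assumes that the arm is stretched out sideways, so that the device sits a fixed baseline away from the body axis, and that the inward rotation is measured from the straight-ahead direction; the baseline of 0.7 m and the function name are illustrative assumptions, not values from the paper.

```python
import math


def distance_from_rotation(baseline_m, rotation_deg):
    """Estimate the object distance by triangulation.

    baseline_m:   assumed lateral offset of the device from the body axis
                  (roughly the arm length).
    rotation_deg: inward rotation of the device, measured from straight
                  ahead, at which the echo is loudest.
    The object lies straight ahead of the body, so
    tan(rotation) = baseline / distance.
    """
    if not 0.0 < rotation_deg < 90.0:
        raise ValueError("rotation must be between 0 and 90 degrees")
    return baseline_m / math.tan(math.radians(rotation_deg))


if __name__ == "__main__":
    for angle in (45.0, 20.0, 10.0, 5.0):
        print(f"rotation {angle:4.1f} deg -> about "
              f"{distance_from_rotation(0.7, angle):.2f} m")
```

Under these assumptions the angle changes only slightly for far objects, which fits the observation that the method works best for near objects.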

7 Evaluation of AHRUS

To gain a first insight into the acceptance and usefulness of the AHRUS system, we evaluated it with four subjects, all between 25 and 27 years old. Two subjects are blind (Subject 1, Subject 2), one from birth and the other for about ten years. The other two subjects were blindfolded (Subject 3, Subject 4). The subjects had to use the AHRUS system in five situations, which are explained and evaluated below.

Directional Perception

An obstacle was placed at a distance of five meters from the subject, and the subjects had to point to it. The results of this experiment are outlined in Fig. 5. Most of the subjects, especially the subjects who are blind, found every obstacle. Only one subject made a mistake, identifying a bush as the obstacle although it was not the one to be found.

Distance Perception Threshold

We tested two different obstacles that had to be perceived, a car and a pillar. The subject was placed 15 m (49 ft) from the car and 10 m (33 ft) from the pillar. The subject was then led toward the obstacle and gave a signal on hearing it, and the remaining distance to the obstacle was measured. The results are nearly the same. The car is a big obstacle that the subjects heard right away. The pillar gave more information about the accuracy of AHRUS. Both subjects who are blind heard it at a greater distance than the blindfolded subjects. Figure 6 illustrates the results of this experiment.

Width Estimation

To test the accuracy of width estimation and the perception of object borders, the subjects were positioned three meters from an obstacle. Using the AHRUS system, they had to show the width of the obstacle with their hands. We measured the difference from the obstacle’s actual width. Figure 7 illustrates the results for each subject. It shows that a user can get a rough impression of the size of an object.

Perception of Borders between Surfaces

To test the possibility of hearing borders between two surfaces, in this case between grass and crushed stone, the subjects had to point to the border between both surfaces. Figure 5 illustrates that all subjects recognized the border by using the AHRUS system.

Distance Perception

We also tested the perception of distance. We positioned an obstacle at a distance of 1 m, 2 m and 5 m (3 ft, 7 ft and 16 ft) from the subject. The subject had to differentiate between near, middle and far away. Figure 5 illustrates promising results. Three of the four subjects did this very well. One subject had problems with this technique but told us that they would expect better results with more than five minutes of training for distance perception.

8 Comparison to Flash Sonar

The classical flash sonar with tongue click and AHRUS differ in several essential points. The reason is the different wavelength of audible sound and ultrasound. Flash sonar uses a large wavelength \(\lambda \) between 80–800 mm or 3–31 in (0.4–4 kHz). AHRUS, on the other hand, works with a wavelength \(\lambda \) of only 8 mm or 0.30 in (40 kHz), which is about 10–100 times smaller.
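These wavelengths follow from \(\lambda = c / f\). The short sketch below reproduces them, assuming the speed of sound of 340 m/s used elsewhere in this paper; with that value the results come out slightly above the rounded figures quoted in the text. The function name is hypothetical.

```python
SPEED_OF_SOUND = 340.0  # m/s, rounded value used in this paper


def wavelength_mm(frequency_hz):
    """Wavelength in millimetres for a given frequency: lambda = c / f."""
    return SPEED_OF_SOUND / frequency_hz * 1000.0


if __name__ == "__main__":
    for label, freq in (("tongue click, low end", 400.0),
                        ("tongue click, high end", 4_000.0),
                        ("AHRUS carrier", 40_000.0)):
        print(f"{label:24s} {freq:8.0f} Hz -> {wavelength_mm(freq):6.1f} mm")
    # Ratio between the audible and the ultrasonic wavelengths
    print(wavelength_mm(4_000.0) / wavelength_mm(40_000.0),
          wavelength_mm(400.0) / wavelength_mm(40_000.0))
```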

Directivity

The small wavelength allows sharp focusing of the ultrasound beam with an aperture angle of about \(6^\circ \). In the case of a tongue click, the aperture angle is in the range of about 45–\(90^\circ \), depending on the frequency and the mouth opening. Figure 8 shows the difference based on a point source synthesis of the two sound sources in MATLAB.
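The idea of a point source synthesis can be sketched in a few lines. The following is not the authors' MATLAB script but a simplified far-field model in Python: the radiating aperture is approximated by a row of in-phase point sources, and the beam width is read off where the summed amplitude falls to \(1/\sqrt{2}\) of its on-axis value. The aperture widths (80 mm for the transducer array, 30 mm for the mouth opening), the click frequency of 3 kHz and all function names are assumptions for illustration.

```python
import cmath
import math

SPEED_OF_SOUND = 340.0  # m/s


def array_response(angle_deg, aperture_m, freq_hz, n_sources=41):
    """Normalised far-field amplitude of in-phase point sources that are
    evenly spread over a line of width `aperture_m`."""
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND  # wavenumber
    s = math.sin(math.radians(angle_deg))
    total = 0j
    for i in range(n_sources):
        x = (i / (n_sources - 1) - 0.5) * aperture_m  # source position
        total += cmath.exp(1j * k * x * s)            # path-length phase
    return abs(total) / n_sources


def half_power_beamwidth_deg(aperture_m, freq_hz, step_deg=0.05):
    """Full width of the main lobe at the -3 dB points; 180 degrees if the
    response never falls below the threshold (near-omnidirectional)."""
    threshold = 1.0 / math.sqrt(2.0)
    angle = 0.0
    while angle <= 90.0:
        if array_response(angle, aperture_m, freq_hz) < threshold:
            return 2.0 * angle
        angle += step_deg
    return 180.0


if __name__ == "__main__":
    print("ultrasonic array:", half_power_beamwidth_deg(0.080, 40_000.0), "deg")
    print("tongue click:    ", half_power_beamwidth_deg(0.030, 3_000.0), "deg")
```

This line-source model ignores the head, the shape of the mouth and the two-dimensional layout of the emitters, so it only illustrates the trend: an aperture many wavelengths wide gives a narrow beam, while an aperture smaller than the wavelength radiates almost uniformly.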

A sharply focused sound source like AHRUS has many advantages. It allows the user to scan the environment selectively, since an echo comes only from a small target area. In contrast, an unfocused tongue click causes multiple simultaneous, undirected echoes from many different reflectors. The simultaneous echoes overwhelm the user, and less powerful echoes from smaller objects can no longer be heard.

Loudness

For a listener to hear the echoes reflected by an object, these have to be sufficiently louder than the ambient noise. This is a problem especially in traffic situations or other noisy places. The echo volume depends on the intensity of the sound wave, on the sonar cross section and, in the case of finely structured or small objects, also on the wavelength.

With a less focused sound source the sound intensity decreases quickly with increasing distance. A tongue click weakens rapidly with distance because the area over which the sound energy is distributed grows quickly, whereas the sharply focused beam of AHRUS keeps its intensity over a long distance. This is an important prerequisite for audible echoes from faraway or small objects [10].

The sonar cross section describes the ability of an object to reflect a sound wave in the direction of its source. On the one hand, it depends on the size and surface characteristics of an object. On the other hand, it depends on the relation between structure size and wavelength.

While the properties of a reflecting object are given, the wavelength can be chosen. If the structure size is of the order of the wavelength or even smaller, diffraction effects can be observed. Diffraction directs the sound wave around the object, and the echo volume decreases rapidly. In the case of audible sound, this limit is already reached at structure sizes of about 0.1 m (4 in), so small or finely structured objects such as fences or bushes hardly produce any echoes. With the short ultrasound waves, on the other hand, much smaller structures can be perceived by the user.

The Stealth Problem

The law of reflection states that the angle of incidence and the angle of reflection of a wave striking a reflector are the same. This effect is known from billiards: when a ball hits a cushion at an angle, it bounces off at exactly the same angle. The same thing happens when a sound wave bounces off a smooth surface. When the surface is perpendicular to the direction of the user, it sends back a loud echo. When the reflecting surface is at a flat angle to the user, on the other hand, the sound energy is reflected away and only weak echoes or none at all can be heard. Similar effects are used in technology to hide ships or airplanes from radar waves (stealth technology).

This effect is one of the biggest problems for human echolocation, because smooth surfaces at an unfavorable angle cannot be perceived in some cases. For flash sonar users the world sometimes appears like a kind of hall of mirrors. For example, a smooth wall running along the walking direction is nearly imperceptible.

A solution to the “stealth problem” is the use of so-called diffuse reflection. It occurs when the roughness or structure of a surface is greater than the sound wavelength. In this case the wave is scattered diffusely in every direction. In relation to the small wavelength of visible light, almost all surfaces, with the exception of mirrors, are very rough. A sighted person is therefore unaware of this problem of the acoustic world. Only the short wavelengths of ultrasound can at least partially eliminate it. Due to the diffuse reflection with the AHRUS system, structured surfaces at an unfavorable angle to the listener are audible from a roughness of about 4–8 mm (0.15–0.30 in) upward, for example rough road surfaces or a meadow [10].

Comparison Cases

Table 1 compares classical active human echolocation (e.g. flash sonar with tongue clicks) with the AHRUS system using some practical examples. In summary, the AHRUS system has significant advantages. The sharply focused beam is very selective and delivers loud echoes even from smaller objects. The short wavelength reduces the stealth problem and allows surface structures and floor coverings to be distinguished. Special methods such as triangulation support the estimation of object distances. As a result, AHRUS performs better in the four disciplines considered: localization, shape recognition, overlapping objects and distance recognition.

The main disadvantage is that the user has to carry an electronic device. The developers therefore have the task of making the device as small, reliable and user-friendly as possible to increase user acceptance.

9 Conclusion

Active echolocation methods support users in orientation, localization and the perception of shapes. Techniques for these tasks, such as different tongue clicks, are being developed systematically. The long wavelength of audible acoustic signals, however, allows only a very low resolution of the acoustic image gathered by tongue clicks. A major problem is that the wavelength is often longer than the object structure of interest. Therefore, only surfaces oriented perpendicular to the user reflect echoes back to the user. Because the user hears only these echoes, many objects or parts of them cannot be perceived.

The proposed audible high resolution ultrasonic sonar (AHRUS) overcomes the main disadvantages of classical active echolocation techniques. By using self-demodulating ultrasonic waves, it enables the perception of much smaller object structures. Even slight surface roughness, e.g. of road surfaces, reflects ultrasonic waves diffusely. This lets the user perceive a surface even when the ultrasonic beam strikes it at a shallow angle. While a tongue click creates an undirected signal, the ultrasonic beam of AHRUS is focused, so the signal is strongly directed at the point the user aims at. This allows the user to scan the environment precisely.

The hardware is very small, so the user can wear it close to the body or hold it in the hand. Special signal forms, e.g. chirp signals, enable new ways of perceiving the environment, for example the perception of surfaces and depth structures. In contrast to electronic aids that use headphones with special signal processing as the audio interface, AHRUS relies on the user's own ears, which are individual and efficient. Furthermore, the ears stay free to hear the normal sounds of the environment.

With soft but distinctive signals, e.g. noisy clicks, the signals are easy to hear but not disturbing during travel. Because of the advantages of ultrasonic waves and the configurable signals, AHRUS is an efficient extension to classical flash sonar. Nonetheless, the full potential of AHRUS will only become apparent once more people who are blind use the technology and discover its pros and cons.

References

1. Kish, D.: Flash sonar program: learning a new way to see. World Access for the Blind, Copyright (2013)

2. Kish, D.: Bilder im Kopf: Klick-Echoortung für blinde Menschen, 1 edn. edition bentheim, Würzburg (2015)

3. Ram, S., Sharf, J.: The people sensor: a mobility aid for the visually impaired. In: Second International Symposium on Wearable Computers, Digest of Papers, pp. 166–167. IEEE (1998)

4. Rojas, J.A.M., Hermosilla, J.A., Montero, R.S., Esp, P.L.L.: Physical analysis of several organic signals for human echolocation: oral vacuum pulses. Acta Acustica united with Acustica 95(2), 325–330 (2009)

5. Romigh, G.D., Brungart, D.S., Simpson, B.D.: Free-field localization performance with a head-tracked virtual auditory display. IEEE J. Selected Topics Sig. Process. 9(5), 943–954 (2015)

6. Sinne: Klickblitze im Dunkeln. http://www.spektrum.de/news/klickblitze-im-dunkeln/1130592. Accessed 29 Jan 2018

7. Sound of Vision. https://soundofvision.net/. Accessed 29 Jan 2018

8. Thaler, L., Wilson, R.C., Gee, B.K.: Correlation between vividness of visual imagery and echolocation ability in sighted, echo-naive people. Exp. Brain Res. 232(6), 1915–1925 (2014)

9. Ulrich, I., Borenstein, J.: The guidecane-applying mobile robot technologies to assist the visually impaired. IEEE Trans. Syst. Man Cybern. Part A: Syst. Hum. 31(2), 131–136 (2001)

10. Weinzierl, S.: Handbuch der Audiotechnik, 2008th edn. Springer, Berlin (2008). https://doi.org/10.1007/978-3-540-34301-1

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

von Zabiensky, F., Kreutzer, M., Bienhaus, D. (2018). Ultrasonic Waves to Support Human Echolocation. In: Antona, M., Stephanidis, C. (eds) Universal Access in Human-Computer Interaction. Methods, Technologies, and Users. UAHCI 2018. Lecture Notes in Computer Science(), vol 10907. Springer, Cham. https://doi.org/10.1007/978-3-319-92049-8_31

DOI: https://doi.org/10.1007/978-3-319-92049-8_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92048-1

Online ISBN: 978-3-319-92049-8

eBook Packages: Computer Science, Computer Science (R0)